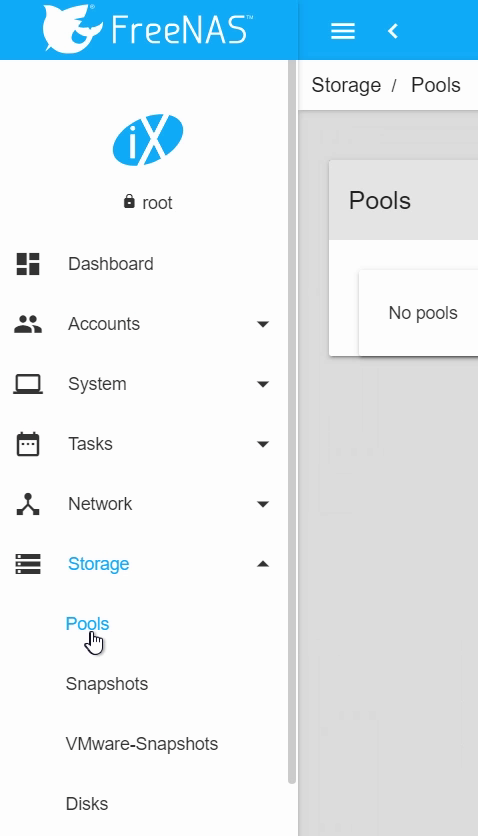

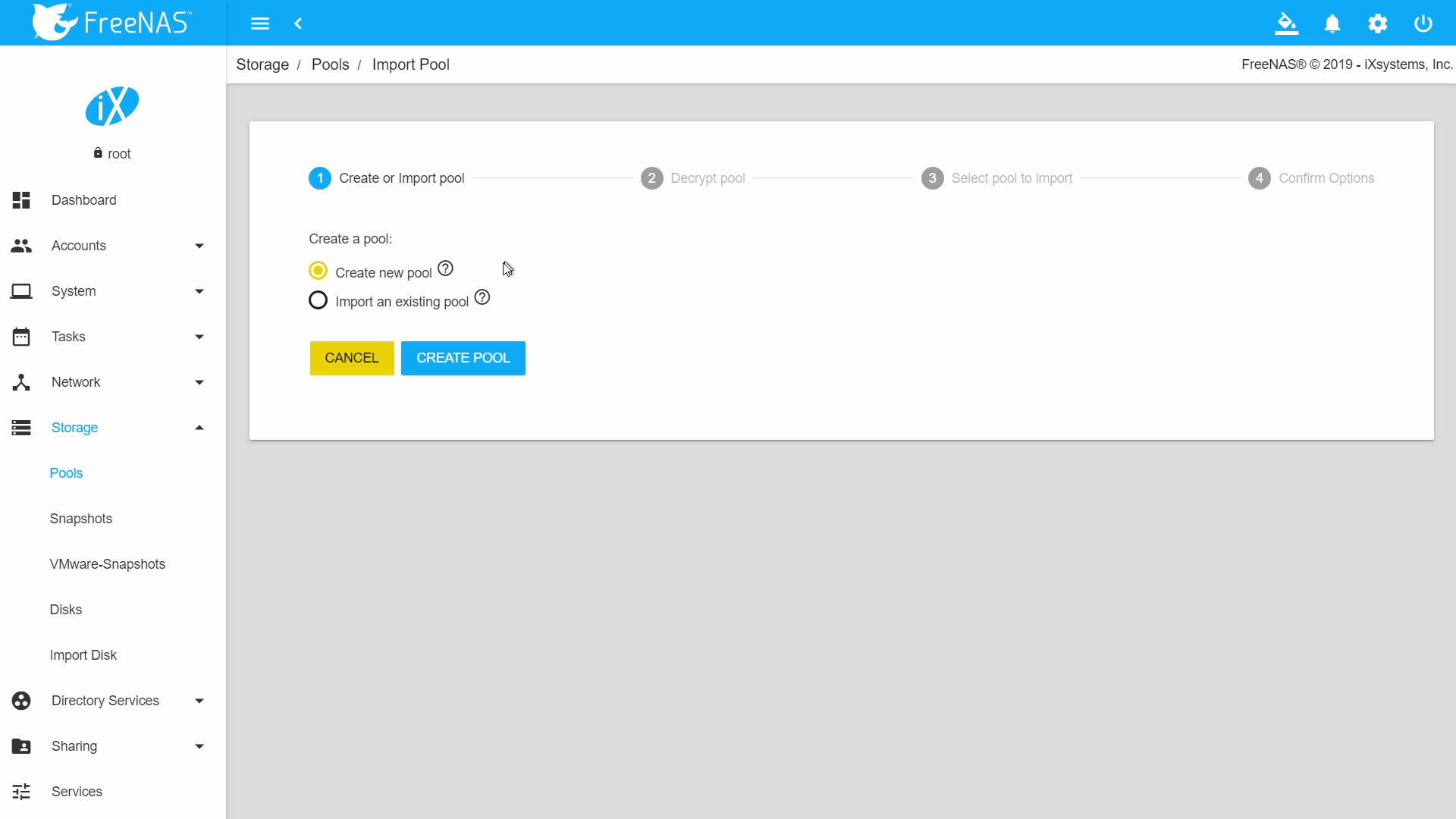

To begin, we are going to create a pool so storage disks can be allocated and shared. Head over to Storage, then Pools. This window lists all pools and datasets currently on your FreeNAS machine, and it will be empty until you create a new pool or import a previously created one. Click Add to create a new pool (or import an existing one), then give it a name. NOTE: Some naming restrictions apply.

Encryption can be used to protect sensitive data on a disk that has been removed from your system. Encryption must be managed VERY carefully: if you forget the passphrase or lose the encryption keys, you can lose access to all of your data.

FreeNAS uses the OpenZFS (ZFS) file system, which handles both disk and volume management. ZFS offers stripe and mirror layouts, plus its own distributed-parity scheme called RAIDZ, which works much like RAID5 on a hardware RAID controller (with double- and triple-parity variants). The file system is extremely flexible and secure, supporting a wide range of drive combinations as well as checksums, snapshots, and replication. For a deeper dive on ZFS technology, read the ZFS Primer section of the FreeNAS documentation.

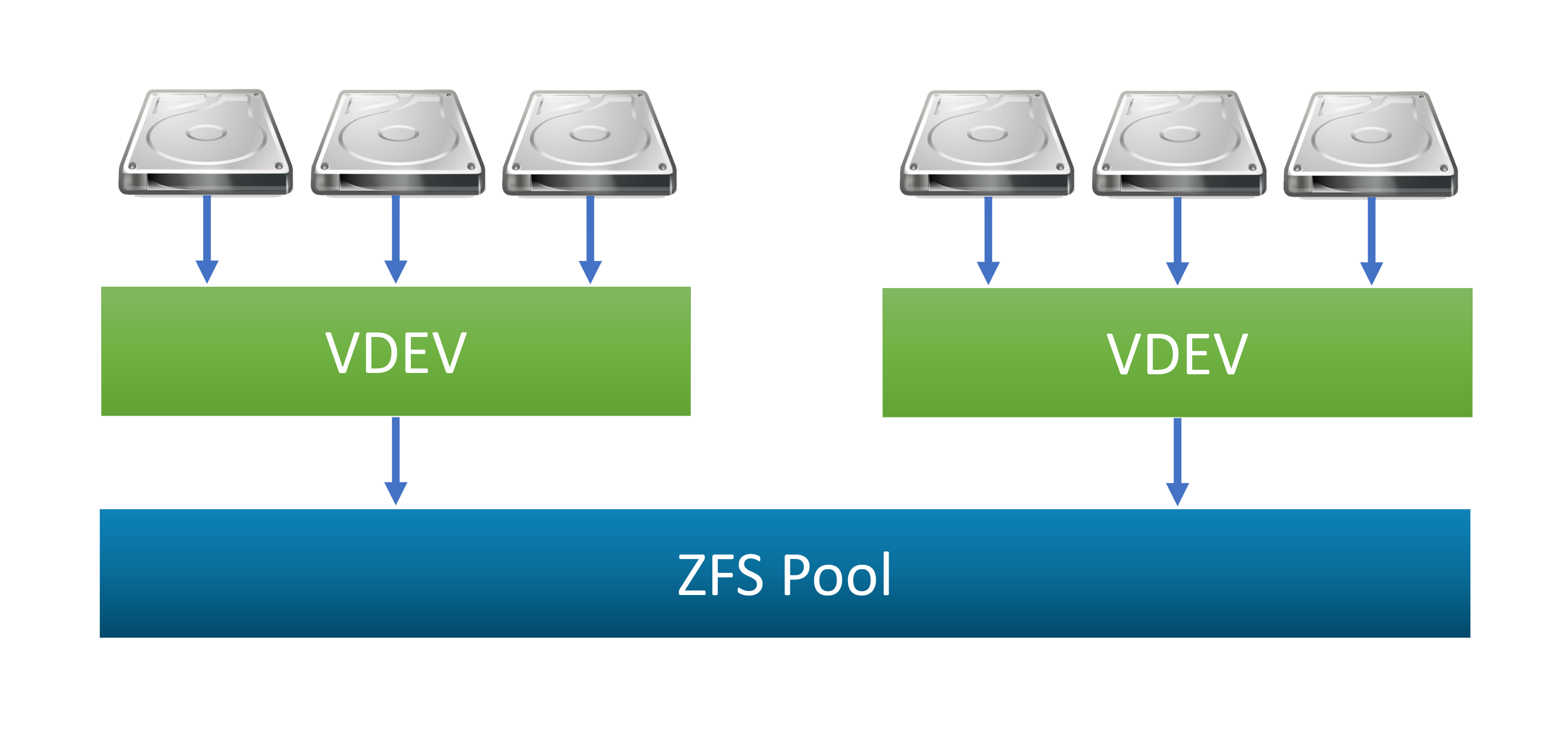

In ZFS, drives are logically grouped together into one or more vdevs. Each vdev can combine physical drives in a number of different configurations. If you have multiple vdevs, the pool data is striped across all the vdevs.

NOTE: If an entire vdev in a pool fails, all data on that pool will be lost. Make sure you have both redundant drives and hot spares ready to protect against data loss. We'll discuss the different RAIDZ options and vdev layouts below.
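The failure rule in the note above can be modeled in a few lines of Python. This is a minimal sketch, assuming each vdev is described only by its disk count, the number of disk failures it can tolerate, and how many of its disks have failed so far. The `Vdev` class and `pool_is_alive` function are hypothetical illustrations, not part of FreeNAS or ZFS.

```python
from dataclasses import dataclass


@dataclass
class Vdev:
    """Simplified vdev model (hypothetical, for illustration only)."""
    disks: int          # number of physical drives in the vdev
    tolerance: int      # failures it survives: stripe 0, RAIDZ1 1, RAIDZ2 2, RAIDZ3 3, n-way mirror n-1
    failed: int = 0     # drives that have failed so far

    def is_alive(self) -> bool:
        # A vdev keeps working as long as failures do not exceed its redundancy.
        return self.failed <= self.tolerance


def pool_is_alive(vdevs: list[Vdev]) -> bool:
    # Pool data is striped across every vdev, so losing any one vdev loses the pool.
    return all(v.is_alive() for v in vdevs)


# Hypothetical pool: two RAIDZ2 vdevs of six disks each.
pool = [Vdev(disks=6, tolerance=2), Vdev(disks=6, tolerance=2)]
pool[0].failed = 2
print(pool_is_alive(pool))  # vdev 0 is degraded but still working
pool[0].failed = 3
print(pool_is_alive(pool))  # vdev 0 has failed, taking the whole pool with it
```

Note that the healthy second vdev does not help once the first one is gone: redundancy lives inside each vdev, not across them.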

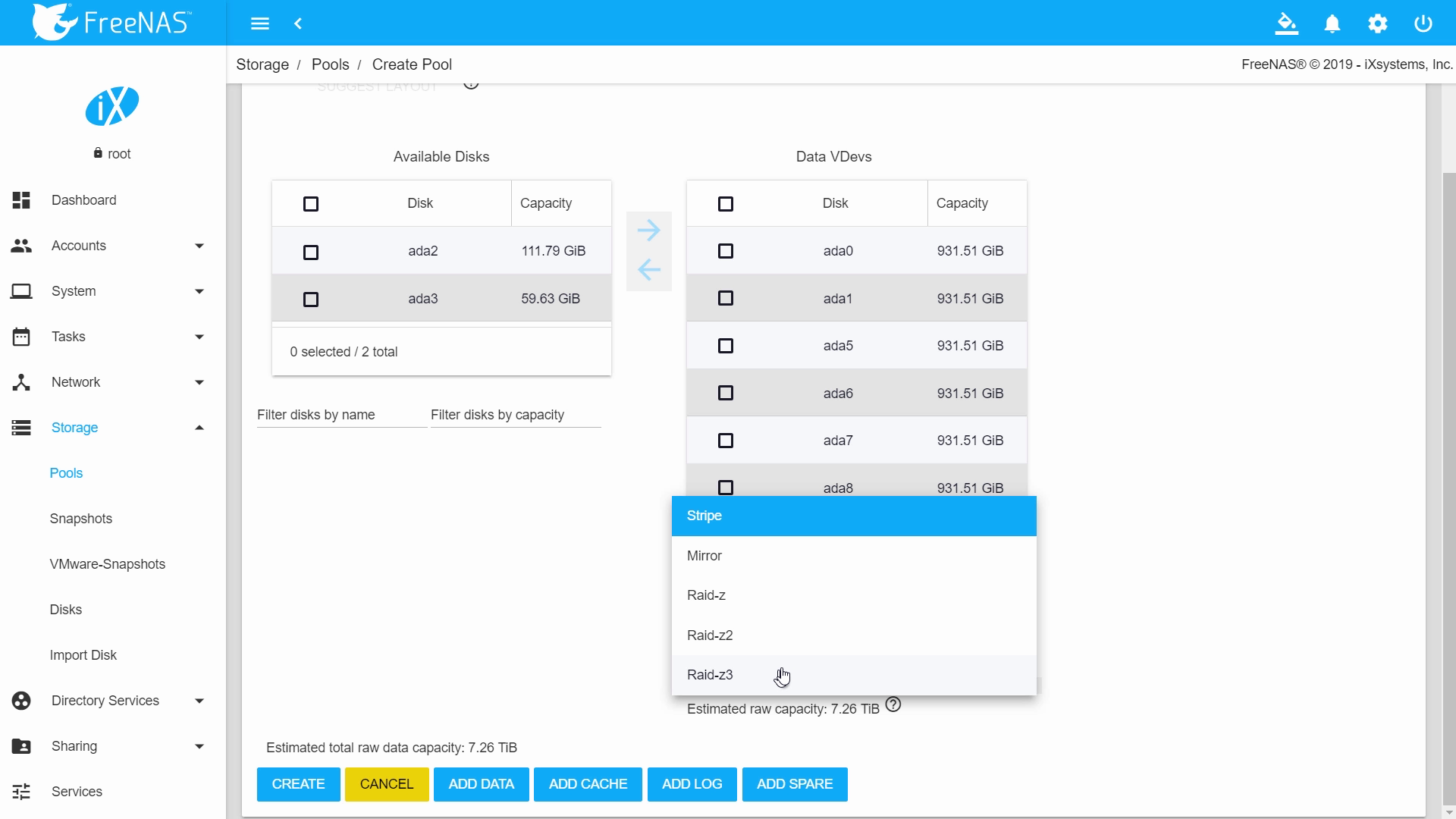

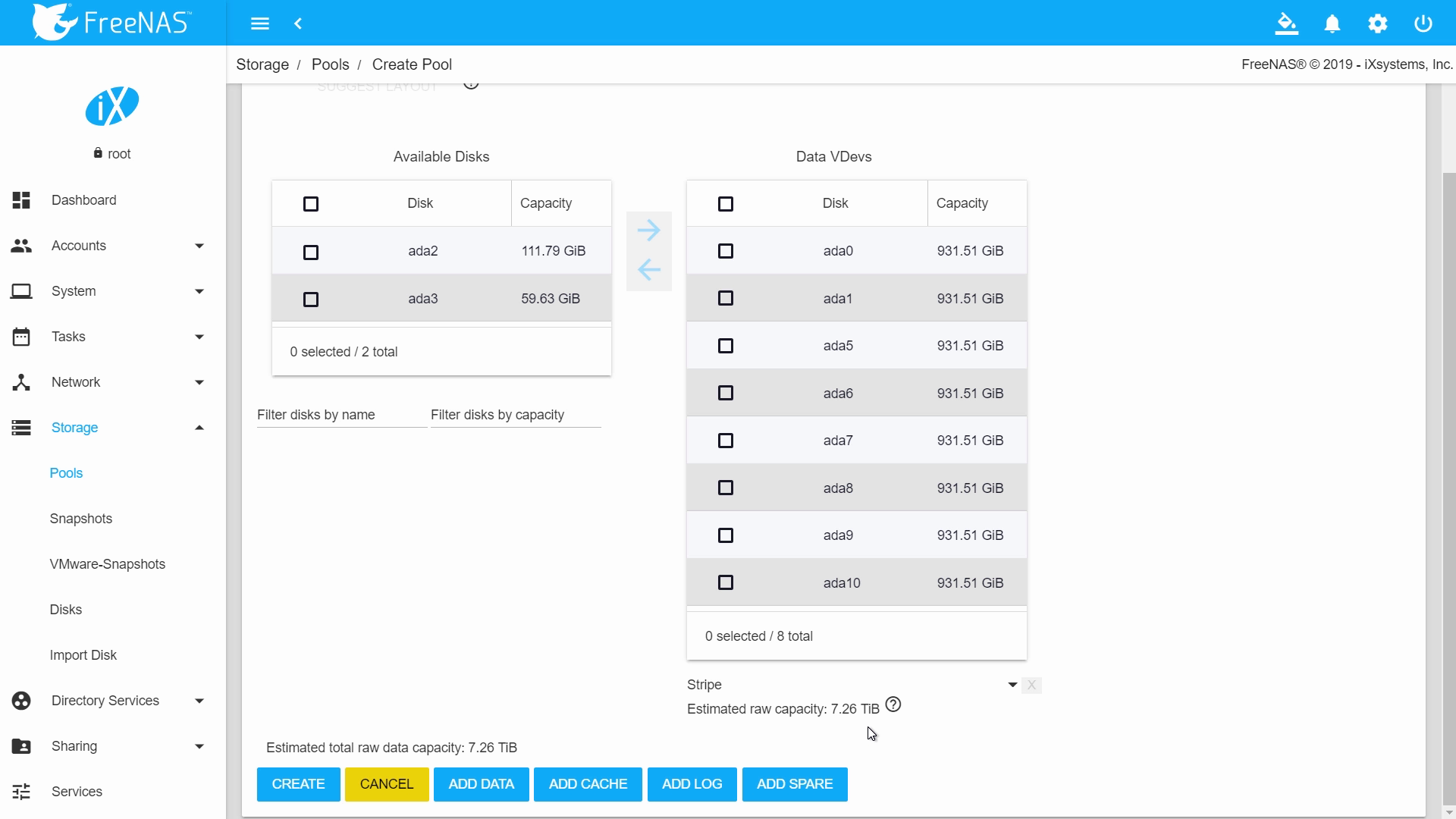

SUGGEST LAYOUT attempts to balance usable capacity and redundancy by automatically choosing an ideal vdev layout for the number of available disks.

The following vdev layout options are available when creating a pool:

- Stripe data is shared on two drives, similar to RAID0)

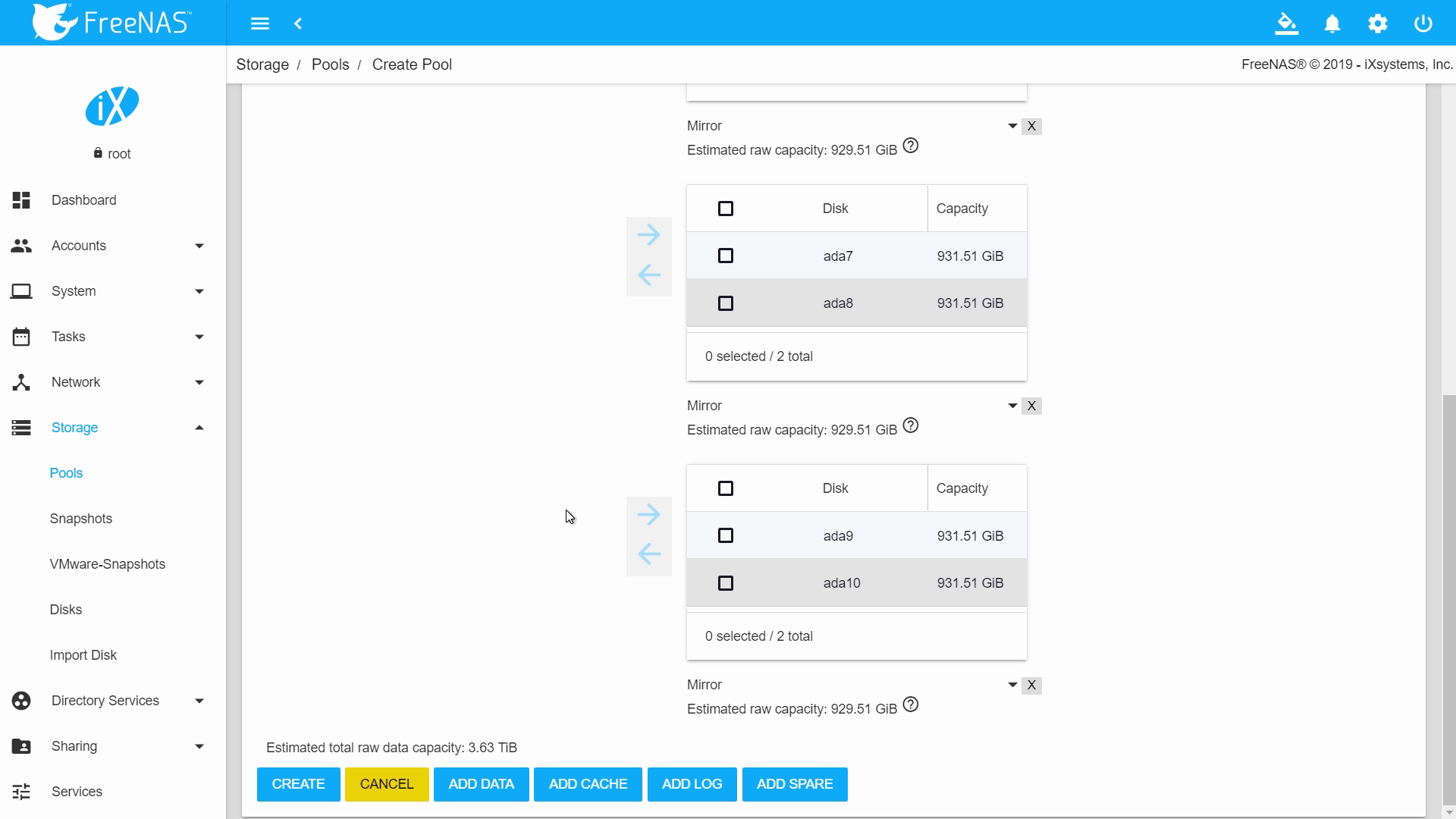

- Mirror copies data on two drives, similar to RAID1 but not limited to 2 disks)

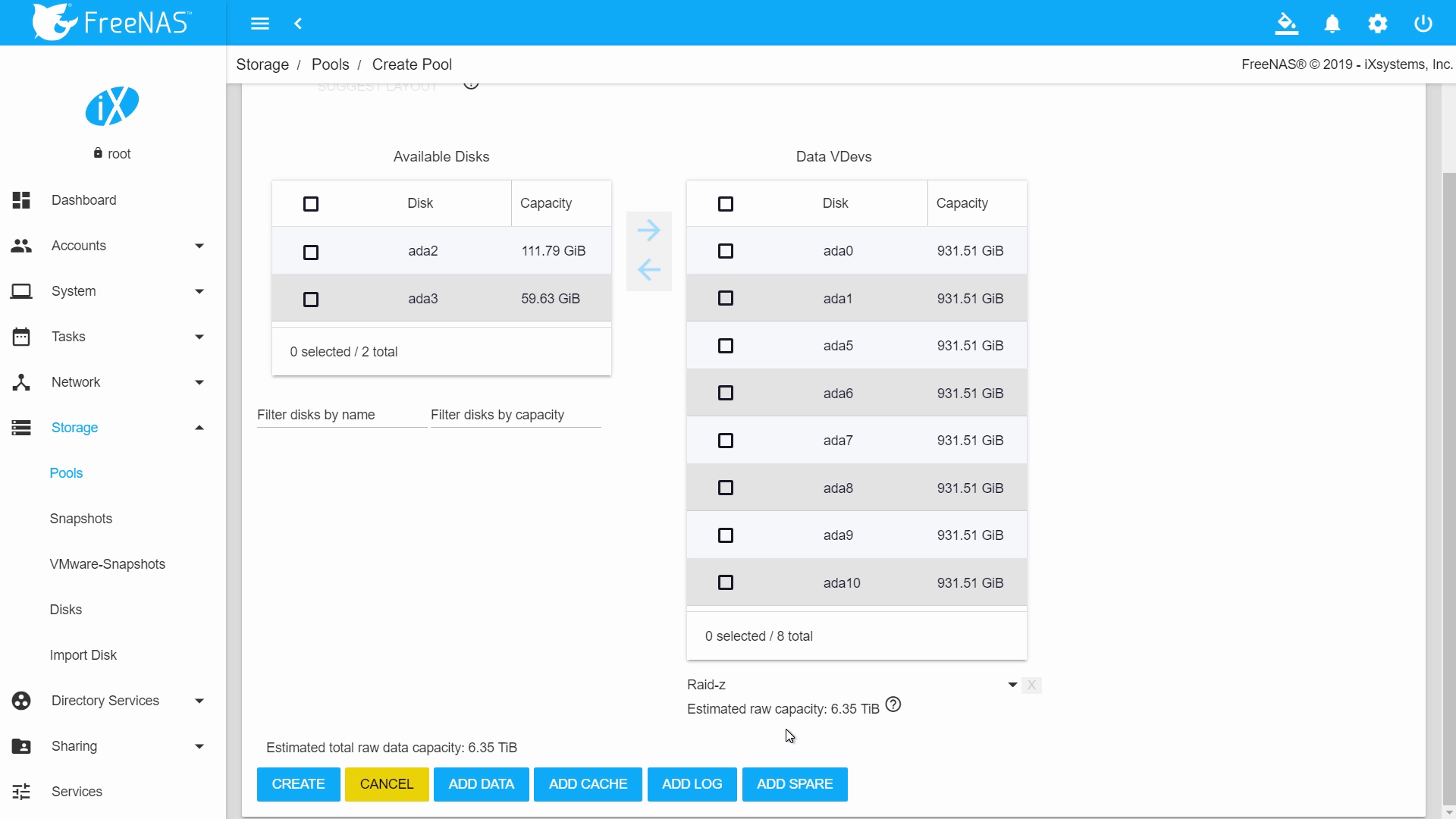

- RAIDZ1 single parity similar to RAID5

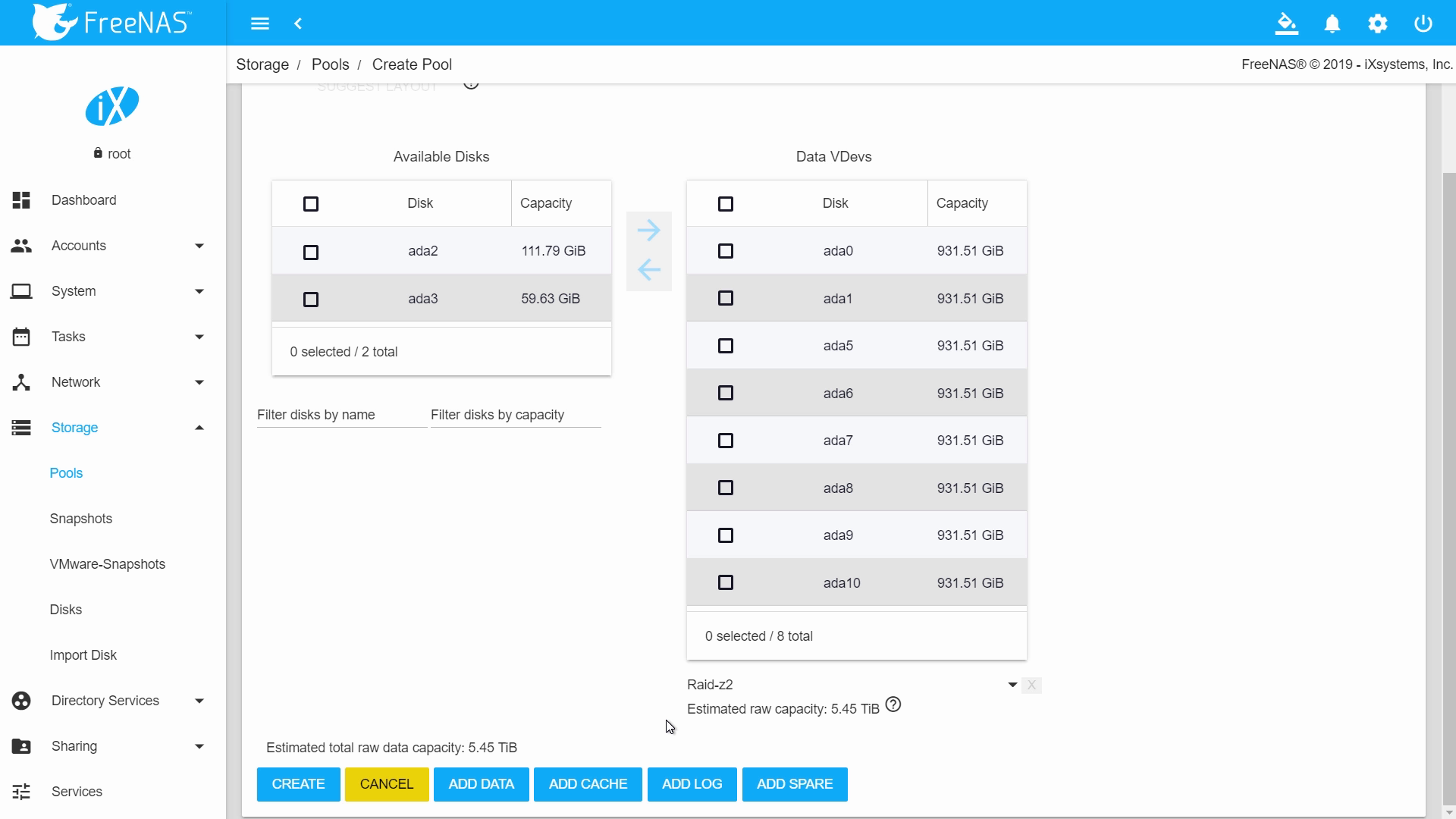

- RAIDZ2 double parity similar to RAID6

- RAIDZ3 which uses triple parity and has no RAID equivalent
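As a rough comparison of these layouts, the sketch below computes nominal usable capacity and failure tolerance for a single vdev of equal-size disks. It assumes no ZFS metadata, padding, or reserved-space overhead, so real usable capacity will be lower. The minimum disk counts for mirror and RAIDZ1/2/3 follow this article; the one-disk minimum for a stripe is an assumption. The `vdev_summary` function is a hypothetical helper.

```python
# Minimum disks per vdev. RAIDZ minimums follow this article;
# the 1-disk stripe minimum is an assumption for illustration.
MIN_DISKS = {"stripe": 1, "mirror": 2, "raidz1": 3, "raidz2": 4, "raidz3": 5}


def vdev_summary(layout: str, disks: int, disk_tb: float) -> tuple[float, int]:
    """Return (nominal usable TB, disk failures tolerated) for one vdev.

    Ignores ZFS overhead, so treat the capacity as an upper bound.
    """
    if disks < MIN_DISKS[layout]:
        raise ValueError(f"{layout} needs at least {MIN_DISKS[layout]} disks")
    if layout == "stripe":
        # All capacity is usable, but there is no redundancy at all.
        return disks * disk_tb, 0
    if layout == "mirror":
        # Every disk holds a full copy: one disk's worth of space,
        # and the vdev survives until its last disk fails.
        return disk_tb, disks - 1
    parity = int(layout[-1])  # "raidz1" -> 1, "raidz2" -> 2, "raidz3" -> 3
    return (disks - parity) * disk_tb, parity


# A single six-disk vdev of each type, using hypothetical 4TB disks.
for layout in ["stripe", "mirror", "raidz1", "raidz2", "raidz3"]:
    usable, tolerance = vdev_summary(layout, disks=6, disk_tb=4.0)
    print(f"{layout:7} usable ~{usable:5.1f} TB, survives {tolerance} failure(s)")
```

A six-way mirror is shown only for symmetry; in practice mirrors are usually built as several two-disk vdevs, as described below.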

The first vdev option is Stripe, which simply combines all the disks into a single volume. A striped pool provides the best performance and the most storage. However, since there is no redundancy, any single disk failure will result in the loss of all data on the pool.

Mirroring, similar to RAID1, duplicates the data across every drive in the vdev. Because the pool data is striped across all the vdevs, a pool with multiple mirrored vdevs behaves much like a RAID 1+0 array. This setup has excellent redundancy and performance: as long as each mirrored vdev has at least one functional drive, your data will still be intact. Reads can be served from all the drives, and writes are spread across all the vdevs. The downside to pools of mirrored vdevs is the high capacity penalty. For example, with two-way mirrors, getting 10TB of usable space requires over 20TB of raw capacity.
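The 10TB example can be worked through with a small sizing sketch. It assumes a pool built entirely from identical `way`-way mirrored vdevs and uses nominal capacity only; ZFS overhead is why the real raw requirement ends up "over" the nominal figure. The `mirror_pool_plan` function and the 2TB disk size are hypothetical.

```python
import math


def mirror_pool_plan(target_usable_tb: float, disk_tb: float, way: int = 2) -> dict:
    """Size a pool of `way`-way mirrored vdevs for a target usable capacity.

    Nominal figures only (hypothetical helper); ZFS overhead is ignored.
    """
    vdevs = math.ceil(target_usable_tb / disk_tb)  # each mirror vdev adds one disk's worth
    disks = vdevs * way
    return {
        "vdevs": vdevs,
        "disks": disks,
        "raw_tb": disks * disk_tb,
        "usable_tb": vdevs * disk_tb,
        # Worst case: every disk in one vdev fails, losing the pool.
        "min_failures_to_lose_pool": way,
        # Best case: failures spread so each vdev keeps one working disk.
        "max_failures_survived": vdevs * (way - 1),
    }


print(mirror_pool_plan(10, disk_tb=2))  # five two-way mirrors, 20 TB raw
```

Raising `way` to 3 improves redundancy further but pushes the raw requirement to three times the usable space.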

RAIDZ1 (similar in concept to RAID5) offers higher capacity while maintaining a layer of protection for the data. RAIDZ1 requires at least three disks per vdev and consumes one drive's worth of capacity for parity, distributed across all the drives. While RAIDZ1 does offer more protection than a simple striped pool, its use is generally discouraged. With large 6+ TB drives, resilvering a vdev will usually take several hours, and the operation puts a heavy load on the disks. With RAIDZ1, if any other disk in the degraded vdev fails during the resilver, the whole pool fails with it.

Because RAIDZ1 is vulnerable to total pool failures during a resilver operation, we typically recommend using RAIDZ2 for backup and file-sharing configurations. Similar to RAID6, RAIDZ2 adds a second set of parity data to the vdev, again distributed across all the disks. You can lose up to two disks per vdev while maintaining data integrity, meaning RAIDZ2 is safer than RAIDZ1 but has a greater capacity penalty. RAIDZ2 requires a minimum of four disks.
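To put numbers on this trade-off, here is a rough sketch comparing RAIDZ1 and RAIDZ2 on the same hypothetical set of eight 6TB disks. It assumes one disk has already failed and the vdev is resilvering, and it uses nominal capacity only (ZFS metadata and padding overhead are ignored). Both helper functions are hypothetical.

```python
def remaining_margin(parity: int, failed: int) -> int:
    """Additional disk failures a RAIDZ vdev can absorb before it (and the pool) is lost."""
    return parity - failed


def usable_tb(parity: int, disks: int, disk_tb: float) -> float:
    # Nominal: parity consumes `parity` drives' worth of capacity per vdev.
    return (disks - parity) * disk_tb


disks, disk_tb = 8, 6.0  # hypothetical vdev of eight 6TB drives
for name, parity in [("RAIDZ1", 1), ("RAIDZ2", 2)]:
    # One disk has already failed and the vdev is resilvering.
    margin = remaining_margin(parity, failed=1)
    print(f"{name}: ~{usable_tb(parity, disks, disk_tb):.0f} TB usable, "
          f"can lose {margin} more disk(s) during resilver")
```

RAIDZ2 gives up one more drive's worth of capacity, but during the resilver it still has one disk of margin, while RAIDZ1 has none.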

RAIDZ3 adds a third set of parity data to the vdev. It requires at least five disks but allows you to lose up to three disks per vdev without any data loss. This can be a good fit for very large disk arrays used for data archiving, but it is generally not used because of the performance impact of triple parity. iXsystems typically recommends multiple RAIDZ2 vdevs grouped into a large pool for ultra-high-capacity builds.
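The multi-vdev recommendation can be sketched the same way. The function below is a hypothetical helper that computes nominal figures for a pool of identical RAIDZ vdevs, ignoring ZFS overhead, and enforces the per-vdev minimums given in this article. It shows both sides of the pool-wide failure math: the pool can survive up to `parity` failures in every vdev, but only `parity` failures in any single vdev.

```python
def raidz_pool(vdev_count: int, disks_per_vdev: int, parity: int, disk_tb: float) -> dict:
    """Nominal figures for a pool built from identical RAIDZ vdevs (hypothetical helper)."""
    if parity not in (1, 2, 3):
        raise ValueError("parity must be 1, 2 or 3 (RAIDZ1/2/3)")
    min_disks = parity + 2  # RAIDZ1: 3, RAIDZ2: 4, RAIDZ3: 5 disks per vdev
    if disks_per_vdev < min_disks:
        raise ValueError(f"RAIDZ{parity} needs at least {min_disks} disks per vdev")
    return {
        "total_disks": vdev_count * disks_per_vdev,
        "usable_tb": vdev_count * (disks_per_vdev - parity) * disk_tb,
        "parity_disks": vdev_count * parity,
        # Any single vdev losing parity + 1 disks takes the whole pool down.
        "min_failures_to_lose_pool": parity + 1,
        # If failures are spread evenly, each vdev can lose `parity` disks.
        "max_failures_survived": vdev_count * parity,
    }


# Hypothetical build: four eight-disk RAIDZ2 vdevs of 10TB drives.
print(raidz_pool(4, 8, parity=2, disk_tb=10.0))
```

In this example the pool can ride out up to eight failures if they are spread evenly across vdevs, but three failures in the same vdev would take the whole pool down.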

For additional information on ZFS pools and how their configurations can affect performance, read our blog post: Six Metrics for Measuring ZFS Pool Performance.

A hot spare is a drive that isn't used for storage but instead immediately replaces a failed drive in the pool. Because the pool is most vulnerable while it's in a degraded state (i.e., it has failed drives but is still functioning), it's very important to begin the resilver operation as soon as possible. A hot spare ensures that this operation starts immediately, so we highly recommend adding one to your pool if you have space for it.

After you’ve selected a vdev layout, click Create to continue.

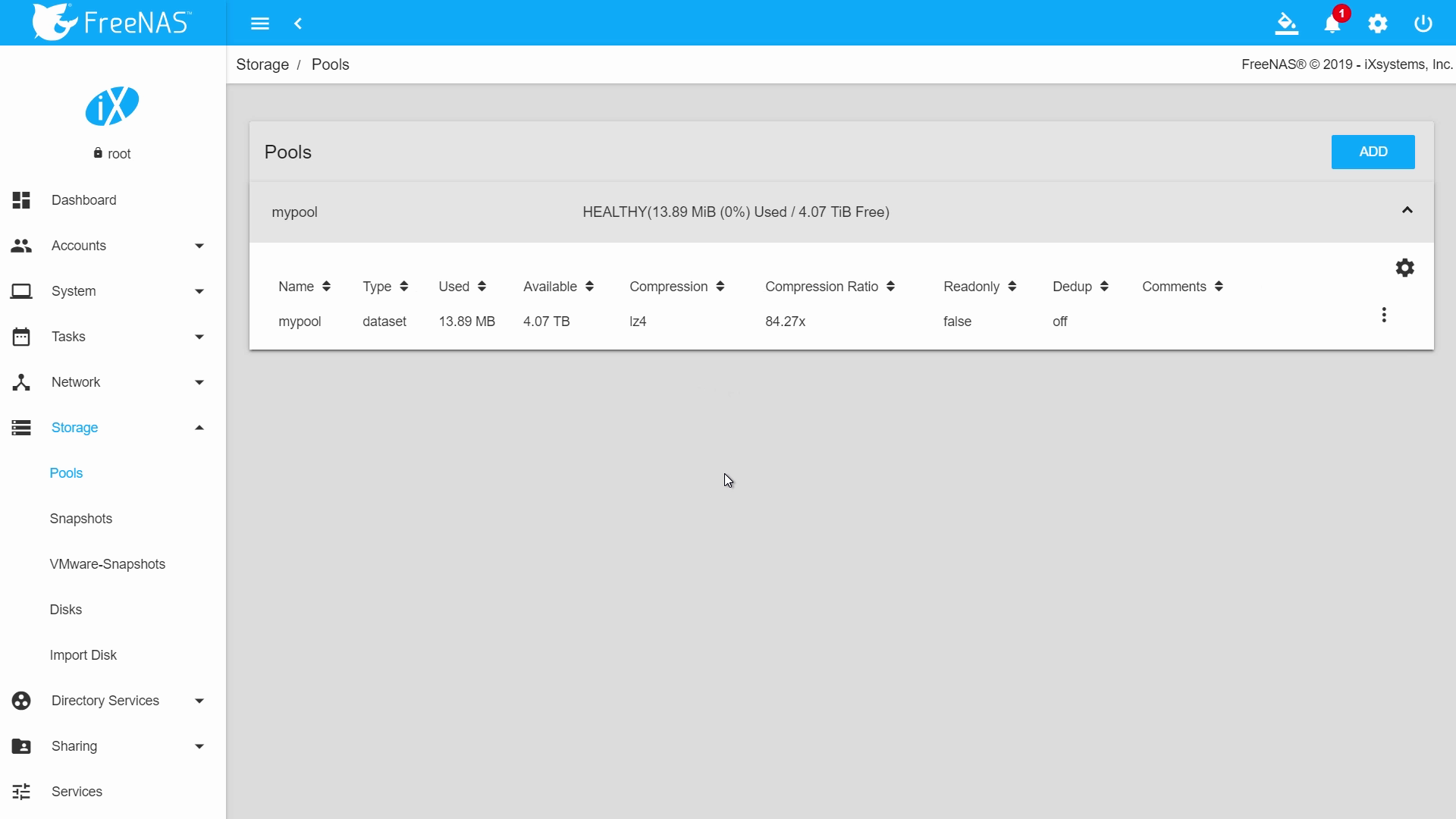

You should now see your newly created pool listed here.

Make sure to check out all of the FreeNAS tutorial videos on our YouTube channel. You can also find more information about managing pools in the FreeNAS Documentation.