> Read it over, you will figure it out.

I'll do that. It will take time. It's 4am over here, so not today. Maybe next week I'll find the time.


Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums


multi_report.sh version for Core and Scale 3.0

- Thread starter TooMuchData

- Start date

- Joined

- Nov 22, 2017

- Messages

- 310

> Wow, that is very similar to my script, even some of my newer changes seem to have migrated into it. I didn't even know it was out there in this version, I recall the original version.

Been working on it for a while; I do my best to both keep it up to date and make it more understandable.

- Joined

- May 28, 2011

- Messages

- 10,996

> Been working on it for a while; I do my best to both keep it up to date and make it more understandable.

That is good. I'm all about the good of the people here; if we create a better product, teach people how to do things, and share knowledge, that is my goal. I need to take a look at what you have. I see some differences for sure that I may want to take advantage of. I know I'm not a very good programmer, so I will take my beating and learn where I can.

@lx05 If I came off as a little harsh last night, it was not my intention to. I was tired as hell when I read the posting and my real point was to read the script, figure out what it's doing. And above all, cause no harm. This is why I try to test the hell out of the scripts I post. I do my due diligence. I can't test every configuration or situation but I make a best effort to make sure the product works on Core and Scale, or I toss a disclaimer that it hasn't been tested on Scale (early beta versions for me are that way). But keep up the good work and learn all you can.

> If I came off as a little harsh last night

No worries.

It was late at night and I came across this feature, was curious and gave it a try. Wanted to get it done quick and dirty (which always goes wrong) and didn't look much into it. Then wanted to throw it out here because the next morning I probably wouldn't have remembered what I changed. I did a lot of crap in the script and now slowly understand how the script fully works. Right now I just use a static variable to achieve the feature I want (which isn't very risky imo, just 2 lines of code) and maybe I'll figure out how to implement it "properly", then I will come back here.

I'm quite short on time right now, as I am taking my German A-levels, but I often do stuff that is fun to me instead of preparing...

Thanks for pointing out the possible impacts of my edits in the script. At the moment I run my TrueNAS mainly for fun and to try out different jails, plugins, dockers... to create an all-in-one machine for all my needs. I don't have a lot of data on there; others might, and it could be drastically more mission-critical than mine is.

- Joined

- May 28, 2011

- Messages

- 10,996

> and now slowly understand how the script fully works.

When you figure it out, teach me how it works.

- Joined

- Nov 22, 2017

- Messages

- 310

> That is good. I'm all about the good of the people here, if we create a better product, teach people how to do things, share knowledge, that is my goal. i need to take a look at what you have, I see some differences for sure that I may want to take advantage of. I know I'm not a very good programmer so I will take my beating and learn where I can.

Is your version on github (or some other dvcs site) somewhere? I do not think I have ever seen a link to it aside from flat downloads.

- Joined

- May 28, 2011

- Messages

- 10,996

> Is your version on github

Yes, here is the site: https://github.com/JoeSchmuck/Multi-Report

So I'm certain that I do not use github properly, but it is a nice safe place to keep a copy of my work. I tend to make changes on my computer, test the crap out of them, then update github. But often the "beta" versions have not been tested, or might be only lightly tested, on Scale, because I don't use Scale; I have a test server set up for this use only. I will test on Scale before I remove the "beta" label. I just uploaded to github the changes I made to fix the minor issue @lx05 had. This is still a beta because I added that change to the version 2.0 that I was in the middle of. I also updated some of the mouseover changes, although I'm not happy with the solution. I'm sure there is a better way, I just don't know it. I'm an engineer by day, not a programmer.

Deeda

Explorer

- Joined

- Feb 16, 2021

- Messages

- 65

- Joined

- May 28, 2011

- Messages

- 10,996

> Possibly a silly question, but I often see the "Last Test Age" come up as something that needs attention. I was wondering what the number refers to?

Not a silly question. The value is the number of days since the last SMART Short or Long test was conducted, as reported by the drive. In your example the last test was a "long" test. There is a value that you can set as the trigger point; by default it's 2 days. You could change it to 1000 days if you desire, but I would never suggest that.

The error could be caused by two things:

1) It really has been that long since the last SMART test, meaning maybe these drives are not included in the SMART Short tests like the rest of your drives are. This does happen; drives get dropped for various reasons.

2) The other possibility is the SMART Short/Long "Hours" are not actually representative of the power on hours value when the test occurs.

I've seen both situations. I would first verify that you are actually conducting testing as you expect. If that is correct then PM me the output of the script "-dump" option and all I really need are all the attached files.

So how to fix this if the drives are reporting incorrectly... One of the last updates I made was to allow customizing each drive if needed. You can use "-config" to generate this, and you would IGNORE the Last Test Age data. This will add entries to the multi_report_config.txt file that are specific overrides for these four drives. But I'd like you to still send me the -dump data if that is what you do. I just want to verify you are doing the correct thing and whether I need to make a change to the script. If you feel the -config option seems confusing, I would ask you to provide me comments and suggestions on how you would improve it, keeping in mind that this is a script and not a nice GUI. I can also provide you an updated config file that you can just drop in, and the configuration is done for those four drives.

But first, find out if the SMART tests are being run. At face value it's been almost 2 years since a SMART test was run on those four drives.
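As a rough illustration of the "Last Test Age" value described above, here is a minimal sketch. It assumes the age is the drive's current power-on hours minus the hours recorded for the last self-test, divided by 24. The function names and the greater-than comparison are hypothetical and are not taken from multi_report.sh; only the 2-day default comes from the post.

```bash
# Hypothetical helper: days elapsed since the last SMART self-test.
# $1 = current power-on hours, $2 = power-on hours recorded for the last test.
last_test_age_days() {
  local power_on_hours=$1 test_hours=$2
  echo $(( (power_on_hours - test_hours) / 24 ))
}

# Hypothetical check against the trigger point ($3, default 2 days as in the post).
last_test_age_status() {
  local threshold=${3:-2} age
  age=$(last_test_age_days "$1" "$2")
  if [ "$age" -gt "$threshold" ]; then
    echo "WARN ${age}d"
  else
    echo "OK ${age}d"
  fi
}

# Example with made-up numbers: 17000 hours between the last test and now.
last_test_age_status 30000 13000
```

To see whether tests are actually being run, the drive's self-test log can be read with `smartctl -l selftest /dev/ada0`, where the device name is only an example.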

- Joined

- Jul 12, 2022

- Messages

- 3,222

Hey, explored the config file a bit and found this bit:

Code:

    ###### FreeNAS config backup settings
    configBackup="true"      # Set to "true" to save config backup (which renders next two options operational); "false" to keep disable config backups.
    configSendDay="Wed"      # Set to the day of the week the config is emailed. (All, Mon, Tue, Wed, Thu, Fri, Sat, Sun, Month)
    saveBackup="false"       # Set to "false" to delete FreeNAS config backup after mail is sent; "true" to keep it in dir below.
    backupLocation="/tmp/"   # Directory in which to store the backup FreeNAS config files.

Now, my TrueNAS config wasn't ever mailed to me: is this a mistake, do I need to do something else, or have I misinterpreted it?

Also I will shamelessly say that it would be nice to have the pool free space fragmentation monitored as well if possible; I did not find anything about it in the config file, my bad if I missed it.

- Joined

- May 28, 2011

- Messages

- 10,996

@Davvo The default config file will send the TrueNAS configuration file on Mondays, but yours looks to be set up for Wednesdays. If that is all you changed then it should be working, but only on Wednesdays (tomorrow). Now I'm assuming you are editing the multi_report_config.txt file, not the script directly. The config file will override the script values when it runs. The script values are only relevant if you do not use an external config file.
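To make the day-of-week behaviour concrete, here is a minimal sketch of how a `configSendDay` value could be compared with the current day. The function `should_send_config` is hypothetical and is not the logic used in multi_report.sh; it handles only the weekday names and "All", and leaves the "Month" option out because the thread does not describe what it does.

```bash
# Hypothetical helper, not taken from multi_report.sh.
# $1 = configSendDay value, $2 = today's abbreviated weekday (defaults to the system date).
should_send_config() {
  local send_day=$1 today=${2:-$(LC_ALL=C date +%a)}
  [ "$send_day" = "All" ] || [ "$send_day" = "$today" ]
}

configSendDay="Wed"
if should_send_config "$configSendDay"; then
  echo "config backup would be attached today"
else
  echo "config backup is not due today"
fi
```

With a cron job that runs only on Wednesdays and `configSendDay="Wed"`, a check like this would pass on every run.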


> Also I will shamelessly say that it would be nice to have the pool free space fragmentation monitored as well if possible; I did not find anything about it in the config file, my bad if I missed it.

Nope, the script as of right now does not monitor fragmentation because I never considered it and no one has asked for it before. It's an easy thing to add actually. I wish there was a tool to defrag ZFS pools but I'm not aware of any as of right now. If there is such a tool, I'd love to hear about it. I would also think TrueNAS would incorporate that tool whenever it becomes available.

So do you want me to add Defrag to the Zpool chart? If yes, I would have it only create a warning not a critical error message. You will be able to set it to a specific value, and from what little I have read on the subject, 80% is still acceptable but nearing 90% is not, so the default threshold will be 80% (user changeable). It will be this weekend when I can get to it and this will be just before I finalize version 2.0.
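A warning-only fragmentation check along these lines could look like the sketch below. It is an illustration under assumptions, not the code that went into the script: `frag_warnings` is a hypothetical name, and it expects "pool fragmentation" pairs on standard input in the form that `zpool list -H -o name,fragmentation` prints.

```bash
# Hypothetical helper: reads "pool frag" pairs on stdin and prints a warning
# for each pool whose fragmentation exceeds the threshold in $1 (default 80).
frag_warnings() {
  local threshold=${1:-80} pool frag
  while read -r pool frag; do
    frag=${frag%\%}                 # strip the trailing percent sign
    case $frag in
      ''|*[!0-9]*) continue ;;      # skip "-" or anything that is not a number
    esac
    if [ "$frag" -gt "$threshold" ]; then
      echo "WARNING: $pool fragmentation ${frag}% exceeds ${threshold}%"
    fi
  done
}

# On a live system the input could come from:
#   zpool list -H -o name,fragmentation | frag_warnings 80
# Sample data (made up) so the sketch can be run anywhere:
printf 'tank\t85%%\nboot-pool\t2%%\nold\t-\n' | frag_warnings 80
```

The threshold is passed as an argument so that it stays user changeable, as proposed in the post.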

- Joined

- Jul 12, 2022

- Messages

- 3,222

> @Davvo The default config file will send the TrueNAS configuration file on Monday's, but yours looks to be setup for Wednesday's. If that is all you changed then it should be working but only on Wednesdays (tomorrow).

I use a cronjob that runs the script only on Wednesdays at a fixed time; I will come back if I don't get the config today.

> Now I'm assuming you are editing the multi_report_config.txt file, not the script directly.

Yup, not touching the script, just using it as a reference for the commented parts above the code.

> I wish there was a tool to defrag ZFS pools but I'm not aware of any as of right now. If there is such a tool, I'd love to hear about it. I would also think TrueNAS would incorporate that tool whenever it becomes available.

If I'm not wrong the pool rebalancing script does defragmentation as a side bonus, but that's under specific conditions (you need to have the space in the pool to store a temporary copy of all your files in order to run the script, iirc). I agree that a more intelligent way to do so would be a great addition to the TrueNAS/ZFS code.

> So do you want me to add Defrag to the Zpool chart? If yes, I would have it only create a warning not a critical error message. You will be able to set it to a specific value, and from what little I have read on the subject, 80% is still acceptable but nearing 90% is not, so the default threshold will be 80% (user changeable). It will be this weekend when I can get to it and this will be just before I finalize version 2.0.

Thank you, that would be wonderful.

- Joined

- May 28, 2011

- Messages

- 10,996

> If I'm not wrong the pool rebalancing script does defragmentation as a side bonus

I'm not so sure about that, not a true defragmentation. It looks like it has the ability to improve fragmentation, as you said, under the right situations, but it doesn't do this by making files contiguous; it does it by spreading the files out evenly amongst many vdevs, if I'm reading it correctly. And to be honest, I have not read about this script before, so 15 seconds of reading does not make me the expert.

I think the only true way to force a defragmentation is to move all the files off to another vdev and then move them back, that would defragment the files but you need to have the space for all the files. Creating a script that does that wouldn't be difficult but you need the extra space and most people just don't have it.

I looked at my FRAG value last night, 2%. But then again I don't store a lot of changing data on my NAS.

> I use a cronjob that runs the script only at Wednesday at a fixed time; I will come again if I won't get the config today.

Let me know how that goes. If it fails to work, send me a copy of your config file so I can test it. I have no idea why it wouldn't work, but maybe I induced an error. Never know.

If I have time this weekend I will update the script, the wife hit me with a failing refrigerator last night. My priority has shifted, must have refrigerator. I hope I can fix it, much cheaper than a new one.

- Joined

- Jul 12, 2022

- Messages

- 3,222

> I'm not so sure about that, not a true defragmentation. It looks like it has the ability to improve fragmentation, as you said, under the right situations, but it doesn't do this by making files contiguous; it does it by spreading the files out evenly amongst many vdevs, if I'm reading it correctly. And to be honest, I have not read about this script before, so 15 seconds of reading does not make me the expert.
>
> I think the only true way to force a defragmentation is to move all the files off to another vdev and then move them back, that would defragment the files but you need to have the space for all the files. Creating a script that does that wouldn't be difficult but you need the extra space and most people just don't have it.
>
> I looked at my FRAG value last night, 2%. But then again I don't store a lot of changing data on my NAS.

Well, I don't think it's necessary to use another vdev; if the data is fragmented and there is enough space available, you just need to rewrite the data in order to get those blocks written in sequential order, then free the older blocks that stored such data. That's what that script does, because incidentally it is the same process used for balancing the pool, since ZFS stripes the data across different vdevs. It's a dumb method that works for home use.
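The rewrite idea discussed here (copy the data so it lands in freshly allocated blocks, then drop the old copy) can be sketched for a single file as follows. This is a bare illustration and not the rebalancing script mentioned in the thread; `rewrite_file` and the `.rewrite.tmp` suffix are hypothetical names.

```bash
# Hypothetical helper: rewrite one file by copying it and swapping the copy in.
rewrite_file() {
  local src=$1 tmp="$1.rewrite.tmp"
  cp -p -- "$src" "$tmp" || return 1       # the copy is written to newly allocated blocks
  if ! cmp -s -- "$src" "$tmp"; then       # verify the copy before replacing the original
    rm -f -- "$tmp"
    return 1
  fi
  mv -f -- "$tmp" "$src"                   # the old blocks can be freed once nothing references them
}
```

A call would look like `rewrite_file /mnt/tank/data/somefile`, where the path is only an example. Two caveats apply to any approach of this kind: the pool needs enough free space for the temporary copy, and blocks that are still referenced by a snapshot are not freed by the rewrite.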

> Let me know how that goes. If it fails to work, send send me a copy of your config file so I can test it. I have no idea why it wouldn't work but maybe I induced an error. Never know.

Will do, but I'm confident it will work.

> If I have time this weekend I will update the script, the wife hit me with a failing refrigerator last night. My priority has shifted, must have refrigerator. I hope I can fix it, much cheaper than a new one.

Ouch, that's definitely a priority, though now I imagine your wife physically bonking you with the refrigerator in your geeky lair.

- Joined

- May 28, 2011

- Messages

- 10,996

EDIT: Updated the file to v2.0.2 to fix two problems.

Here is Version 2.0.2. Most of the updates were done last week, until I saw the version @dak180 was working on (thanks @dak180). I have now added data collection for the drives in JSON format as well, and if it proves to work out, it will replace the 'a' and 'x' files currently collected.

The changes you get with this version are a few added or updated features and better readability for the help information, but the most important was adding better data collection and email support in order to troubleshoot a problem swiftly and accurately. Right now I can simulate a single drive at a time, which is why I ask for the drive data.

With the custom drive configuration you can really edit each drive. For SSD/NVMe drives that show Wear Level in reverse (0 = 100% good), there is a reverse option now. Because this option was added, if you already have a custom drive configuration, you will need to update it by deleting the custom data and recreating it, or you will get a notification that it's out of date.

The Email Support will now send me -dump files to the email address I created just for this project. This will ease the burden on the user. However, I will know what your email address is, and some people will be uneasy about this. Several people here can tell you that I will not share your email address, and I will not. I will communicate back to you via the email address it came from. If you prefer to just start a conversation here in the Forum and send attachments that way, that is fine too and will keep you anonymous. I just thought I'd make it easier for some people. To use this feature use the -dump email parameter and answer "y" to the question if you want to send the data to Joe. If you answer anything else, the dump will only go to your email address.

The one thing I have not been able to make work as I'd like is the Mouseover feature, so there is an alternate method available. If someone knows how to make this feature work properly, I'm willing to accept code that actually works in the email HTML.

### Changelog:

    # V2.0.2 (22 January 2023)
    # - Fix Wear Level that may fail on some drives.
    #
    # v2.0.1 (21 January 2023)
    # - Fixed Zpool Fragmentation Warning for 9% and greater (Hex Math issue again).
    #
    # v2.0 (21 January 2023)
    # - Formatted all -config screens to fit into 80 column x 24 lines.
    # - Removed custom builds
    # - Fixed Custom Configuration Delete Function.
    # - Fixed Zpool Scrub Bytes for FreeNAS 11.x
    # - Fixed SMART Test to allow for 'Offline' value.
    # - Modified Wear Level script to account for 'Reverse' Adjustment.
    # - Added Wear Level Adjustment Reversing to the Custom Drive configuration.
    # - Added Output.html to -dump command.
    # - Added Mouseover and Alternate '()' to Mouseover for normalized values (Reallocated Sectors, Reallocated Sector Events, UDMA CRC, MultiZone).
    # - Updated Testing Code to accept both drive_a and drive_x files.
    # - Added Zpool Fragmentation value by request.
    # - Added '-dump email' parameter to send joeschmuck2023@hotmail.com an email with the drive data and the multi_report_config.txt file ONLY.
    # - Added Drive dump data in JSON format. It looks like a better way to parse the drive data. Still retaining the older file format for now.
    #
    # The multi_report_config file will automatically update previous versions to add new features.

Please let me know if you find an error or concern. You can let me know here or at the email address joeschmuck2023@hotmail.com

Last Note: Please do not think that I don't have a day job, I do. If you send me an email, I will get to it when I get home from work, if I don't have other pressing matters like an ill wife. Most of the problems I see have easy fixes but may take a few hours to fix and then test on Core and Scale before I send the fix out. Testing after the fix takes the most time. Also, if you see the script provide a strange output, run the script again and verify it didn't burp on you. I'm not sure why BASH can crap on you but it happens. Not often, but it does happen. Run the script again and verify the problem occurred before sending me something. I'm working to eliminate that issue (fingers crossed).

Cheers,

-Joe


- Joined

- May 28, 2011

- Messages

- 10,996

Thanks for the heads up. I just checked the script, and of course I hadn't tested any fragmentation value above 5%. The Hex math got me yet again. We now convert it to decimal (base 10) and things are working again, I hope for everyone. I've updated the file, please download it again. The version is now 2.0.1 and includes the fix. Hope this works for you. Please let us know.
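The thread does not show the actual fix, so the following is only a sketch of one common way to force a decimal interpretation in Bash arithmetic. It assumes the failure involved a value being read in a non-decimal base; for instance, Bash treats a number with a leading zero as octal, so `09` is rejected unless the base is given explicitly with the `10#` prefix. The function name `to_decimal` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: coerce a numeric string to a base-10 integer.
to_decimal() {
  local value=${1%\%}      # drop a trailing percent sign, if any
  echo $(( 10#$value ))    # 10# forces base 10, so "09" becomes 9 instead of an octal error
}

frag=$(to_decimal "09%")
if (( frag > 5 )); then
  echo "fragmentation ${frag}% is above 5%"
fi
```

Running values through a helper like this before any comparison keeps the arithmetic consistent regardless of how the number was formatted in the command output.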

- Joined

- Jul 12, 2022

- Messages

- 3,222

Download from here until the resource gets updated I guess?

GitHub - JoeSchmuck/Multi-Report: FreeNAS/TrueNAS Script for emailed drive information. https://github.com/JoeSchmuck/Multi-Report

- Joined

- May 28, 2011

- Messages

- 10,996

> Download from here until the resource gets updated I guess?
>
> GitHub - JoeSchmuck/Multi-Report: FreeNAS/TrueNAS Script for emailed drive information.

I posted the update, or thought I attached it. Must have just removed it. I attached it again above. But yes, the github location will work as well, it's been updated.

NickF

Guru

- Joined

- Jun 12, 2014

- Messages

- 763

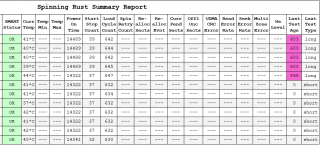

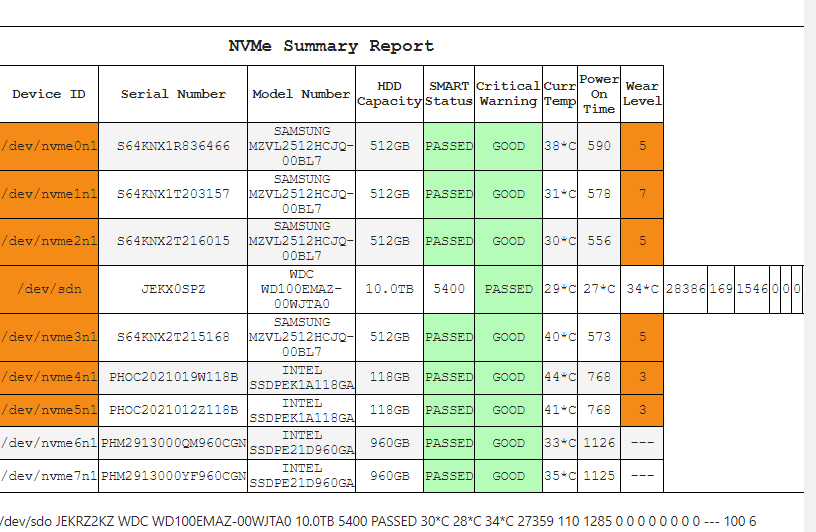

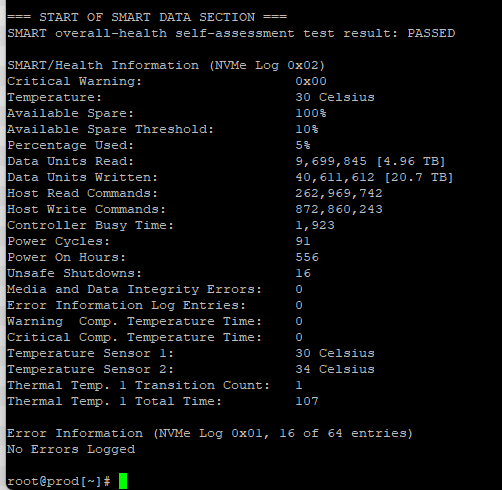

I just downloaded it and ran it on my system, which has a fairly complex setup. There is a weird error where it thinks one of my SAS drives is an NVMe drive. Full report is here: https://fuscolabscom-my.sharepoint....lDiiW1XnjjB7sBdXWjoTcIoluxLWZvMz3Zvg?e=c32IK3

I'm also not entirely sure what the "Wear Level counter" indicator means.

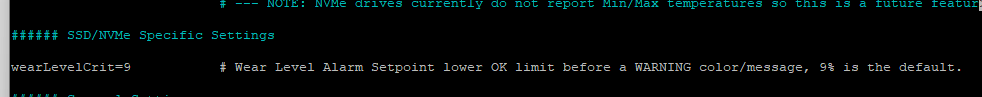

The config file says:

Does that mean that if I've used 9% of the drive that it will alarm?

The script is triggering a WARN status, but I've only used 5% of the drive's endurance with 95% remaining.

In any case, this is really cool. Thank you for your hard work!!
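One possible reading of the WARN described in this post is that the drive reports wear as "percent used" while the check expects "percent remaining", which is the case the reverse option in the custom drive configuration is meant for. The sketch below illustrates that idea only; the function names and the threshold of 9 are hypothetical and are not taken from multi_report.sh or its config file.

```bash
# Hypothetical helper: normalise a wear value to "percent of life remaining".
# $1 = value reported by the drive, $2 = "true" if the drive counts up from 0 (percent used).
wear_remaining() {
  local reported=$1 reverse=${2:-false}
  if [ "$reverse" = "true" ]; then
    echo $(( 100 - reported ))
  else
    echo "$reported"
  fi
}

# Hypothetical check: warn when remaining life falls below a threshold
# ($3, default 9, a value chosen for illustration only).
wear_status() {
  local threshold=${3:-9} remaining
  remaining=$(wear_remaining "$1" "$2")
  if [ "$remaining" -lt "$threshold" ]; then
    echo "WARN"
  else
    echo "OK"
  fi
}

wear_status 5 false   # a "5% used" drive read as 5% remaining
wear_status 5 true    # the same drive with the reverse adjustment applied
```

In this sketch the first call reports a warning and the second does not, which matches the symptom of a drive with 95% endurance remaining being flagged.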
