This guide is meant to be read from top to bottom without jumping around, with the intent of spreading awareness among both new and experienced users; it assumes an understanding of the concepts explained in the following resources:

DISK FAILURE RATE

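Assuming the probability of any single drive failing is p, the VDEV size/width is n, and the number of drives that fail simultaneously in that VDEV is X, then:

P(X) = C(n,X) * p^X * (1-p)^(n-X)

where C(n,X) is the binomial coefficient (the number of ways to choose X drives out of n).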

This formula allows us to calculate the probability of X drives failing at the same time in our VDEV.
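A minimal Python sketch of the binomial formula P(X) = C(n,X) * p^X * (1-p)^(n-X), the same form used for the RAIDZ2 example later in this guide. It assumes every drive fails independently with the same probability p; the names `p_failed` and `failure_distribution` are illustrative and not part of any ZFS tooling.

```python
from math import comb


def p_failed(x: int, n: int, p: float) -> float:
    """Probability that exactly x out of n drives fail.

    Assumes each drive fails independently with the same probability p.
    """
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def failure_distribution(n: int, p: float) -> list[float]:
    """P(X) for X = 0..n; the values sum to 1."""
    return [p_failed(x, n, p) for x in range(n + 1)]


if __name__ == "__main__":
    # Print the full distribution for a VDEV of width n.
    n, p = 2, 0.03
    for x, prob in enumerate(failure_distribution(n, p)):
        print(f"X={x}: {prob:.2%}")
```

Changing `n` and `p` in the last block gives the distribution for any other VDEV width or failure rate.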

Now, assuming that p = 0.03 (3%) and that n = 2 (vdev is a 2-way mirror), we get the following numbers:

| X | 0 | 1 | 2 |
|---|---|---|---|
| P(X) | 0.941 | 0.058 | 0.001 |
| % | 94.09 | 5.82 | 0.09 |

Which means that we have a 94.09% probability of losing neither disk, a 5.82% probability of losing exactly one disk, and a 0.09% probability (0.03 * 0.03 = 0.0009) of simultaneously losing both disks. Because we are using a 2-way mirror, we encounter data loss only when both drives organize to go on strike together: as such, the data loss probability of our VDEV is 0.09%.

The data loss probability of a POOL composed of more than a single VDEV is approximately the individual VDEV data loss probability multiplied by the number of VDEVs; assuming a POOL composed of 3 VDEVs in 2-way mirror, we get the following numbers: 3 * 0.09% = 0.27%.

If we consider instead a single VDEV composed of 6 disks in RAIDZ2, we get the following equation:

P(X) = C(6,X) * (0.03)^X * (1-0.03)^(6-X)

n = 6 because the VDEV has six drives

| X | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| P(X) | 0.833 | 0.155 | 0.012 | 0.001 |
| % | 83.29 | 15.46 | 1.19 | 0.05 |

Which means that we have an 83.29% probability of losing no disks, a 15.46% probability of losing a single disk, a 1.19% probability of simultaneously losing two disks, and a 0.05% probability of simultaneously losing three disks. Because we are using RAIDZ2 (2 parity drives) we encounter data loss only when three disks organize to go on strike together: the data loss probability of our VDEV is 0.05%.
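Strictly speaking, a VDEV is lost when more drives fail than it has redundancy for, so for RAIDZ2 the loss probability is the sum of P(X) for X = 3..6; the X = 3 term dominates, which is why 0.05% is a good figure. The sketch below, assuming independent drive failures and independent VDEVs, computes that tail and compares the exact pool-level probability 1 - (1 - q)^N with the N * q shortcut. The function names are illustrative.

```python
from math import comb


def vdev_loss(n: int, redundancy: int, p: float) -> float:
    """Probability that more than `redundancy` of n drives fail.

    redundancy is 1 for a 2-way mirror, 2 for RAIDZ2, and so on.
    """
    return sum(
        comb(n, x) * p**x * (1 - p) ** (n - x)
        for x in range(redundancy + 1, n + 1)
    )


def pool_loss(vdev_loss_p: float, vdevs: int) -> float:
    """Exact probability that at least one of `vdevs` identical VDEVs is lost."""
    return 1 - (1 - vdev_loss_p) ** vdevs


def pool_loss_approx(vdev_loss_p: float, vdevs: int) -> float:
    """The N * q shortcut; close to the exact value when q is small."""
    return vdev_loss_p * vdevs
```

For example, `vdev_loss(6, 2, 0.03)` evaluates to about 0.05%, and for loss probabilities this small `pool_loss` and `pool_loss_approx` agree to several decimal places.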

Compared to the first example (3 mirror VDEVs), this POOL's data loss probability is markedly lower while using the same number of drives, and the difference grows larger as the POOL expands, as shown in the following graphs.

Granted, performance-wise the RAIDZ VDEV is going to be left in the dust; this also applies to stressful operations such as resilvering and scrubbing:

About the resilvering process and the sequential resilver introduced in OpenZFS 2.0: Video and Slides.

Those partial quotes are extracts of posts from a much wider discussion; what matters to us is that RAIDZ (in any of its configurations) is hit harder by such operations.

THE URE IMPACT

Mechanical failures are not the only way spinning rust can harm our data: today's drives use ECC to make sure they read back the correct data, and when that fails you get a URE, or Unrecoverable Read Error: reading a sector has failed in a way that the drive cannot fix. Quoting this answer on su:

About ECC in Hard Drives.

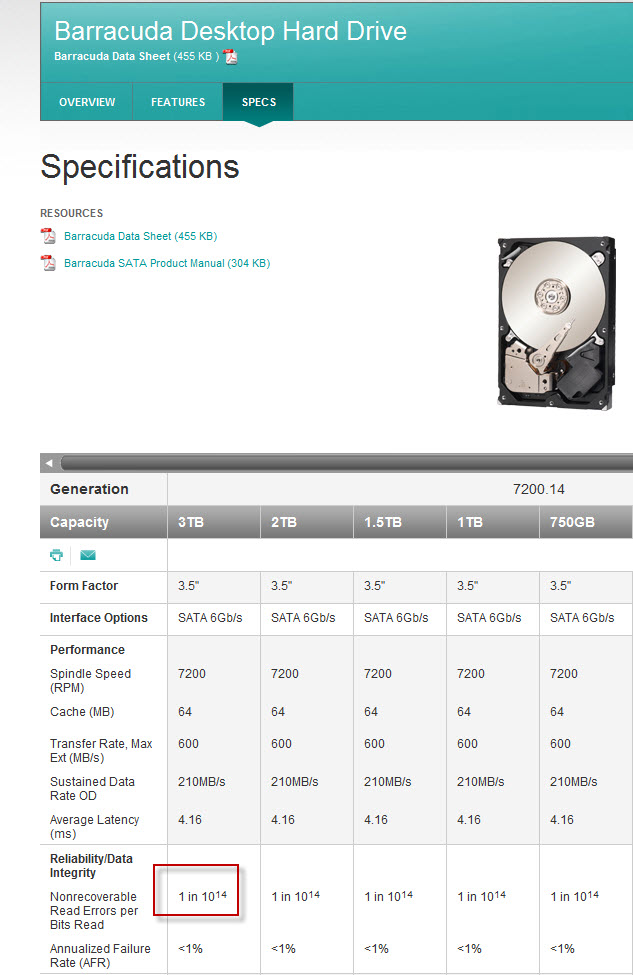

Probably for marketing reasons, different manufacturers use different notations to express their drives' URE value; comparing WD and Seagate shows that the former uses 1 in 10^14 and the latter 1 per 10E14: the difference is massive because the first notation means 1e-14 while the second, read literally, means 1e-15, a whole order of magnitude smaller! It has however been stated that the value has to be read as 1e-14.

Most drive manufacturers report a URE rate of 1e-14 (e = 10^), i.e. one unrecoverable error per 10^14 bits read, for common grade disks and 1e-15 for enterprise/pro ones... but how does this influence our POOL?

In order to find out, we need to go back to our single 2-way mirror VDEV: assuming we are using 6TB drives and that our pool is 80% full, let's calculate the probability of a URE during resilver.

P(URE) = 1 - (1-URE)^bit_read

where bit_read is the data to resilver expressed in bits: in this case 6*8e12*0.8 bits.

8e12 is the equivalent in bits of a single TB.

Running the numbers with a URE rate of 1e-14 tells us the probability of encountering a URE during the resilver of our VDEV is 32%; if we use a drive with a URE rate of 1e-15, said probability drops to just 4%.

Switching to a 6-wide RAIDZ2 with no parity remaining (having lost two out of 6 drives), the bit_read value is 6*8e12*0.8 multiplied by 4 (the surviving drives, all of which must be read).

Running the numbers with a URE rate of 1e-14 gives a chilling 78% probability of scrambling for backup and coffee; using drives with a URE rate of 1e-15, the probability drops to 14%.
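The four percentages above can be reproduced with a short Python sketch of P(URE) = 1 - (1-URE)^bit_read. It assumes every bit read is an independent trial with the vendor's stated error rate. Because 1 - 1e-14 is too close to 1 for a naive power to be accurate in floating point, the sketch uses the mathematically equivalent `-expm1(bit_read * log1p(-URE))`. Function names are illustrative.

```python
from math import expm1, log1p

BITS_PER_TB = 8e12  # 1 TB = 1e12 bytes = 8e12 bits


def resilver_bits(drive_tb: float, fill: float, drives_read: int = 1) -> float:
    """Bits that must be read to resilver: capacity * fill * drives read."""
    return drive_tb * BITS_PER_TB * fill * drives_read


def p_ure(ure_rate: float, bits_read: float) -> float:
    """P(URE) = 1 - (1 - ure_rate)^bits_read, evaluated in a stable form."""
    return -expm1(bits_read * log1p(-ure_rate))


if __name__ == "__main__":
    mirror = resilver_bits(6, 0.8)                 # one surviving drive
    raidz2 = resilver_bits(6, 0.8, drives_read=4)  # four surviving drives
    for label, bits in (("2-way mirror", mirror), ("6-wide RAIDZ2", raidz2)):
        for rate in (1e-14, 1e-15):
            print(f"{label}, URE {rate:g}: {p_ure(rate, bits):.0%}")
```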

REASONABLE CRITICISM

Scary numbers, aren't they? While everything we have seen so far is correct, it's time to address a few issues that arise from the same root: calculations are only as valid as the parameters used.

The proper name of what we previously called the probability of any single drive failing (the p value in the first formula) is Annualized Failure Rate: the AFR is an estimated probability that a disk will fail during a full year of use, a relation between the mean time between failures (MTBF) and the hours that a number of devices are run per year.

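AFR = 8766 / MTBF (or 8760 / MTBF), with MTBF expressed in hours; 8766 and 8760 are the hours in a year with and without leap years averaged in.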
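As a small sketch of the relation above in Python: `afr_linear` is the hours-per-year divided by MTBF form, while `afr_exponential` is an assumption on my part, the common refinement that models drive lifetimes with a constant failure rate (AFR = 1 - exp(-hours/MTBF)). The two agree closely whenever the MTBF is much larger than a year. The 1,000,000 hour MTBF in the example is a hypothetical figure, not taken from any datasheet.

```python
from math import expm1

HOURS_PER_YEAR = 8766  # 365.25 days; use 8760 to ignore leap years


def afr_linear(mtbf_hours: float, hours_per_year: float = HOURS_PER_YEAR) -> float:
    """AFR as hours per year divided by MTBF."""
    return hours_per_year / mtbf_hours


def afr_exponential(mtbf_hours: float, hours_per_year: float = HOURS_PER_YEAR) -> float:
    """AFR assuming a constant failure rate: 1 - exp(-hours / MTBF)."""
    return -expm1(-hours_per_year / mtbf_hours)


def mtbf_for_afr(afr: float, hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Invert the linear form: the MTBF (in hours) implied by a given AFR."""
    return hours_per_year / afr


if __name__ == "__main__":
    print(f"{afr_linear(1_000_000):.4%}")       # hypothetical 1,000,000 h MTBF
    print(f"{afr_exponential(1_000_000):.4%}")
    print(f"{mtbf_for_afr(0.03):,.0f} hours")   # MTBF implied by a 3% AFR
```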

A few points need to be made here: first, both AFR and MTBF as given by vendors are population statistics that cannot predict the behaviour of an individual disk; second, the AFR will increase towards and beyond the end of the service life (read: warranty) of the drive. The 3% used in the calculations so far is an estimated mean from an analysis of over 5 years of replacement logs for a large sample of drives.

Annualized failure rate - Wikipedia

Hard disk drive reliability and MTBF / AFR | Support Seagate US

Seagate is no longer using the industry standard "Mean Time Between Failures" (MTBF) to quantify disk drive average failure rates. Seagate is changing to another standard: "Annualized Failure Rate" (AFR).

knowledge.seagate.com

This is a partial quote from an excellent article that I strongly recommend reading, written by Valerie Henson back in 2007.

Before moving on I want to point out the following analysis of WD's RED PRO low endurance ratings (dated March 2022); it would merit a few lines, but this resource is already awfully long, so go take a look.

Discussing Low WD Red Pro NAS Hard Drive Endurance Ratings

We discuss why the WD Red Pro 20TB has an almost shockingly low endurance rating that can be bested by low-cost consumer QLC SSDs

With regard to the URE numbers, the elephant in the room is that despite calculations foretelling catastrophic rates during resilvering/rebuilding (this point is intimately linked with the 'RAID5/Z1 is dead' argument), very few such events seem to happen.

Bonus Tip - Unrecoverable Errors in RAID 5

What is the probability of successfully completing the rebuild in RAID 5 array.

www.raidtips.com

Why RAID-5 Stops Working in 2009 - Not Necessarily

Why RAID-5 Stops Working in 2009 - Not Necessarily. How the Bit Error Rate May Not Be Critical to RAID-5 Disk Arrays. By Darren McBride

Using RAID-5 Means the Sky is Falling, and Other False Claims

Benchmark Reviews Editorial: Using RAID-5 means the sky is falling, and other false claims based on hard disk drive URE rates during array rebuild.

The case of the 12TB URE: Explained and debunked

Spoiler: it’s a myth! URE: Unrecoverable Read Error – an item in the spec sheet of hard drives and a source of a myth. Many claim that reading around 12TB of data from a consumer grade H…

I haven't been able to find reliable information about how the OEMs test for UREs and what the legal constraints behind this process are: we don't know values such as the variance or standard deviation between the reported value and the actual values, but we are observing huge discrepancies; having a scientific background, this annoys me greatly.

Personally I can't endorse this statement without reliable data, and I can't suggest the use of a 22TB drive with a declared URE of 1e-14; what's certain is that the lack of independent and reliable testing (scientific method and large enough population), in conjunction with what is perhaps a grey area in regulations, is hurting us consumers.

THE SAFETY MINDSET

The point of this resource is to expand the constantly growing safety mindset that this community brings to the table and shares, providing the necessary tools to identify the dangers of data corruption.

The formula that ties the two main parameters we have analyzed is the following.

P(LOSS) = P(DISK) * P(URE) * N

where N is the number of VDEVs that compose our POOL and P(DISK) is the probability of losing all the parity drives of a single VDEV, i.e. of exactly as many drives failing as the VDEV has redundancy for (one drive in a 2-way mirror, two drives in a RAIDZ2), which leaves the resilver with no redundancy left.

| 6 disks layout, 80% full | P(LOSS) with URE = 1e-14 | P(LOSS) with URE = 1e-15 |
|---|---|---|
| 3 mirror VDEVs | 5.6% | 0.7% |
| 1 raidz2 VDEV | 0.9% | 0.2% |

Not so high numbers now, right? That's because as high as the calculated P(URE) is, it really matters only when no parity is in place in our VDEVs.
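The table can be reproduced with the sketch below, which combines P(LOSS) = P(DISK) * P(URE) * N with the binomial and URE formulas used earlier in this guide. It assumes p = 0.03, 6TB drives and an 80% full pool, as in the previous sections, and takes P(DISK) as the probability of exactly `redundancy` drives failing (1 for a 2-way mirror, 2 for RAIDZ2). The function and parameter names are illustrative.

```python
from math import comb, expm1, log1p


def p_exactly(x: int, n: int, p: float) -> float:
    """Binomial probability that exactly x of n drives fail."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def p_ure(ure_rate: float, bits_read: float) -> float:
    """1 - (1 - ure_rate)^bits_read, in a numerically stable form."""
    return -expm1(bits_read * log1p(-ure_rate))


def p_loss(n: int, redundancy: int, vdevs: int, drives_read: int,
           ure_rate: float, afr: float = 0.03,
           drive_tb: float = 6, fill: float = 0.8) -> float:
    """P(LOSS) = P(DISK) * P(URE) * N for a pool of identical VDEVs."""
    p_disk = p_exactly(redundancy, n, afr)            # all redundancy gone
    bits_read = drive_tb * 8e12 * fill * drives_read  # data read to resilver
    return p_disk * p_ure(ure_rate, bits_read) * vdevs


if __name__ == "__main__":
    for rate in (1e-14, 1e-15):
        mirrors = p_loss(n=2, redundancy=1, vdevs=3, drives_read=1, ure_rate=rate)
        raidz2 = p_loss(n=6, redundancy=2, vdevs=1, drives_read=4, ure_rate=rate)
        print(f"URE {rate:g}: 3 mirrors {mirrors:.1%}, 1 raidz2 {raidz2:.1%}")
```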

As we have seen in this resource, numbers are not absolute: almost every value considered in this document is the result of population statistics and averages. Keeping in mind that defective drives exist is of primary importance and needs to be taken into consideration when designing our POOLs.

As we understood at the beginning of this resource, resilvering is a critical time for any VDEV, and mirrors handle this stressful process more gracefully than any RAIDZ: they reduce the time the process takes to complete, and the larger the drives, the greater the improvement. Especially on elderly drives, this reduces the risk of faults popping up (from internal component failures to UREs).

Other vital points that we must consider in order to evaluate the risks of our VDEVs are our monitoring capabilities and our response time.

Proper (frequent and regular, by scripts or manually) monitoring greatly enhances the resiliency of any VDEV, allowing us either to intervene preemptively or to prepare for a critical situation, reducing our response time: how quickly we address a critical event (i.e. a faulty drive) is as important as the parity level of our VDEVs; the faster we are able to bring a POOL out of a degraded status, the safer our data is.
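As one possible way to script such monitoring, here is a minimal Python sketch that wraps `zpool status -x`. It assumes the `zpool` binary is on the PATH and that the command prints the single line `all pools are healthy` when nothing needs attention, which is my understanding of its behaviour on OpenZFS. The function names are hypothetical, and anything other than that line (including "no pools available") is treated as needing a look.

```python
import subprocess


def pools_are_healthy(status_output: str) -> bool:
    """True only when `zpool status -x` reported no problems at all."""
    return status_output.strip() == "all pools are healthy"


def check_pools() -> bool:
    """Run `zpool status -x` and report whether every pool is healthy.

    Assumes `zpool` is installed and the caller is allowed to run it.
    A non-zero exit code is treated as "not healthy".
    """
    result = subprocess.run(
        ["zpool", "status", "-x"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return False
    return pools_are_healthy(result.stdout)
```

Calling `check_pools()` from a scheduled job and sending a notification when it returns `False` is one way to shorten the response time discussed above; how the alert is delivered is left out on purpose.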

In the end risk acceptance is subjective, data loss being painful is not.