
PART 1:

WHY AN ESXi SERVER?

I have a personal preference for virtualization. It started a long time ago with VMware Workstation, which was easy to operate on my Windoze computer, and I'm accustomed to it. While this thread is mainly about how to select the right components and put together a server, it is also about defining requirements and teaching component selection. You can use it to build a pure TrueNAS server or a Type 1 hypervisor like I am doing. I will not go into much detail about the virtual environment. The big thing to take from the virtual environment for this thread is which components you can pass through ESXi to your TrueNAS VM. This is a critical part, and everything I selected was chosen with that in mind.

REQUIREMENTS and COMPONENT LISTING

This is a project I've wanted to do for several years. Thanks to some very good recent sale prices, the fact that my hard drives had reached double their warranty life, and the fact that I rarely spend money on myself, I was able to justify the cost.

The highlights are:

Cost: $2389.57 USD, including tax and shipping.

Total Storage: RAIDZ1 = 10.1TB, RAIDZ2 = 6.67TB (approximate values; see the storage discussion below, as I still have decisions to make).

Power Consumption at Idle: ~41 W at the electrical outlet (half that of my spinning-disk server)

Silent Operation (unless you have dog ears)

Full Parts List:

ASRock Rack B650D4U Micro-ATX Motherboard - $299 USD

Two Kingston 32GB ECC Unbuffered DDR5 4800 Model KSM48E40BD8KM-32HM - $114.99 USD each ($229.98)

AMD Ryzen 5 7600X 6-Core 4.7 GHz CPU - $249 USD

Cooler Master Hyper 212 Spectrum V3 CPU Air Cooler - $29.99 USD

Prolimatech PRO-PK3-1.5G Nano Aluminum High-Grade Thermal Compound - $7.99 USD

Six 4TB NVMe Drives (Nextorage Japan Internal SSD 4TB for PS5 with Heatsink, PCIe Gen 4.0) - $199.99 USD each ($1199.94)

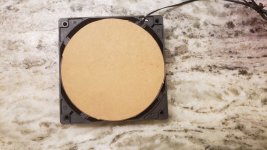

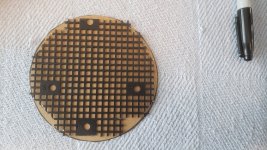

Quad M.2 NVMe to PCIe Adapter (Newegg Item 9SIARE9JVF1975) - $35.90 USD

Corsair RM750e Fully Modular Low-Noise ATX Power Supply - $99.99 USD

ASUS AP201 Type-C Airflow-focused Micro-ATX, Mini-ITX Computer Case - $79.97 USD
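A quick sanity check on the parts list: summing the listed prices gives the pre-tax subtotal, and subtracting that from the $2389.57 total gives the implied tax and shipping. This is only a sketch; the tax/shipping figure is derived from the numbers above rather than stated anywhere, and it uses $199.99 per drive, which is what the $1199.94 drive total implies. `Decimal` is used to avoid floating-point rounding on currency.

```python
from decimal import Decimal

# Prices as listed in the parts list above (USD, before tax and shipping).
parts = {
    "ASRock Rack B650D4U": Decimal("299.00"),
    "2x Kingston KSM48E40BD8KM-32HM": 2 * Decimal("114.99"),
    "AMD Ryzen 5 7600X": Decimal("249.00"),
    "Cooler Master Hyper 212 Spectrum V3": Decimal("29.99"),
    "Prolimatech PRO-PK3-1.5G": Decimal("7.99"),
    "6x Nextorage 4TB NVMe": 6 * Decimal("199.99"),
    "Quad M.2 NVMe to PCIe adapter": Decimal("35.90"),
    "Corsair RM750e": Decimal("99.99"),
    "ASUS AP201": Decimal("79.97"),
}

subtotal = sum(parts.values())
total_paid = Decimal("2389.57")  # total from the post, tax and shipping included
print(subtotal)               # 2231.76
print(total_paid - subtotal)  # 157.81 implied tax and shipping
```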

Does this make financial sense compared to replacing my old spinners with new spinners? Nope. The money I spent would take over 10 years to recoup in power savings alone, assuming power costs remain the same. We know they will not stay the same, but they will not double either. My four hard drives were near the end of their life, and new spinners would have cost about what my first set of hard drives cost about 10 years ago. I rationalized that the NVMe drives should theoretically last at least 10 years. I also wanted a more efficient and faster CPU, even though I did not need the extra speed. Want overrode Need this time, but that is rare for me.

I will go through my parts selection and the rationale behind each choice. My hope is that this thread helps others do the legwork of selecting components for themselves. There is always some risk, and if you are unwilling to take it, you should not build your own system.

First of all, it took me months to decide what my system requirements were. I used a spreadsheet to document it all, including potential hardware choices and NAS capacities for RAIDZ1, RAIDZ2, and even mirrors, with all the capacity calculations. A while back, @Arwen had a thread about finding a low-power system with a minimum of 20 PCIe lanes, which re-sparked my interest in a new system. I am very thankful for that thread, as it ultimately led me to purchase the system I now have.
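The payback reasoning above is simple arithmetic. A minimal sketch, assuming the saving is the ~41 W idle difference (the new box draws half of the old server's idle power) running around the clock. The electricity price and the extra cost over buying new spinners are hypothetical placeholders, not figures from this post; load power and changing rates will move the result.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_kwh(watts_saved: float) -> float:
    """Energy saved per year if the wattage difference holds 24/7."""
    return watts_saved * HOURS_PER_YEAR / 1000


def payback_years(extra_cost: float, watts_saved: float, price_per_kwh: float) -> float:
    """Years until the energy savings cover the extra up-front cost."""
    yearly_savings = annual_kwh(watts_saved) * price_per_kwh
    return extra_cost / yearly_savings


# Hypothetical inputs: 41 W saved, an assumed $0.15/kWh tariff, and an
# assumed $1000 premium over simply buying new spinning drives.
print(round(annual_kwh(41), 2))                 # 359.16
print(round(payback_years(1000, 41, 0.15), 1))  # 18.6
```

Plug in your own tariff and the actual price difference versus new hard drives to see where the break-even lands for you.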

STORAGE CAPACITY:

The first thing I feel everyone should do is determine what capacity they need. You may have seen me post something like this: "Choose the capacity you desire and need for 3 to 5 years (whatever the warranty period is) and then double it, because anyone fairly new to a NAS will store a lot more than they expect to. Spend the money up front. Hard drives typically last 5 years or longer these days, but that doesn't mean they won't fail right after the warranty period, or even before." Since I've been running a NAS for quite a long time, I already know my requirement: ~6TB minimum. That is the storage capacity I need for my NAS. I'm also virtualizing with ESXi (free, as always), so I need a boot drive and enough space for my datastore. As of this writing, I have a 4TB NVMe as my boot drive and datastore1. That is a lot of space, but I also have a second 4TB NVMe as datastore2. That is LOTS of VM space, currently way more than I need.

I have six 4TB NVMe drives: four specifically for the TrueNAS VM and two for ESXi. I am contemplating reallocating the datastore2 NVMe to TrueNAS storage (five 4TB NVMe drives). In other words, my final configuration is not decided, but it will be by the end of December. I could end up with a RAIDZ1 with ~14TB of storage if I wanted to, but I'm not really fond of RAIDZ1; I prefer RAIDZ2. That is something I need to weigh now that I'm no longer using spinning rust.
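A rough way to compare the layouts discussed above is to count the data drives and convert vendor terabytes (10^12 bytes) into the tebibytes (2^40 bytes) that ZFS reports. This sketch ignores ZFS slop space, metadata, and RAIDZ padding, so it lands somewhat above the approximate 10.1TB and 6.67TB figures quoted for four drives; treat it as an upper bound, not what TrueNAS will actually show. The function name and layout labels are hypothetical, chosen for illustration.

```python
TB = 10**12   # drive vendors sell decimal terabytes
TIB = 2**40   # ZFS and most OS tools report binary tebibytes

PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def estimate_usable_tib(drives: int, drive_tb: float, layout: str) -> float:
    """Data-drive capacity in TiB, before slop space, metadata and padding."""
    if layout == "mirror":
        data_drives = drives // 2  # striped two-way mirrors
    elif layout in PARITY:
        data_drives = drives - PARITY[layout]
    else:
        raise ValueError(f"unknown layout: {layout}")
    if data_drives < 1:
        raise ValueError("not enough drives for this layout")
    return data_drives * drive_tb * TB / TIB


for drives, layout in [(4, "raidz1"), (4, "raidz2"), (4, "mirror"),
                       (5, "raidz1"), (5, "raidz2")]:
    print(drives, layout, round(estimate_usable_tib(drives, 4, layout), 2))
# 4 raidz1 10.91
# 4 raidz2 7.28
# 4 mirror 7.28
# 5 raidz1 14.55
# 5 raidz2 10.91
```

Even the four-drive RAIDZ2 layout clears a ~6TB (about 5.46 TiB) minimum, and moving to five drives buys either RAIDZ1 capacity or RAIDZ2 redundancy at roughly the four-drive RAIDZ1 size.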

Your storage is the one main consumable item in the system. It will wear out over time, and you MUST keep that in mind. Every other part, short of a fan, should last 10+ years, typically well over 10 years. However, technology will advance, and in 15 years you may want to replace your power-hungry NAS with a newer one. My NAS consumes 41 watts at idle right now running ESXi. In 10 years the hardware might sip 5 watts for a functionally equivalent setup.

From this point forward, always buy quality parts. If you buy cheap, your system may be unstable or fail sooner. Also know that infant mortality is real: even if you buy top-of-the-line hardware, a certain percentage will fail early. That is life.

RAM CAPACITY and TYPE:

The second thing to decide is how much RAM is required. For TrueNAS alone I require 16GB, but others may need more if they run quite a few add-on applications or work in an office environment. For home use, 16GB has never failed me. But again, I'm using ESXi, so I want 64GB of ECC RAM with the option to double it, even though I doubt I will. That was the easiest decision I had to make, as it required little research. Speaking of research, the RAM you select must be compatible with your motherboard. I'll get into that more when we discuss the motherboard.

CPU SELECTION:

For this build, PCIe lanes became a significant factor in parts selection. I needed a CPU that provided a minimum of 20 PCIe lanes (more is better), supported ECC RAM, was faster than my previous CPU, and was cost effective. The brand was initially a factor for me but ultimately was not. The AMD Ryzen 5 7600X 6-core 4.7GHz (5.3GHz boost) 105W desktop processor met my requirements. This CPU provides 28 PCIe lanes, 24 of which are usable (the other 4 link to the chipset), supports 128GB of ECC RAM (DDR5), and has built-in graphics (nothing fancy and not really needed for this server, but nice to have if I want it later). I honestly would have preferred an Intel CPU (personal preference), but I've owned a few AMD CPUs and was never disappointed. I've owned Cyrix CPUs as well, another competitor to Intel. Back to the story... The only downside to the AMD CPU is being able to "prove" that ECC is really working. As I understand it, this depends on the CPU, the RAM, and the motherboard electronics, whereas with Intel it's just the CPU and RAM. This is a very minor risk to me, and I have "faith" that ECC is working correctly.

MOTHERBOARD:

The motherboard is a critical part; research it as well. Thanks to @Arwen, I was made to think about several factors, the most significant being PCIe lanes. PCIe lanes carry data between your CPU and the devices on the motherboard and in the PCIe card slots. Just because a motherboard has two x16 slots does not mean there are 32 PCIe lanes in operation. That is just one example; you MUST download the User Guide and read it very carefully. Most of the time the answer is in the block diagram/interface layout, not in the text of the document. If you cannot clearly identify these very important features, keep investigating until you can, or accept that the motherboard you are looking at may not be the one you need. Or roll the dice and take a chance. My requirements for the motherboard were: one x16 slot that can be bifurcated to x4x4x4x4 for use with an NVMe PCIe adapter, one true x4 PCIe slot (any extra slots would be nice to have), ECC support for at least 64GB of RAM (128GB would be nice), IPMI, a 1Gbit NIC, ITX or uATX form factor, USB 2.0 and 3.1/3.2 connectivity, and the ability to reuse the DDR4 ECC RAM from my current server. The ASRock Rack B650D4U was the one I selected. It took over 2 weeks of research, a few hours a day. Because I was using ESXi, I also examined the diagrams to see which controllers I could possibly pass through to a VM. This ASRock motherboard hit every one of my minimum requirements except the DDR4 ECC RAM requirement. DDR5 appears to be the current RAM choice, so I had to purchase new RAM. No money saved here.

Motherboard misses (my mistake for not looking for these): an internal USB 3.2 (USB-C) header and built-in sound (most server boards do not seem to have built-in sound). Neither is critical, but I wish I had them. I may add a USB-C adapter to support my case's front-panel USB-C port.
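The lane requirement above can be checked with a simple budget. This sketch only counts CPU lanes: it assumes every requested slot is wired to the CPU and ignores chipset-attached devices, which is exactly what the block diagram in the User Guide has to confirm. `LaneRequest` and `check_lane_budget` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class LaneRequest:
    name: str
    lanes: int


def check_lane_budget(requests: list[LaneRequest], usable_lanes: int) -> int:
    """Return the spare lanes, or raise if the requests exceed the CPU's usable lanes."""
    needed = sum(r.lanes for r in requests)
    if needed > usable_lanes:
        raise ValueError(f"need {needed} lanes but only {usable_lanes} are usable")
    return usable_lanes - needed


# Requirements from above: an x16 slot bifurcated x4x4x4x4 for the quad
# NVMe adapter, plus one true x4 slot.
requests = [LaneRequest(f"NVMe {i} (bifurcated x16 slot)", 4) for i in range(1, 5)]
requests.append(LaneRequest("true x4 slot", 4))

# 24 usable lanes on the Ryzen 5 7600X, per the CPU section.
print(check_lane_budget(requests, usable_lanes=24))  # 4
```

On paper that leaves four spare CPU lanes; whether they are reachable, and which controllers can be passed through to a VM, depends on how the board routes them.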
