visamp

Dabbler

- Joined: Aug 24, 2023
- Messages: 11

Hello,

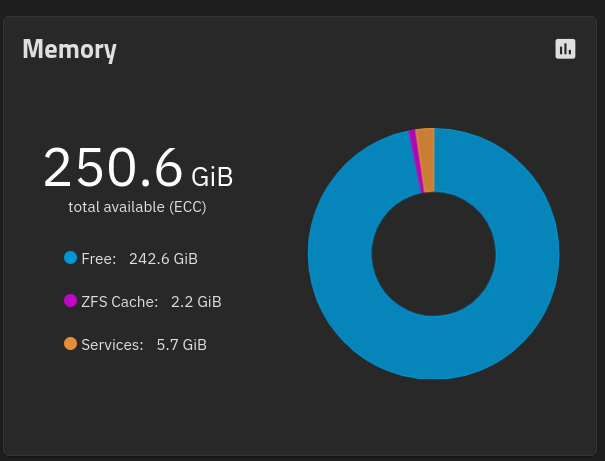

I am running TrueNAS-SCALE-22.12.3.2 on a Dell 7920 Workstation with an Intel(R) Xeon(R) Silver 4114 CPU @ 2.20GHz and 250 GB of Samsung ECC memory (2666 MHz).

I followed the Lawrence Systems tutorial "How To Get the Most From Your TrueNAS Scale: Arc Memory Cache Tuning". When I did this using the shell in TrueNAS and set the max cache to 185 (which left plenty of memory for the rest of the system apps, e.g. Nextcloud, Collabora, and Cloudflare Tunnel), the dashboard showed that the ZFS cache had dropped to nearly zero.

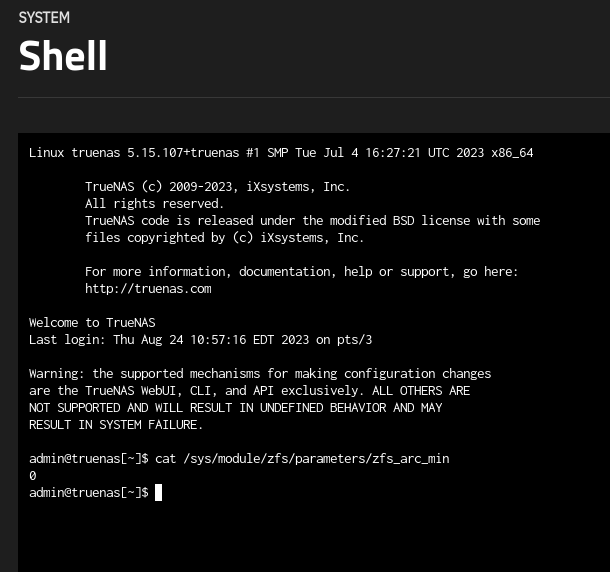
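For reference, `zfs_arc_max` is expressed in bytes, and `0` means "use the built-in default". The sketch below assumes the 185 above was meant as GiB (the post does not state the unit) and shows what that limit looks like in bytes. The helper names `gib_to_bytes` and `describe_arc_max` are hypothetical and exist only for this illustration.

```python
GIB = 1024 ** 3  # bytes in one GiB


def gib_to_bytes(gib: int) -> int:
    """Convert a size in GiB to the byte count zfs_arc_max expects."""
    return gib * GIB


def describe_arc_max(raw: str) -> str:
    """Interpret the text read from the zfs_arc_max parameter file."""
    value = int(raw.strip())
    if value == 0:
        return "0 (use the built-in default)"
    return f"{value} bytes = {value / GIB:.2f} GiB"


if __name__ == "__main__":
    # Assumption: the intended limit was 185 GiB.
    print(gib_to_bytes(185))
    # A bare "185" would be read as 185 bytes, not 185 GiB.
    print(describe_arc_max("185"))
```

If the parameter file held a bare `185` rather than the byte count, that could be one possible explanation for a very small cache, but this is only a guess from the description.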

So I undid the setting in /sys/module/zfs/parameters/zfs_arc_max by setting it back to 0, turned off the init script, and rebooted twice, but the ZFS cache still remains at nearly zero. I have opened several video files to see if I could get it to rise, but nothing changed. Here is what you can see when I cat the zfs_arc_min:

You can see here that on the 20th, when I followed the tutorial to tune the ARC caching, the in-use memory flatlined.

I'm not sure how to troubleshoot this further. I do not notice any slowness in the system, but I am using an NVMe pool as my primary pool. Any ideas whether this is a bug, or something that I can troubleshoot further and fix myself?
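One way to cross-check the dashboard is to read the ARC counters the kernel module exposes directly. The following is a minimal sketch, assuming the usual OpenZFS-on-Linux kstat file at `/proc/spl/kstat/zfs/arcstats` and its `name type data` line layout. The function names `parse_arcstats` and `summarize` are hypothetical helpers, not part of TrueNAS.

```python
from pathlib import Path

ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")  # assumed standard location
GIB = 1024 ** 3


def parse_arcstats(text: str) -> dict:
    """Parse 'name type data' rows into {name: int}, skipping header lines."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three fields and a numeric last field.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def summarize(stats: dict) -> dict:
    """Return current ARC size, target, and min/max limits in GiB."""
    keys = ("size", "c", "c_min", "c_max")
    return {k: round(stats[k] / GIB, 2) for k in keys if k in stats}


if __name__ == "__main__":
    if ARCSTATS.exists():
        for name, gib in summarize(parse_arcstats(ARCSTATS.read_text())).items():
            print(f"{name}: {gib} GiB")
    else:
        print(f"{ARCSTATS} not found; is the ZFS module loaded?")
```

Comparing `size` against `c_max` would show whether the ARC is actually near zero or whether only the dashboard graph is off.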
