Hi All,

Is it recommended, or even required, to disable the on-disk write cache? I'm also seeing a 10-13% difference in usage between vdevs, which I think means the vdev with more data on it will stall the scrub and slow it down. This is my lab: the data is 100 GB files of random data created with dd, which I then copy to fill the pool to 79%.
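To put a number on the usage difference between vdevs, here is a minimal sketch that computes each vdev's fill percentage and the max-minus-min spread in percentage points. It assumes you have already collected allocated and total bytes per vdev (for example by reading them off `zpool list -v`); the vdev names and byte counts below are made-up example values, not measurements from this pool.

```python
def capacity_spread(vdevs):
    """Return (per-vdev fill %, max - min spread in percentage points).

    vdevs: dict mapping a vdev name to (allocated_bytes, size_bytes).
    """
    fill = {name: 100.0 * alloc / size for name, (alloc, size) in vdevs.items()}
    spread = max(fill.values()) - min(fill.values())
    return fill, spread


# Hypothetical example values only; not taken from the pool in this post.
TB = 10**12
example = {
    "raidz2-0": (88 * TB, 100 * TB),
    "raidz2-1": (78 * TB, 100 * TB),
    "raidz2-2": (75 * TB, 100 * TB),
}

fill, spread = capacity_spread(example)
for name, pct in fill.items():
    print(f"{name}: {pct:.1f}% full")
print(f"spread: {spread:.1f} percentage points")
```

With these example numbers the spread comes out to 13 percentage points, the upper end of the range described above.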

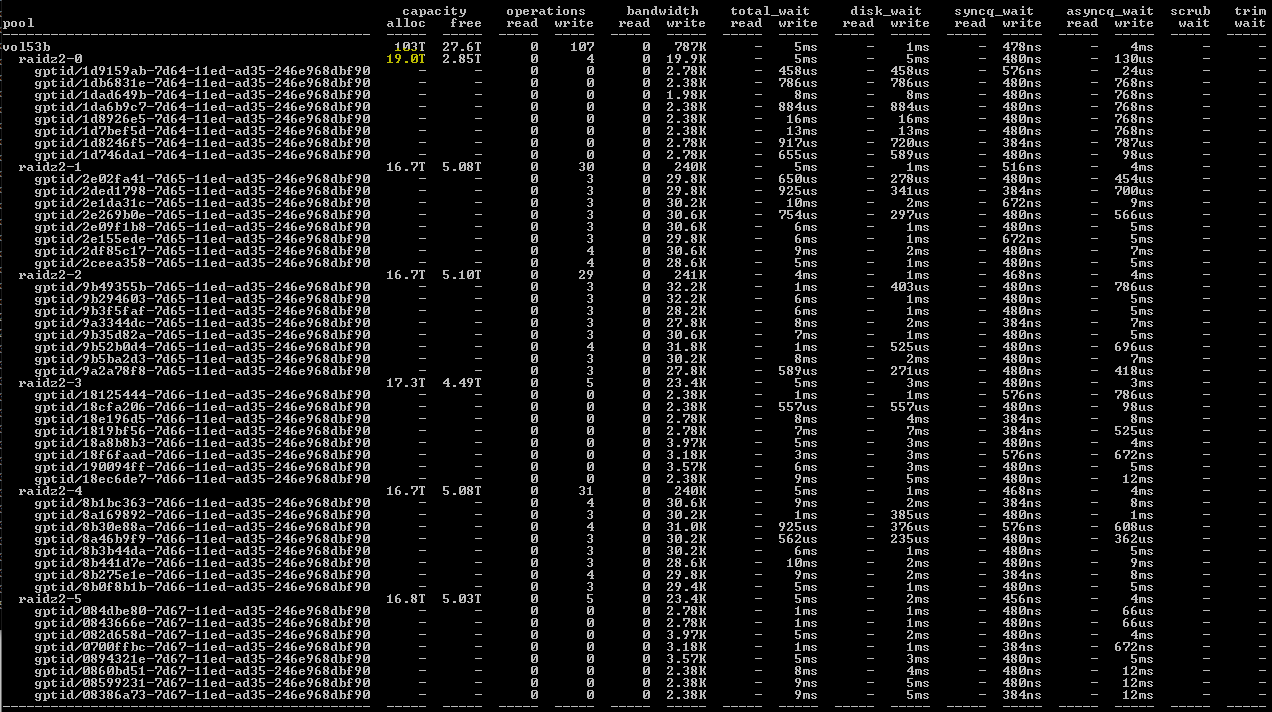

I noticed this with 24 striped mirrors, and when I built 6 RAIDZ2 vdevs I could clearly see one vdev doing all the work during a scrub. Scrubbing this pool at 79% full takes about 6 hours, and I can see all the I/O going to the first vdev. I see the same thing with striped mirrors.
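One way to quantify "one vdev doing all the work" is to compare each vdev's share of total read bandwidth during the scrub against an even split. The sketch below assumes per-vdev read bandwidth figures have been collected by hand, for example from `zpool iostat -v` while the scrub runs; the six vdev names and MB/s values are hypothetical, chosen only to illustrate the calculation.

```python
def io_share(read_bw):
    """Return each vdev's fraction of the total read bandwidth."""
    total = sum(read_bw.values())
    if total == 0:
        # No reads observed: report zero share for every vdev.
        return {name: 0.0 for name in read_bw}
    return {name: bw / total for name, bw in read_bw.items()}


# Hypothetical per-vdev read bandwidth in MB/s; not measured on this pool.
sample = {
    "raidz2-0": 500,
    "raidz2-1": 100,
    "raidz2-2": 100,
    "raidz2-3": 100,
    "raidz2-4": 100,
    "raidz2-5": 100,
}

shares = io_share(sample)
even = 1.0 / len(sample)  # share each vdev would get with perfectly balanced I/O
busiest = max(shares, key=shares.get)
for name, frac in shares.items():
    print(f"{name}: {frac:.1%} of reads (even split would be {even:.1%})")
print(f"busiest vdev: {busiest}")
```

A vdev whose share sits well above the even split for most of the scrub is the one the rest of the pool is waiting on.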