Hi all,

First, let me thank everyone here and at iXsystems for a) fantastic software and b) a fantastic community. What I've learned here over the years has been extremely useful, as is the software provided.

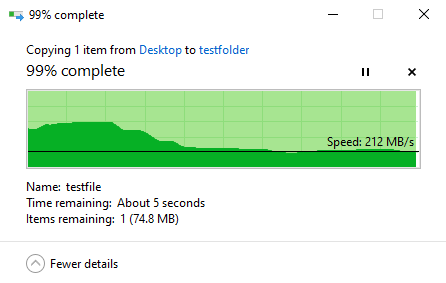

Now to my question: I recently purchased 2x 32GB 3D XPoint M.2 drives (Optane) for use as log devices. I wanted to speed up my (exclusively sync) writes to the pool, and they have achieved exactly this. When adding the log vdev in the GUI I get the option to either stripe or mirror the two Optanes, so I did some testing. With either a single Optane or the two Optanes mirrored as the log, I get ~300MiB/s write speed, indefinitely, which is expected as that's roughly the write speed of one of the Optanes. Just to see what would happen, I removed the log and re-added the two Optanes as a stripe. For the first ~3GiB of the 8.5GiB test file, write speed (remember: sync!) jumps up to ~600MiB/s, which is wow: I only have 2 striped mirrors (WD40EFRX) in the pool, so that tripled the write performance. However, after the first 3.5GiB, write speed drops to the bare pool performance of just over 200MiB/s.

Could this be a Windows caching thing that just doesn't kick in with the slower mirrored SLOG setup because it can keep up (the file comes from an SSD that should be able to keep up with the server)? Also, the test file should have been cached in memory (128GB RAM on the client).
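To put rough numbers on this, here is a back-of-the-envelope sketch in Python. It assumes a simple model that I have not verified: something buffers incoming writes at ~600MiB/s while the pool drains at ~200MiB/s, and the speed drops once that buffer is full. The function names and the model itself are purely illustrative; the figures are the ones from my test above.

```python
MIB_PER_GIB = 1024


def implied_backlog_gib(written_gib, fast_mib_s, drain_mib_s):
    """Data left undrained after `written_gib` arrived at the fast rate.

    Assumption: a single buffer fills at `fast_mib_s` and empties at
    `drain_mib_s` at the same time (hypothetical model, not measured).
    """
    seconds = written_gib * MIB_PER_GIB / fast_mib_s
    backlog_mib = (fast_mib_s - drain_mib_s) * seconds
    return backlog_mib / MIB_PER_GIB


def average_mib_s(file_gib, fast_gib, fast_mib_s, slow_mib_s):
    """Average throughput for a file written in a fast phase, then a slow one."""
    fast_seconds = fast_gib * MIB_PER_GIB / fast_mib_s
    slow_seconds = (file_gib - fast_gib) * MIB_PER_GIB / slow_mib_s
    return file_gib * MIB_PER_GIB / (fast_seconds + slow_seconds)


if __name__ == "__main__":
    # I saw the drop somewhere between ~3GiB and 3.5GiB, so try both.
    for cliff_gib in (3.0, 3.5):
        backlog = implied_backlog_gib(cliff_gib, 600, 200)
        average = average_mib_s(8.5, cliff_gib, 600, 200)
        print(f"drop at {cliff_gib} GiB: backlog ~{backlog:.2f} GiB, "
              f"average ~{average:.0f} MiB/s over the 8.5 GiB file")
```

With the drop at ~3GiB this works out to a backlog of about 2GiB. Whether that corresponds to an actual buffer on the client or on the server is exactly what I'm unsure about.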

I'd be interested to hear opinions, and any discussion on mirrored vs striped SLOG, but keep in mind this is a home lab server, so even though I don't want to lose any data, a temporary loss of write performance due to a failed SLOG stripe is no problem.

TIA

Kai.
