Ebedorian

Dabbler

- Joined: May 5, 2023
- Messages: 10

So, I've been on here since May 2023 and have had varying success with several iterations of a TrueNAS SCALE build that I've been working on. I've had issues with fan speed control (see forum post here) and drive errors (posted here), but I haven't gotten much help, so I've mostly been doing a lot of reading.

I could use some guidance on this. This build is intended to provide archive storage for large files and run a few virtual machines. Most of the gear I used is linked in case anyone is interested in using any of it. If most of it is garbage, I'd still like to know, as this has been a learning process for me.

My current build includes the following:

Supermicro X11SSL-F

Intel Xeon E3-1270 v5 @ 3.6 GHz

SST-NT07-115X-USA (CPU Cooler)

64GB Micron Crucial DDR4 (4X16GB) 2133MHz UDIMM RAM

Noctua NF-A6x25 PWM x 2

Rosewill RSV-Z3100U 3U Server Chassis

Silicon Power 128GB SSD 3D NAND A55 SLC x 2 (Boot Pool)

SAMSUNG 845DC 800GB PRO MLC x 4 (Storage Pool)

WL4000GSA6472E (4 x OOS4000G and 1 x ST4000NM0033) (Storage Pool)

MCX311A-XCAT 10GbE (NIC)

9200-8I IT (HBA)

DUAL NVMe to PCIe

Intel OPTANE SSD P1600X Series 118GB x 2 (SLOG)

WD 1TB WD Red SA500 NAS 3D NAND Internal SSD (Cache)

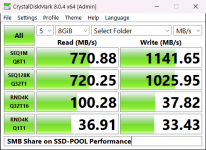

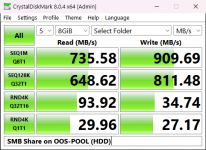

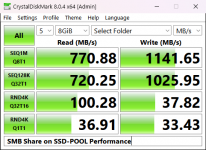

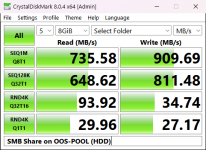

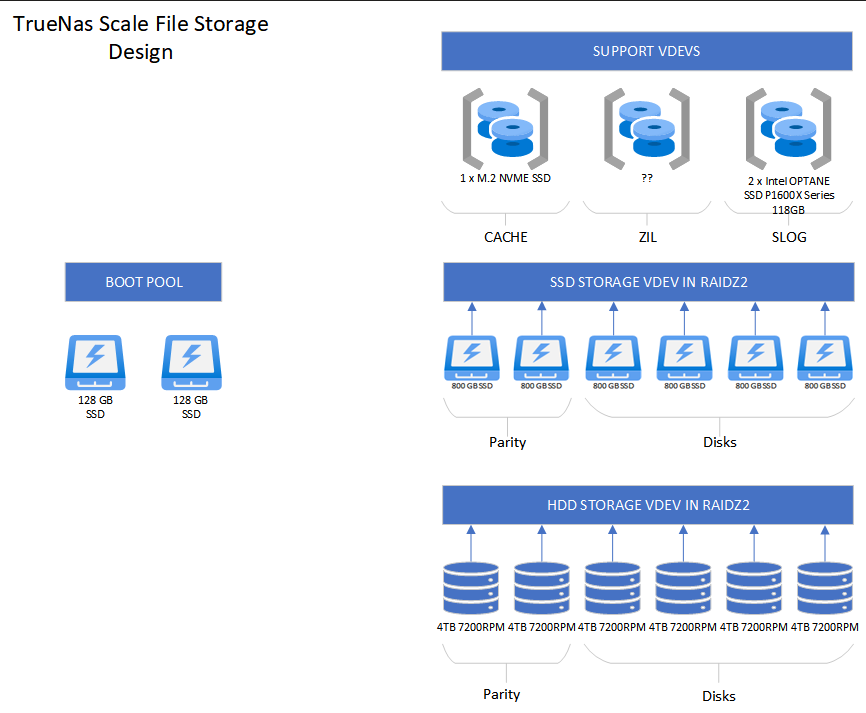

Above is my current design. The support VDEVs are intended for use with the spinning disks, not the SSDs. I'm not even sure the SLOG (the separate ZIL device) is necessary. Running CrystalDiskMark against an iSCSI drive gave putrid results, which is why I added the support VDEVs. This is where I could really use some advice.
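As a rough illustration of the layout described above (spinning disks as the data VDEV, the two Optanes as the SLOG, and the WD Red SSD as cache), here is a minimal Python sketch that only builds and prints an equivalent `zpool create` command. The pool name, the RAIDZ2 layout, the mirrored log, and the device names are all placeholders and assumptions, not the actual configuration. On TrueNAS SCALE the pool would normally be built through the web UI rather than by hand.

```python
import shlex


def zpool_create_command(pool, data_layout, data_disks, slog_disks=(), cache_disks=()):
    """Build (but never run) a `zpool create` command as a list of arguments."""
    cmd = ["zpool", "create", pool, data_layout, *data_disks]
    if slog_disks:
        # Support VDEV 1: separate log device(s), mirrored when more than one is given.
        cmd.append("log")
        if len(slog_disks) > 1:
            cmd.append("mirror")
        cmd.extend(slog_disks)
    if cache_disks:
        # Support VDEV 2: L2ARC cache device(s); these are never mirrored.
        cmd.append("cache")
        cmd.extend(cache_disks)
    return cmd


# Hypothetical names: "archive", "hdd1".."hdd5", "optane1"/"optane2", "wdred1".
# RAIDZ2 is an assumed layout for the five 4TB spinning disks.
hdd_pool = zpool_create_command(
    pool="archive",
    data_layout="raidz2",
    data_disks=[f"hdd{i}" for i in range(1, 6)],
    slog_disks=["optane1", "optane2"],
    cache_disks=["wdred1"],
)
print(shlex.join(hdd_pool))
```

Nothing here touches a disk. It is only meant to make the intended split between data, log, and cache VDEVs easier to comment on.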

Thanks again for any assistance given.
