Warning: Long Post xD

If you don't care about the specs and my other thoughts, I'd especially appreciate help with the HDD section.

CPU: AMD R7 5800X3D

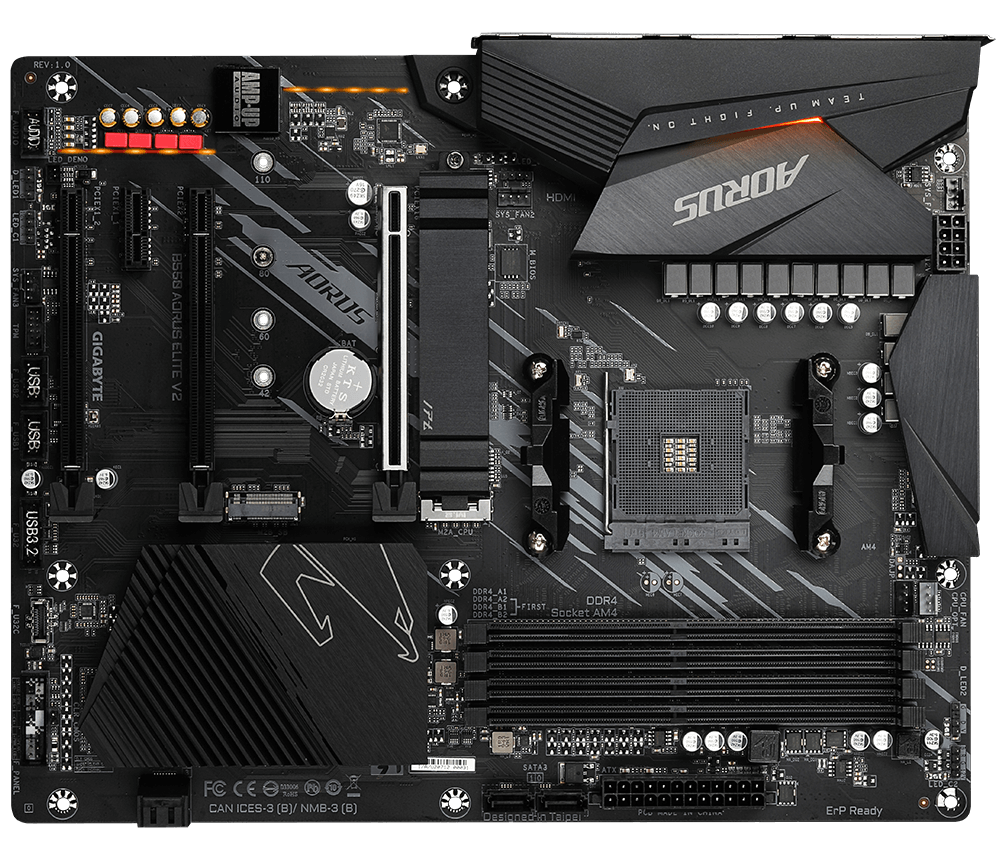

MoBo: Gigabyte B550 Aorus Elite V2

RAM: 2x16GB UDIMM ECC - Samsung 3200MHz

BootDrive: 2x 240GB Kingston A400

HBA: AOC-S2308L-L8i - In IT mode.

HDD Old: 3x WD Red 8TB (effectively Red Plus; bought before WD split off the Plus series) + 1x WD Red Pro 8TB

HDD New: 4x WD Red Plus 8TB

Pool: 8 drives in RAIDZ2

SLOG: 2x Intel Optane 16GB modules

OS: TrueNAS Scale
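As a rough sanity check on the pool line above: 8x 8TB in RAIDZ2 leaves six drives' worth of data space. The sketch below just does that arithmetic. It assumes decimal-TB drive sizes (as marketed) and ignores ZFS metadata, padding and reserved space, so the real usable figure will be somewhat lower.

```python
# Rough RAIDZ2 data-capacity estimate for 8x 8TB drives.
# Assumption: ignores ZFS metadata, padding and slop-space overhead,
# so actual usable space will come out somewhat lower.

DRIVES = 8
PARITY = 2    # RAIDZ2 = two drives' worth of parity
DRIVE_TB = 8  # decimal terabytes (10**12 bytes), as marketed


def raidz_capacity(drives: int, parity: int, drive_tb: float) -> tuple[float, float]:
    """Return (data capacity in TB, same capacity in TiB)."""
    data_tb = (drives - parity) * drive_tb
    data_tib = data_tb * 10**12 / 2**40  # convert decimal TB to binary TiB
    return data_tb, data_tib


if __name__ == "__main__":
    tb, tib = raidz_capacity(DRIVES, PARITY, DRIVE_TB)
    print(f"{tb:.0f} TB of data space, about {tib:.1f} TiB")
```

With these numbers it works out to 48 TB, or roughly 43.7 TiB as ZFS tools will report it, before overhead.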

A few notes for the specs:

CPU: I get that it's kinda overkill for a "simple" HDD array. I plan to run a few more Docker containers, and I'll try Plex. I also want to offload my x264 and x265 encoding to the server itself (as I like ridiculous mega-placebo params xD).

MoBo: From my testing, the board seems to work correctly with ECC. It's not perfect, since the consumer platform lacks the features needed to properly verify ECC (no error injection, and no proper reporting of corrected errors), but every hardware and software indicator I've checked says ECC is OK.
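One place to look for the software side of ECC on TrueNAS Scale (which is Linux-based) is the kernel's EDAC counters in sysfs. The sketch below reads `ce_count` (corrected errors) and `ue_count` (uncorrected errors) for each memory controller under `/sys/devices/system/edac/mc`. It assumes an EDAC driver (on Ryzen, `amd64_edac`) is loaded; if no controller is registered, it returns an empty dict, which may mean ECC reporting isn't active. The function name `read_edac_counts` is hypothetical.

```python
from pathlib import Path

# Standard Linux EDAC sysfs location for memory controllers (mc0, mc1, ...).
EDAC_MC_ROOT = Path("/sys/devices/system/edac/mc")


def read_edac_counts(root: Path = EDAC_MC_ROOT) -> dict[str, dict[str, int]]:
    """Return {controller: {"ce_count": n, "ue_count": m}} for each mcN dir.

    An empty result means no EDAC driver registered a memory controller,
    so the kernel is not reporting ECC events at all.
    """
    counts: dict[str, dict[str, int]] = {}
    if not root.is_dir():
        return counts
    for mc in sorted(root.glob("mc[0-9]*")):
        entry: dict[str, int] = {}
        for name in ("ce_count", "ue_count"):
            f = mc / name
            if f.is_file():
                entry[name] = int(f.read_text().strip())
        counts[mc.name] = entry
    return counts


if __name__ == "__main__":
    result = read_edac_counts()
    if not result:
        print("No EDAC memory controllers found")
    for mc, entry in result.items():
        print(mc, entry)
```

A registered controller with a stable, low `ce_count` is a reasonable sign ECC is working; it does not replace real error injection testing.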

RAM: 32GB for now, with the option to upgrade to 64GB if I see the need.

BootDrive: 120GB would be enough, but the 240GB version cost literally $2 more in the shop, so I just went with that.

HBA: AFAIK it should be good :P It will go into the x16 slot at first but might be moved to the x2 slot later. I know the bandwidth there isn't great, but even PCIe 3.0 x1 has more bandwidth than the 2.5GbE link can carry, so the network would still be the bottleneck. I think it will be fine.
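The slot-versus-network argument can be checked with a little arithmetic. This sketch assumes PCIe 3.0 signalling (8 GT/s per lane with 128b/130b encoding) and compares raw line rates only, ignoring PCIe packet overhead and Ethernet/TCP framing, so real-world numbers will be somewhat lower on both sides.

```python
# PCIe 3.0: 8 GT/s per lane, 128b/130b line encoding.
PCIE3_GT_PER_LANE = 8e9
PCIE3_ENCODING = 128 / 130


def pcie3_mb_per_s(lanes: int) -> float:
    """Approximate one-direction PCIe 3.0 bandwidth in MB/s (10**6 bytes/s),
    before protocol (TLP/DLLP) overhead."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING / 8 / 1e6


def ethernet_mb_per_s(gbit: float) -> float:
    """Raw line rate of an Ethernet link in MB/s, before framing/TCP overhead."""
    return gbit * 1e9 / 8 / 1e6


if __name__ == "__main__":
    nic = ethernet_mb_per_s(2.5)
    for lanes in (1, 2):
        link = pcie3_mb_per_s(lanes)
        bottleneck = "network" if nic < link else "HBA slot"
        print(f"PCIe 3.0 x{lanes}: ~{link:.0f} MB/s vs 2.5GbE ~{nic:.0f} MB/s "
              f"-> {bottleneck} is the limit")
```

Under these assumptions a single PCIe 3.0 lane gives roughly 985 MB/s against about 312 MB/s for 2.5GbE, so for traffic coming in over the LAN the network, not the slot, sets the ceiling.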

SLOG: I want to use "Sync: Always" on a few datasets, and I might host a few VMs, in which case it will help. The 170-180 MB/s of writes these modules give won't be enough to saturate the board's 2.5GbE, but I'm not worried about it: not all traffic will go through the SLOG, and even if it did, that's still roughly 1.4-1.5 Gb/s, which I'd be more than happy with. I was thinking about getting a single P4800X/P4801X (two would be too much $$$ for me), something like the 100GB model, for the SLOG and maybe even a small L2ARC later, though that might not even be necessary if I ever upgrade the RAM to 64GB.
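To put the SLOG write rate in network terms: the 170-180 MB/s figure is the one quoted above for the Optane modules, and the conversion just multiplies by 8 bits per byte. Keep in mind that a SLOG only sees synchronous writes (such as datasets set to Sync: Always, or VM storage); asynchronous writes never touch it.

```python
def mb_s_to_gbit_s(mb_per_s: float) -> float:
    """Convert MB/s (10**6 bytes/s) to Gb/s (10**9 bits/s)."""
    return mb_per_s * 8 / 1000


LINK_GBIT = 2.5  # the board's 2.5GbE port, raw line rate

if __name__ == "__main__":
    for slog_mb in (170, 180):
        gbit = mb_s_to_gbit_s(slog_mb)
        share = gbit / LINK_GBIT
        print(f"{slog_mb} MB/s = {gbit:.2f} Gb/s ({share:.0%} of 2.5GbE)")
```

That lands at about 1.36-1.44 Gb/s, a bit over half of the 2.5GbE line rate.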

HDD: This is a huge problem and I'm not 100% sure what to do about it. I've read up on it a bit, but I'd still like some input.

Right now I have the "HDD Old" set: 3 drives at 5400 RPM (or a bit more; WD is kinda vague about the actual speed on those) and 1 drive at 7200 RPM. I have 2 options:

1. Buy 4 WD Red Plus (5400 RPM) and end up with 7 drives at 5400 RPM and 1 at 7200 RPM.

2. Buy 5 WD Red Plus and have all "same" 5400 RPM drives. However, this means I'll have 1 leftover 8TB drive that I have no use for.

Is option 1 a bad idea? From what I've heard, mixing drives with different RPMs isn't that bad and shouldn't really affect anything: in practice, everything just runs as fast as the 5400 RPM drives, so I don't really lose anything. Option 2 is possible, but it would cost me more and leave me with a leftover drive, which is kinda wasteful imo.
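The "everything runs at 5400 RPM speed" intuition can be written down as a toy model: in a RAIDZ vdev, a full-stripe read or write waits on every member, so the vdev behaves roughly like its slowest drive multiplied by the number of data drives. The per-drive rates below are hypothetical placeholders, not datasheet figures, and the model ignores caching, record size and fragmentation.

```python
# Hypothetical per-drive sequential rates in MB/s (placeholders, not datasheet values).
RED_5400_CLASS = 180.0
RED_PRO_7200 = 230.0


def raidz_seq_estimate(drive_rates: list[float], parity: int) -> float:
    """Very rough RAIDZ sequential-throughput estimate in MB/s.

    Assumes every full-stripe I/O touches all members and waits for the
    slowest one, so the vdev acts like (n - parity) copies of its slowest
    drive. Ignores caching, record size and fragmentation.
    """
    data_drives = len(drive_rates) - parity
    return data_drives * min(drive_rates)


if __name__ == "__main__":
    option1 = [RED_5400_CLASS] * 7 + [RED_PRO_7200]  # 7x 5400 + 1x 7200
    option2 = [RED_5400_CLASS] * 8                   # 8x 5400
    print("Option 1:", raidz_seq_estimate(option1, parity=2), "MB/s")
    print("Option 2:", raidz_seq_estimate(option2, parity=2), "MB/s")
```

Under this model both options produce the same estimate: the single 7200 RPM drive never sets the pace, it just isn't used to its full speed.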

OS: I went with Scale even though I've seen a few videos and posts showing lower performance compared to Core; I don't think that will impact me much. It lets me use Docker, which I'm muuuch more familiar with, and I hope that will help me with the whole setup and everything.

Notes about usage:

About 95% of the system's use will be rather "cold" data: mostly backup/archive data plus some media for Plex (movies, series, and anime). The server might also run a few Docker containers for services like Plex, cloud storage, media conversion (x264/x265), and maybe some more; we'll see. It will mostly be used by 1-2 people, since it's data storage for home use.

This leaves room for expansion: RAM (32 -> 64GB) and a possible SLOG upgrade to a P4800X, which could also double as L2ARC. I don't think L2ARC will be necessary, though, since the usage is mostly archival (no real need for a huge cache) and mostly involves large media files.

I think I've thought the whole setup through well enough for my usage, but I still need some insight on whether option 1 in the HDD section is OK. Do you think I went way off in some aspect of the build, or should it be fine? I wanted to do as many things "right" as possible, which is why I really wanted ECC RAM. I cut corners a little with the HDDs, since they're SATA while the HBA is SAS. Not sure if that's something to be "proud" of, but I figured a proper HBA with SATA drives is better than a plain SATA controller with SATA drives. Maybe I'm super wrong about it tho xD
