SangieWolf · Dabbler · Joined: Jun 13, 2018 · Messages: 21

I tried searching for help but couldn't find anything relevant.

One of my drives was reporting bad sector errors, so I swapped it out and let the pool resilver, which took quite a few hours. Several days later, I noticed the drives were still constantly busy. I realized I wasn't on the most recent update channel, so I upgraded to FreeNAS-11.2-U4, but the drives are still constantly busy.

I even shut down my workstation to rule out client-side activity as the cause, but no luck: the drives stayed busy.

Reading from and writing to the AFP share is also slow.

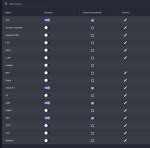

Please see my drive stats below and my hardware stats in my signature.
