Hi there

Since the original thread (DIY all flash/SSD NAS - not going for practicality) is now read-only, I've chosen to start a new one - hope this doesn't violate any community guidelines. If it does, I'm very sorry.

Well.

I've accumulated around 100 SSDs, of which 87 are Samsung drives. I'm not some sort of brand snob, but I figured it's better to 'match' drives.

Decided to brush off the dust and take this project on again, but I've run into some issues in the meantime.

First: some hardware-pr0n (teaser only)

But I'm having some issues and I'm concerned that I might have fried or broken something.

Started testing with one drive on each backplane. Created a pool and did some testing. Everything seemed fine, so I started to fill in the drives with all four backplanes still connected.

At some point I ran into issues and decided to reboot - nothing seemed off.

Then I powered off the system and, after moving some cables around to tidy things up, tried to power it back on.

Nothing. A click and nothing else.

Then I remembered: up until now, I've been using a 450 Watt SFF PSU. Bad decision, I think.

Tried some variations: powering off, connecting the backplanes while the system was still on, using fewer disks. Nothing seemed to work reliably. Tried almost everything except installing a more powerful PSU.
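To put a rough number on why I suspect the PSU, here is a minimal back-of-the-envelope sketch. Only the drive count (four backplanes with 24 slots each) and the 450 W rating come from my setup; the per-drive wattage, the base system load, and the 80 % headroom factor are placeholder assumptions that I have not measured, so swap in datasheet or measured values before trusting the result.

```python
# Rough PSU budget check. All ASSUMED_* values are hypothetical placeholders,
# not measurements from this system.

def estimate_peak_watts(drive_count, watts_per_drive, base_system_watts):
    """Sum the assumed peak draw of all drives plus the rest of the system."""
    return drive_count * watts_per_drive + base_system_watts


def fits_psu(peak_watts, psu_watts, headroom=0.8):
    """True if the estimated peak stays within a fraction of the PSU rating."""
    return peak_watts <= psu_watts * headroom


BACKPLANES = 4                     # from the build described in this post
DRIVES_PER_BACKPLANE = 24          # from the build described in this post
PSU_WATTS = 450                    # the original PSU

ASSUMED_WATTS_PER_DRIVE = 4.0      # assumption: check the SSD datasheet
ASSUMED_BASE_SYSTEM_WATTS = 150.0  # assumption: board, CPU, HBA, expanders, fans

drives = BACKPLANES * DRIVES_PER_BACKPLANE
peak = estimate_peak_watts(drives, ASSUMED_WATTS_PER_DRIVE, ASSUMED_BASE_SYSTEM_WATTS)

print(f"{drives} drives, estimated peak: {peak:.0f} W")
print(f"Fits a {PSU_WATTS} W PSU with headroom: {fits_psu(peak, PSU_WATTS)}")
```

This ignores how the load splits across the individual rails and Molex connectors, which may matter just as much as the total.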

Finally, did just that. But now, I'm having stability issues. Not all disks are detected. If they are, they are not brought into TrueNAS and my pools are corrupted or just not showing up.

Thought I'd fried something, and had almost given up.

With all four backplanes connected to Molex power, but not the HBA (in essence taking the SAS-SAS expander cables out of the equation), the drives started showing up. They were still throwing errors and not reliable at all, though.

So...

My first test was with all four backplanes connected in pairs: one backplane of each pair goes to the HBA, and a SAS-SAS expander cable runs from it to the next backplane down in the cascade (don't know if that made any sense?).

Like so:

HBA

⮑ Backplane #1 ➞ SSD

   ⮑ Backplane #3 ➞ SSD

⮑ Backplane #2 ➞ SSD

   ⮑ Backplane #4 ➞ SSD

If I remove the SAS cable and connect them to the HBA in pairs, like so:

HBA

⮑ Backplane #1 ➞ 24x SSD

⮑ Backplane #2 ➞ 24x SSD

They both seem to work as intended using any combination of HBA -> SAS-port (of which there are three on each expander).

But, as soon as I connect them like this - in essence using any SAS-SAS cable (cascaded)

HBA

⮑ Backplane #1 ➞ 24x SSD

   ⮑ Backplane #2 ➞ 24x SSD

Or

HBA

⮑ Backplane #3 ➞ 24x SSD

   ⮑ Backplane #4 ➞ 24x SSD

Nothing works.

Did I fry something? Are the cables broken? Are the backplanes broken?

Sorry if I've upset any of you with my behaviour.

To be honest, I'm quite ashamed/sorry if I've broken this fine system with my stupid, stupid decisions.

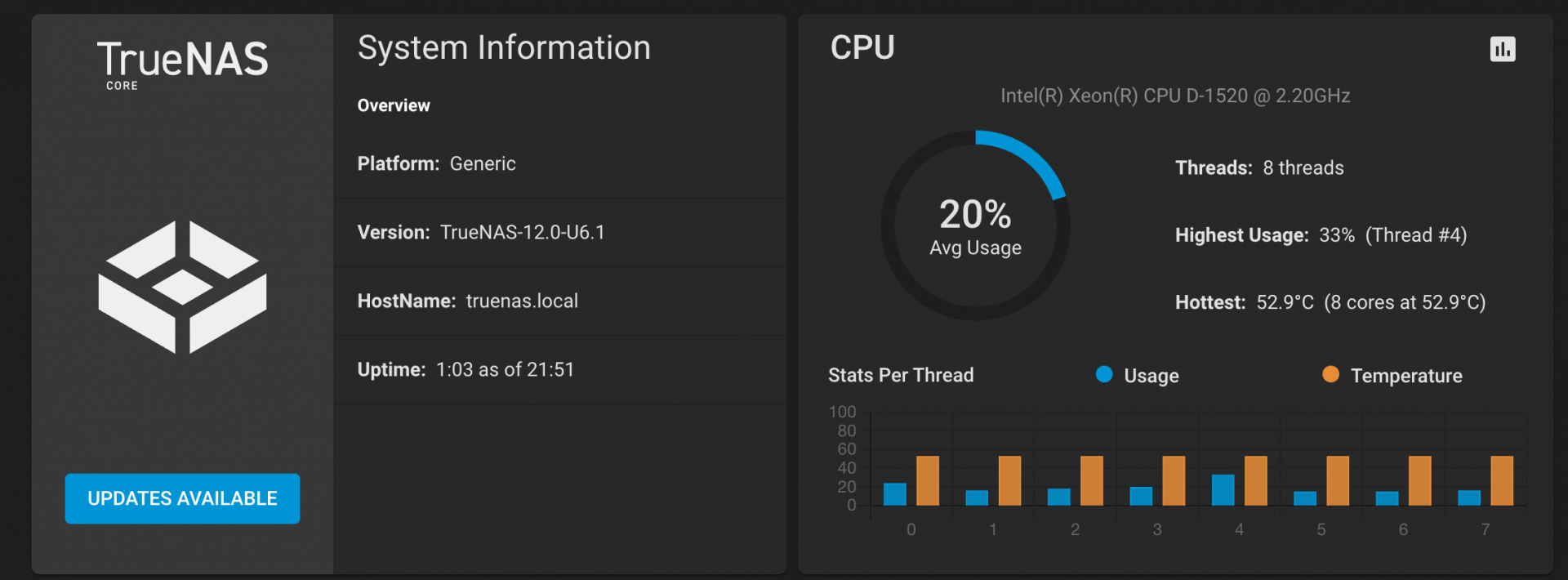

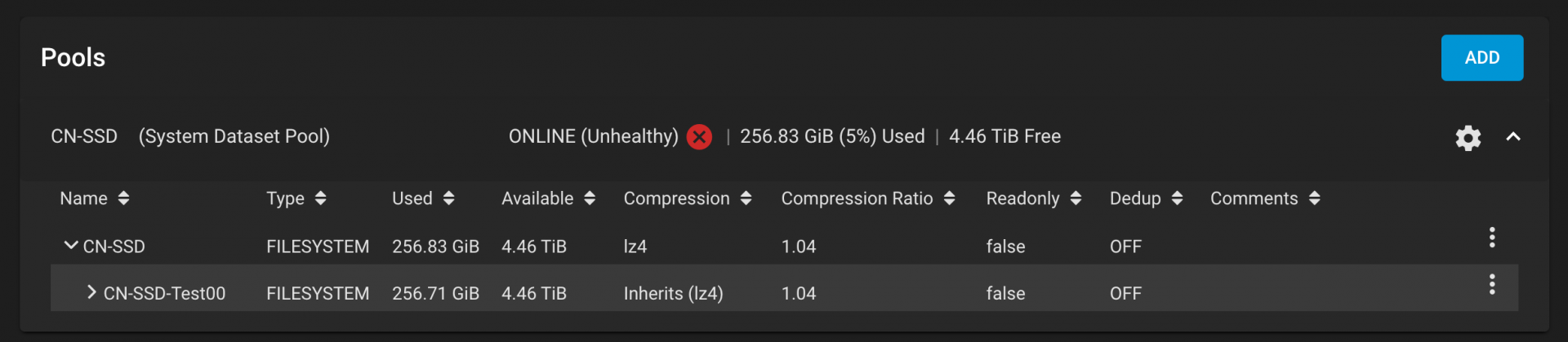

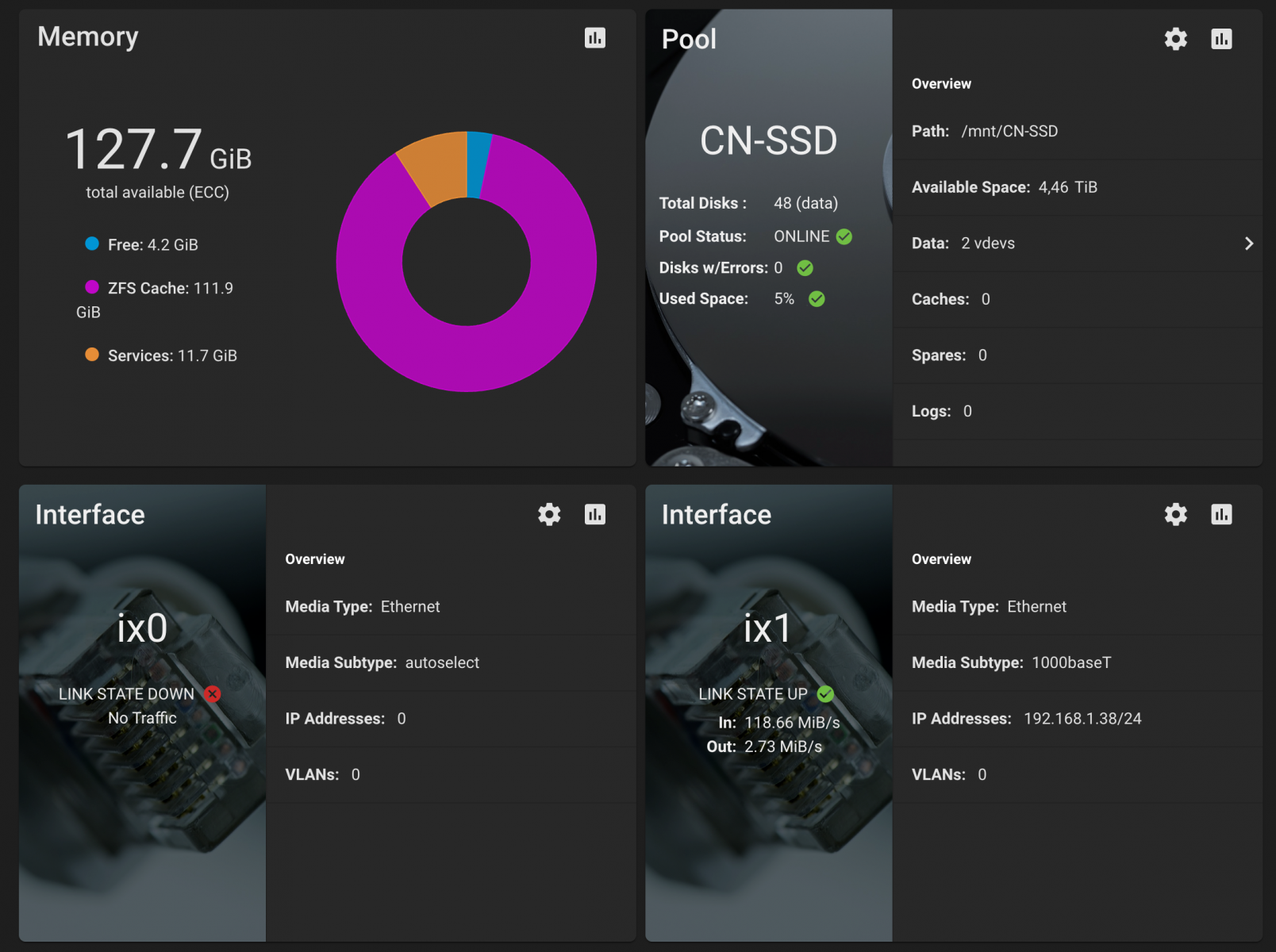

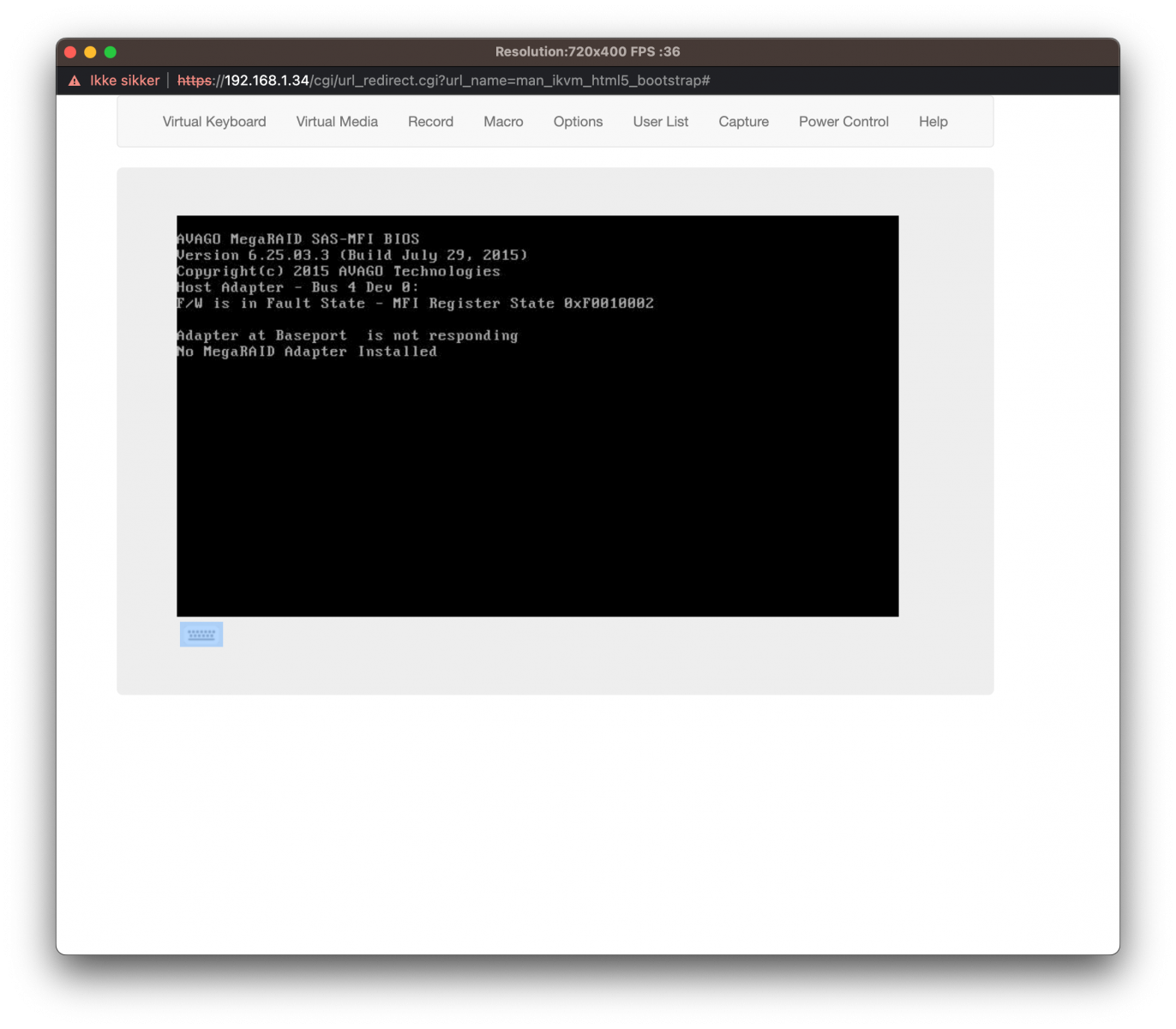

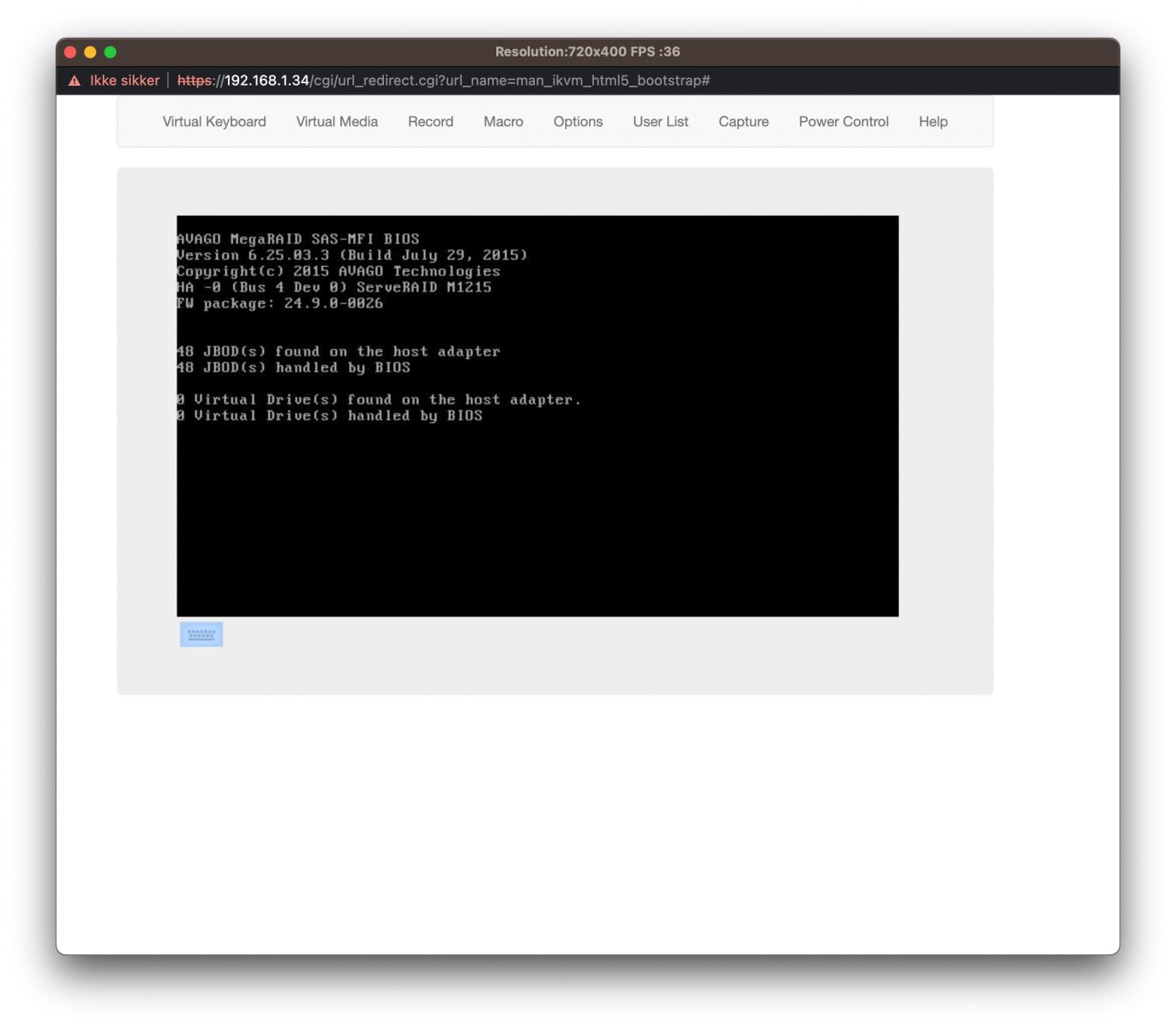

P.S.: I've attached an assortment of screenshots (above).
