Daisuke (Contributor, joined Jun 23, 2011, 1,041 messages)

My goal is to move 20TB of data from

Based on my research, the general rule of

12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB CMR

With Record Size set on

With Record Size set on

Server specs, hyper-threading disabled:

Is there a warmup period after reboot, or do I need to change any other settings to improve the disks performance?

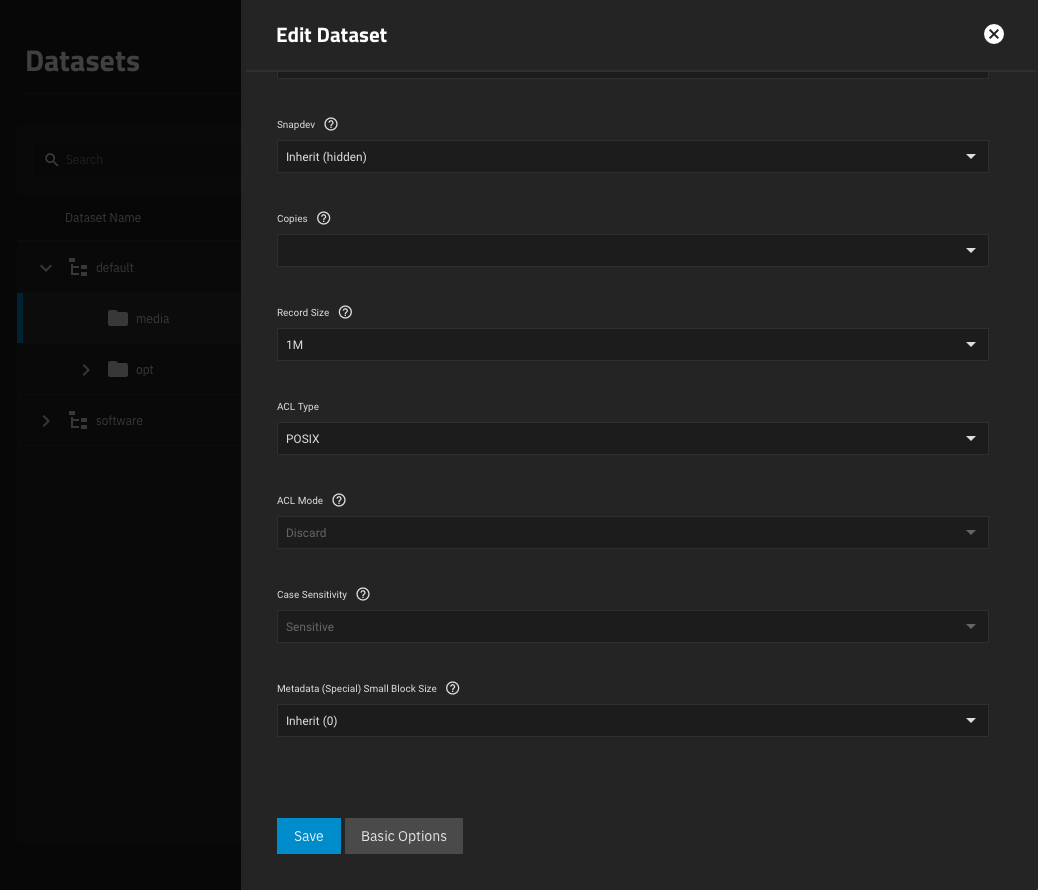

/mnt/default/media dataset to /mnt/default/opt/media dataset. First thing I did is to change the /mnt/default/media dataset Record Size to 1M, from default 128K:Based on my research, the general rule of

recordsize is that it should closely match the typical workload experienced within that dataset. Since my media files average 5MB or more, should have recordsize=1M. This matches the typical I/O seen in that dataset, setting a larger recordsize prevents the files from becoming unduly fragmented, ensuring the fewest IOPS are consumed during either read or write of the data within that dataset.12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB CMR

default pool:Code:

```
# zpool status default
  pool: default
 state: ONLINE
  scan: resilvered 8.15M in 00:00:01 with 0 errors on Sat Dec 17 20:45:45 2022
config:

        NAME                                      STATE     READ WRITE CKSUM
        default                                   ONLINE       0     0     0
          raidz2-0                                ONLINE       0     0     0
            b768e9a0-820f-47cb-95f1-0a205dbe69a2  ONLINE       0     0     0
            11c649c6-15fb-4e4d-bb9e-a6e49d92dbe4  ONLINE       0     0     0
            66f8cece-5550-4032-abdf-2c62f5c193f4  ONLINE       0     0     0
            576baa03-374f-435e-906a-1b897df113dc  ONLINE       0     0     0
            6b6ae667-ae27-46e1-b059-3ba6f5ca4c5c  ONLINE       0     0     0
            eed80073-49af-40d5-842c-5b6b607ce36c  ONLINE       0     0     0
            de70ed0b-3d3b-43c8-a5ec-c536dba8cea7  ONLINE       0     0     0
            e77f0ce2-b5d1-4b5e-af46-237519b4495b  ONLINE       0     0     0
            7aa22586-b4b5-4326-86e5-dd0e14415627  ONLINE       0     0     0
            03dfccd5-8388-43c0-90d6-481b53f73a4e  ONLINE       0     0     0
            0e89244d-6504-411b-82ee-32ace0ed0359  ONLINE       0     0     0
            17700665-232a-48c1-a99e-82bdc5e26c14  ONLINE       0     0     0

errors: No known data errors
```
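The recordsize rule of thumb quoted above can be put in rough numbers. This is a minimal sketch, not a ZFS tool: it assumes "5MB" means 5 MiB and ignores compression, metadata and the single smaller block ZFS uses for files below recordsize. It counts how many full-size records one media file occupies at each setting; fewer records per file means fewer I/O operations to read or write it. The helper name `records_per_file` is hypothetical.

```python
import math

KiB = 1024
MiB = 1024 * KiB


def records_per_file(file_size: int, recordsize: int) -> int:
    """Full-size records needed for a file larger than recordsize.

    Simplified model (assumption): no compression, no metadata, and no
    special handling of files smaller than one record.
    """
    return math.ceil(file_size / recordsize)


# Assumption: the post's "5MB" average media file treated as 5 MiB.
media_file = 5 * MiB

for label, rs in (("128K", 128 * KiB), ("1M", 1 * MiB)):
    print(f"recordsize={label}: {records_per_file(media_file, rs)} records")
# recordsize=128K: 40 records
# recordsize=1M: 5 records
```

Under these assumptions, a 5 MiB file drops from 40 records at 128K to 5 records at 1M.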

With Record Size set to 128K on the /mnt/default dataset, I get a low write speed:

```
# pwd
/mnt/default
# fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k \
      --numjobs=1 --size=4g --iodepth=1 --runtime=60 --time_based --end_fsync=1
Run status group 0 (all jobs):
  WRITE: bw=78.4MiB/s (82.2MB/s), 78.4MiB/s-78.4MiB/s (82.2MB/s-82.2MB/s), io=4886MiB (5123MB), run=62329-62329msec
```

With Record Size set to 1M on the /mnt/default dataset, after a reboot I get a significantly lower write speed:

```
# pwd
/mnt/default
# fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k \
      --numjobs=1 --size=4g --iodepth=1 --runtime=60 --time_based --end_fsync=1
Run status group 0 (all jobs):
  WRITE: bw=1988KiB/s (2036kB/s), 1988KiB/s-1988KiB/s (2036kB/s-2036kB/s), io=117MiB (122MB), run=60042-60042msec
```

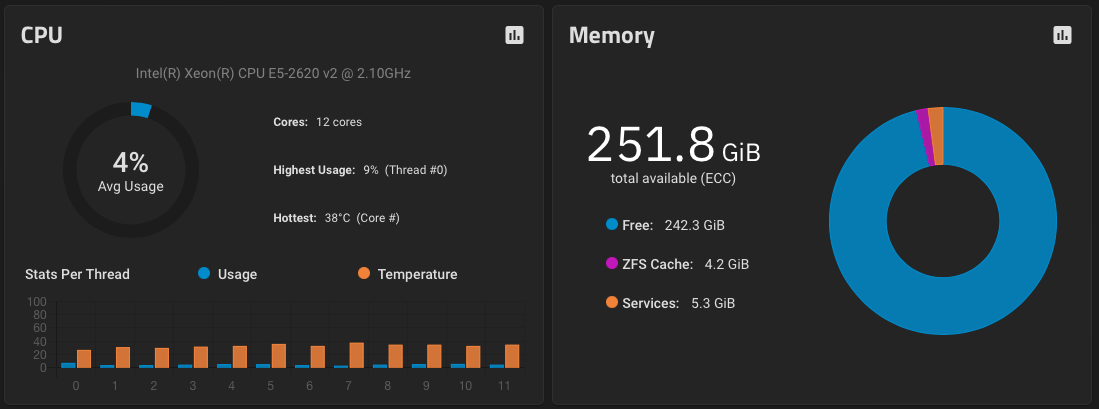
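Because both fio runs used `--bs=4k` with `--iodepth=1`, the two bandwidth figures can be compared as 4 KiB write operations per second. This is a minimal sketch that uses only the numbers reported above; the helper name `iops_from_bw` is hypothetical and the conversion assumes every operation is exactly one 4 KiB block.

```python
KiB = 1024
MiB = 1024 * KiB

BLOCK = 4 * KiB  # matches fio --bs=4k


def iops_from_bw(bw_bytes_per_s: float, block: int = BLOCK) -> float:
    """Convert a fio bandwidth figure into equivalent block-sized ops/s."""
    return bw_bytes_per_s / block


bw_128k = 78.4 * MiB  # reported bw with recordsize=128K
bw_1m = 1988 * KiB    # reported bw with recordsize=1M, after reboot

iops_128k = iops_from_bw(bw_128k)
iops_1m = iops_from_bw(bw_1m)

print(f"128K: ~{iops_128k:,.0f} IOPS")      # 128K: ~20,070 IOPS
print(f"1M:   ~{iops_1m:,.0f} IOPS")        # 1M:   ~497 IOPS
print(f"slowdown: ~{bw_128k / bw_1m:.1f}x")  # slowdown: ~40.4x
```

In other words, the 1M run completed roughly 40 times fewer random 4k writes per second than the 128K run.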

Server specs, hyper-threading disabled:

- Dell R720xd with 12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB for default pool expandability

- 2x E5-2620 v2 @ 2.10GHz CPU

- 16x 16GB 2Rx4 PC3-12800R DDR3-1600 ECC, for a total of 256GB RAM

- 1x PERC H710 Mini, flashed to LSI 9207i firmware

- 1x PERC H810 PCIe, flashed to LSI 9207e firmware

- 1x NVIDIA Tesla P4 GPU, for transcoding

- 2x Samsung 870 EVO 500GB SATA SSD for the software pool hosting Kubernetes applications
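For scale, the storage and memory in this list can be totaled. This is a minimal sketch: it uses the nominal RAIDZ formula, (disks − parity) × disk size, for the single raidz2-0 vdev. That formula is an approximation that ignores allocation padding, metadata and reserved slop space, so ZFS will report less usable space. The helper name `raidz_data_capacity` is hypothetical.

```python
TB = 10**12   # drive vendors quote decimal terabytes
TiB = 2**40


def raidz_data_capacity(disks: int, disk_bytes: int, parity: int) -> int:
    """Nominal data capacity of one RAIDZ vdev (approximation).

    Ignores padding, metadata and slop space, so real usable space is lower.
    """
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_bytes


raw = 12 * 8 * TB                                                  # 12x 8TB drives
data = raidz_data_capacity(disks=12, disk_bytes=8 * TB, parity=2)  # raidz2-0
ram_gb = 16 * 16                                                   # 16x 16GB DIMMs

print(f"raw: {raw / TB:.0f} TB, nominal raidz2 data: {data / TB:.0f} TB ({data / TiB:.1f} TiB)")
print(f"RAM: {ram_gb} GB")
# raw: 96 TB, nominal raidz2 data: 80 TB (72.8 TiB)
# RAM: 256 GB
```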

Is there a warmup period after a reboot, or do I need to change any other settings to improve the disks' performance?
