truenasuserh (Cadet; joined Sep 29, 2020; 9 messages)

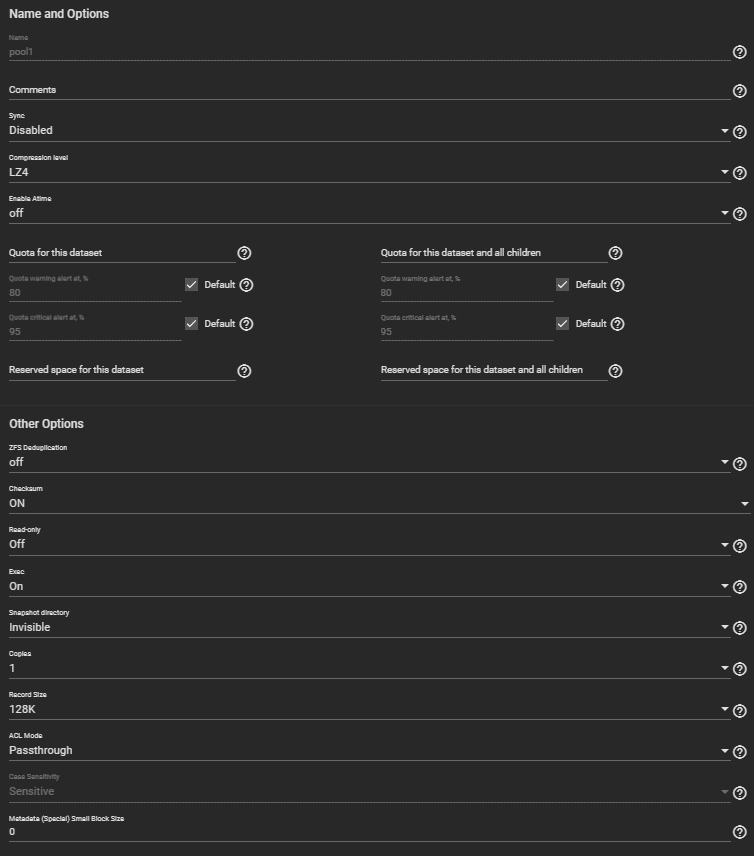

I've got the following setup:

The disks are Seagate IronWolf 12 TB hard disks (ST12000VN0008).

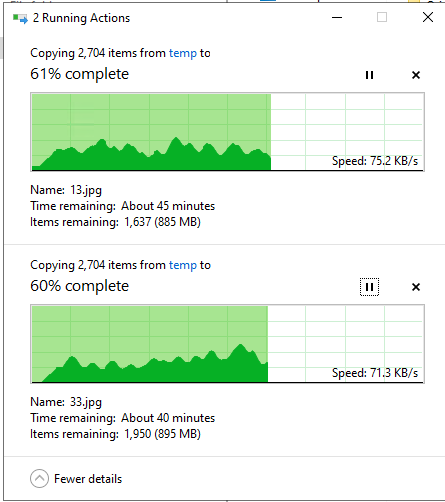

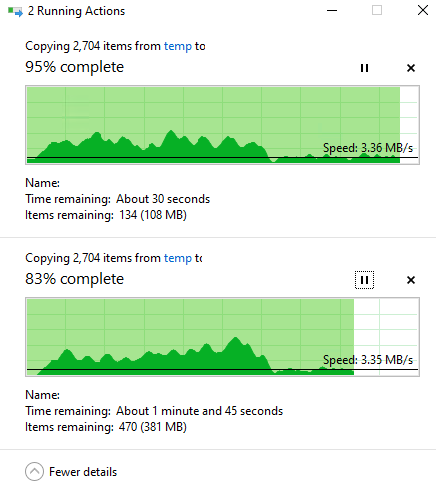

I've got a test folder of representative production data, which I copy over SMB. The folder is 2.2 GB of mixed file sizes, some 12 KB and some 2 MB, split roughly 50/50 by size (not by file count). During the copy, the smallest files seem to slow things down a lot. My boss is convinced that file size shouldn't matter, because small files get aggregated into transactions, so the hard drives only ever see large sequential writes. This copy takes roughly 59.5 seconds.
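A back-of-the-envelope sketch of what this folder implies, taking "roughly 50/50 in size" literally and assuming every small file is exactly 12 KB and every large one exactly 2 MB (decimal units). Both are simplifications, so treat the results as order-of-magnitude estimates, not a description of the real folder. The function names are illustrative only.

```sh
#!/bin/sh
# Back-of-the-envelope numbers for the test folder described above.
# ASSUMPTION: the folder is exactly half 12 KB files and half 2 MB files
# by size (decimal units). The real folder has a spread of sizes.

TOTAL_BYTES=2200000000   # 2.2 GB folder
COPY_SECONDS=59.5        # measured time for a single copy

# files_of_size SIZE_BYTES -> number of files of that size filling half the folder
files_of_size() {
    awk -v total="$TOTAL_BYTES" -v size="$1" 'BEGIN { printf "%d\n", total / 2 / size }'
}

# avg_mb_per_s -> average throughput of the single copy, in MB/s
avg_mb_per_s() {
    awk -v total="$TOTAL_BYTES" -v secs="$COPY_SECONDS" 'BEGIN { printf "%.1f\n", total / secs / 1e6 }'
}

echo "12 KB files: ~$(files_of_size 12000)"
echo "2 MB files:  ~$(files_of_size 2000000)"
echo "average rate: $(avg_mb_per_s) MB/s"
```

Under these simplifications the small-file half alone comes to roughly 90,000 files per copy, against a few hundred large ones, so it may be worth checking the folder's real file count against the 2K-file figure used later in the post.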

Copying this folder twice in parallel, I'd expect about two minutes. Instead it takes about 8 minutes 20 seconds, with transfer speeds dropping to a couple hundred KB/s whenever the transfers hit the small files.
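The expectation in this paragraph is plain arithmetic; writing it out makes the size of the gap explicit. All inputs are taken from the post (500 s is the observed 8 min 20 s); the function names are illustrative only.

```sh
#!/bin/sh
# Expected vs. observed time for two parallel copies of the test folder.
FOLDER_BYTES=2200000000  # 2.2 GB
SINGLE_SECONDS=59.5      # one copy on its own
PARALLEL_SECONDS=500     # two copies at once: 8 min 20 s
COPIES=2

# Naive expectation: the copies take as long as running them back to back.
expected_seconds() {
    awk -v s="$SINGLE_SECONDS" -v n="$COPIES" 'BEGIN { printf "%.0f\n", s * n }'
}

# How much slower the parallel run was than that expectation.
slowdown() {
    awk -v s="$SINGLE_SECONDS" -v n="$COPIES" -v p="$PARALLEL_SECONDS" \
        'BEGIN { printf "%.1f\n", p / (s * n) }'
}

# Aggregate throughput of the parallel run, in MB/s.
parallel_mb_per_s() {
    awk -v b="$FOLDER_BYTES" -v n="$COPIES" -v p="$PARALLEL_SECONDS" \
        'BEGIN { printf "%.1f\n", b * n / p / 1e6 }'
}

echo "expected: $(expected_seconds) s, observed: $PARALLEL_SECONDS s"
echo "slowdown: $(slowdown)x, aggregate rate: $(parallel_mb_per_s) MB/s"
```

In other words, the two copies together moved data at under a quarter of the single-copy rate.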

I assume this is an IOPS issue, but what's killing my IOPS? The fio tests show 2K IOPS, so a folder with 2K files shouldn't be throttled on IOPS, right?
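The reasoning here implicitly assumes each file costs one I/O. A minimal sketch of that model, with the I/Os-per-file count left as a knob: OPS_PER_FILE is hypothetical, since writing a file over SMB may involve more than one I/O (data, metadata, directory update) and the actual count on this pool has not been measured.

```sh
#!/bin/sh
# Minimal model: I/O time ~= FILES * OPS_PER_FILE / IOPS.
# ASSUMPTION: OPS_PER_FILE is a hypothetical knob, not a measured value.

# io_seconds FILES OPS_PER_FILE IOPS -> seconds of pure I/O time
io_seconds() {
    awk -v f="$1" -v k="$2" -v iops="$3" 'BEGIN { printf "%.1f\n", f * k / iops }'
}

# The post's case: 2000 files against 2000 IOPS, for a few per-file costs.
for k in 1 4 10; do
    echo "2000 files, $k I/O per file, 2000 IOPS: $(io_seconds 2000 "$k" 2000) s"
done
```

With one I/O per file the 2K-file estimate holds (about a second of I/O), but the time grows linearly with both the true per-file cost and the number of files.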

System information:

- OS version: TrueNAS-13.0-U5.3
- Model: D120-C21
- Memory: 32 GiB
- CPU: Intel(R) Xeon(R) CPU D-1541 @ 2.10GHz (CPU max according to the reporting is 70%.)

(Mod Edit - Removed the color tags from your post and converted your multilines to codeblocks for readability.)


```
fio --randrepeat=1 --direct=1 --gtod_reduce=1 --numjobs=1 --bs=4k --iodepth=64 --size=1G --readwrite=randwrite --ramp_time=4 --group_reporting --name=test --filename=test
test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=64
fio-3.28
Starting 1 process
Jobs: 1 (f=1): [w(1)][90.6%][w=344MiB/s][w=88.1k IOPS][eta 00m:03s]
test: (groupid=0, jobs=1): err= 0: pid=4860: Wed Nov 29 15:04:23 2023
  write: IOPS=10.4k, BW=40.8MiB/s (42.8MB/s)(1023MiB/25076msec); 0 zone resets
   bw ( KiB/s): min= 341, max=579808, per=95.83%, avg=40027.96, stdev=106981.49, samples=49
   iops : min= 85, max=144952, avg=10006.61, stdev=26745.43, samples=49
  cpu : usr=0.87%, sys=14.31%, ctx=12697, majf=0, minf=1
  IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,261841,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):
  WRITE: bw=40.8MiB/s (42.8MB/s), 40.8MiB/s-40.8MiB/s (42.8MB/s-42.8MB/s), io=1023MiB (1073MB), run=25076-25076msec
```

```
fio --randrepeat=1 --direct=1 --gtod_reduce=1 --numjobs=10 --bs=4k --iodepth=64 --size=1G --readwrite=randwrite --ramp_time=4 --group_reporting --name=test --filename=test
test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=64
...
fio-3.28
Starting 10 processes
Jobs: 10 (f=10): [w(10)][46.7%][w=1151MiB/s][w=295k IOPS][eta 00m:08s]
test: (groupid=0, jobs=10): err= 0: pid=4889: Wed Nov 29 15:06:06 2023
  write: IOPS=402k, BW=1572MiB/s (1648MB/s)(3773MiB/2400msec); 0 zone resets
   bw ( MiB/s): min= 1092, max= 2089, per=89.32%, avg=1404.13, stdev=41.10, samples=40
   iops : min=279768, max=535000, avg=359453.75, stdev=10520.42, samples=40
  cpu : usr=4.14%, sys=61.84%, ctx=148692, majf=0, minf=1
  IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,965810,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):
  WRITE: bw=1572MiB/s (1648MB/s), 1572MiB/s-1572MiB/s (1648MB/s-1648MB/s), io=3773MiB (3956MB), run=2400-2400msec
```

```
fio --randrepeat=1 --direct=1 --gtod_reduce=1 --numjobs=10 --bs=4k --iodepth=64 --size=4G --readwrite=randwrite --ramp_time=4 --group_reporting --name=test --filename=test
test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=64
...
fio-3.28
Starting 10 processes
Jobs: 5 (f=5): [_(1),w(1),_(1),w(3),_(2),w(1),_(1)][99.8%][w=298MiB/s][w=76.2k IOPS][eta 00m:01s]
test: (groupid=0, jobs=10): err= 0: pid=4910: Wed Nov 29 15:17:34 2023
  write: IOPS=16.9k, BW=65.8MiB/s (69.0MB/s)(40.0GiB/621485msec); 0 zone resets
   bw ( KiB/s): min= 7887, max=427386, per=100.00%, avg=67421.05, stdev=3381.92, samples=12352
   iops : min= 1968, max=106843, avg=16852.13, stdev=845.49, samples=12352
  cpu : usr=1.09%, sys=16.80%, ctx=8370246, majf=0, minf=1
  IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,10474974,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):
  WRITE: bw=65.8MiB/s (69.0MB/s), 65.8MiB/s-65.8MiB/s (69.0MB/s-69.0MB/s), io=40.0GiB (42.9GB), run=621485-621485msec
```
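A quick way to sanity-check the summary lines of the three fio runs above is to recompute them from the raw counters: IOPS is issued writes divided by runtime, and bandwidth is issued writes times the 4 KiB block size divided by runtime. Every header line also shows ioengine=psync, a synchronous engine; fio's documentation says raising iodepth above 1 does not affect synchronous engines, which matches the "IO depths : 1=100.0%" line in each run, so --iodepth=64 effectively ran at depth 1. The helper name fio_rates is hypothetical.

```sh
#!/bin/sh
# Recompute fio's summary numbers from the raw counters of each run:
#   IOPS      = issued writes / runtime
#   bandwidth = issued writes * block size / runtime
# fio does not log performance during --ramp_time, so run=...msec covers
# only the measured part of each job.

# fio_rates ISSUED BLOCK_BYTES RUNTIME_MS
fio_rates() {
    awk -v n="$1" -v bs="$2" -v ms="$3" 'BEGIN {
        secs = ms / 1000
        printf "%.0f IOPS, %.1f MiB/s\n", n / secs, n * bs / 1048576 / secs
    }'
}

echo "run 1 (1 job, 1G):   $(fio_rates 261841 4096 25076)"
echo "run 2 (10 jobs, 1G): $(fio_rates 965810 4096 2400)"
echo "run 3 (10 jobs, 4G): $(fio_rates 10474974 4096 621485)"
```

The recomputed values agree with fio's reported 10.4k IOPS/40.8 MiB/s, 402k IOPS/1572 MiB/s and 16.9k IOPS/65.8 MiB/s, so the summary lines are internally consistent and can be compared directly.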

Code:

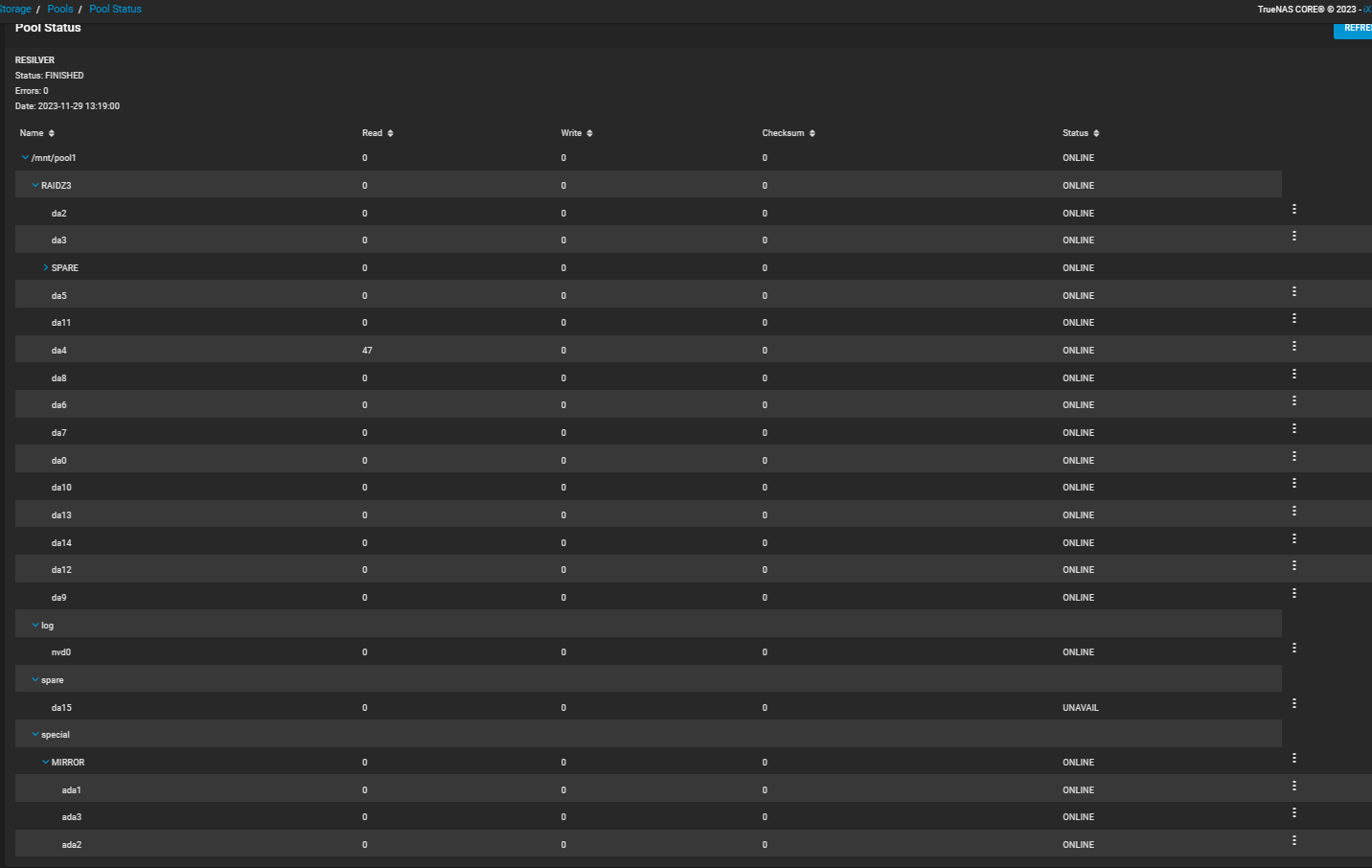

zpool status pool1

pool: pool1

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: resilvered 19.9G in 00:12:05 with 0 errors on Wed Nov 29 13:31:05 2023

config:

NAME STATE READ WRITE CKSUM

pool1 ONLINE 0 0 0

raidz3-0 ONLINE 0 0 0

gptid/96687099-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/f7429afe-dc42-11ed-9b7a-e0d55e60faba ONLINE 0 0 0

spare-2 ONLINE 0 0 0

gptid/97f3a674-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a6c07145-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/99e77ff3-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/18d7ebc7-dc43-11ed-9b7a-e0d55e60faba ONLINE 0 0 0

gptid/9e3ed33b-d203-11eb-9e64-e0d55e60faba ONLINE 47 0 0

gptid/396b46cc-dc43-11ed-9b7a-e0d55e60faba ONLINE 0 0 0

gptid/9bf4f4c2-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/9eb74444-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/97416f1e-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a10904dd-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a2003ccd-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a468b099-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a3ec783a-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a5a34558-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

special

mirror-2 ONLINE 0 0 0

gptid/a51b321a-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a59ed3da-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

gptid/a59dcf5d-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

logs

gptid/a06d9015-d203-11eb-9e64-e0d55e60faba ONLINE 0 0 0

spares

gptid/a6c07145-d203-11eb-9e64-e0d55e60faba INUSE currently in use

errors: No known data errors
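To put a number on what one block costs on the raidz3-0 vdev in the zpool status output above, here is a sketch of the RAIDZ allocation rounding, modeled on OpenZFS's vdev_raidz_asize(): data sectors, plus one sector per parity level for each row of (width minus parity) data sectors, with the total padded to a multiple of (parity + 1) sectors. ASSUMPTIONS, none confirmed in the post: ashift=12 (4 KiB sectors), a width of 15 (the children listed under raidz3-0, counting spare-2 as one), a 128 KiB recordsize for large files, no compression, and no special_small_blocks setting sending small blocks to the special mirror instead. The helper name raidz_asize is hypothetical.

```sh
#!/bin/sh
# Allocated size of one block on a RAIDZ vdev.
# ASSUMPTIONS: ashift=12, 15-wide raidz3, no compression, and small blocks
# not redirected to the special vdev via special_small_blocks.

# raidz_asize BLOCK_BYTES WIDTH NPARITY ASHIFT -> allocated bytes
raidz_asize() {
    awk -v psize="$1" -v cols="$2" -v np="$3" -v ashift="$4" 'BEGIN {
        sector = 2 ^ ashift
        s = int((psize - 1) / sector) + 1                 # data sectors, rounded up
        s += np * int((s + cols - np - 1) / (cols - np))  # parity sectors per data row
        s = int((s + np) / (np + 1)) * (np + 1)           # pad to a multiple of np+1
        printf "%d\n", s * sector
    }'
}

for size in 4096 12288 131072; do
    echo "$size-byte block on 15-wide raidz3: $(raidz_asize "$size" 15 3 12) bytes allocated"
done
```

Under those assumptions a 12 KiB block occupies 32 KiB of raw space (about 2.7x) and a full 128 KiB record occupies 176 KiB (about 1.4x), so small files cost proportionally more raw space on this layout than large ones.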

