Scampicfx (Contributor · Joined Jul 4, 2016 · 125 messages)

Dear Community,

After working with many FreeNAS / TrueNAS Core systems, I wanted to try my first all-flash server, so I recently bought a TrueNAS R20 all-flash server from iXsystems. I had high expectations, but after connecting it to my ESXi host via Fibre Channel, I noticed that something is wrong.

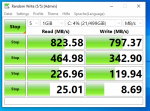

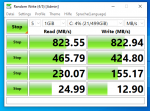

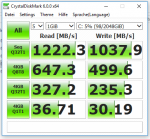

I would like to hear your opinion on this machine's performance. In my opinion, these numbers are far too low! Other threads here in the forums show well above 1000 MB/s and tens of thousands of IOPS, and I'm miles away from that. Here are some benchmarks:


Command:

```
fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --size=4g --numjobs=1 --iodepth=1 --runtime=60 --time_based --end_fsync=1 erf
```

Result:

```
random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=1
fio-3.27
Starting 1 process
random-write: Laying out IO file (1 file / 4096MiB)
Jobs: 1 (f=1): [F(1)][100.0%][w=86.3MiB/s][w=22.1k IOPS][eta 00m:00s]
random-write: (groupid=0, jobs=1): err= 0: pid=80346: Thu Oct 14 21:11:41 2021
  write: IOPS=24.2k, BW=94.5MiB/s (99.1MB/s)(5729MiB/60625msec); 0 zone resets
    slat (nsec): min=1083, max=8310.5k, avg=5143.45, stdev=13051.82
    clat (nsec): min=649, max=40429k, avg=34843.03, stdev=106826.69
     lat (usec): min=8, max=40430, avg=39.99, stdev=106.96
    clat percentiles (nsec):
     | 1.00th=[ 724], 5.00th=[ 852], 10.00th=[ 7392],
     | 20.00th=[ 11840], 30.00th=[ 13120], 40.00th=[ 14016],
     | 50.00th=[ 15424], 60.00th=[ 18816], 70.00th=[ 36096],
     | 80.00th=[ 45824], 90.00th=[ 64256], 95.00th=[ 74240],
     | 99.00th=[ 105984], 99.50th=[1122304], 99.90th=[1122304],
     | 99.95th=[1138688], 99.99th=[1171456]
   bw ( KiB/s): min=57968, max=223809, per=100.00%, avg=97774.81, stdev=26052.37, samples=119
   iops : min=14492, max=55952, avg=24443.35, stdev=6513.04, samples=119
  lat (nsec) : 750=1.97%, 1000=4.89%
  lat (usec) : 2=2.02%, 4=0.28%, 10=2.23%, 20=50.45%, 50=19.46%
  lat (usec) : 100=17.63%, 250=0.29%, 500=0.04%, 750=0.01%, 1000=0.01%
  lat (msec) : 2=0.74%, 4=0.01%, 10=0.01%, 20=0.01%, 50=0.01%
  cpu : usr=6.12%, sys=11.03%, ctx=1676099, majf=0, minf=1
  IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,1466606,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
  WRITE: bw=94.5MiB/s (99.1MB/s), 94.5MiB/s-94.5MiB/s (99.1MB/s-99.1MB/s), io=5729MiB (6007MB), run=60625-60625msec
```

Command:

```
NRFILES=2; NUMJOBS=10
ARCMAX=$((`sysctl -n vfs.zfs.arc_max` * 2 / 1024 / 1024 / $NRFILES / $NUMJOBS))
fio --name=seq128k --ioengine=posixaio --direct=1 --buffer_compress_percentage=20 \
    --rw=rw --rwmixread=30 --directory=/mnt/tank/perf \
    --filename_format=seq.\$jobnum.\$filenum --nrfiles=$NRFILES --filesize=${ARCMAX}m \
    --blocksize=128k --time_based=1 --runtime=300 --group_reporting \
    --numjobs=$NUMJOBS --iodepth=4
```

Result:

```
fio-3.27
Starting 10 processes
Jobs: 10 (f=20):
seq128k: (groupid=0, jobs=10): err= 0: pid=80687: Thu Oct 14 21:32:22 2021
  read: IOPS=2351, BW=294MiB/s (308MB/s)(86.1GiB/300010msec)
    slat (nsec): min=946, max=1471.4M, avg=249756.50, stdev=5392261.38
    clat (nsec): min=1142, max=1723.6M, avg=598333.38, stdev=10144015.29
     lat (usec): min=16, max=1723.8k, avg=848.09, stdev=11487.95
    clat percentiles (nsec):
     | 1.00th=[ 1336], 5.00th=[ 1448], 10.00th=[ 1528],
     | 20.00th=[ 1672], 30.00th=[ 1944], 40.00th=[ 2416],
     | 50.00th=[ 8768], 60.00th=[ 43264], 70.00th=[ 61184],
     | 80.00th=[ 84480], 90.00th=[ 242688], 95.00th=[ 675840],
     | 99.00th=[ 5537792], 99.50th=[ 10682368], 99.90th=[149946368],
     | 99.95th=[185597952], 99.99th=[408944640]
   bw ( KiB/s): min= 3810, max=2014864, per=100.00%, avg=307335.48, stdev=14589.87, samples=5787
   iops : min= 24, max=15737, avg=2397.04, stdev=114.00, samples=5787
  write: IOPS=5487, BW=686MiB/s (719MB/s)(201GiB/300010msec); 0 zone resets
    slat (nsec): min=988, max=1333.7M, avg=121656.50, stdev=3189799.70
    clat (nsec): min=1317, max=2157.0M, avg=6576278.93, stdev=25930458.84
     lat (usec): min=25, max=2157.0k, avg=6697.94, stdev=26119.99
    clat percentiles (usec):
     | 1.00th=[ 3], 5.00th=[ 169], 10.00th=[ 273], 20.00th=[ 498],
     | 30.00th=[ 1123], 40.00th=[ 3490], 50.00th=[ 6849], 60.00th=[ 6980],
     | 70.00th=[ 7111], 80.00th=[ 7242], 90.00th=[ 7439], 95.00th=[ 7570],
     | 99.00th=[ 55837], 99.50th=[130548], 99.90th=[354419], 99.95th=[526386],
     | 99.99th=[960496]
   bw ( KiB/s): min= 8128, max=4730752, per=100.00%, avg=715079.87, stdev=33556.76, samples=5805
   iops : min= 57, max=36954, avg=5581.13, stdev=262.17, samples=5805
  lat (usec) : 2=9.77%, 4=6.53%, 10=0.74%, 20=1.21%, 50=2.59%
  lat (usec) : 100=6.13%, 250=6.32%, 500=8.98%, 750=4.18%, 1000=2.50%
  lat (msec) : 2=4.85%, 4=4.48%, 10=39.70%, 20=0.64%, 50=0.54%
  lat (msec) : 100=0.32%, 250=0.37%, 500=0.09%, 750=0.02%, 1000=0.01%
  lat (msec) : 2000=0.01%, >=2000=0.01%
  cpu : usr=2.56%, sys=0.44%, ctx=3818899, majf=0, minf=10
  IO depths : 1=0.9%, 2=16.0%, 4=83.1%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=705519,1646428,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):
   READ: bw=294MiB/s (308MB/s), 294MiB/s-294MiB/s (308MB/s-308MB/s), io=86.1GiB (92.5GB), run=300010-300010msec
  WRITE: bw=686MiB/s (719MB/s), 686MiB/s-686MiB/s (719MB/s-719MB/s), io=201GiB (216GB), run=300010-300010msec
```

EDIT: I had to trim this output a bit; otherwise the post would have exceeded the forum's character limit.
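To read the two runs side by side, here is a minimal sketch of the arithmetic behind them, assuming a POSIX `sh` with `awk`. All inputs are copied from the commands and output above except `ARC_MAX_BYTES`: the post does not say how large the ARC is, so 64 GiB is a hypothetical value used only to show how the `ARCMAX` expression sizes each test file.

```shell
#!/bin/sh
# Sanity-check arithmetic for the two fio runs above. Every input is copied
# from the posted commands/output, except ARC_MAX_BYTES (hypothetical).

# --- Run 1: 4k random write, --numjobs=1 --iodepth=1 ---
# With only one I/O in flight, Little's Law gives IOPS ~= 1 / average latency.
LAT_AVG_US=39.99   # "lat (usec): ... avg=39.99"
QD1_CEILING=$(awk -v lat="$LAT_AVG_US" 'BEGIN { printf "%d", 1000000 / lat }')
# Bandwidth = IOPS * block size, in MB (10^6 bytes) as fio prints it.
RUN1_MBS=$(awk 'BEGIN { printf "%.1f", 24200 * 4096 / 1000000 }')

# --- Run 2: how the ARCMAX expression sizes each file ---
# On the NAS this comes from `sysctl -n vfs.zfs.arc_max` (bytes).
# 64 GiB is an assumed example, not the R20's actual setting.
ARC_MAX_BYTES=$((64 * 1024 * 1024 * 1024))
NRFILES=2
NUMJOBS=10
# Same formula as the post: twice the ARC (presumably so the data set cannot
# fit in cache), converted to MiB and split across all NUMJOBS * NRFILES files.
PER_FILE_MIB=$((ARC_MAX_BYTES * 2 / 1024 / 1024 / NRFILES / NUMJOBS))

# --- Run 2: read/write mix and combined throughput ---
READ_PCT=$(awk 'BEGIN { printf "%.1f", 100 * 2351 / (2351 + 5487) }')
TOTAL_MIBS=$((294 + 686))

echo "run 1: QD1 IOPS ceiling ~${QD1_CEILING}, ${RUN1_MBS} MB/s at 24.2k IOPS"
echo "run 2: ${PER_FILE_MIB} MiB per file with a 64 GiB ARC"
echo "run 2: ${READ_PCT}% reads (requested 30), ${TOTAL_MIBS} MiB/s read+write"
```

Under these assumptions, run 1's 24.2k IOPS sits just below the ~25k ceiling implied by its own 39.99 µs average latency, so a single-job, queue-depth-1 test mostly measures per-I/O latency rather than what the SSDs can do in parallel. Run 2 kept the requested 30/70 read/write split and moved about 980 MiB/s (308 + 719 ≈ 1027 MB/s) in both directions combined.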

Pool Layout:

```
  pool: tank
 state: ONLINE
  scan: scrub repaired 0B in 00:00:00 with 0 errors on Sun Oct 10 00:00:00 2021
config:

        NAME                                            STATE     READ WRITE CKSUM
        tank                                            ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/43ce125d-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
            gptid/43d723d8-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/43b92e81-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
            gptid/43dc9dd2-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
          mirror-2                                      ONLINE       0     0     0
            gptid/4390162d-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
            gptid/43e6a086-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
          mirror-3                                      ONLINE       0     0     0
            gptid/43aa6c09-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
            gptid/43dc5d25-eff2-11eb-9c5d-3cecef5ee76e  ONLINE       0     0     0
        spares
          gptid/43f04a14-eff2-11eb-9c5d-3cecef5ee76e    AVAIL
```

The pool consists of 9 SSDs in total (four 2-way mirrors plus one hot spare). Each SSD is a SEAGATE XS1920SE70094 with a capacity of 1920 GB.
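The layout above can be tallied in a few lines. This is a minimal sketch using the drive size stated in the post; it ignores ZFS metadata, reserved slop space and the GB/GiB difference, so the capacity figure is only a rough upper bound.

```shell
#!/bin/sh
# Pool layout from the zpool status above: four 2-way mirrors plus one spare.
MIRROR_VDEVS=4
DISKS_PER_MIRROR=2
SPARES=1
DISK_GB=1920   # SEAGATE XS1920SE70094, 1920 GB per drive

# Every drive in the pool, including the idle hot spare.
TOTAL_DISKS=$((MIRROR_VDEVS * DISKS_PER_MIRROR + SPARES))

# A 2-way mirror keeps a full copy on each disk, so each vdev adds one drive's
# worth of space; the spare adds nothing until it replaces a failed disk.
POOL_GB=$((MIRROR_VDEVS * DISK_GB))

echo "drives: ${TOTAL_DISKS}"
echo "raw pool capacity before ZFS overhead: ~${POOL_GB} GB"
```

ZFS spreads writes across the four top-level mirror vdevs, and each write lands on both disks of its mirror, so write throughput scales roughly with the number of vdevs (four here) rather than with the nine drives in the chassis.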

Shouldn't this machine be a lot faster?

The system only has a weak Xeon Bronze CPU, clocked at 1.9 GHz without Turbo Boost. I see 100% CPU usage during some tests. Could this be the bottleneck?

Thank you for all your help!
