
Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums


Disk activity falls to zero if there are file deletions

- Thread starter vaschthestampede


vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

I guess because it will have to read and rewrite every file in the entire dataset.

Very slow: it's at least twice the operations per entry on a low-iops HDD.
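Here is a back-of-the-envelope sketch of what "at least twice the operations per entry" means in practice. If each DDT entry touched costs one random read plus one random write, then processing N entries takes roughly 2N / IOPS seconds. The helper name and the 150 IOPS figure are assumptions for illustration; a single spinning disk typically manages somewhere around a hundred to a couple of hundred random IOPS. Entries already cached in ARC would lower the real count, so treat the result as a pessimistic estimate.

```python
def estimate_ddt_hours(entries: int, iops: float, ops_per_entry: int = 2) -> float:
    """Rough time (hours) to touch `entries` DDT entries on disk.

    Hypothetical helper: assumes every entry costs `ops_per_entry` random
    I/Os (one read and one write by default) and that nothing is cached.
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    seconds = entries * ops_per_entry / iops
    return seconds / 3600


# Hypothetical example: one million entries on a vdev doing ~150 random IOPS.
print(f"{estimate_ddt_hours(1_000_000, iops=150):.1f} h")  # 3.7 h
```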

Data integrity is definitely something I want to maintain.

As far as I know, none that retains data integrity.

Why not? As I've understood it:

Add a dedup VDEV.

The existing dedup data is still on spinning rust.

Delete all blocks whose dedup data is on spinning rust and re-upload them.

That will cause all dedup data to be written to the new, empty dedup VDEV.

I'm sure there is a script for that, just like for rebalancing data VDEVs.

- Joined

- Jul 12, 2022

- Messages

- 3,222

There are no other ways to proceed if they don't want to act in a destructive way (wipe everything, including the DDT table :D).

At least, none that I know of; admittedly, I know very little about dedup.

- Joined

- Feb 6, 2014

- Messages

- 5,112

It will just be very slow, as you're observing from the disk I/O "basically falling to 0"; I guarantee there's a lot of "disk activity" going on behind the scenes in the form of the heads seeking to mostly-random locations and handling small writes.

To be honest, I didn't understand this part.

Are you telling me that this operation is not enough?

Or just that it will be slow?

Or both?

Assuming additional dedup SSDs are installed, new writes and updates to the DDT will go there. However, until all data whose deduplication hash is stored on the HDDs is either deleted from the system or written again as a new copy (thus incrementing its refcnt value), there remains the risk of sudden slowdowns.
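To make the refcnt point concrete, here is a toy model in plain Python; it is not ZFS code. Following the explanation above, it assumes that any update to a DDT entry rewrites that entry to wherever new DDT writes currently go. Real ZFS keeps the DDT in on-disk objects that each hold many entries, so this per-entry picture is a simplification. The class and method names are hypothetical. The model shows that entries which are never touched again stay on the HDDs.

```python
from dataclasses import dataclass


@dataclass
class DDTEntry:
    refcnt: int
    location: str  # vdev class currently holding this entry: "hdd" or "ssd"


class ToyDDT:
    """Toy dedup table; not how ZFS actually lays out the DDT."""

    def __init__(self) -> None:
        self.entries: dict[str, DDTEntry] = {}
        self.write_target = "hdd"  # where new or rewritten entries land

    def add_dedup_vdev(self) -> None:
        # From now on, new and updated entries are written to the SSDs.
        self.write_target = "ssd"

    def write_block(self, checksum: str) -> None:
        entry = self.entries.get(checksum)
        if entry is None:
            self.entries[checksum] = DDTEntry(1, self.write_target)
        else:
            # Duplicate block: refcnt goes up and the updated entry is
            # rewritten (copy-on-write) to the current target.
            entry.refcnt += 1
            entry.location = self.write_target

    def free_block(self, checksum: str) -> None:
        entry = self.entries[checksum]
        entry.refcnt -= 1
        if entry.refcnt == 0:
            del self.entries[checksum]  # last reference gone: entry removed
        else:
            entry.location = self.write_target

    def count(self, location: str) -> int:
        return sum(1 for e in self.entries.values() if e.location == location)


ddt = ToyDDT()
for checksum in ("a", "b", "c"):
    ddt.write_block(checksum)  # three unique blocks, entries on the HDDs
ddt.add_dedup_vdev()
ddt.write_block("d")  # new unique block: entry goes to the SSDs
ddt.write_block("a")  # duplicate of "a": its entry is rewritten to the SSDs
print(ddt.count("hdd"), ddt.count("ssd"))  # 2 2
```

In this model, "b" and "c" remain HDD-resident until they are freed or referenced again.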

vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

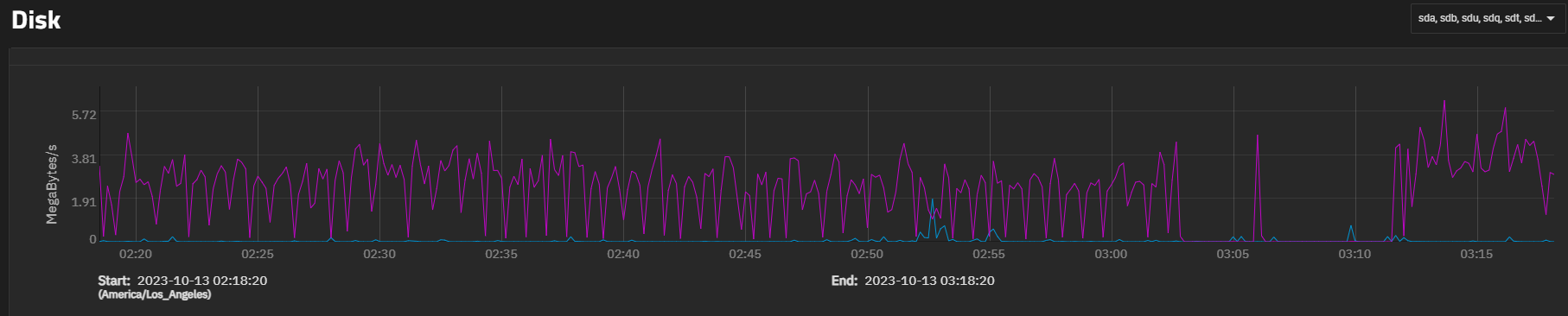

This report shows the activity of one disk during the issue; the others looked the same.

I say the activity falls to zero because the report shows no read or write activity.

I don't question that there's a lot of disk activity going on behind the scenes, but it is not reported.


- Joined

- Jul 12, 2022

- Messages

- 3,222

You are looking at the wrong graph: in the "Disk operations detailed" tab the file deletion activity is shown.


vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

Where is this report?

It shall appear in thy reporting tab if thou lookest at reporting -> disks -> disk I/O.

Or via the shell: zpool iostat -v 2
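To follow per-vdev numbers over time, you can capture and parse the output of zpool iostat -v 2, which prints a new sample every 2 seconds. The sketch below is minimal Python. It assumes rows in the same column order as the snippets posted in this thread: name, alloc, free, read/write operations, read/write bandwidth. It also assumes 1024-based K/M/G/T suffixes. The helper names are hypothetical.

```python
from typing import Optional

# Assumed 1024-based multipliers for the K/M/G/T/P suffixes in zpool output.
SUFFIXES = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4, "P": 1024**5}
COLUMNS = ("alloc", "free", "read_ops", "write_ops", "read_bw", "write_bw")


def parse_value(field: str) -> Optional[float]:
    """Turn '16.2K' or '118M' into a number; '-' (not applicable) becomes None."""
    if field == "-":
        return None
    if field[-1] in SUFFIXES:
        return float(field[:-1]) * SUFFIXES[field[-1]]
    return float(field)


def parse_iostat_row(line: str) -> dict:
    """Split one row of `zpool iostat -v` output into named columns."""
    name, *fields = line.split()
    return {"name": name, **dict(zip(COLUMNS, map(parse_value, fields)))}


row = parse_iostat_row("mirror-2 3.47T 16.8G 4.44K 16.2K 18.5M 118M")
print(row["name"], round(row["free"] / 1024**3, 1))  # mirror-2 16.8
```

Disk rows such as `e0944b41-... - - 0 713 139 74.6M` parse the same way, with alloc and free coming back as None.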

vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

It's the report I shared.

If, or rather when, it happens again, I will try to execute this command.

vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

Do you think that if I run this procedure, the same problems will occur and the volume will therefore be unavailable?

- Joined

- Jul 12, 2022

- Messages

- 3,222

I'm not sure I follow what you mean... Your situation has been explained, along with how doing certain things will impact your system.

vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

I'll try to explain myself better.

If, once I have added other disks for dedup, I run the VDEV rebalancing script, could the problem of disk activity dropping to zero arise?

I have this doubt precisely because I think that this script deletes and rewrites all the files.

I apologize for my poor English.


Yes. You will need to go through the bottleneck once to remove all the dedup data stored on the HDDs.

vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

I'm afraid that wouldn't work at all.

github.com


GitHub - markusressel/zfs-inplace-rebalancing: Simple bash script to rebalance pool data between all mirrors when adding vdevs to a pool.

Simple bash script to rebalance pool data between all mirrors when adding vdevs to a pool. - markusressel/zfs-inplace-rebalancing

Why not?

vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

The link has the following text highlighted:


No Deduplication

Due to the working principle of this script, which essentially creates a duplicate file on purpose, deduplication will most definitely prevent it from working as intended. If you use deduplication you probably have to resort to a more expensive rebalancing method that involves additional drives.
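The working principle described in that note can be sketched as three steps: copy the file, verify the copy, and replace the original. The `rewrite_in_place` helper below is hypothetical Python for illustration only; it is not the linked bash script. Without dedup, the copy allocates fresh blocks that can land on newly added vdevs. With dedup enabled, the copy's blocks match existing DDT entries, so the copy step only increments refcnt instead of allocating new data blocks. Removing the original then decrements refcnt again, and the data itself does not move.

```python
import filecmp
import os
import shutil
import tempfile


def rewrite_in_place(path: str) -> None:
    """Copy a file, verify the copy, then atomically swap it in.

    Hypothetical helper illustrating the copy-and-replace idea only.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".rewrite-")
    os.close(fd)
    try:
        # Without dedup this allocates fresh blocks (which can land on new
        # vdevs); with dedup, identical blocks just bump existing refcnts.
        shutil.copy2(path, tmp_path)
        if not filecmp.cmp(path, tmp_path, shallow=False):
            raise OSError(f"copy of {path} does not match the original")
        # Replacing the original drops its block references again.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


with tempfile.TemporaryDirectory() as workdir:
    sample = os.path.join(workdir, "sample.bin")
    with open(sample, "wb") as f:
        f.write(b"hello" * 1000)
    rewrite_in_place(sample)
    with open(sample, "rb") as f:
        print(f.read() == b"hello" * 1000)  # True
```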

EDIT:

The new drives arrived today and I just set them up.

I'll let them run for a while and test, on newly copied files, that the problem does not occur.

EDIT2:

I'm noticing that it seems to be doing some sort of rebalancing!

This is the situation as soon as the disks are configured:

Code:

```
dedup                                         -      -      -      -      -      -
  mirror-2                                3.47T  16.8G  4.44K  16.2K  18.5M   118M
    d8c51d4c-1dd3-4648-a990-fdf7d6972333      -      -  2.22K  8.11K  9.23M  58.9M
    abf1aa23-632f-4042-9490-103aa8529599      -      -  2.22K  8.10K  9.24M  58.9M
  mirror-7                                9.29G  3.48T      0  1.40K    278   149M
    e0944b41-cc69-4380-b1a3-971d19a47b49      -      -      0    713    139  74.6M
    b7ce854b-f262-4c93-aed2-ac92f1846aa3      -      -      0    717    139  74.6M
```

This is a few minutes later:

Code:

```
dedup                                         -      -      -      -      -      -
  mirror-2                                3.40T  89.8G  4.44K  16.2K  18.5M   118M
    d8c51d4c-1dd3-4648-a990-fdf7d6972333      -      -  2.22K  8.10K  9.23M  58.9M
    abf1aa23-632f-4042-9490-103aa8529599      -      -  2.22K  8.10K  9.24M  58.9M
  mirror-7                                83.8G  3.40T    216  2.62K   865K   113M
    e0944b41-cc69-4380-b1a3-971d19a47b49      -      -    108  1.31K   432K  56.5M
    b7ce854b-f262-4c93-aed2-ac92f1846aa3      -      -    108  1.31K   432K  56.5M
```


vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

The problem is appearing again, even though there is plenty of free space on the dedup disks.

Are there any other checks I can do?

Code:

```
dedup                                         -      -      -      -      -      -
  mirror-2                                2.68T   819G  3.25K  9.73K  14.1M  70.7M
    d8c51d4c-1dd3-4648-a990-fdf7d6972333      -      -  1.63K  4.86K  7.04M  35.3M
    abf1aa23-632f-4042-9490-103aa8529599      -      -  1.63K  4.86K  7.04M  35.3M
  mirror-7                                2.67T   829G  2.25K  4.26K  10.3M  33.8M
    e0944b41-cc69-4380-b1a3-971d19a47b49      -      -  1.12K  2.13K  5.16M  16.9M
    b7ce854b-f262-4c93-aed2-ac92f1846aa3      -      -  1.12K  2.13K  5.15M  16.9M
```

Code:

```
dedup: DDT entries 10111421243, size 582B on disk, 188B in core

bucket             allocated                        referenced
______   ______________________________   ______________________________
refcnt   blocks   LSIZE   PSIZE   DSIZE   blocks   LSIZE   PSIZE   DSIZE
------   ------   -----   -----   -----   ------   -----   -----   -----
     1    7.98G   1018T    921T    928T    7.98G   1018T    921T    928T
     2    1.37G    176T    164T    164T    2.89G    370T    345T    347T
     4    64.3M   6.62T   6.33T   6.43T     315M   29.5T   28.1T   28.8T
     8    1000K   83.7G   61.0G   64.1G    10.5M    771G    537G    579G
    16    1.23M    156G   15.9G   21.8G    30.8M   3.82T    375G    524G
    32    1.45M    186G   23.4G   30.1G    61.9M   7.72T   1019G   1.27T
    64     114K   13.9G   4.05G   4.46G    8.65M   1.05T    319G    351G
   128    10.9K    995M    284M    338M    1.80M    156G   41.6G   51.0G
   256    7.00K    171M   17.5M   72.7M    2.64M   59.3G   5.92G   26.9G
   512    1.33K   15.9M   3.38M   14.3M     722K   11.1G   2.32G   8.01G
    1K      837    101M   3.81M   8.16M    1.23M    153G   5.59G   12.1G
    2K       83   8.28M    556K   1.02M     261K   26.6G   1.66G   3.13G
    4K       50   5.27M    196K    494K     278K   28.6G   1.03G   2.64G
    8K       58   6.51M    469K    777K     551K   61.5G   4.64G   7.48G
   16K       43    562K   57.5K    393K     964K   12.5G   1.27G   8.61G
   32K       15    651K    283K    384K     579K   24.9G   11.2G   14.9G
   64K        3    130K   89.5K    110K     201K   8.76G   6.05G   7.36G
  128K        3    260K   11.5K   27.4K     486K   40.6G   1.82G   4.34G
  256K        3    256K   8.50K   27.4K    1.21M   95.8G   3.22G   11.1G
  512K       32      4M    128K    292K    17.4M   2.17T   69.4G    159G
    1M       15   1.75M   57.5K    137K    16.7M   1.88T   62.6G    152G
 Total    9.42G   1.17P   1.07P   1.07P    11.3G   1.40P   1.27P   1.28P
```
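Here is one quick way to read the Total and refcnt 1 rows, offered as a hedged sketch. Dividing referenced DSIZE by allocated DSIZE approximates the overall dedup ratio. The share of allocated blocks with refcnt 1 shows how many DDT entries belong to blocks that are not actually shared. The `to_number` helper is hypothetical and assumes 1024-based suffixes.

```python
# Assumed 1024-based multipliers for the suffixes in the DDT histogram.
SUFFIXES = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4, "P": 1024**5}


def to_number(field: str) -> float:
    """Convert '9.42G' or '1018T' to a plain number."""
    if field[-1] in SUFFIXES:
        return float(field[:-1]) * SUFFIXES[field[-1]]
    return float(field)


# Columns after the bucket label: allocated blocks, LSIZE, PSIZE, DSIZE,
# then referenced blocks, LSIZE, PSIZE, DSIZE (copied from the table above).
total = "Total 9.42G 1.17P 1.07P 1.07P 11.3G 1.40P 1.27P 1.28P".split()[1:]
refcnt_1 = "1 7.98G 1018T 921T 928T 7.98G 1018T 921T 928T".split()[1:]

dedup_ratio = to_number(total[7]) / to_number(total[3])      # referenced / allocated DSIZE
unique_share = to_number(refcnt_1[0]) / to_number(total[0])  # refcnt-1 blocks / all blocks
print(f"dedup ratio ~{dedup_ratio:.2f}, blocks with refcnt 1: {unique_share:.0%}")
# dedup ratio ~1.20, blocks with refcnt 1: 85%
```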



- Joined

- Feb 6, 2014

- Messages

- 5,112

Comparing to your initial post here:

https://www.truenas.com/community/t...-there-are-file-deletions.113608/#post-786375


Code:

dedup: DDT entries 5605103970, size 605B on disk, 195B in core

your table now sits at

Code:

dedup: DDT entries 10111421243, size 582B on disk, 188B in core

Your deduplication table has nearly doubled in size to ~1.7T (in RAM) and 5.35T across your two disks, which is overflowing the dedup SSDs again.
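The figures above appear to come from multiplying the entry count by the per-entry sizes on the same line, in 1024-based units. That reading is inferred from the numbers, not stated in the post, and `ddt_size_tib` is a hypothetical helper.

```python
TIB = 1024**4  # binary terabyte (TiB), matching the 1024-based sizes ZFS prints


def ddt_size_tib(entries: int, bytes_per_entry: int) -> float:
    """DDT footprint in TiB: number of entries times bytes per entry."""
    return entries * bytes_per_entry / TIB


# Figures from the two "dedup: DDT entries ..." lines quoted above.
before_entries, now_entries = 5_605_103_970, 10_111_421_243

print(f"in core: {ddt_size_tib(before_entries, 195):.2f} -> {ddt_size_tib(now_entries, 188):.2f} TiB")
print(f"on disk: {ddt_size_tib(before_entries, 605):.2f} -> {ddt_size_tib(now_entries, 582):.2f} TiB")
print(f"entry growth: {now_entries / before_entries:.2f}x")
# in core: 0.99 -> 1.73 TiB
# on disk: 3.08 -> 5.35 TiB
# entry growth: 1.80x
```

The on-disk result lines up with the 2.68T + 2.67T allocated on mirror-2 and mirror-7 in the zpool iostat output posted earlier.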


vaschthestampede

Dabbler

- Joined

- Apr 8, 2018

- Messages

- 44

Help me understand how these numbers are calculated.

I'm having trouble figuring out how to anticipate needing new disks for dedup.
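Here is a rough way to anticipate that, sketched under stated assumptions. The DDT's on-disk footprint is roughly entries × on-disk bytes per entry, as the numbers in the previous reply suggest. Dividing the dedup vdevs' capacity by that per-entry size therefore gives an upper bound on how many entries fit. `fill_limit` is a hypothetical safety margin, not a ZFS setting. The per-entry size can drift as the table changes: it was 605B earlier in the thread and 582B now. Comparing the headroom with how fast the entry count grows, sampled periodically, gives a rough time to full.

```python
GIB = 1024**3
TIB = 1024**4


def entries_that_fit(capacity_bytes: float, bytes_per_entry: int,
                     fill_limit: float = 1.0) -> int:
    """Upper bound on DDT entries that fit when capacity is filled to fill_limit."""
    if not 0 < fill_limit <= 1:
        raise ValueError("fill_limit must be in (0, 1]")
    return int(capacity_bytes * fill_limit // bytes_per_entry)


# alloc + free of the two dedup mirrors in the last zpool iostat -v output.
capacity = (2.68 * TIB + 819 * GIB) + (2.67 * TIB + 829 * GIB)
current_entries = 10_111_421_243  # "DDT entries" in the latest summary
bytes_per_entry = 582             # "size 582B on disk"; this changes over time

for fill_limit in (1.0, 0.8):  # 0.8 is a hypothetical safety margin
    fit = entries_that_fit(capacity, bytes_per_entry, fill_limit)
    headroom = fit - current_entries
    print(f"fill to {fill_limit:.0%}: ~{fit / 1e9:.2f} billion entries fit, "
          f"headroom ~{headroom / 1e9:.2f} billion")
```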

