I'm having some issues with a new system I've set up and started transferring files to: it's very slow. My other TrueNAS Core server (built on consumer hardware) worked great and was nice and fast, until its HBA started to fail and caused errors. I bought a refurbished SuperMicro SSG-6048R-E1CR36H as a replacement/upgrade for the old server, with the intention of turning the old server into a backup.

The SuperMicro has:

* 2x Xeon E5-2673 V4, 2.3GHz 20-Core CPUs

* 8x 32gb PC4-2400T DDR4 ECC (256gb total)

* 14x Toshiba MG03SCA400 4TB 7200RPM SAS 6G drives

* 2x 240gb SATA SSD for OS (cheap Crucial and Kioxia)

* 1x 2tb INTEL SSDPEKNU020TZ NVMe drive for Log.

* Supermicro AOM-SAS3-8i8e HBA in JBOD mode

* Dual 10gbps onboard network ports.

This gives me just enough storage to store all the critical files from the old server and stay under 80% pool usage.

From empty, the pool has struggled to write at more than 2-4Gbit/s, whether via SMB or rsync. The old server can transfer files to my computer at 9.something gbit/s, and i can write to it at similar speeds, so I'm fairly sure it's the new server since I get the same speeds when writing from 3 different computers with 10gbe network connections.

First check was iperf, network seems fine:

With parallel connections it looks a little better

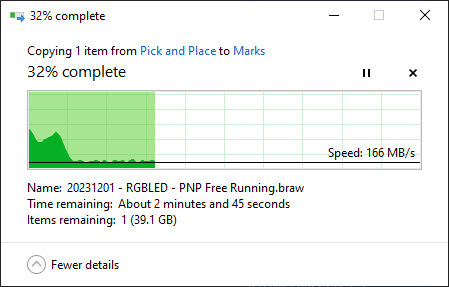

This is pretty typical for a file transfer from windows - a good burst of high speed then really dismal from then on. This is coming from NVMe on my computer, so bottle neck shouldn't be either my computer or the network.

I've tried moving the HBA from the default CPU1/SLOT2 PCIE3.0 X8 port to the CPU2 SLOT 4 PCIE3.0 x16 (note, card is only x8 length) but there was no performance change - no surprise there though.

CPU usage during copying is "0% average" with highest usage typically around 2-4%.

The pool is:

ZFS Health:

Disk Health:

In the SMB service advanced settings I've tried both multi-channel on and off - it doesn't make a difference, but I'm not surprised since rysnc sees the same performance writing to the pool.

At this point I'm not sure what to do next, my understanding (which could be very wrong) is that the log drive would allow network saturation speed writing of data even if the HBA/drives couldn't keep up... but it doesn't see any use. The pool here has more drives (and SAS vs SATA) compared to the old server, so I figured it would be faster for read/write than the old server if anything, plus it has a heck of a lot more processing power.

The SuperMicro has:

* 2x Xeon E5-2673 v4, 2.3 GHz 20-core CPUs

* 8x 32 GB PC4-2400T DDR4 ECC (256 GB total)

* 14x Toshiba MG03SCA400 4 TB 7200 RPM SAS 6G drives

* 2x 240 GB SATA SSDs for the OS (cheap Crucial and Kioxia)

* 1x 2 TB Intel SSDPEKNU020TZ NVMe drive as the log device

* Supermicro AOM-SAS3-8i8e HBA in JBOD mode

* Dual 10 Gbps onboard network ports

This gives me just enough capacity to hold all the critical files from the old server while staying under 80% pool usage.

Even starting from an empty pool, it has struggled to write faster than 2-4 Gbit/s, whether via SMB or rsync. The old server can send files to my computer at just over 9 Gbit/s, and I can write to it at similar speeds. I get the same slow speeds when writing to the new server from three different computers with 10GbE connections, so I'm fairly sure the problem is the new server.
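
For scale, the short sketch below converts the quoted network rates into MB/s using decimal units (1 Gbit = 10^9 bits). It treats "just over 9 Gbit/s" as 9.0, so it is a rough comparison: the new server lands at roughly 250-500 MB/s versus about 1,125 MB/s on the old one.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert a rate in Gbit/s (decimal) to MB/s (decimal megabytes)."""
    return gbit_per_s * 1000 / 8


# Rates from the post; the old server's "just over 9" is rounded down to 9.0 here.
rates = [("new server, low", 2.0), ("new server, high", 4.0), ("old server", 9.0)]
for label, rate in rates:
    print(f"{label:>16}: {rate:.1f} Gbit/s = {gbit_to_mbyte_per_s(rate):.0f} MB/s")
```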

My first check was iperf, and the network seems fine:

```
C:\temp\iperf-3.1.3-win64> ./iperf3 -c 192.168.1.70 -bidir
Connecting to host 192.168.1.70, port 5201
[ 4] local 192.168.1.88 port 61668 connected to 192.168.1.70 port 5201
[ ID] Interval Transfer Bandwidth
[ 4] 0.00-1.00 sec 894 MBytes 7.50 Gbits/sec
[ 4] 1.00-2.00 sec 898 MBytes 7.52 Gbits/sec
[ 4] 2.00-3.00 sec 893 MBytes 7.50 Gbits/sec
[ 4] 3.00-4.00 sec 885 MBytes 7.42 Gbits/sec
[ 4] 4.00-5.00 sec 924 MBytes 7.76 Gbits/sec
[ 4] 5.00-6.00 sec 922 MBytes 7.73 Gbits/sec
[ 4] 6.00-7.00 sec 925 MBytes 7.76 Gbits/sec
[ 4] 7.00-8.00 sec 909 MBytes 7.62 Gbits/sec
[ 4] 8.00-9.00 sec 900 MBytes 7.55 Gbits/sec
[ 4] 9.00-10.00 sec 904 MBytes 7.58 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bandwidth
[ 4] 0.00-10.00 sec 8.84 GBytes 7.59 Gbits/sec sender
[ 4] 0.00-10.00 sec 8.84 GBytes 7.59 Gbits/sec receiver
```
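
As a sanity check on the single-stream run, the sketch below parses the per-second interval lines and averages them. It assumes iperf3 reports transfer sizes in binary units (1 MByte = 2^20 bytes) and rates in decimal Gbit/s; the numbers in the output above are consistent with that (894 MBytes in one second works out to about 7.50 Gbit/s). The regex is written for the exact line format pasted here and may need adjusting for other iperf3 versions.

```python
import re
from statistics import mean

# Per-second interval lines copied from the single-stream iperf3 run above.
IPERF_INTERVALS = """\
[ 4] 0.00-1.00 sec 894 MBytes 7.50 Gbits/sec
[ 4] 1.00-2.00 sec 898 MBytes 7.52 Gbits/sec
[ 4] 2.00-3.00 sec 893 MBytes 7.50 Gbits/sec
[ 4] 3.00-4.00 sec 885 MBytes 7.42 Gbits/sec
[ 4] 4.00-5.00 sec 924 MBytes 7.76 Gbits/sec
[ 4] 5.00-6.00 sec 922 MBytes 7.73 Gbits/sec
[ 4] 6.00-7.00 sec 925 MBytes 7.76 Gbits/sec
[ 4] 7.00-8.00 sec 909 MBytes 7.62 Gbits/sec
[ 4] 8.00-9.00 sec 900 MBytes 7.55 Gbits/sec
[ 4] 9.00-10.00 sec 904 MBytes 7.58 Gbits/sec
"""

# Matches "[ 4] <start>-<end> sec <size> MBytes <rate> Gbits/sec".
INTERVAL_RE = re.compile(
    r"\[\s*\d+\]\s+([\d.]+)-([\d.]+)\s+sec\s+([\d.]+)\s+MBytes\s+([\d.]+)\s+Gbits/sec"
)


def parse_intervals(text: str) -> list:
    """Return (start_s, end_s, mbytes, gbits_per_s) tuples, one per interval line."""
    rows = []
    for line in text.splitlines():
        match = INTERVAL_RE.search(line)
        if match:
            rows.append(tuple(float(g) for g in match.groups()))
    return rows


def rate_from_size(start: float, end: float, mbytes: float) -> float:
    """Recompute Gbit/s from the transfer size, treating MBytes as 2**20 bytes."""
    return mbytes * 2**20 * 8 / (end - start) / 1e9


rows = parse_intervals(IPERF_INTERVALS)
reported = mean(r[3] for r in rows)
recomputed = mean(rate_from_size(r[0], r[1], r[2]) for r in rows)
print(f"{len(rows)} intervals: mean {reported:.2f} Gbit/s reported, "
      f"{recomputed:.2f} Gbit/s recomputed from transfer sizes")
```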

With parallel connections, it looks a little better:

```
[SUM] 0.00-10.00 sec 11.0 GBytes 9.42 Gbits/sec sender
[SUM] 0.00-10.00 sec 11.0 GBytes 9.42 Gbits/sec receiver
```
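
To put the 9.42 Gbit/s parallel result in context, the arithmetic below estimates the maximum TCP payload rate on a 10 Gbit/s Ethernet link. It assumes IPv4 and a standard 1500-byte MTU, neither of which is stated in the post, and shows the limit with and without the TCP timestamp option because it isn't known which is in use. The two limits come out at about 9.41 and 9.49 Gbit/s, and the measured 9.42 falls between them, which suggests the network path can run at line rate.

```python
# Per-frame overhead on the wire, in bytes: preamble + SFD (8), Ethernet
# header (14), FCS (4) and the minimum inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12


def tcp_goodput_gbps(link_gbps: float, mtu: int = 1500, tcp_timestamps: bool = True) -> float:
    """Upper bound on TCP payload throughput over one Ethernet link (IPv4 assumed)."""
    # IPv4 header (20) + TCP header (20), plus 12 bytes when the timestamp option is used.
    headers = 20 + 20 + (12 if tcp_timestamps else 0)
    payload = mtu - headers
    return link_gbps * payload / (mtu + ETHERNET_OVERHEAD)


measured = 9.42  # parallel iperf3 result from the post
for timestamps in (True, False):
    limit = tcp_goodput_gbps(10.0, tcp_timestamps=timestamps)
    print(f"TCP timestamps {'on' if timestamps else 'off'}: limit {limit:.2f} Gbit/s")
print(f"measured: {measured:.2f} Gbit/s")
```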

This is pretty typical for a file transfer from windows - a good burst of high speed then really dismal from then on. This is coming from NVMe on my computer, so bottle neck shouldn't be either my computer or the network.

I've tried moving the HBA from its default slot (CPU1 SLOT2, PCIe 3.0 x8) to CPU2 SLOT4 (PCIe 3.0 x16; note that the card itself is only x8), but there was no performance change. No surprise there, though.
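
A rough bandwidth budget supports the expectation that the slot change wouldn't matter. A PCIe 3.0 x8 link carries roughly 7.9 GB/s per direction before protocol overhead, comfortably above a single 10GbE port (1.25 GB/s) and above 13 drives at an assumed 200 MB/s each. That per-drive figure is a hypothetical round number, not a spec or a measurement.

```python
# PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding. This ignores
# packet/protocol overhead, so real-world throughput is somewhat lower.
PCIE3_GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def pcie3_slot_gbytes_per_s(lanes: int) -> float:
    """Raw one-direction PCIe 3.0 bandwidth in GB/s for a given lane count."""
    return PCIE3_GT_PER_S * ENCODING_EFFICIENCY * lanes / 8


ASSUMED_HDD_MB_S = 200  # hypothetical sustained rate per drive, not measured
DATA_VDEV_WIDTH = 13    # 1 x RAIDZ2, 13 wide (from the pool layout)

slot = pcie3_slot_gbytes_per_s(8)                  # card runs at x8 in either slot
disks = DATA_VDEV_WIDTH * ASSUMED_HDD_MB_S / 1000  # GB/s
network = 10 / 8                                   # one 10 Gbit/s link in GB/s

print(f"PCIe 3.0 x8 slot:  {slot:.2f} GB/s")
print(f"13 HDDs (assumed): {disks:.2f} GB/s")
print(f"10GbE link:        {network:.2f} GB/s")
```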

CPU usage while copying shows "0% average", with the highest usage typically around 2-4%.
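
One caveat when reading that CPU figure: with 2 sockets of 20 cores, and assuming Hyper-Threading is enabled (80 logical CPUs), a single fully busy thread only registers as 1.25% of total CPU. So a 0% average with 2-4% peaks doesn't by itself rule out two or three saturated threads; a per-thread or per-core view on the server would be needed to tell. The arithmetic is sketched below.

```python
SOCKETS = 2
CORES_PER_SOCKET = 20   # Xeon E5-2673 v4
THREADS_PER_CORE = 2    # assumes Hyper-Threading is enabled

logical_cpus = SOCKETS * CORES_PER_SOCKET * THREADS_PER_CORE
pct_per_busy_thread = 100 / logical_cpus

# How much of the overall CPU graph a few pegged threads would account for.
for busy_threads in (1, 2, 3):
    total = busy_threads * pct_per_busy_thread
    print(f"{busy_threads} saturated thread(s) -> {total:.2f}% overall CPU")
```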

The pool is:

```
Data VDEVs      1 x RAIDZ2 | 13 wide | 3.64 TiB
Metadata VDEVs  VDEVs not assigned
Log VDEVs       1 x DISK | 1 wide | 1.86 TiB
Cache VDEVs     VDEVs not assigned
Spare VDEVs     1 x 3.64 TiB
Dedup VDEVs     VDEVs not assigned
```
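
To relate this layout to the "just enough capacity while staying under 80%" goal, the usable space of a 13-wide RAIDZ2 vdev of 4 TB drives can be roughly estimated as (13 - 2) data drives x 3.64 TiB, which is about 40 TiB, putting the 80% mark at about 32 TiB. This is a simplified sketch: it ignores RAIDZ padding and allocation overhead, metadata and ZFS's reserved slop space, so the real usable figure will be somewhat lower.

```python
TB = 10**12   # drive vendors use decimal terabytes
TIB = 2**40   # the TrueNAS UI reports binary TiB


def raidz_usable_tib(width: int, parity: int, drive_bytes: int) -> float:
    """Rough usable space: data drives x drive size, ignoring padding, metadata and slop."""
    return (width - parity) * drive_bytes / TIB


usable = raidz_usable_tib(width=13, parity=2, drive_bytes=4 * TB)
print(f"~{usable:.1f} TiB usable, 80% mark at ~{0.8 * usable:.1f} TiB")
```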

ZFS Health:

```
ZFS Health
Pool Status: Online
Total ZFS Errors: 0
Scheduled Scrub Task: Set
Auto TRIM: Off
Last Scan: Finished Scrub on 2023-12-24 18:04:05
Last Scan Errors: 0
Last Scan Duration: 18 hours 2 minutes 48 seconds
```

Disk Health:

```
Disk Health
Disks temperature related alerts: 0
Highest Temperature: 43 °C
Lowest Temperature: 19 °C
Average Disk Temperature: 33.056 °C
Failed S.M.A.R.T. Tests: 0
```

In the SMB service's advanced settings, I've tried turning multichannel both on and off. It doesn't make a difference, which doesn't surprise me since rsync sees the same performance writing to the pool.

At this point I'm not sure what to do next. My understanding (which could be very wrong) is that the log drive would let writes run at network-saturation speed even if the HBA and drives couldn't keep up, but the log device doesn't see any use. This pool also has more drives than the old server, and they're SAS rather than SATA, so if anything I expected it to be faster than the old server for reads and writes, plus it has a heck of a lot more processing power.