Hi folks,

I'm not particularly happy with my network performance from my Truenas Scale build and was hoping this could be improved.

My Truenas Scale server specs are:

X99 motherboard

64gb of ram

Xeon E5-2690v3 CPU

Intel X710 4x SFP+ NIC (with 2 in use using SFP+ DAC connected to a Unifi Aggregate Switch

NIC details

I've run tests from 2 external machines:

1.) Ubuntu server hosted directly on the Truenas Scale server and using the 2nd SFP+ NIC. It has 6x vCPU codes and 8gb of RAM

2.) Lenovo M920q i7-9700T with 32GB of RAM and a Supermicro AOC-STGN-I2S SFP+ card with Ubuntu Server freshly installed

I have a few datasets on my truenas Server but I'm trying in particular to optimize for my NVME dataset that has 2x 1TB NVMEs in a RAIDz1 array

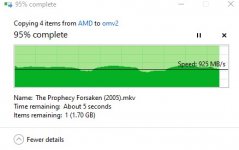

Looks like internally, the NVME is operating at acceptable speed with 1.5GBs reported:

Here's the same test from 1.)

and 2.)

iperf3 tests:

From 1.)

From 2.)

I'll be able getting another system online with a 10gb card so I'll be able to test without Truenas but any thoughts?

Could there be NIC misconfiguration or an outdated NIC driver?

Better NFS/Dataset settings (althought doesn't the iperf3 test abstract the datasets and sharing protocols?)

I mean I don't think I'm hitting any CPU or RAM limitations and clearly the NICs negotiated at 10gb so really not sure what's going on...

And just for your info. What i'm trying to achieve is the fastest possible remote storage for a Promox cluster and also perhaps for (hosted on the Proxmox nodes) kubernetes/Docker persistent data. I'm planning to add a x16 NVME adapter with 4x NVMEs but clearly I'm not even able to get close to saturate the current NVME setup.

Any help appreciated!

I'm not particularly happy with the network performance of my TrueNAS SCALE build and am hoping it can be improved.

My TrueNAS SCALE server specs are:

X99 motherboard

64 GB of RAM

Xeon E5-2690 v3 CPU

Intel X710 4x SFP+ NIC (with 2 ports in use, connected via SFP+ DAC to a Unifi Aggregate Switch)

NIC details

Code:

    sudo lspci | grep X710
    04:00.0 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)
    04:00.1 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)
    04:00.2 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)
    04:00.3 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)

    sudo find /sys | grep drivers.*04:00.0
    /sys/bus/pci/drivers/i40e/0000:04:00.0

    sudo find /sys | grep drivers.*04:00.1
    /sys/bus/pci/drivers/i40e/0000:04:00.1

    sudo lshw -class network
    *-network:0
         description: Ethernet interface
         product: Ethernet Controller X710 for 10GbE SFP+
         vendor: Intel Corporation
         physical id: 0
         bus info: pci@0000:04:00.0
         logical name: ens4f0
         version: 02
         serial: 40:a6:b7:3b:2d:e8
         size: 10Gbit/s
         capacity: 10Gbit/s
         width: 64 bits
         clock: 33MHz
         capabilities: pm msi msix pciexpress vpd bus_master cap_list rom ethernet physical fibre 10000bt-fd
         configuration: autonegotiation=off broadcast=yes driver=i40e driverversion=5.15.79+truenas duplex=full firmware=6.80 0x800042e1 0.385.97 ip=192.168.6.5 latency=0 link=yes multicast=yes speed=10Gbit/s
         resources: irq:29 memory:90000000-907fffff memory:92800000-92807fff memory:fb980000-fb9fffff memory:92000000-921fffff memory:92820000-9289ffff
    *-network:1
         description: Ethernet interface
         product: Ethernet Controller X710 for 10GbE SFP+
         vendor: Intel Corporation
         physical id: 0.1
         bus info: pci@0000:04:00.1
         logical name: ens4f1
         version: 02
         serial: 40:a6:b7:3b:2d:e9
         size: 10Gbit/s
         capacity: 10Gbit/s
         width: 64 bits
         clock: 33MHz
         capabilities: pm msi msix pciexpress vpd bus_master cap_list rom ethernet physical fibre 10000bt-fd
         configuration: autonegotiation=off broadcast=yes driver=i40e driverversion=5.15.79+truenas duplex=full firmware=6.80 0x800042e1 0.385.97 latency=0 link=yes multicast=yes speed=10Gbit/s
         resources: irq:29 memory:90800000-90ffffff memory:92808000-9280ffff memory:fb900000-fb97ffff memory:92200000-923fffff memory:928a0000-9291ffff

I've run tests from 2 client machines:

1.) Ubuntu Server VM hosted directly on the TrueNAS SCALE server and using the 2nd SFP+ port. It has 6x vCPU cores and 8 GB of RAM

2.) Lenovo M920q i7-9700T with 32 GB of RAM and a Supermicro AOC-STGN-I2S SFP+ card, with Ubuntu Server freshly installed

I have a few datasets on my truenas Server but I'm trying in particular to optimize for my NVME dataset that has 2x 1TB NVMEs in a RAIDz1 array

Locally on the server, the NVMe pool looks like it's operating at an acceptable speed, with dd reporting about 1.3 GB/s:

Code:

    time dd if=/dev/zero of=/mnt/NVME_Pool/Docker_Data/testfile bs=16k count=128k
    131072+0 records in
    131072+0 records out
    2147483648 bytes (2.1 GB, 2.0 GiB) copied, 1.6583 s, 1.3 GB/s

Here's the same test from 1.), writing to the NFS mount:

Code:

    dd if=/dev/zero of=/mnt/nfsmount/testfile bs=16k count=128k
    131072+0 records in
    131072+0 records out
    2147483648 bytes (2.1 GB, 2.0 GiB) copied, 10.1924 s, 211 MB/s

and 2.)

Code:

    dd if=/dev/zero of=/mnt/NVME_Pool/Docker_Data/testfile bs=16k count=128k
    131072+0 records in
    131072+0 records out
    2147483648 bytes (2.1 GB, 2.0 GiB) copied, 1.6583 s, 1.3 GB/s
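To put the dd figures on the same scale as the 10 Gbit/s link, here is a rough sketch that converts bytes copied and elapsed seconds into MB/s and Gbit/s. The helper name `dd_rate` is made up for illustration; the inputs are the local write and the NFS write from 1.) shown above.

```shell
#!/bin/sh
# dd_rate BYTES SECONDS
# Hypothetical helper: prints the throughput of a dd run in MB/s
# (decimal megabytes, as dd reports) and in Gbit/s.
dd_rate() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN {
        printf "%.0f MB/s = %.2f Gbit/s\n", bytes / secs / 1e6, bytes * 8 / secs / 1e9
    }'
}

dd_rate 2147483648 1.6583    # local write on the server  → 1295 MB/s = 10.36 Gbit/s
dd_rate 2147483648 10.1924   # NFS write from 1.)         → 211 MB/s = 1.69 Gbit/s
```

By this arithmetic the local write is already slightly above what a single 10 Gbit/s link can carry, while the NFS write from 1.) uses well under a fifth of it.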

iperf3 tests:

From 1.)

Code:

    iperf3 -P 20 -c 192.168.6.5 -p 8008
    Connecting to host 192.168.6.5, port 8008
    [ 5] local 192.168.6.7 port 36192 connected to 192.168.6.5 port 8008
    <snip>
    [ 43] local 192.168.6.7 port 36364 connected to 192.168.6.5 port 8008
    [ ID] Interval Transfer Bitrate Retr Cwnd
    [ 5] 0.00-1.00 sec 15.6 MBytes 131 Mbits/sec 0 202 KBytes
    <snip>
    [ 43] 0.00-1.00 sec 11.5 MBytes 96.2 Mbits/sec 0 168 KBytes
    [SUM] 0.00-1.00 sec 275 MBytes 2.30 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 1.00-2.00 sec 24.3 MBytes 204 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 1.00-2.00 sec 14.9 MBytes 125 Mbits/sec 0 175 KBytes
    [SUM] 1.00-2.00 sec 348 MBytes 2.92 Gbits/sec 4
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 2.00-3.00 sec 19.3 MBytes 162 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 2.00-3.00 sec 12.3 MBytes 103 Mbits/sec 0 184 KBytes
    [SUM] 2.00-3.00 sec 278 MBytes 2.33 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 3.00-4.00 sec 18.1 MBytes 152 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 3.00-4.00 sec 12.3 MBytes 103 Mbits/sec 0 184 KBytes
    [SUM] 3.00-4.00 sec 284 MBytes 2.38 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 4.00-5.00 sec 23.0 MBytes 193 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 4.00-5.00 sec 15.0 MBytes 126 Mbits/sec 0 191 KBytes
    [SUM] 4.00-5.00 sec 365 MBytes 3.07 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 5.00-6.00 sec 22.2 MBytes 186 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 5.00-6.00 sec 14.0 MBytes 118 Mbits/sec 0 197 KBytes
    [SUM] 5.00-6.00 sec 344 MBytes 2.88 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 6.00-7.00 sec 21.7 MBytes 182 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 6.00-7.00 sec 15.8 MBytes 133 Mbits/sec 0 197 KBytes
    [SUM] 6.00-7.00 sec 359 MBytes 3.01 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 7.00-8.00 sec 20.3 MBytes 171 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 7.00-8.00 sec 13.9 MBytes 117 Mbits/sec 0 197 KBytes
    [SUM] 7.00-8.00 sec 343 MBytes 2.88 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 8.00-9.00 sec 22.3 MBytes 187 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 8.00-9.00 sec 15.4 MBytes 129 Mbits/sec 0 208 KBytes
    [SUM] 8.00-9.00 sec 365 MBytes 3.06 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 9.00-10.00 sec 18.8 MBytes 158 Mbits/sec 0 325 KBytes
    <snip>
    [ 43] 9.00-10.00 sec 16.0 MBytes 135 Mbits/sec 0 270 KBytes
    [SUM] 9.00-10.00 sec 306 MBytes 2.57 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval Transfer Bitrate Retr
    [ 5] 0.00-10.00 sec 206 MBytes 172 Mbits/sec 0 sender
    <snip>
    [ 43] 0.00-10.00 sec 140 MBytes 117 Mbits/sec receiver
    [SUM] 0.00-10.00 sec 3.19 GBytes 2.74 Gbits/sec 4 sender
    [SUM] 0.00-10.00 sec 3.17 GBytes 2.72 Gbits/sec receiver
    iperf Done.

From 2.)

Code:

    iperf3 -P 20 -c 192.168.6.5 -p 8008
    Connecting to host 192.168.6.5, port 8008
    [ 5] local 192.168.4.219 port 40520 connected to 192.168.6.5 port 8008
    <snip>
    [ 43] local 192.168.4.219 port 40698 connected to 192.168.6.5 port 8008
    [ ID] Interval Transfer Bitrate Retr Cwnd
    [ 5] 0.00-1.00 sec 36.5 MBytes 306 Mbits/sec 0 1.28 MBytes
    <snip>
    [ 43] 0.00-1.00 sec 28.7 MBytes 241 Mbits/sec 0 834 KBytes
    [SUM] 0.00-1.00 sec 491 MBytes 4.11 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 1.00-2.00 sec 27.5 MBytes 231 Mbits/sec 0 1.28 MBytes
    <snip>
    [ 43] 1.00-2.00 sec 27.5 MBytes 231 Mbits/sec 0 899 KBytes
    [SUM] 1.00-2.00 sec 431 MBytes 3.62 Gbits/sec 225
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 2.00-3.00 sec 23.8 MBytes 199 Mbits/sec 0 1.35 MBytes
    <snip>
    [ 43] 2.00-3.00 sec 22.5 MBytes 189 Mbits/sec 0 899 KBytes
    [SUM] 2.00-3.00 sec 417 MBytes 3.50 Gbits/sec 90
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 3.00-4.00 sec 22.5 MBytes 189 Mbits/sec 0 1.35 MBytes
    <snip>
    [ 43] 3.00-4.00 sec 21.2 MBytes 178 Mbits/sec 18 191 KBytes
    [SUM] 3.00-4.00 sec 419 MBytes 3.51 Gbits/sec 108
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 4.00-5.00 sec 22.5 MBytes 189 Mbits/sec 0 1.35 MBytes
    <snip>
    [ 43] 4.00-5.00 sec 21.2 MBytes 178 Mbits/sec 27 629 KBytes
    [SUM] 4.00-5.00 sec 432 MBytes 3.63 Gbits/sec 117
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 5.00-6.00 sec 21.2 MBytes 178 Mbits/sec 0 1.35 MBytes
    <snip>
    [ 43] 5.00-6.00 sec 23.8 MBytes 199 Mbits/sec 0 629 KBytes
    [SUM] 5.00-6.00 sec 452 MBytes 3.80 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 6.00-7.00 sec 22.5 MBytes 189 Mbits/sec 0 1.35 MBytes
    <snip>
    [ 43] 6.00-7.00 sec 28.8 MBytes 241 Mbits/sec 0 629 KBytes
    [SUM] 6.00-7.00 sec 485 MBytes 4.07 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 7.00-8.00 sec 25.0 MBytes 210 Mbits/sec 0 1.35 MBytes
    <snip>
    [ 43] 7.00-8.00 sec 23.8 MBytes 199 Mbits/sec 0 629 KBytes
    [SUM] 7.00-8.00 sec 434 MBytes 3.64 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 8.00-9.00 sec 21.2 MBytes 178 Mbits/sec 0 1.43 MBytes
    <snip>
    [ 43] 8.00-9.00 sec 22.5 MBytes 189 Mbits/sec 0 877 KBytes
    [SUM] 8.00-9.00 sec 418 MBytes 3.50 Gbits/sec 405
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ 5] 9.00-10.00 sec 22.5 MBytes 189 Mbits/sec 0 1.43 MBytes
    <snip>
    [ 43] 9.00-10.00 sec 25.0 MBytes 210 Mbits/sec 0 877 KBytes
    [SUM] 9.00-10.00 sec 429 MBytes 3.60 Gbits/sec 0
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval Transfer Bitrate Retr
    [ 5] 0.00-10.00 sec 245 MBytes 206 Mbits/sec 0 sender
    <snip>
    [ 43] 0.00-10.00 sec 242 MBytes 203 Mbits/sec receiver
    [SUM] 0.00-10.00 sec 4.30 GBytes 3.70 Gbits/sec 945 sender
    [SUM] 0.00-10.00 sec 4.24 GBytes 3.64 Gbits/sec receiver
    iperf Done.
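For a quick sense of how far these runs are from line rate, here is a minimal sketch that pulls the final `[SUM]` receiver line out of iperf3's text output and expresses it as a share of 10 Gbit/s. The function name `link_share` is hypothetical, and it assumes the summary is reported in Gbits/sec, as it is in both runs above.

```shell
#!/bin/sh
# link_share: reads iperf3 text output on stdin and prints the final
# [SUM] receiver bitrate plus its share of a 10 Gbit/s link.
# Assumption: the summary bitrate is in Gbits/sec (true for the runs above).
link_share() {
    awk '/\[SUM\]/ && /receiver/ {
        printf "%s %s = %.0f%% of 10 Gbit/s\n", $(NF-2), $(NF-1), $(NF-2) / 10 * 100
    }'
}

# Receiver summary lines copied from the two runs above.
link_share <<'EOF'
[SUM] 0.00-10.00 sec 3.17 GBytes 2.72 Gbits/sec receiver
[SUM] 0.00-10.00 sec 4.24 GBytes 3.64 Gbits/sec receiver
EOF
# → 2.72 Gbits/sec = 27% of 10 Gbit/s
# → 3.64 Gbits/sec = 36% of 10 Gbit/s
```

The same function should also work on a live run, for example `iperf3 -P 20 -c 192.168.6.5 -p 8008 | link_share`.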

I'll be getting another system online with a 10 Gb card, so I'll be able to test without TrueNAS, but any thoughts in the meantime?

Could there be a NIC misconfiguration or an outdated NIC driver?

Or would better NFS/dataset settings help (although doesn't the iperf3 test take the datasets and sharing protocols out of the picture)?

I don't think I'm hitting any CPU or RAM limits, and the NICs clearly negotiated at 10 Gbit/s, so I'm really not sure what's going on...

For context, what I'm trying to achieve is the fastest possible remote storage for a Proxmox cluster, and perhaps also for Kubernetes/Docker persistent data hosted on the Proxmox nodes. I'm planning to add an x16 NVMe adapter with 4x NVMe drives, but clearly I can't even get close to saturating the current NVMe setup.

Any help appreciated!