insan3

Dabbler

- Joined

- Apr 3, 2017

- Messages

- 11

I have updated to Bluefin, and it was a slightly frightening experience: none of my apps would start. In kubectl I noticed the following:


Code:

root@freenas[~]# k3s kubectl get pods -A
NAMESPACE             NAME                                       READY   STATUS             RESTARTS       AGE
prometheus-operator   prometheus-operator-5c7445d877-rbmtg       0/1     Completed          0              7d21h
cnpg-system           cnpg-controller-manager-854876b995-kc6wz   0/1     Completed          0              7d21h
kube-system           openebs-zfs-controller-0                   0/5     Error              0              7d21h
metallb-system        controller-7597dd4f7b-58ggf                0/1     Completed          0              7d21h
kube-system           coredns-d76bd69b-8t5fx                     0/1     Completed          0              7d21h
kube-system           nvidia-device-plugin-daemonset-xsnrr       0/1     Completed          0              7d21h
metallb-system        speaker-kb7qv                              0/1     CrashLoopBackOff   16 (85s ago)   7d21h
kube-system           openebs-zfs-node-wxn5n                     1/2     CrashLoopBackOff   17 (18s ago)   7d21h
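For anyone who wants to pick the broken pods out of a longer listing like the one above, here is a rough sketch of a filter. The function name `unhealthy_pods` is made up for illustration. It assumes the six-column layout that `kubectl get pods -A` printed here (NAMESPACE, NAME, READY, STATUS, ...), and it treats anything that is not fully ready and `Running` as suspect, which also flags `Completed` pods.

```shell
# Hypothetical helper (not part of k3s or TrueNAS): reads the text output of
# `kubectl get pods -A` on stdin and prints namespace/name and status for
# every pod that is not fully ready or whose status is not "Running".
unhealthy_pods() {
  awk 'NR > 1 {
    # READY looks like "1/2": containers ready / containers total.
    split($3, ready, "/")
    if (ready[1] != ready[2] || $4 != "Running")
      print $1 "/" $2 "\t" $4
  }'
}

# Usage on the host (assumes k3s is present, as in the output above):
#   k3s kubectl get pods -A | unhealthy_pods
```

Only the first four columns are read, so the spaces inside the RESTARTS column (for example `16 (85s ago)`) do not affect the result.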

(I removed all my own pods from this view for easier reading.)

Also, the logs were misery all the way through:

Code:

<snip>

Dec 30 14:26:23 freenas k3s[14741]: E1230 14:26:23.515357 14741 kuberuntime_manager.go:954] "Failed to stop sandbox" podSandboxID={Type:docker ID:30a0d090744cd891b64b6f5bf5d0a2d44930db1eb5ad2b277fba77b47ebe3dd3}

Dec 30 14:26:23 freenas k3s[14741]: E1230 14:26:23.515419 14741 kubelet.go:1806] failed to "KillPodSandbox" for "85a93451-1ca3-4892-b7d0-d9d82f461ade" with KillPodSandboxError: "rpc error: code = Unknown desc = networkPlugin cni failed to teardown pod \"svclb-unifi-comm-db7b87da-h2494_kube-system\" network: cni c>

Dec 30 14:26:23 freenas k3s[14741]: E1230 14:26:23.515462 14741 pod_workers.go:965] "Error syncing pod, skipping" err="failed to \"KillPodSandbox\" for \"85a93451-1ca3-4892-b7d0-d9d82f461ade\" with KillPodSandboxError: \"rpc error: code = Unknown desc = networkPlugin cni failed to teardown pod \\\"svclb-unifi-com>

Dec 30 14:26:24 freenas k3s[14741]: {"level":"warn","ts":"2022-12-30T14:26:24.379+0100","logger":"etcd-client","caller":"v3@v3.5.3-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc0019ec700/kine.sock","attempt":0,"error":"rpc error: code = Unknown desc = no such t>

Dec 30 14:26:24 freenas k3s[14741]: E1230 14:26:24.445465 14741 kubelet.go:2373] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Dec 30 14:26:24 freenas k3s[14741]: E1230 14:26:24.515120 14741 pod_workers.go:965] "Error syncing pod, skipping" err="network is not ready: container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized" pod="kube-system/>

Dec 30 14:26:24 freenas k3s[14741]: E1230 14:26:24.518083 14741 remote_runtime.go:269] "StopPodSandbox from runtime service failed" err="rpc error: code = Unknown desc = networkPlugin cni failed to teardown pod \"svclb-unifi-stun-e71d9e5a-n477w_kube-system\" network: cni config uninitialized" podSandboxID="aa2af2>

Dec 30 14:26:24 freenas k3s[14741]: E1230 14:26:24.518149 14741 kuberuntime_manager.go:954] "Failed to stop sandbox" podSandboxID={Type:docker ID:aa2af2633d453ab0aed5a74c60194cadd31cc1f4940c93f8b0b9cb58deb4076a}

Dec 30 14:26:24 freenas k3s[14741]: E1230 14:26:24.518227 14741 kubelet.go:1806] failed to "KillPodSandbox" for "c802fc6b-c324-4a3f-a0db-f44c59a16288" with KillPodSandboxError: "rpc error: code = Unknown desc = networkPlugin cni failed to teardown pod \"svclb-unifi-stun-e71d9e5a-n477w_kube-system\" network: cni c>

Dec 30 14:26:24 freenas k3s[14741]: E1230 14:26:24.518283 14741 pod_workers.go:965] "Error syncing pod, skipping" err="failed to \"KillPodSandbox\" for \"c802fc6b-c324-4a3f-a0db-f44c59a16288\" with KillPodSandboxError: \"rpc error: code = Unknown desc = networkPlugin cni failed to teardown pod \\\"svclb-unifi-stu>

</snip>

I managed to get it working by unsetting the app pool, rebooting, and adding the pool again. After that, it worked fine.

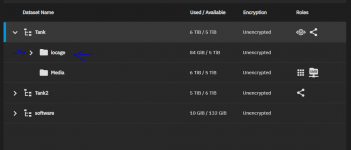

Another thing I noticed and haven't figured out yet is why the ZFS pool upgrade notification keeps appearing. I did upgrade the pool in Storage, but it keeps notifying me:

New ZFS version or feature flags are available for pool 'tank'. Upgrading pools is a one-time process that can prevent rolling the system back to an earlier TrueNAS version. It is recommended to read the TrueNAS release notes and confirm you need the new ZFS feature flags before upgrading a pool.
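One way to check whether any feature flags on the pool are still disabled is to filter the pool properties. This is only a sketch: the helper name `disabled_features` is made up, and whether the alert is actually driven by these flags is an assumption on my part. The pool name `tank` is taken from the notification.

```shell
# Hypothetical helper: reads "property<TAB>value" lines on stdin, as printed by
# `zpool get -H -o property,value all <pool>`, and prints each feature flag
# whose value is "disabled".
disabled_features() {
  awk '$1 ~ /^feature@/ && $2 == "disabled" { print $1 }'
}

# Usage on the host (pool name taken from the alert text):
#   zpool get -H -o property,value all tank | disabled_features
```

If this prints nothing, every feature flag the installed ZFS knows about is already enabled or active on the pool, and the lingering notification would have to come from somewhere else.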

Is anyone else having this?