Daisuke


My Bluefin 22.12.0 upgrade from Angelfish 22.02.4 was flawless. I'm going to post all the steps I took during my TrueNAS Scale build upgrade, as well as other improvements and optimizations I implemented, as a reference for other users.


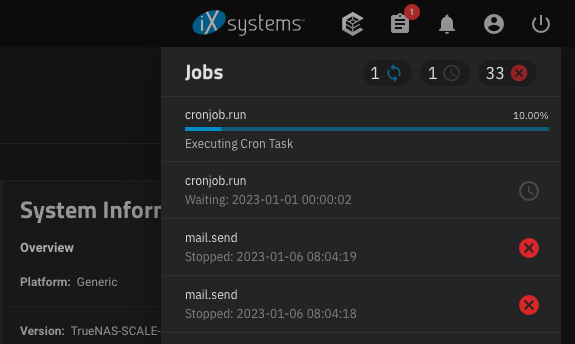


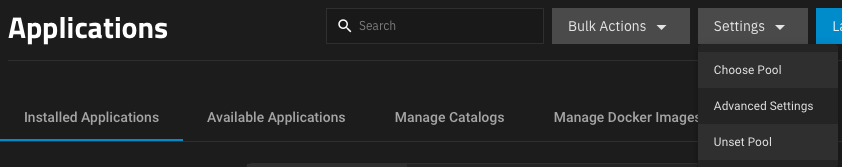


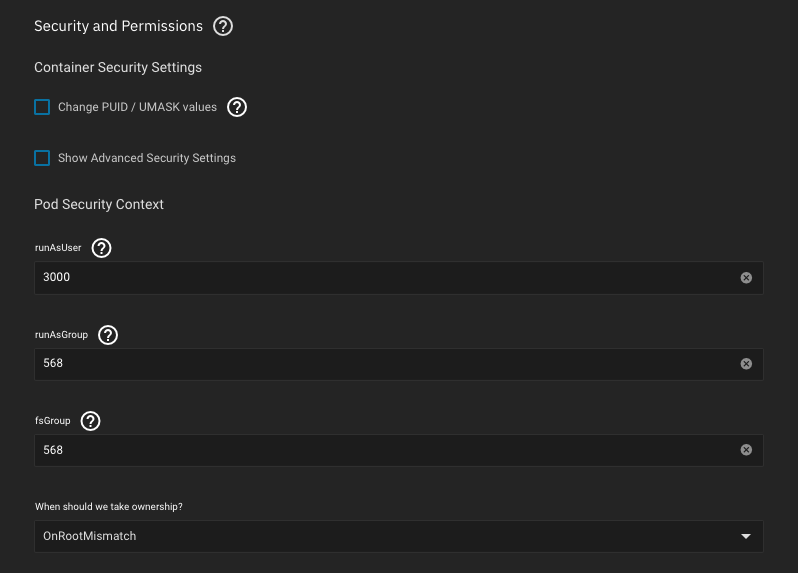


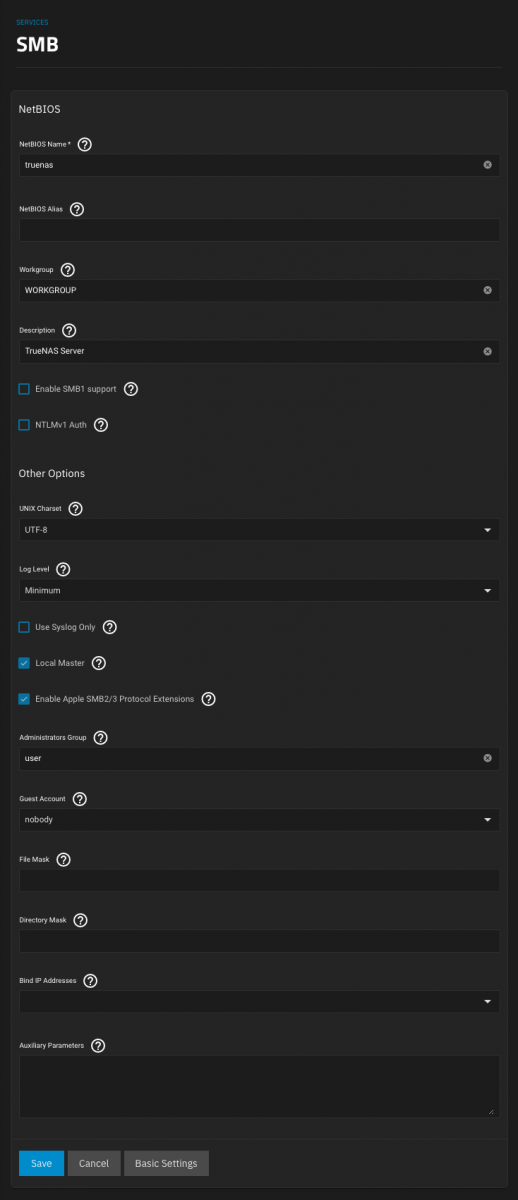


Uninterruptible Power Supply

It is recommended to use a UPS to ride out brief power failures, which would otherwise shut down your server abruptly. These interruptions are known to corrupt pool data, so a UPS is worth the investment.

For related inquiries or questions involving formatted code, please post the output using [CODE]command[/CODE]. See the Linux Terminal Conventions section below for more details.

My Server Specs

- Dell R720xd with 12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB for default pool expandability

- 2x E5-2620 v2 @ 2.10GHz CPU

- 16x 16GB 2Rx4 PC3-12800R DDR3-1600 ECC, for a total of 256GB RAM

- 1x PERC H710 Mini, flashed to LSI 9207i firmware

- 1x PERC H810 PCIe, flashed to LSI 9207e firmware

- 1x NVIDIA Tesla P4 GPU, for transcoding

- 2x Samsung 870 EVO 500GB SATA SSD for software pool hosting Kubernetes applications

- 1x NetApp DS4246 enclosure with 12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB for default pool expandability

Linux Terminal Conventions

You will find various Linux terminal commands in this guide. Since Scale is a Debian Linux based OS, it is recommended that you become familiar with the Linux command-line environment and terminal. It will make troubleshooting a lot easier.

In a Linux command-line environment, there are two types of prompts at the beginning of an executed command:

- Dollar sign ($) means you are a normal non-privileged user

- Hash (#) means you are the system administrator (root)

When a command is prefixed with #, everyone understands that the command was executed as root user:

Code:

$ ssh -l user uranus.lan

$ id

uid=3000(user) gid=3000(user) groups=3000(user),544(builtin_administrators),568(apps)

$ sudo su -

[sudo] password for user:

# id

uid=0(root) gid=0(root) groups=0(root),544(builtin_administrators)

When you post a terminal command in the forums, it is important to use [CODE]command[/CODE], for readability. The code segment should contain both the executed command and the outputted result:

Code:

$ sudo sg_scan -i /dev/sda

[sudo] password for user:

/dev/sda: scsi0 channel=0 id=0 lun=0 [em]

ATA HUH728080ALE601 0003 [rmb=0 cmdq=1 pqual=0 pdev=0x0]

In the above example, the command was executed as non-root user and escalated the execution as root user with sudo.

Pools and Datasets

It is strongly recommended to separate your media storage from your application storage. It is also preferable that root pool dataset names follow the Linux standard naming conventions. Users familiar with FHS will find this beneficial.

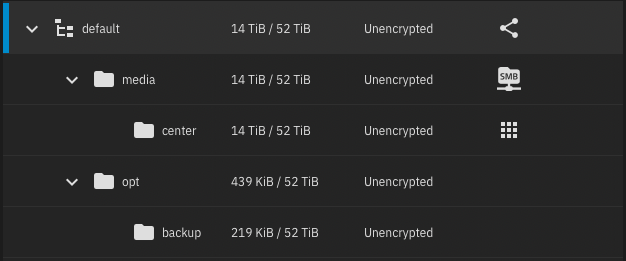

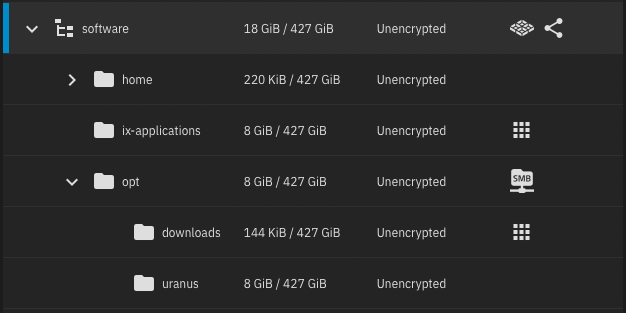

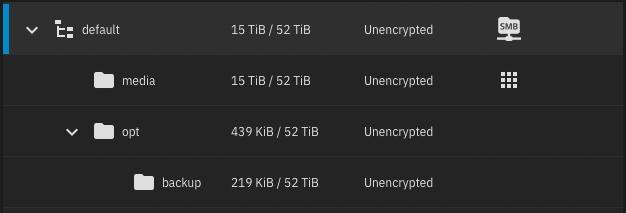

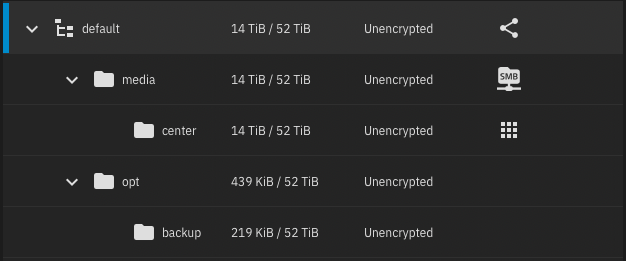

My pools and datasets setup:

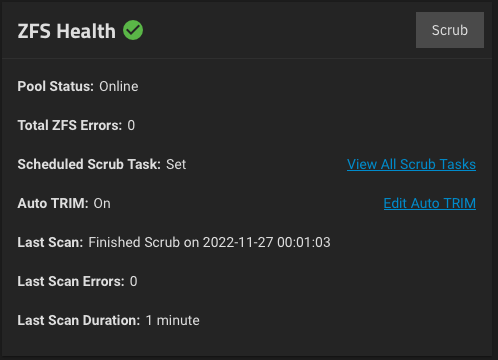

If you use SSDs for your pool, make sure you enable Auto TRIM pool option in Storage > ZFS Health:

TRIM is a command that helps Scale operating system know precisely which data blocks are no longer needed and can be deleted, or marked as free for rewriting, on SSDs. Whenever a delete command is issued, TRIM immediately wipes the pages or blocks where files are stored. Next time the operating system tries to write new data in that area, it does not have to wait first to delete it.

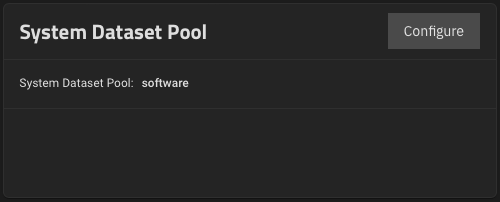

To take advantage of your SSDs speed, set the System Dataset Pool to

Datasets should have the

On the other hand, a MySQL InnoDB database stored on a dataset will greatly benefit from a

If

To determine the correct

Note that changing the

- default pool, using RaidZ2 CMR disks:

- media dataset used for SMB share access

- center dataset used for storage of media related files and accessed by multiple Kubernetes applications

- opt dataset used for shared data

- backup dataset used for backup files

- media dataset used for SMB share access

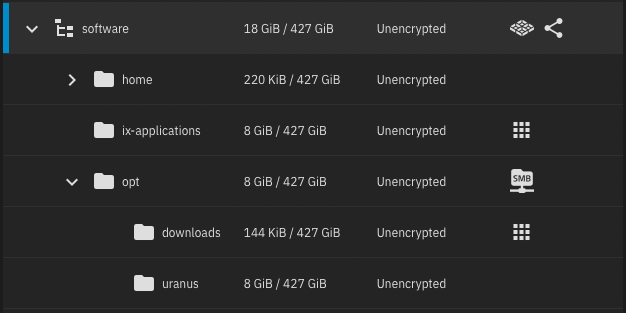

- software pool, using Mirror SSDs:

- home dataset used for non-root user home directories

- ix-applications dataset used for Kubernetes applications

- opt dataset used for SMB share access

- downloads dataset used for storage of temporary media related files and accessed by multiple Kubernetes applications

- uranus dataset used for a private Github repo where automated application backups are stored

If you use SSDs for your pool, make sure you enable Auto TRIM pool option in Storage > ZFS Health:

TRIM is a command that helps Scale operating system know precisely which data blocks are no longer needed and can be deleted, or marked as free for rewriting, on SSDs. Whenever a delete command is issued, TRIM immediately wipes the pages or blocks where files are stored. Next time the operating system tries to write new data in that area, it does not have to wait first to delete it.

To take advantage of your SSDs speed, set the System Dataset Pool to

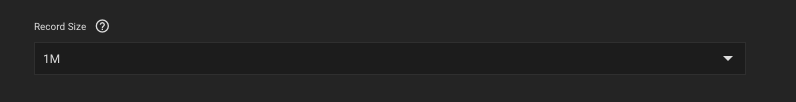

software pool:Datasets should have the

recordsize set according your specific dataset usage. For example, if media files larger than 5MB are stored on a dataset, recordsize should be set to 1M:On the other hand, a MySQL InnoDB database stored on a dataset will greatly benefit from a

recordsize set to 16K. That is because InnoDB defaults to a 16KB page size, so most operations will be done in individual 16K chunks of data.If

recordsize is a lot smaller than the size of the typical storage operation within the dataset, fragmentation will be greatly increased, leading to unnecessary performance. If recordsize is much higher than the size of the typical storage operation within the dataset, latency will be greatly increased. See openzfs performance tuning, for reference.To determine the correct

recordsize, you can calculate the average file size for a specific dataset with this command:Code:

# find /mnt/software/ix-applications -type f -ls | awk '{s+=$7} END {printf "%.0f\n", s/NR}' | numfmt --to=iec

43K
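The command above divides by the number of files found, so it fails with a division by zero on an empty dataset. Below is a minimal sketch of the same calculation wrapped in a function with that case guarded. The function name `avg_file_size` is hypothetical, and the sketch assumes GNU find (for `-printf`), which Debian ships.

```shell
# Average size, in bytes, of all regular files under a directory.
# Assumption: GNU find is available (-printf is a GNU extension).
avg_file_size() {
    local dir="$1"
    find "$dir" -type f -printf '%s\n' |
        awk '{ s += $1 } END { if (NR) printf "%.0f\n", s / NR; else print 0 }'
}

# Example, run as root on the server (path taken from the guide):
#   avg_file_size /mnt/software/ix-applications | numfmt --to=iec
```

Piping the result through numfmt, as in the original command, gives a human-readable value to compare against the candidate recordsize.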

Note that changing the

recordsize affects only newly created files, existing files are unaffected. Therefore, is important to move your data to a new dataset with the correct recordsize. See man zfs, for reference.Pool Disks Swap Partition

In Bluefin, each pool storage device has a predefined

Linux swap partition of 2GB, used mainly to allow the use of slightly-different disk capacities in a physical setup. For example, if a replacement disk capacity is slightly smaller than other pool disks, the swap partition can be used to allocate additional space to ZFS partition. Thank you @Arwen and @winnielinnie for the useful information.In certain cases, it might be necessary to change the default

Set the default

Once the

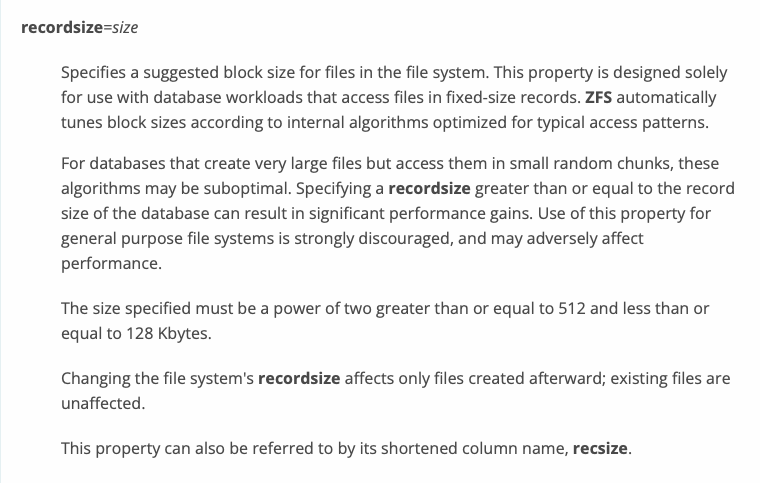

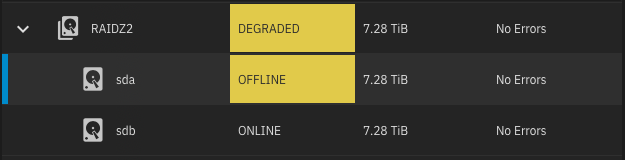

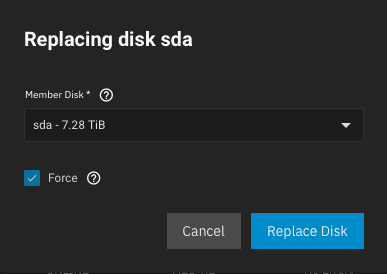

In UI, go to Storage > Pool Name > Manage Devices and take the disk offline:

Login into shell as

Back in UI, forcibly Replace the disk:

The replacement will trigger an automatic resilvering, this process will take several hours.

Linux swap partition size. Default setting in Bluefin:Code:

# midclt call system.advanced.config | jq '.swapondrive' 2

Set the default

Linux swap partition, to 4GB:Code:

# midclt call system.advanced.update '{"swapondrive": 4}' | jq '.swapondrive'

4

Once the

Linux swap partition size setting changed, you need to wipe each disk and resilver it.In UI, go to Storage > Pool Name > Manage Devices and take the disk offline:

Login into shell as

root user and wipe the disks signatures, this process will take only few seconds:Code:

# wipefs -af /dev/sda

Back in UI, forcibly Replace the disk:

The replacement will trigger an automatic resilvering, this process will take several hours.

Pool Disks or Reporting DIF Warnings

It is really important to follow this detailed guide; in today's world, reliable data storage is the most valuable asset. Recent versions of the Linux kernel properly detect badly formatted disks and warn you about them.

Non-Root Local User

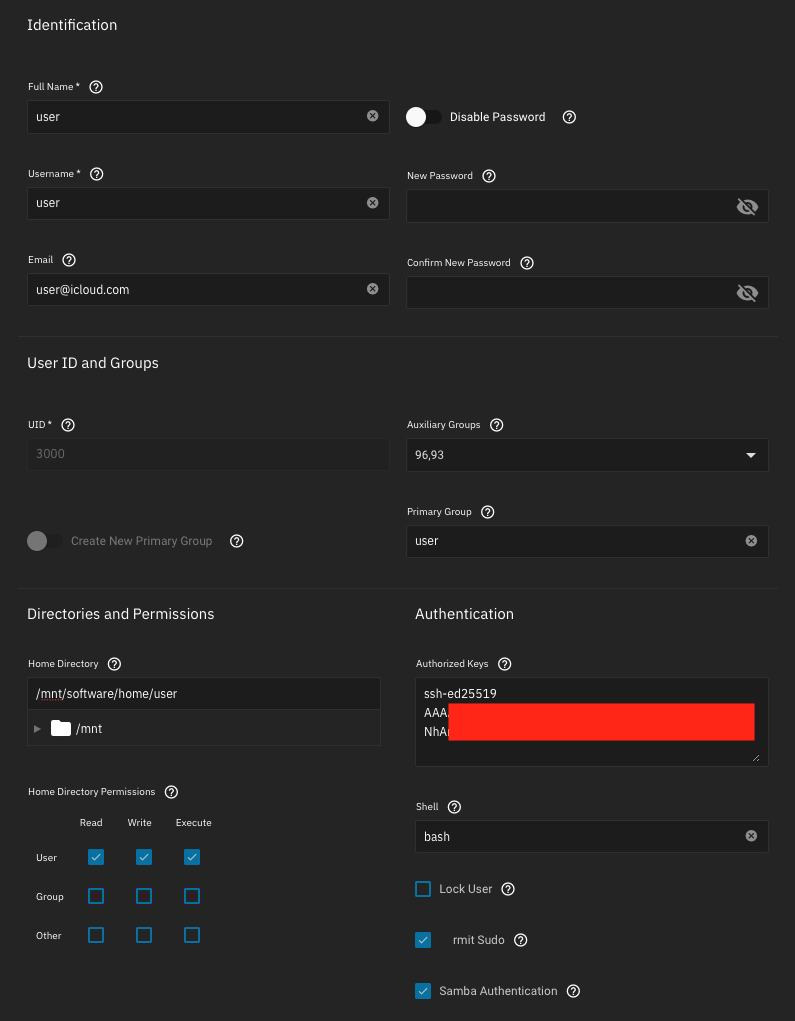

In Bluefin, non-root local user UID starts with

3000, versus Angelfish UID 1000. It is recommended after Bluefin upgrade to delete old non-local users and re-create them with the new UID structure, to avoid future permission conflicts. Prior upgrade, make sure your root user has the password enabled into Angelfish UI. Once upgraded to Bluefin, create a non-root local user to use it as UI login. On a clean install, admin user is created with UID 950 and root user password is disabled.Standard Linux user creation defines the same userid and groupid, then you can add additional groupids to your user. A standard Linux user also requires a

Create a

Make sure you set a valid email address, needed for testing the email alerts (see below Email Alerts section). The new UID

You should also generate a ssh key, login into your server as

Use your new

Next, recursively re-apply the correct user/group permissions to your

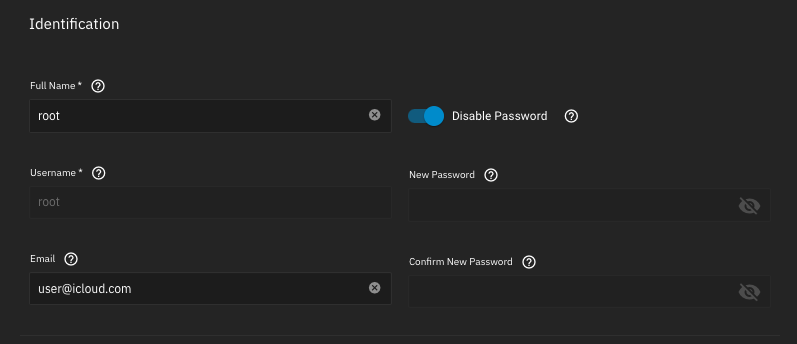

Once upgraded to Bluefin, disable password for

/home directory defined, in your case /mnt/pool/dataset/username. Auxiliary groupid 41 is builtin_administrators and auxiliary groupid 96 is apps (needed for Plex), names showing as ids will be fixed in Bluefin 22.12.1.Create a

/mnt/pool/dataset/username dataset (e.g. /mnt/software/home/user), where your user private information will be stored, then add a new user with following settings:Make sure you set a valid email address, needed for testing the email alerts (see below Email Alerts section). The new UID

3000 can be used into runAsUser setting for all Scale applications, see below Kubernetes Apps Security section.You should also generate a ssh key, login into your server as

user and run the following command:Code:

$ pwd /mnt/software/home/user $ ssh-keygen -t ed25519 -C 'user@icloud.com' Generating public/private ed25519 key pair. Enter file in which to save the key (/mnt/software/home/user/.ssh/id_ed25519): [press Enter] Enter passphrase (empty for no passphrase): [press Enter] Enter same passphrase again: [press Enter] Your identification has been saved in /mnt/software/home/user/.ssh/id_ed25519 Your public key has been saved in /mnt/software/home/user/.ssh/id_ed25519.pub The key fingerprint is: SHA256:kpfu/eLIwWkXyujcDQfUDX/FqAX0UoJUnabz8XR9esc user@icloud.com The key's randomart image is: +--[ED25519 256]--+ | .o+=o.+.| | ..+ +*..| | . . +=o .| | o . +o. =| | o S . o *o| | B + . o E| | . X o ..| | o = O. | | o =.+o. | +----[SHA256]-----+ $ mv .ssh/id_ed25519.pub .ssh/authorized_keys

Use your new

id_ed25519 private key to access the server, the authorized_keys file content will automatically show into Authorized Keys UI field.Next, recursively re-apply the correct user/group permissions to your

/mnt/pool/dataset/username dataset:Once upgraded to Bluefin, disable password for

root user (see caveat mentioned below into Kubernetes Apps Shell):Data Protection

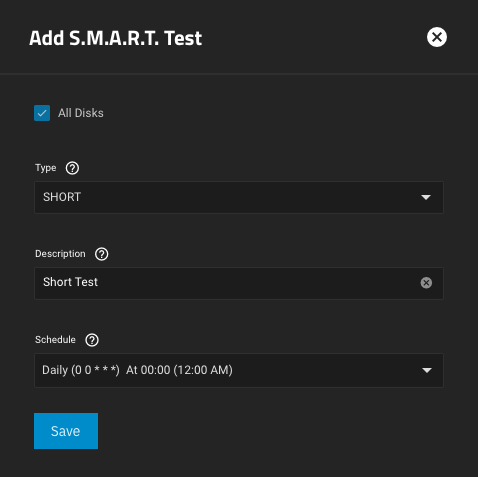

Configure S.M.A.R.T self-tests to measure the storage devices health, predict possible device failures and provide notifications on unsafe values. S.M.A.R.T. (Self-Monitoring, Analysis, and Reporting Technology) is a supplementary component built into modern storage devices through which devices monitor, store, and analyze the health of their operation.

There are four types of self-tests that a device can execute, all safe to user data:


- SHORT - runs tests that have a high probability of detecting device problems

- LONG - same as SHORT check but with no time limit and with complete device surface examination

- CONVEYANCE - identifies if damage incurred during transportation of the device

- OFFLINE - runs an immediate offline Short self-test, in foreground

It is recommended to run a daily SHORT self-test and a bi-monthly LONG self-test, on all your pool disks.

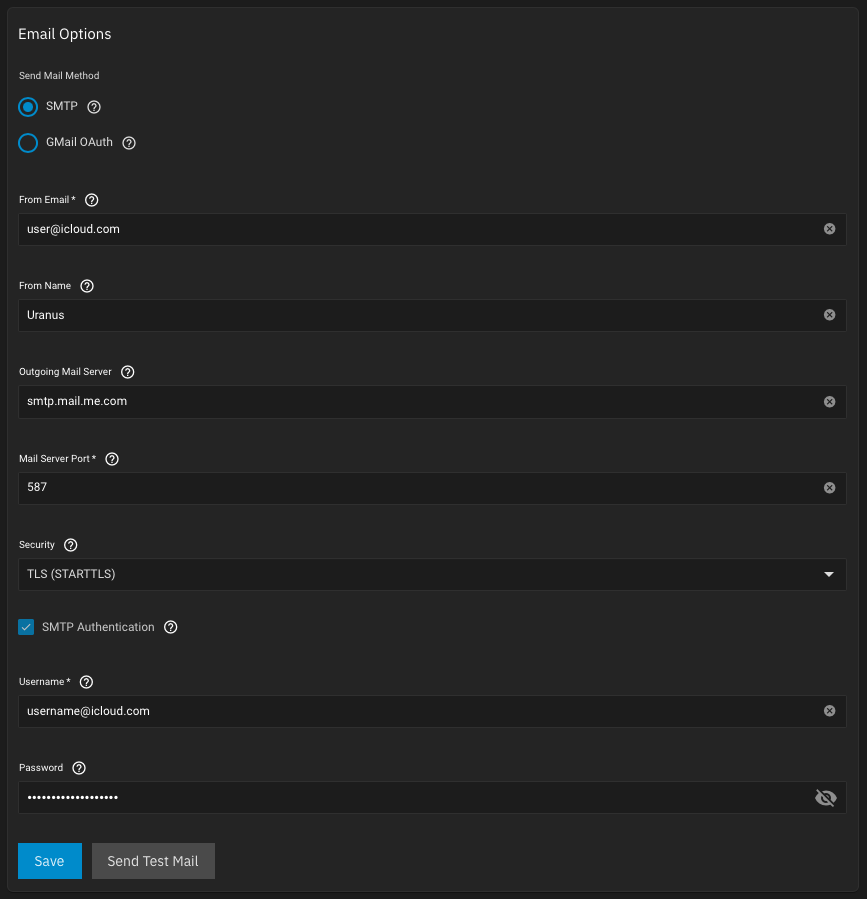

Email Alerts

It is extremely important to have functional mail alerts in Scale, in order to receive notifications of a degraded pool or other critical errors. I personally use the Apple iCloud SMTP servers. You cannot send iCloud emails from software other than Apple products without an application password; it is a two-factor security feature.

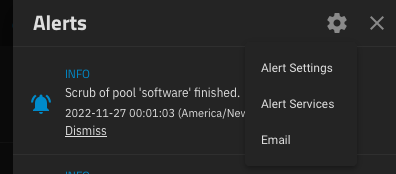

Go to Alerts and select Email from Settings dropdown menu:

iCloud settings example:

The Username must be your true AppleID email account or the linked iCloud alias (which you cannot modify or delete). From Email can be any

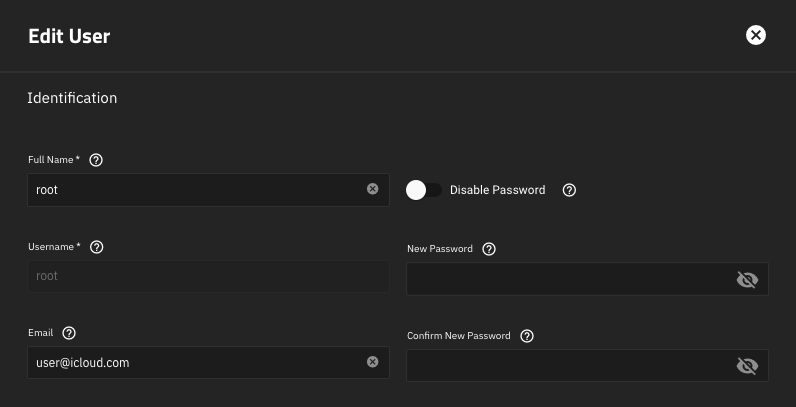

Next, go to Credentials > Local Users > root > Edit and add the email address you used into previous From Email field:

See also NAS-119145 related to wrong hostname, fixed in Bluefin 22.12-RC.1.

iCloud settings example:

The Username must be your true AppleID email account or the linked iCloud alias (which you cannot modify or delete). From Email can be any

@icloud.com alias you can create, modify or delete, but must be part of your list of linked emails to main AppleID. For example, you can use user@icloud.com, which is an account alias you created into username@icloud.com AppleID account.Next, go to Credentials > Local Users > root > Edit and add the email address you used into previous From Email field:

See also NAS-119145 related to wrong hostname, fixed in Bluefin 22.12-RC.1.

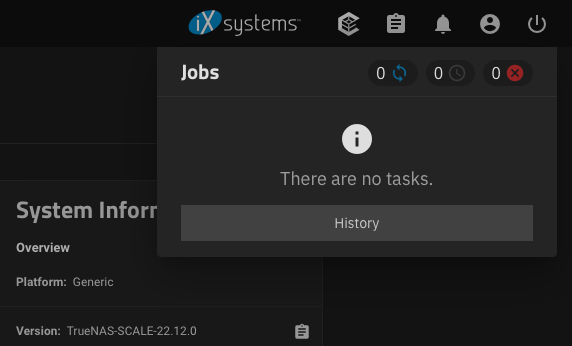

System Failed Jobs

In certain rare cases, you might experience certain cron jobs being stuck for days or other failed tasks:

You can easily address the issue by restarting the middlewared service:

Code:

# systemctl restart middlewared.service

Kubernetes Service

If you get an error message telling you the Kubernetes service is not running or Unable to connect to kubernetes cluster in Bluefin, go to Apps > Settings > Unset Pool. Reboot the server and Set/Choose Pool. Once the pool is set, it can take up to 30 minutes until all services are reconfigured, so please be patient.

Kubernetes Applications

It is recommended to use the TrueCharts applications; their development team specializes in Kubernetes charts, the same way the iXsystems team specializes in Scale OS. Besides robust charts, the main advantage TrueCharts applications offer is a uniform installer process. You will find the same familiar settings in each application, and the team offers stellar support on their Discord channel if you have any questions. All related application settings reflected in this guide are taken from their apps; thank you @truecharts for your community support.

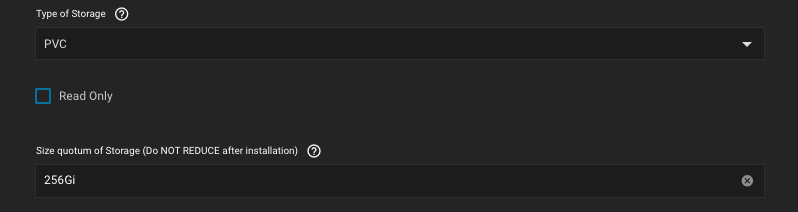

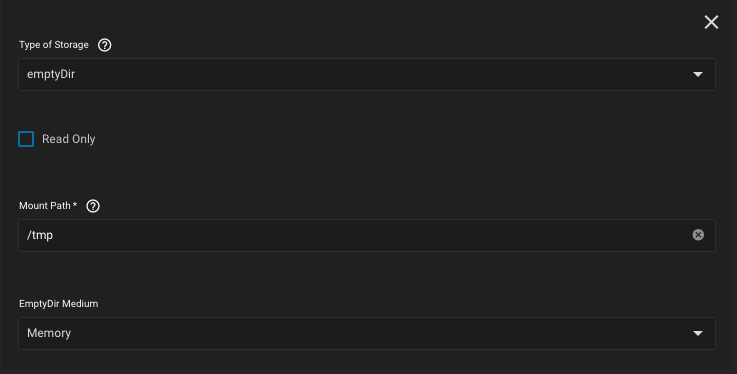

Make sure your apps have the following storage types set accordingly.

Required default app storage:

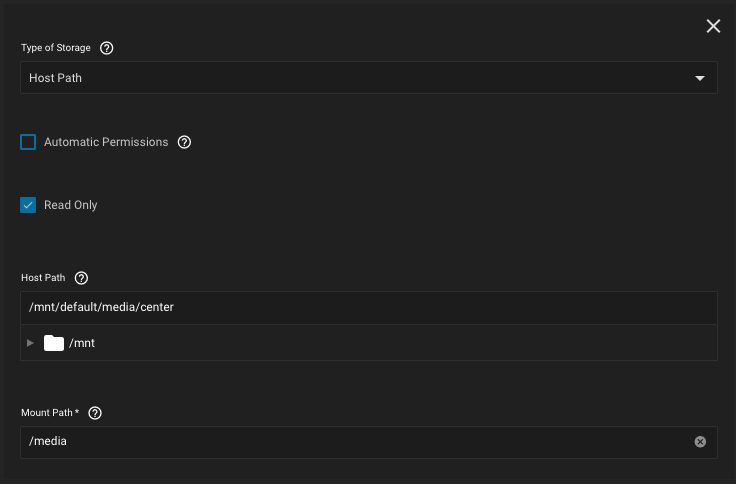

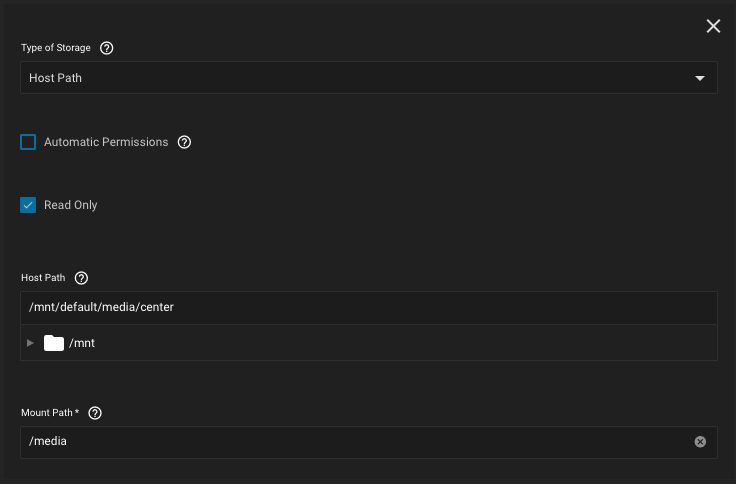

Example of additional storage, in TrueCharts Plex application:

As per Kubernetes documentation, the

List of directories present into internal

Bluefin introduces a new non-root

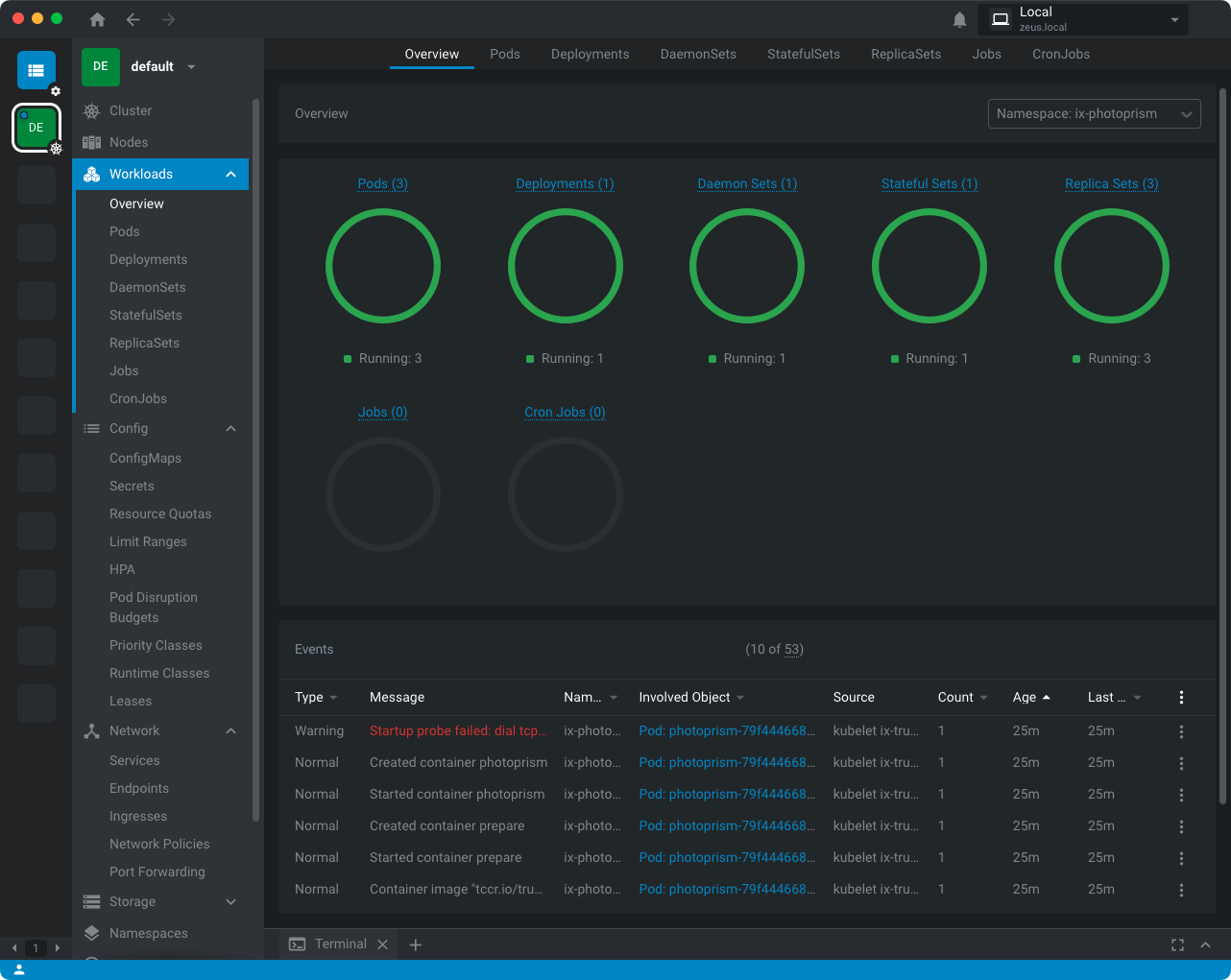

List all Kubernetes pods:

Access the TrueCharts Plex application logs:

Access the TrueCharts Plex application shell and execute a command, never perform any changes through shell:

Required default app storage:

- PVC, the simple type is deprecated, never change this type of storage, this is the main cause for application failures

Example of additional storage, in TrueCharts Plex application:

- Host Path set to

/mnt/pool/dataset/child-dataset, in my example/mnt/default/media/center - Mount Path set to internal container directory

/media, standard Linux directory where media is stored

As per Kubernetes documentation, the

hostPath volume must be mounted as Read Only. This will also prevent any SMB share mounted on /mnt/default/media dataset to change the file permissions inside containers.List of directories present into internal

/media container directory, which are also present inside /mnt/default/media/center dataset:Code:

$ ls -lh /media drwxrwxr-x 8 3000 kah 8 Mar 8 2022 Books drwxrwxr-x 4 3000 kah 4 Aug 2 00:00 Courses drwxrwxr-x 4 3000 kah 12 Oct 31 00:00 Documents drwxrwxr-x 6 3000 kah 6 Dec 15 00:00 Photos drwxrwxr-x 54 3000 kah 54 Dec 15 20:49 Trash drwxrwxr-x 4 3000 kah 4 Dec 14 17:57 'TV Recordings'

Bluefin introduces a new non-root

admin user, disabling the password for root user. This prohibits the access to application logs and shell, which will be fixed into a future Bluefin release. You can easily access to logs and shell through an ssh terminal, with root user. If you are a Kubernetes administrator, see the Kubernetes External Access section.List all Kubernetes pods:

Code:

# k3s kubectl get pods -A

Access the TrueCharts Plex application logs:

Code:

# k3s kubectl get pods -n ix-plex NAME READY STATUS RESTARTS AGE plex-7d47c9cc4f-dprph 1/1 Running 0 42h # k3s kubectl logs plex-7d47c9cc4f-dprph -n ix-plex | tail -n 3 udc input failed with 1001 udc input failed with 1011 ERROR: Error decoding audio frame (Invalid data found when processing input)

Access the TrueCharts Plex application shell and execute a command, never perform any changes through shell:

Code:

# k3s kubectl exec -it plex-7d47c9cc4f-dprph -n ix-plex -- /bin/sh $ df -ahT /tmp Filesystem Type Size Used Avail Use% Mounted on tmpfs tmpfs 32G 425M 32G 2% /tmp
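The pod name used in the commands above (plex-7d47c9cc4f-dprph) changes whenever the application is redeployed, so it has to be looked up each time. A small sketch, assuming the default table output of `kubectl get pods` shown above; the helper name `first_running_pod` is hypothetical.

```shell
# Print the name of the first pod whose STATUS column is "Running".
# Reads the table printed by `kubectl get pods` on stdin and skips the header row.
first_running_pod() {
    awk 'NR > 1 && $3 == "Running" { print $1; exit }'
}

# Example, run as root on the server (namespace ix-plex taken from the guide):
#   pod="$(k3s kubectl get pods -n ix-plex | first_running_pod)"
#   k3s kubectl logs "$pod" -n ix-plex | tail -n 3
```

If no pod is running, the function prints nothing, so check that the variable is not empty before using it.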

Kubernetes Applications Security

To avoid any file permission issues while sharing the same

hostPath with multiple applications and a SMB share, is recommended to set the runAsUser to the non-root local user you use to access the SMB share, in my case UID 3000:You can always use the default

apps (568) user as runAsUser, but you will end-up with mixed permissions, if you manually create a directory or file through a SMB share (logged as non-root local user), which will break your apps file access. Proceed, based on your own needs.In certain cases, the

Container logs:

With PVC mounted locally, old UID

You have the choice to delete the app and start fresh, or execute the script listed below, to fix the permissions.

Adjust the

The script has all required failsafe checks in place and is set to use the

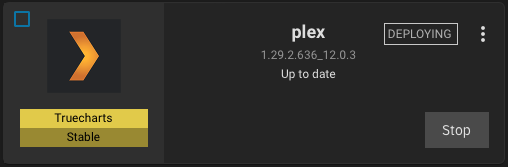

In UI, stop the application if is still showing as Deploying and execute the script:

Start the application in UI.

runAsUser UID change can result in failure to properly apply the UID for all container files:Container logs:

Code:

2022-12-19 19:15:27.197191+00:00 Permission denied: /config/Library/Application Support/Plex Media Server/Preferences.xml

With PVC mounted locally, old UID

1000 is still assigned, instead of UID 3000:Code:

# ls -lh /mnt/software/tmp-plex total 512 drwxrwsr-x 3 1000 apps 3 Aug 9 16:33 Library
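On a large volume, scanning the `ls` output by eye is impractical. As a rough sketch, the files and directories still carrying a stale owner can be listed with find; the helper name `files_not_owned_by` is hypothetical, and the example path assumes the volume is mounted locally as shown above.

```shell
# List every entry under a directory that is NOT owned by the expected UID.
# Prints nothing when ownership is consistent.
files_not_owned_by() {
    local dir="$1" uid="$2"
    find "$dir" ! -uid "$uid" -print
}

# Example, run as root (path and UID taken from the guide):
#   files_not_owned_by /mnt/software/tmp-plex 3000
```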

You have the choice to delete the app and start fresh, or execute the script listed below, to fix the permissions.

Adjust the pool (where the ix-applications dataset belongs), app and username script variables (where user should be the name of your UID 3000):

Code:

# id user

uid=3000(user) gid=3000(user) groups=3000(user),568(apps),544(builtin_administrators)

The script has all required failsafe checks in place and is set to use the apps (568) usergroup, which is the default runAsGroup setting in all apps. I recommend never changing the usergroup name in your applications. Create the script as root:

Code:

cat > ./perms.tool << 'EOF'

#!/usr/bin/env bash

pool='software'

app='plex'

usergroup='apps'

username='user'

if [ $EUID -gt 0 ]; then

echo 'Run perms.tool as root.'

exit 1

fi

if [ ! -x "$(command -v k3s)" ]; then

echo 'k3s is not installed.'

exit 1

fi

if [ ! -x "$(command -v zfs)" ]; then

echo 'zfs is not installed.'

exit 1

fi

if [ ! -d /mnt/$pool/ix-applications ]; then

echo 'Invalid pool name or ix-applications dataset not part of pool.'

exit 1

fi

if [ "$(id -g $usergroup 2>/dev/null)" == "" ]; then

echo 'Invalid user group.'

exit 1

fi

if [ "$(id -u $username 2>/dev/null)" == "" ]; then

echo 'Invalid user name.'

exit 1

fi

volume="$(k3s kubectl get pvc -n ix-$app -o json | jq -r '.items[0].spec.volumeName')"

if [ "$volume" == "null" ]; then

echo 'Invalid application name or not installed.'

exit 1

fi

if [ -d /mnt/$pool/tmp-$app ]; then

rm -rf /mnt/$pool/tmp-$app

fi

name="$pool/ix-applications/releases/$app/volumes/$volume"

zfs set mountpoint=/$pool/tmp-$app $name

if [ $? -ne 0 ]; then

echo "Failed to set local mountpoint."

exit 1

fi

echo -n "Applying $username:$usergroup permissions to $app application... "

find /mnt/$pool/tmp-$app -type d -exec chmod a+rwx,g-w,o-w,ugo-s,-t {} +

find /mnt/$pool/tmp-$app -type f -exec chmod a+rwx,u-x,g-wx,o-wx,ugo-s,-t {} +

chown -R $username:$usergroup /mnt/$pool/tmp-$app

echo 'OK'

zfs set mountpoint=legacy $name

if [ $? -ne 0 ]; then

echo "Failed to set legacy mountpoint."

exit 1

fi

rm -rf /mnt/$pool/tmp-$app

EOF

In UI, stop the application if it is still showing as Deploying and execute the script:

Code:

# bash perms.tool

Applying user:apps permissions to plex application... OK

Start the application in UI.
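The two symbolic chmod expressions in perms.tool resolve to mode 755 for directories and 644 for files, with the setuid, setgid and sticky bits cleared. The sketch below applies only those two expressions to a directory tree, without the chown or the ZFS mountpoint changes, so the effect can be checked on a scratch copy first. The function name `normalize_modes` is hypothetical, and GNU chmod is assumed.

```shell
# Apply the same symbolic modes perms.tool uses:
#   directories -> rwxr-xr-x (755), files -> rw-r--r-- (644),
#   and clear setuid, setgid and sticky bits on both.
# Assumption: GNU chmod, as shipped with Debian.
normalize_modes() {
    local dir="$1"
    find "$dir" -type d -exec chmod a+rwx,g-w,o-w,ugo-s,-t {} +
    find "$dir" -type f -exec chmod a+rwx,u-x,g-wx,o-wx,ugo-s,-t {} +
}

# Example on a scratch copy (hypothetical path):
#   normalize_modes /tmp/plex-copy
```

Note that the file expression also removes the execute bit from every file, which is what the original script does as well.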

Kubernetes Applications Shell

The UI allows you to access any application shell; however, you will be able to access the shell only if you log into the UI as root user. This should be fixed in a future Bluefin release. Example of denied shell access into a container, when logged into the UI with a non-root user:

WARN[0000] Unable to read /etc/rancher/k3s/k3s.yaml, please start server with --write-kubeconfig-mode to modify kube config permissions

error: error loading config file "/etc/rancher/k3s/k3s.yaml": open /etc/rancher/k3s/k3s.yaml: permission denied

Kubernetes Cluster External Access (for Advanced Users)

If you are an experienced Kubernetes cluster administrator, you can access the Scale Kubernetes cluster remotely and quite easily troubleshoot any issues you might encounter. Thank you @blacktide for the useful information.

You can temporarily modify iptables, allowing the Kubernetes cluster to accept connections from a wider private subnet. My current iptables output:

Code:

# iptables -L INPUT -n --line-numbers

# Warning: iptables-legacy tables present, use iptables-legacy to see them

Chain INPUT (policy ACCEPT)

num target prot opt source destination

1 KUBE-ROUTER-INPUT all -- 0.0.0.0/0 0.0.0.0/0 /* kube-router netpol - 4IA2OSFRMVNDXBVV */

2 KUBE-ROUTER-SERVICES all -- 0.0.0.0/0 0.0.0.0/0 /* handle traffic to IPVS service IPs in custom chain */ match-set kube-router-service-ips dst

3 KUBE-FIREWALL all -- 0.0.0.0/0 0.0.0.0/0

4 ACCEPT tcp -- 192.168.1.8 0.0.0.0/0 tcp dpt:6443 /* iX Custom Rule to allow access to k8s cluster from internal TrueNAS connections */

5 ACCEPT tcp -- 127.0.0.1 0.0.0.0/0 tcp dpt:6443 /* iX Custom Rule to allow access to k8s cluster from internal TrueNAS connections */

6 DROP tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:6443 /* iX Custom Rule to drop connection requests to k8s cluster from external sources */

Modify INPUT 4 (your line number might be different) to accept a wider private subnet, instead of just the assigned Scale 192.168.1.8 private IP address:

Code:

# iptables -R INPUT 4 -s 192.168.0.0/16 -j ACCEPT
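Since the rule number can differ between systems, it can be read from the listing instead of counted by hand. This is a sketch that assumes the column layout of the `iptables -L INPUT -n --line-numbers` output shown above; the helper name `k8s_accept_rule_number` is hypothetical.

```shell
# Print the INPUT rule number of the first ACCEPT rule for TCP port 6443
# whose source is not the loopback address.
# Reads `iptables -L INPUT -n --line-numbers` output on stdin.
# Columns: num target prot opt source destination ...
k8s_accept_rule_number() {
    awk '$2 == "ACCEPT" && $5 != "127.0.0.1" && /dpt:6443/ { print $1; exit }'
}

# Example, run as root on the server:
#   iptables -L INPUT -n --line-numbers | k8s_accept_rule_number
```

Review the printed number against the full listing before passing it to `iptables -R`, since replacing the wrong rule changes the firewall behavior.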

Next, copy the /etc/rancher/k3s/k3s.yaml file contents to your local ~/.kube/config and change the localhost IP address to your required private IP address:

Code:

$ sed -i 's|127.0.0.1|192.168.1.12|' ~/.kube/config
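A slightly stricter variant of the substitution above, as a sketch: it only touches the line containing `server:` and escapes the dots so they match literally. The function name `set_kubeconfig_server` is hypothetical, and GNU sed is assumed for `-i`.

```shell
# Rewrite the API server address in a kubeconfig file, in place.
# Only the "server:" line is changed; dots in 127.0.0.1 are matched literally.
# Assumption: GNU sed (for -i without a backup suffix).
set_kubeconfig_server() {
    local file="$1" ip="$2"
    sed -i "/server:/ s|127\.0\.0\.1|${ip}|" "$file"
}

# Example on your workstation (IP address taken from the guide):
#   set_kubeconfig_server ~/.kube/config 192.168.1.12
```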

You can now access the Kubernetes cluster with Lens:

Rebooting the server will revert iptables to the original configuration.

SMB Shares

New in Bluefin, shared hostPaths are considered insecure and are not recommended. That means, if you set up a dataset as an SMB share and share that directory with multiple Kubernetes apps, you will get conflict warnings. This is actually a very good approach for your data integrity, and this is where dataset permissions play an important role.

iXsystems mentioned in their Release Notes: "As a last resort that can result in system and app instability, Host Path Safety Checks can be disabled in Apps > Settings > Advanced Settings." Do not disable that setting.

In simple terms, the hostPath validation prevents the use of the same pool dataset for both apps and shares. From my understanding, there are a lot of apps changing file permissions without any warnings, or they simply don't work with SMB share ACLs. That's the main reason why you should not disable that setting and instead re-organize your pool datasets properly. This will also be beneficial for future Bluefin upgrades. As a side note, the @truecharts team does not offer storage related support for their applications if you disable the hostPath validation.

Datasets Structure Organization

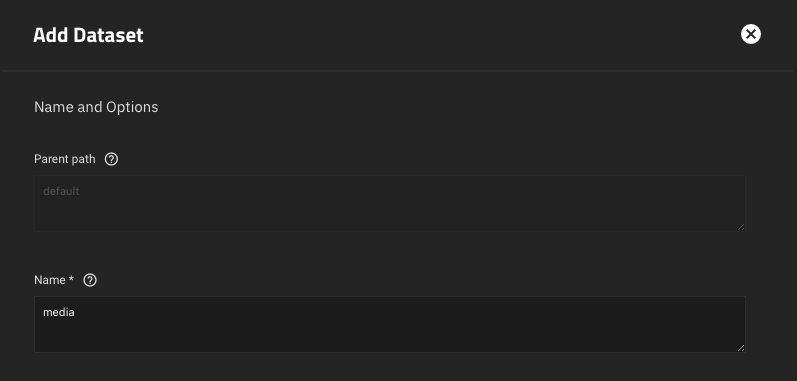

You can easily organize your pool datasets structure with the zfs rename command, thank you @winnielinnie for the useful information.

The goal is to move all data from media dataset:

Into media/center child dataset:

➊ Use the zfs command as root user, to rename the dataset you plan to move into a child dataset:

Code:

# zfs rename default/media default/media-old

➋ In the UI, create a new media dataset:

➌ Rename the media-old dataset to center child dataset:

Code:

# zfs rename default/media-old default/media/center

The rename process is instant and the recordsize setting will be preserved.

New dataset usage in TrueCharts Plex application, change only the hostPath and your Plex media files will not be affected in any way:

SMB Shares Configuration

Once your datasets structure is properly organized, proceed with the SMB shares configuration.

The main concern is having

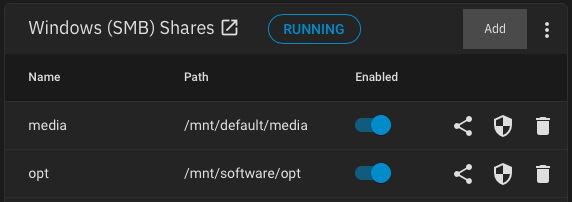

I currently have two SMB shares set:

For any SMB connectivity issues, you can troubleshoot with following command:

Organize your pool datasets, preferably following Linux standard naming conventions (read the Pools and Datasets section, for important dataset optimizations):

To avoid any

To access your SMB shares, use the previously created UID

You will not encounter any issues or warnings if you make sure the

Changing the dataset ACL Type to SMB/NFSv4 will result in breaking the file permissions and cannot be reversed, as detailed into warning:

As per Kubernetes documentation, the

Example of re-used internal directory, into an application:

ix-applications dataset isolated, it should not be accessed by anyone except root user. Currently, the ix-applications dataset is allowed to be read by anyone and permissions cannot be changed. For example, a non-root local user should:- not be able to access

ix-applicationsdataset through an SMB share, see thread - be able to read/write data using a

hostPathvolume assigned to a child dataset, accessible by multiple pods

- Enable Apple SMB2/3 Protocol Extensions, if you connect the shares to a Mac

- Set the Administrators Group to user UID 3000 you created earlier

I currently have two SMB shares set:

- media - mounted on media dataset, where center child dataset is used for shared files between multiple applications

- opt - mounted on opt dataset, where downloads child dataset is used for shared files between multiple applications

For any SMB connectivity issues, you can troubleshoot with the following command:

Code:

# midclt call smb.status AUTH_LOG | jq

Organize your pool datasets, preferably following Linux standard naming conventions (read the Pools and Datasets section, for important dataset optimizations):

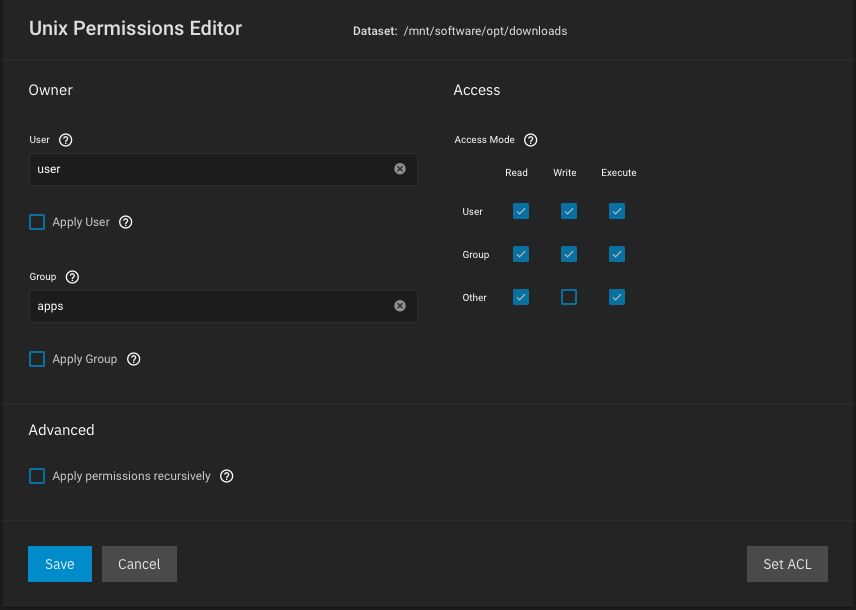

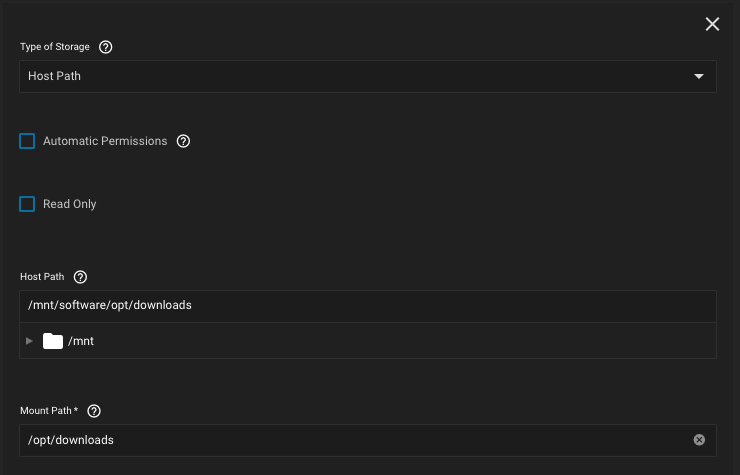

To avoid any

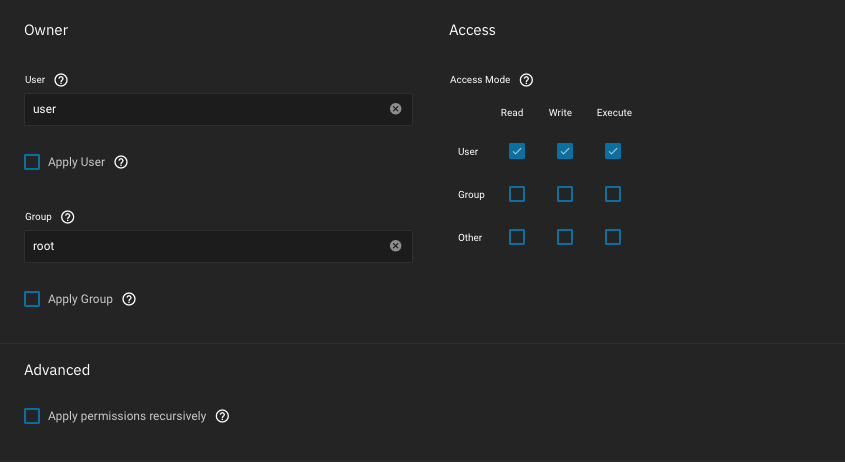

hostPath conflicts, set your SMB share to /mnt/software/opt and your application hostPath to /mnt/software/opt/downloads. Assigning an SMB share directly to /mnt/software pool is not considered good practice.To access your SMB shares, use the previously created UID

3000, which must have proper permissions to all your datasets (except ix-applications dataset, which should never be exposed or accessed by an end-user)./mnt/software/opt/downloads dataset permissions, see documentation for setting POSIX ACLs:You will not encounter any issues or warnings if you make sure the

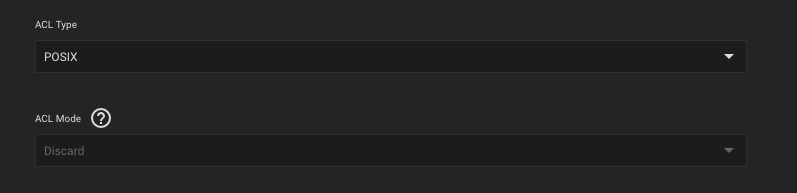

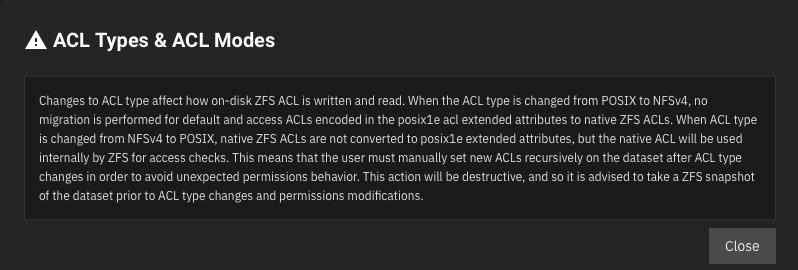

/mnt/software/opt SMB share is not directly accessed by multiple applications, the SMB share user matches the runAsUser UID and the dataset ACL Type is set to POSIX (default option), which is supported by Linux, Mac and Windows:Changing the dataset ACL Type to SMB/NFSv4 will result in breaking the file permissions and cannot be reversed, as detailed into warning:

As per Kubernetes documentation, the

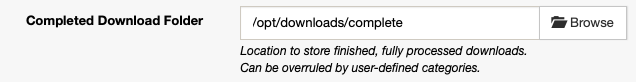

hostPath volume must be mounted as Read Only. Since we are using POSIX ACLs and a single non-root local user controls the SMB share, as well all applications, an exception can be made. Example of /mnt/software/opt/downloads dataset shared with multiple applications who access the internal pod /opt/downloads directory to read/write data:Example of re-used internal directory, into an application:

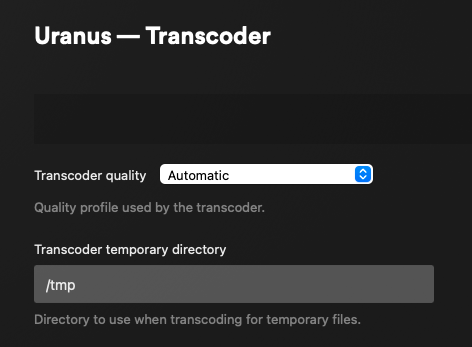
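The ownership requirement described above can also be checked from the shell. The following is a minimal sketch, assuming GNU stat (as shipped with Debian) and the example path and UID 3000 from this section; owned_by_uid is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: check that a shared directory is owned by the expected UID.
# 3000 is the non-root local user UID used in this section.

# Succeeds when the numeric owner of directory $1 equals the UID given in $2.
owned_by_uid() {
    [ "$(stat -c '%u' "$1")" = "$2" ]
}

dir=/mnt/software/opt/downloads
if [ -d "$dir" ]; then
    if owned_by_uid "$dir" 3000; then
        echo "$dir is owned by UID 3000"
    else
        echo "$dir is NOT owned by UID 3000" >&2
    fi
    # Show the POSIX ACL entries, if the getfacl tool is installed.
    if command -v getfacl >/dev/null 2>&1; then
        getfacl "$dir"
    fi
fi
```

If the owner does not match, fix the permissions through the dataset permissions editor rather than from the shell, so the UI and the filesystem stay in agreement.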

Plex Transcoding

Media that is incompatible with your device will be transcoded to a playable format. If you own a Plex compatible GPU, you can turn on Hardware-Accelerated Streaming in Plex Media Server. It is strongly recommended to create a Memory medium storage type, used specifically for transcoding.

Using a Memory medium keeps the temporary transcode files in RAM instead of on disk, which will significantly improve the transcoding performance. In your TrueCharts Plex application, add:

In Plex Media Server, go to Settings > Transcoder and set the temporary directory:

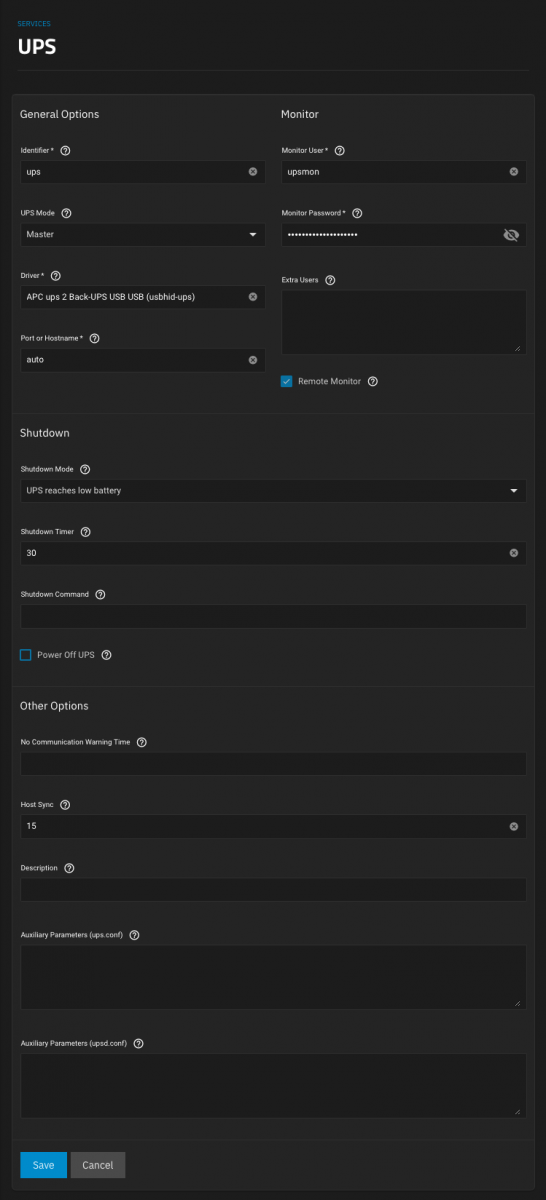
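To verify that the transcoder directory really is memory-backed, you can check its filesystem type from a shell inside the Plex pod. This is only a sketch: it assumes GNU stat is available in the container, and /transcode is a hypothetical mount path, so replace it with the path you configured. A Memory medium volume should report tmpfs.

```shell
#!/bin/sh
# Sketch: report the filesystem type backing a directory.
# A Memory medium volume is expected to show up as "tmpfs".

# Print the filesystem type of the path given in $1 (GNU stat).
fs_type() {
    stat -f -c '%T' "$1"
}

# /transcode is a hypothetical mount path; use the one set in your application.
transcode_dir=/transcode
if [ -d "$transcode_dir" ]; then
    echo "$transcode_dir is backed by: $(fs_type "$transcode_dir")"
fi
```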

Uninterruptible Power Supply

It is recommended to use a UPS to ride out brief power failures, which would otherwise abruptly shut down your server. These interruptions are known to corrupt pool data, so a UPS is worth the investment.

Identify the UPS model and driver:

In my case, the scanner detected an UPS unit connected to USB port. The only important information you will need is the driver

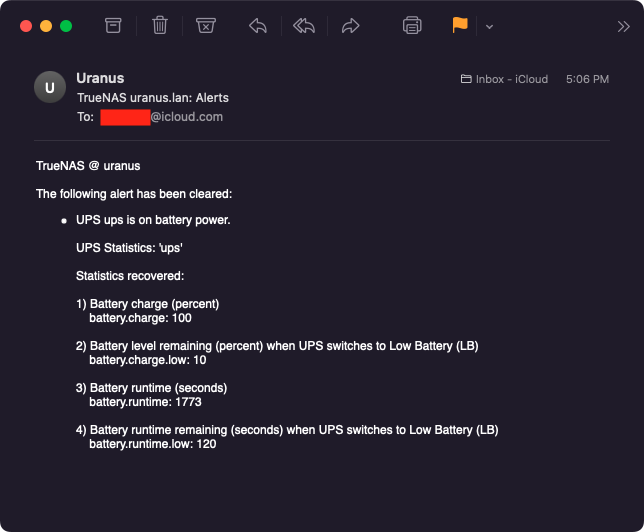

If you configured correctly your email alerts, in case of a power failure, you will receive an alert similar to:

Code:

# nut-scanner

Neon library not found. XML search disabled.

Scanning USB bus.

No start IP, skipping SNMP

No start IP, skipping NUT bus (old connect method)

Scanning IPMI bus.

[nutdev1]

driver = "usbhid-ups"

port = "auto"

vendorid = "051D"

productid = "0002"

product = "Back-UPS NS 1500M2 FW:957.e4 .D USB FW:e4"

serial = "5B2150T61261"

vendor = "American Power Conversion"

bus = "001"

[nutdev2]

driver = "nut-ipmipsu"

port = "id1"

[nutdev3]

driver = "nut-ipmipsu"

port = "id2"

In my case, the scanner detected an UPS unit connected to USB port. The only important information you will need is the driver

usbhid-ups, the product name might vary. Into UPS service, configure the settings as listed below and pay attention to:- Driver - match the found driver, in our example

usbhid-ups - Port - match the found port, in our example

auto - Monitor Password - set a random complex password, you will not use it as end-user
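If you prefer not to read the scanner output by eye, the driver and port values can be filtered out with awk, and a random monitor password can be generated at the same time. This is a rough sketch, not part of the original setup; list_nut_devices and random_password are hypothetical helper names, and the 32-character alphanumeric password format is an assumption chosen to avoid special characters.

```shell
#!/bin/sh
# Sketch: pull the driver and port values out of nut-scanner output.

# Reads nut-scanner output on stdin, prints "[section] driver port" per device.
list_nut_devices() {
    awk -F'"' '
        /^\[/                 { section = $0 }
        /^[ \t]*driver[ \t]*=/ { driver = $2 }
        /^[ \t]*port[ \t]*=/   { print section, driver, $2 }
    '
}

# Print a random 32-character alphanumeric string for the Monitor Password.
random_password() {
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c 32
    echo
}

# Only scan on a host where nut-scanner is installed.
if command -v nut-scanner >/dev/null 2>&1; then
    nut-scanner 2>/dev/null | list_nut_devices
fi
random_password
```

For the scanner output shown above, the device line to look for is the one with the usbhid-ups driver; the nut-ipmipsu entries are power supplies detected over IPMI, not the UPS.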

If you configured your email alerts correctly, you will receive an alert similar to the following in case of a power failure:
