Brandito

Yeah, I have 91TB on the disks. I have 3 spares and some room on another NAS, but not a contiguous 91TB free anywhere. I would most likely need a second disk shelf.

The checksums have increased again

Code:

    root@truenas[~]# zpool status
      pool: Home
     state: ONLINE
    status: One or more devices has experienced an error resulting in data
            corruption. Applications may be affected.
    action: Restore the file in question if possible. Otherwise restore the
            entire pool from backup.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-8A
      scan: resilvered 1.32T in 02:06:45 with 0 errors on Sat Nov 11 13:45:36 2023
    config:

            NAME                                      STATE     READ WRITE CKSUM
            Home                                      ONLINE       0     0     0
              raidz2-0                                ONLINE       0     0     0
                a7d78b0d-f891-11ed-a2f8-90e2baf17bf0  ONLINE       0     0    33
                a7b00eef-f891-11ed-a2f8-90e2baf17bf0  ONLINE       0     0    33
                a7d01f81-f891-11ed-a2f8-90e2baf17bf0  ONLINE       0     0    33
                a7c951e3-f891-11ed-a2f8-90e2baf17bf0  ONLINE       0     0     0
                a7bfef1b-f891-11ed-a2f8-90e2baf17bf0  ONLINE       0     0     0
                e4f37ae1-f494-4baf-94e5-07db0c38cb0c  ONLINE       0     0     0
              raidz2-1                                ONLINE       0     0     0
                8cca2c8f-39ee-40a6-88e0-24ddf3485aa0  ONLINE       0     0     2
                74f3cc23-1b32-4faf-89cc-ba0cd72ba308  ONLINE       0     0    46
                4e5f5b16-6c2b-4e6b-a907-3e1b9b1c4886  ONLINE       0     0    46
                cde58bb6-9d8e-4cdc-a1bf-847f459b459b  ONLINE       0     0    46
                58c22778-521b-4e8f-aadd-6d5ad17a8f68  ONLINE       0     0     2
                33633f68-920b-4a40-bd4d-45e30b6872bc  ONLINE       0     0     2
              raidz2-2                                ONLINE       0     0     0
                2a2e5211-d4ea-4da9-8ea5-bdabdc542bdb  ONLINE       0     0     0
                56c07fd7-6cb6-4985-9a20-2b5ff9d42631  ONLINE       0     0     0
                1147286d-8cd8-4025-8e5d-bbf06e2bd795  ONLINE       0     0    44
                7e1fa408-7565-4913-b045-49447ef9253b  ONLINE       0     0    44
                3d56d2fa-d505-4bea-b9a2-80c121e4e559  ONLINE       0     0    44
                a9906b32-2690-4f7b-8d8f-00ca915d8f3d  ONLINE       0     0     0
              raidz2-5                                ONLINE       0     0     0
                b8c63108-353b-4ed7-a927-ca3df817bd21  ONLINE       0     0     0
                58782264-02f1-41c6-9b91-d07144cb0ccb  ONLINE       0     0    32
                03df98a5-a86d-4bc8-879a-5cf611d4306c  ONLINE       0     0    32
                022c7ffb-0a07-45cb-b3af-ad1730a08054  ONLINE       0     0    32
                a5786a1f-a7ad-4a30-877a-88a03c94a774  ONLINE       0     0     0
                4c59238e-5cbd-428e-8a72-a018d9dae9c2  ONLINE       0     0     0
            logs
              mirror-6                                ONLINE       0     0     0
                5ba1f70b-be51-470f-94ed-777683425477  ONLINE       0     0     0
                f2605776-46a9-4455-a4bc-322d4cf8a688  ONLINE       0     0     0

    errors: 2 data errors, use '-v' for a list

It's extra odd that it tells me to do a zpool status -v for a list, but when I do that it doesn't actually list anything.

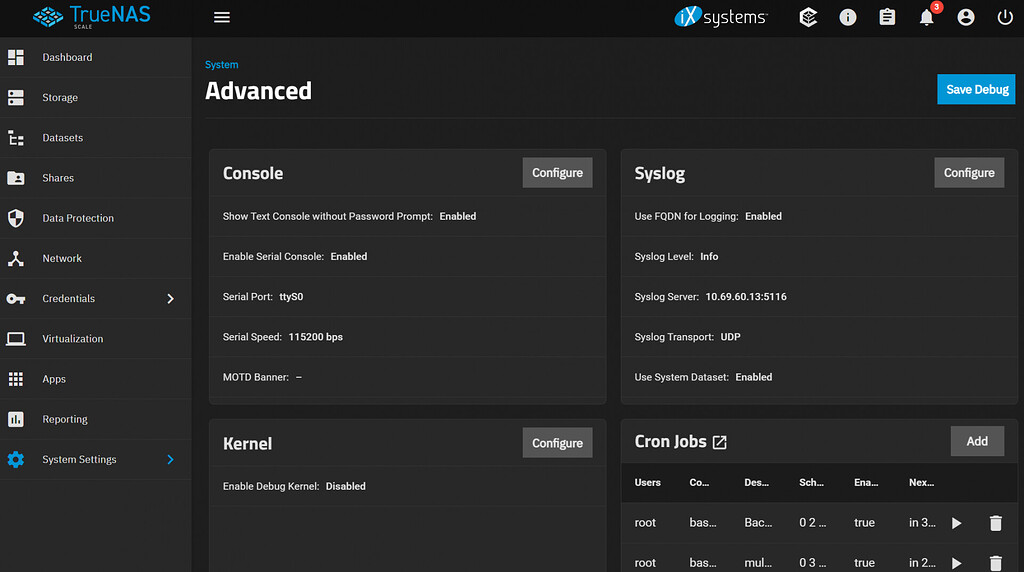
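
The per-disk CKSUM counts in the status output above are easier to compare when grouped by vdev. Below is a minimal Python sketch of that grouping, assuming the usual NAME STATE READ WRITE CKSUM column order. The helper name `cksum_by_vdev` and the vdev-name pattern are assumptions made for illustration, not part of any ZFS tooling, and the sample only includes a few rows copied from the output.

```python
import re
from collections import defaultdict

# Assumption: vdev rows are named like "raidz2-0" or "mirror-6".
VDEV_RE = re.compile(r"^(raidz\d*|mirror)-\d+$")

def cksum_by_vdev(status_text):
    """Group per-disk CKSUM counts under the vdev row that precedes them.

    Hypothetical helper: rows whose CKSUM field is not a plain integer
    are skipped rather than interpreted.
    """
    counts = defaultdict(list)
    current = None
    for line in status_text.splitlines():
        fields = line.split()
        # Device rows have exactly five columns ending in a numeric CKSUM.
        if len(fields) != 5 or not fields[4].isdigit():
            continue
        name, cksum = fields[0], int(fields[4])
        if VDEV_RE.match(name):
            current = name          # start of a new vdev group
        elif current is not None:
            counts[current].append(cksum)
    return dict(counts)

# A few rows copied from the status output in this post.
sample = """\
NAME STATE READ WRITE CKSUM
Home ONLINE 0 0 0
raidz2-0 ONLINE 0 0 0
a7d78b0d-f891-11ed-a2f8-90e2baf17bf0 ONLINE 0 0 33
a7c951e3-f891-11ed-a2f8-90e2baf17bf0 ONLINE 0 0 0
raidz2-1 ONLINE 0 0 0
8cca2c8f-39ee-40a6-88e0-24ddf3485aa0 ONLINE 0 0 2
74f3cc23-1b32-4faf-89cc-ba0cd72ba308 ONLINE 0 0 46
"""

for vdev, cks in cksum_by_vdev(sample).items():
    print(vdev, cks)
```

Pasting the full `zpool status` text into `sample` would list every vdev the same way. The pool row itself (`Home`) is ignored because it appears before any vdev row.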

With the swap change, I don't really recall adding or removing the swap. I believe it was enabled when I started on CORE, but I quickly migrated to SCALE after a few weeks. What I do recall doing was moving the system dataset from the boot-pool to another pool. I thought it was my Home pool, but that can't be right, because I know I tried moving it there when this whole issue started. Does that affect the swap location? The two options are in the same menu, so I assume it does?

When I got the error that started this thread, I tried moving the system dataset to my Home pool again, thinking it would add the swap partition. Then I offlined a single drive in the new vdev and replaced it with itself, again assuming that it would add the swap partition. Is it possible that caused the corruption? Like I mentioned before, the resilver was strange: it started with an estimate of 1 day to complete and then actually completed within hours.

I am still using the "new to me" HBAs and brand new cables, but the cables are likely not as good as the original SFF cables I had, which were Supermicro branded.

Should I shut down and at least swap the cables? I'm just worried about taking the machine offline now.
