Import on the command line:

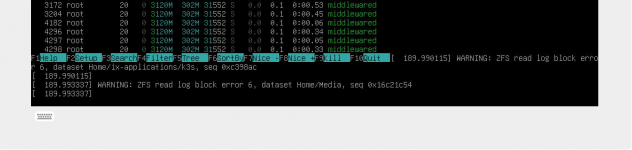

zpool import -o altroot=/mnt <poolname>

If that works, post the output of:

zfs list
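If you want to run both steps in one go, here is a minimal sketch that wraps them in a shell function. The function name `import_and_list` is just made up for this example. `<poolname>` is still whatever your pool is actually called, and it goes in as the first argument. `altroot=/mnt` prepends `/mnt` to every dataset mountpoint, so the imported datasets end up under `/mnt` and don't land on the running system's paths. `zfs list` only runs if the import succeeded.

```shell
#!/bin/sh
# Hypothetical helper (not a TrueNAS/ZFS command): import a pool under an
# alternate root, then list datasets. Usage (as root): import_and_list <poolname>
import_and_list() {
    pool="$1"
    if [ -z "$pool" ]; then
        echo "usage: import_and_list <poolname>" >&2
        return 2
    fi
    # altroot=/mnt prefixes each dataset's mountpoint with /mnt.
    if zpool import -o altroot=/mnt "$pool"; then
        # Import worked: show the datasets so the output can be posted.
        zfs list
    else
        echo "zpool import of '$pool' failed" >&2
        return 1
    fi
}
```

Example: `import_and_list tank`, then copy whatever `zfs list` prints into your reply.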
