@awil95

This is a bit of a can of worms I'm getting into, as these kinds of questions are generally met with responses somewhere along the lines of "The way it is now is the most proper, get used to it." The tl;dr is that in my opinion, this is insane.

I'll start off by acknowledging that this whole thing is a tricky situation with no real proper way to do it, due to the complications of pooled storage; however, I do think there's a pretty good compromise that I'll touch on at the end.

Largely, I've seen systems have Samba configured two ways by default in regards to this:

1) Report the entire pool's used and free space for all shares.

This is what UNRAID does.

Let's say you had a pool of 50TB and regardless of how your data was laid out 35TB were in use. Then let's say you have 3 shares, "Media", "Documents", "Games".

They would all appear like this:

- Media: 15TB free of 50TB

- Documents: 15TB free of 50TB

- Games: 15TB free of 50TB

As space gets consumed, the loss of free space is reflected across all shares.
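As a rough sketch of what approach (1) amounts to, here is the example above in Python. The function name `pool_wide_view` is made up for illustration; this only models the numbers and is not how UNRAID or Samba actually computes them.

```python
def pool_wide_view(pool_total_tb, pool_used_tb, share_names):
    """Approach 1: every share reports the whole pool's capacity and free space."""
    pool_free_tb = pool_total_tb - pool_used_tb
    return {name: {"free": pool_free_tb, "total": pool_total_tb} for name in share_names}


# 50TB pool with 35TB in use, regardless of which share holds the data.
views = pool_wide_view(50, 35, ["Media", "Documents", "Games"])
for name, view in views.items():
    print(f"{name}: {view['free']}TB free of {view['total']}TB")
```

Note that per-share usage never enters the calculation, which is why the used space shown on each share is the pool's overall figure.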

This certainly keeps things the most simple in terms of being aware of space consumption, but really leaves a lot to be desired in terms of each share having a real sense of owning its own space. It also starts to break down pretty badly if you want to restrict certain shares to certain users, since they then might not be aware of all the shares that might result in space being consumed.

This approach prioritizes making capacity and free space intuitive, but makes used space confusing, since it's overall and not per-share.

2) Determine the capacity of a share via USED + FREE (*shudders*)

Obviously this is how TrueNAS configures Samba, and while I do think it's better than (1), it's not without its fair share of issues.

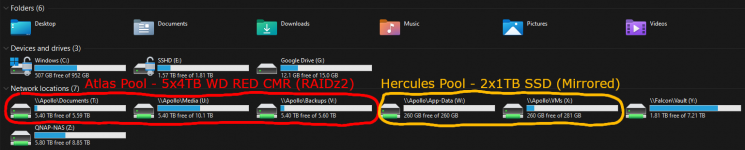

First off, right out of the gate there is a problem with how this looks when mounted in Windows.

In this example, none of my shares have access limitations and none of my shares have reservations or quotas. Despite this, the capacities obviously vary. We KNOW why this is the case: the capacity here is basically an afterthought, with used and free space being the primary sources of data to create these views of the shares. Although it makes technical sense and is ideal in terms of used and free space, to me it's not very intuitive and goes completely against how capacity is typically represented with local storage.

If you don't mind this setup because you primarily care that used and free space are handled "correctly", then more power to you. But for me at least, this drives me nuts.

Why does Games magically have more capacity because I put more in it? That doesn't make any sense at all. You can keep pointing back to USED + FREE but that's only so golden in a vacuum (where capacity is an afterthought), and in my opinion the shares should ideally make sense from all directions.

This approach prioritizes making used and free space intuitive, but makes capacity confusing since it ends up varying depending upon what's going on in other shares.
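To see why the capacity moves around under approach (2), here is a minimal Python sketch. The per-share usage figures are hypothetical (they just add up to the 35TB from the earlier example), the name `used_plus_free_view` is invented, and the model ignores snapshots, reservations, and compression.

```python
def used_plus_free_view(share_used_tb, pool_total_tb):
    """Approach 2: each share's capacity is its own USED plus the pool-wide FREE."""
    pool_free_tb = pool_total_tb - sum(share_used_tb.values())
    return {
        name: {"used": used, "free": pool_free_tb, "total": used + pool_free_tb}
        for name, used in share_used_tb.items()
    }


# Hypothetical usage on a 50TB pool: 20 + 5 + 10 = 35TB in use.
shares = {"Media": 20, "Documents": 5, "Games": 10}
for name, view in used_plus_free_view(shares, 50).items():
    print(f"{name}: used {view['used']}TB, free {view['free']}TB, capacity {view['total']}TB")
```

Every share shows the same 15TB free, but the capacities come out as 35TB, 20TB, and 25TB purely because of how much each share already holds.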

It's better to think about it in the reverse:

Imagine a pool with a total capacity of 10 TB. You configured multiple shares (independent of each other) to access via client PCs.

On one of these shares ("media"), there exists 2 TB worth of data on it. (Accessible to anyone and everyone.)

On another share ("archives"), you dump 5 TB worth of data onto it. (Accessible and known only to admins.)

It would still be just as strange to look at your "media" share's properties to see:

- Used space: 2 TB

- Total capacity: 10 TB

- Free space: 3 TB

Imagine a non-admin user sitting at her desk, scratching her head looking at the "media" share: "Wait, I only saved 2 TB of stuff on here. But it's saying I only have 3 TB of space remaining? It's 10 TB total capacity! Where'd the other missing 5 TB go?!"

However, on the flip-side (as it is currently employed), she would actually see:

- Used space: 2 TB

- Total capacity: 5 TB

- Free space: 3 TB

"Oh wow. I only have 3 TB left that I can dump multimedia in this share. Looks like I can't exceed more than 5 TB in total."

While this example highlights the strength of the current approach, it also demonstrates its weakness.

So at this point the user finished with the thought "Ok, there is 5 TB total, I can fit 3TB more of stuff on here". Now let's say they think "Cool, tomorrow I'll bring in my 2TB movies drive from home to share with everyone else and dump them on there." But what they don't know is that overnight the admins decided to archive away 1.5TB more data into that share they can't even see. Since that free space figure is for the entire pool, they get punked. They come back the next day, connect their drive and open up Explorer to now see:

- Used space: 2TB

- Total capacity: 3.5 TB

- Free space: 1.5 TB

And now, in an oh so familiar situation they go "Wait, the amount of used space didn't change, no one put any more files on this drive, and yet the free space suddenly is much less. Where did the other 1.5TB go? I can't fit my movies anymore". Then it just looks like the admins were mean and decided to take media space away from everybody for no reason. This would look even worse if the share was just for that user specifically, as at least with a common share it's vaguely understandable that all of the free space isn't yours.

Obviously, we understand why this happened, but I don't think it's reasonable to expect users, who shouldn't have to care how their storage is physically implemented, to understand this. Not to mention it basically means their free space is a lie and that at any time it could be reduced by the actions of others, even on personal shares.
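The before/after arithmetic in that story can be checked with a few lines of Python. This is only a sketch of the USED + FREE model using the 10 TB pool from the example; `media_view` is a made-up name, not anything Samba or TrueNAS provides.

```python
def media_view(media_used_tb, archives_used_tb, pool_total_tb=10):
    """What the "media" share reports when its capacity is USED + pool-wide FREE."""
    pool_free_tb = pool_total_tb - (media_used_tb + archives_used_tb)
    return {
        "used": media_used_tb,
        "total": media_used_tb + pool_free_tb,
        "free": pool_free_tb,
    }


before = media_view(2, 5)    # what the user saw the first day
after = media_view(2, 6.5)   # admins archived another 1.5 TB overnight
print(before)
print(after)
print("capacity that vanished:", before["total"] - after["total"], "TB")
```

The "media" share's own usage never changes between the two calls, yet both its free space and its total capacity drop by 1.5 TB.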

To me this concept of just accepting "dynamic capacity" is too awkward.

My Approach:

The way I handle it, while not perfect, I think is better than either of these defaults. It institutes the paradigm that is more common with non-pool storage, FREE = TOTAL - USED. Same math, but the difference here is that capacity isn't dynamic, it's free/used space that are, as is traditional.

One word: Quotas. Yes, they were technically mentioned in this very thread (barely), and yes, no one said not to use them, but I'm really shocked at how rarely they're recommended when people complain about the way SMB shares on TrueNAS show their capacity, as it's basically the perfect middle ground IMO. I don't see reservations mentioned too often either. I think it would have gone a long way in the many other threads like these, where people ask how to make their shares more intuitive for them, if instead of basically saying "the way it is by default is fine", people at least told them to look into quotas and reservations.

Anyway, this is the approach:

Think of your whole pool (or the portion of it dedicated to SMB shares) as it literally is. A giant pool of abstracted/virtual storage available to divvy up however you want. You can think of your network like a single workstation that may or may not be shared by multiple users, and some of those users may or may not be able to see a given share. By always setting quotas on your shares, you treat them as if they were physical drives installed on a real machine and as such they appear and behave exactly like one. When you mount shares with quotas, Windows (and the Linux distros I've used) will show the quota as the capacity, with the free and used space being specific to that share/dataset as well.

Note these are the same shares as before, but now with quotas on them.

To keep it simple, here is an example with one user that isn't you, we'll call them Dylan.

You have your pool of 50TB and you decide to give Dylan 2TB of that for their own share, so you make a dataset for them, set a 2TiB quota on it, and then make it a share that they can access. This is just as if you had installed a 2TB disk in their machine. It looks like one, it acts like one, and it would be the most familiar to them as a regular user.
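For anyone doing this from a shell rather than the web UI, the underlying ZFS operation is `zfs set quota=<size> <dataset>`. The Python sketch below only builds and prints that command rather than running it. The dataset name `tank/dylan` and the helper `zfs_quota_command` are hypothetical, and whether you want `quota` or `refquota` (which does not count snapshots and child datasets) depends on your setup.

```python
import shlex


def zfs_quota_command(dataset, size):
    """Build (but do not run) the `zfs set quota=...` command for a dataset."""
    return ["zfs", "set", f"quota={size}", dataset]


# "tank/dylan" is a placeholder dataset name. ZFS reads the "T" suffix as TiB.
cmd = zfs_quota_command("tank/dylan", "2T")
print(shlex.join(cmd))
```

Passing the resulting list to `subprocess.run(cmd, check=True)` on the NAS itself would apply it, assuming you have the privileges to change dataset properties.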

Then, eventually their "2TB drive" starts to get a little close to full and they come to you complaining. The solution? Easy! Just increase the quota (assuming you have and are willing to give up the extra space). Again, this is just as if you went out and bought a new drive for them (let's say 4TB) and then upgraded their machine physically, just with the convenience of not having to do data migration. Now they have their "4TB drive" and all is well. Rinse and repeat whenever needed.
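Here is a small Python sketch of what Dylan's share reports before and after the bump. The 1.5TB used figure is made up, `quota_view` is an invented name, and the model assumes the pool actually has enough free space to back the quota.

```python
def quota_view(quota_tb, used_tb):
    """What a share with a quota reports: capacity is the quota, FREE = TOTAL - USED."""
    return {"used": used_tb, "total": quota_tb, "free": quota_tb - used_tb}


print(quota_view(2, 1.5))  # Dylan's "2TB drive" getting close to full
print(quota_view(4, 1.5))  # same data after the quota is raised to 4TB
```

Only the share's own quota and usage appear in the math, so nothing another share does changes what Dylan sees.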

Even if you originally never intended to limit a certain share and essentially have to pick a quota arbitrarily, it's fine. Just start with one that roughly makes sense and you can enlarge it later if need be.

Overall:

- The behavior and appearance of regular local storage is most closely replicated

- Used, free, and total space all make intuitive sense

- No space can go "missing"

- Free space is never unexpectedly robbed

- There is motivation to better regulate space consumption, as you have to stop and think every time before increasing a quota. "Do I really need 5 more TB of cat pictures on my pool in this cat pictures dataset/share? Maybe instead I should delete some old ones." It's harder to accidentally run up against your pool capacity as you have a much clearer sense of what's happening to space as it's consumed.

Is it perfect and suitable for all ZFS/SMB use cases? Certainly not. But for traditional "access/share remote data" SMB shares like these, I think it's the most ideal by far. It leaves zero room for confusion while using the shares and adds a minimal amount of forethought and maintenance to your pool.

Another way to think about it, if you happen to be familiar with virtual machines, is that it's like having a hypervisor and using your physical drive's space to segment out room for each virtual machine's virtual disks, with the disks using thin provisioning. It just requires thinking ahead a little, and not by much, as you can always change the quotas later.

The only "caveat" is that you have to make sure that the total size of all your quotas doesn't exceed your pool size. Nothing bad will happen if it does, it's just that you basically then lose the benefits of this approach.
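A quick way to sanity-check that caveat is to add up the quotas and compare against the pool size. The quota values below are hypothetical and `oversubscribed_by` is an invented helper; in practice you would read the real values from your datasets.

```python
def oversubscribed_by(pool_size_tb, quotas_tb):
    """Return how many TB the quotas exceed the pool by (0 if they fit)."""
    return max(0, sum(quotas_tb.values()) - pool_size_tb)


# Hypothetical quotas on a 50TB pool.
quotas = {"Media": 30, "Documents": 5, "Games": 10, "Dylan": 4}
print(oversubscribed_by(50, quotas))   # quotas total 49TB, so this fits

quotas["Media"] = 35                   # bump one quota without checking
print(oversubscribed_by(50, quotas))   # quotas now total 54TB
```

A non-zero result means some share's advertised free space can no longer be guaranteed, which is exactly the situation the quotas were meant to avoid.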

Maybe it's a bit OCD, but unless I specifically need a massive dataset with an unfixed capacity, I like having a more well-defined structure and extents for my data (and pretty much everything else I touch software-wise).