Hello again,

Sorry if this is a total beginner question, but I probably didn't find the right passage in the documentation.

I have two storage pools: mars (two mirrored SSDs) and neptune (4 HDDs in raidz2).

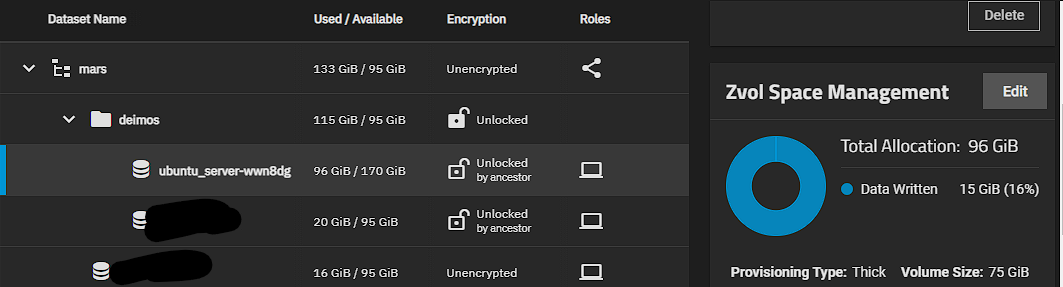

I created a 75 GB zvol for Ubuntu (or rather, it got created during creation of the VM). I replicate that to my HDD pool.

Now two things I observed:

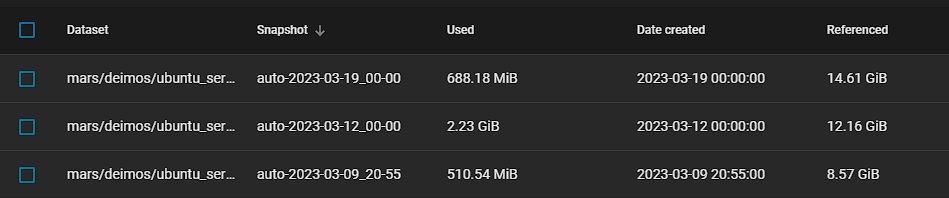

1) The total used size exceeds the allocated zvol size. I assume this is because the snapshots consume space and that space has to be accounted for somewhere. However, I can't derive the additional space (21 GB) from the snapshots: their used space is much smaller than that, while their referenced space (for all 3) is much larger.
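
To make point 1 concrete, here is a toy Python model of how I understand snapshot accounting from the property descriptions: a snapshot's `used` counts only blocks that neither the live zvol nor any other snapshot still references, while `usedbysnapshots` counts every block that is held only by snapshots. The block IDs and the function name are made up for illustration and are not taken from my pool.

```python
def snapshot_accounting(live, snapshots):
    """Toy model of snapshot space accounting (my assumption, not ZFS code).

    live:      set of block IDs referenced by the live dataset
    snapshots: dict mapping snapshot name -> set of block IDs it references
    Returns (per-snapshot unique block counts, blocks held only by snapshots).
    """
    per_snapshot_used = {}
    for name, blocks in snapshots.items():
        # Everything referenced by the live dataset or by any OTHER snapshot.
        others = set(live)
        for other_name, other_blocks in snapshots.items():
            if other_name != name:
                others |= other_blocks
        # A snapshot's "used" is only what it alone keeps alive.
        per_snapshot_used[name] = len(blocks - others)

    # Blocks that would be freed if ALL snapshots were destroyed.
    all_snapshot_blocks = set().union(*snapshots.values())
    used_by_snapshots = len(all_snapshot_blocks - live)
    return per_snapshot_used, used_by_snapshots


# Hypothetical block IDs: each snapshot overlaps with its neighbours.
live = {4, 5, 6, 7}
snapshots = {
    "snap1": {1, 2, 3, 4},
    "snap2": {2, 3, 4, 5},
    "snap3": {3, 4, 5, 6},
}

per_snapshot_used, used_by_snapshots = snapshot_accounting(live, snapshots)
print(per_snapshot_used)   # unique blocks per snapshot
print(used_by_snapshots)   # blocks held by the snapshots as a group
```

In this toy case each snapshot references 4 blocks, the per-snapshot used values sum to 1 block, and the snapshots together hold 3 blocks, which might be part of why the numbers in my screenshots don't add up. The sketch does not cover the zvol's refreservation, which as far as I know is also counted in the total used.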

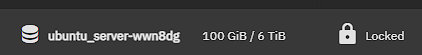

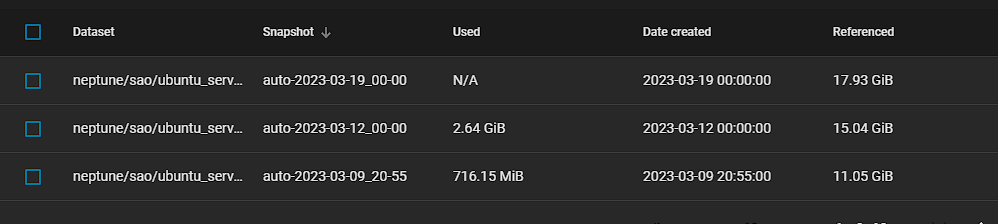

2) On the HDD pool the sizes are different (although LZ4 is enabled on both pools). I read somewhere that this may be because raidz2 has a different space overhead than a mirrored vdev.

Can someone explain or point me in the right direction to understand the fundamentals of that?

I'm not really space constrained, so practically I don't care too much about that; however, I'm curious.

Best regards!
