Brandito

Explorer

- Joined: May 6, 2023
- Messages: 72

sent, thanks for the help btw

Can you PM me a debug?

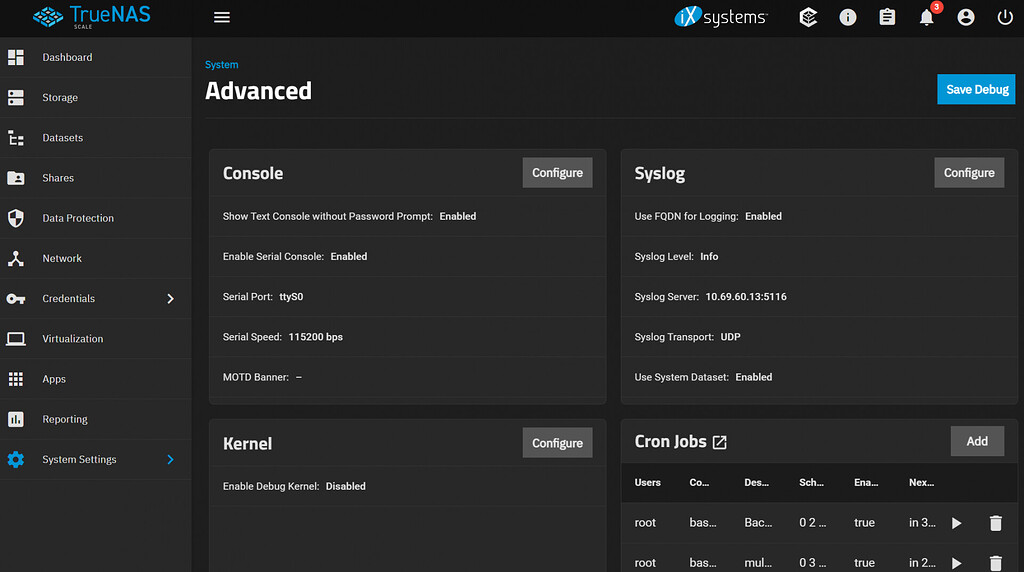

What is a Debug and Why does (NickF think) it Matters?

This resource was originally created by user: @NickF1227 on the TrueNAS Community Forums Archive. NOTE: This script is provided as-is and is not endorsed by TrueNAS or iXsystems. Use at your own risk. Disclaimer: This is a living document and I expect to revise it often-ish. It’s also a...

www.truenas.com