I feel like I should know better than to make another "why is TrueNAS slow" post, but I really can't figure this one out.

I often store large (50+ GB) files on one dataset and then transfer them to another dataset within the same pool. On TrueNAS 12-U8 I was getting about 1.2GBps out of the box on fresh reads, and about 1.5GBps on reads from ARC. Adding only the tunable `vfs.zfs.zfetch.max_distance`, set to 2147483648, increased speeds to about 1.5GBps on fresh reads and over 2GBps reading from ARC (i.e. copying the same file again). I tried lower values for that tunable, and performance kept increasing until I hit that number, so I stuck with it. Yes, I know it's way beyond the default.
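For reference, 2147483648 bytes is exactly 2 GiB (2^31), and the 12-U8 numbers above amount to roughly a 25% gain on fresh reads and at least a 33% gain from ARC. A quick Python check of that arithmetic, treating "over 2GBps" as 2.0 GB/s (a lower bound, so the ARC gain is a minimum):

```python
# Arithmetic check of the vfs.zfs.zfetch.max_distance value and the
# TrueNAS 12-U8 speedups quoted in the post (speeds in GB/s, approximate).
MAX_DISTANCE = 2147483648  # bytes, the value set via the tunable


def gib(n_bytes: int) -> float:
    """Convert bytes to GiB (1 GiB = 1024**3 bytes)."""
    return n_bytes / 1024**3


def pct_gain(before: float, after: float) -> float:
    """Percentage improvement going from `before` to `after`."""
    return (after - before) / before * 100


fresh_gain = pct_gain(1.2, 1.5)  # fresh reads: tunable unset -> set
arc_gain = pct_gain(1.5, 2.0)    # ARC reads; 2.0 stands in for "over 2GBps"

print(f"max_distance = {gib(MAX_DISTANCE):.0f} GiB")
print(f"fresh-read gain: {fresh_gain:.0f}%")
print(f"ARC-read gain: at least {arc_gain:.0f}%")
```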

File transfers have been attempted both over SMB from Windows 11, where server-side copy takes effect, and with a simple cp command via ssh. I can easily download over the 10Gbps network at 1GBps (gigabytes, not bits), so there are definitely no network issues. In fact, I can transfer ten 50GB files at the same time and still saturate the 10Gb link without the disks breaking a sweat. So to me, this is not a "why is my 10Gb network/SMB slow" question. There is no other activity when I'm doing this; disks and CPU are idle until the transfer starts.
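As a sanity check on the network figure: 10 Gb/s of line rate is 1.25 GB/s before any protocol overhead, so a sustained 1 GB/s download is about 80% of the raw link. A minimal Python conversion, assuming decimal units and ignoring Ethernet/TCP/SMB framing:

```python
LINK_GBIT_PER_S = 10        # nominal 10GbE line rate, gigabits per second
OBSERVED_GBYTE_PER_S = 1.0  # download speed quoted in the post, gigabytes per second

# 8 bits per byte; protocol overhead is deliberately ignored here.
link_gbyte_per_s = LINK_GBIT_PER_S / 8
utilization = OBSERVED_GBYTE_PER_S / link_gbyte_per_s

print(f"raw link capacity: {link_gbyte_per_s:.2f} GB/s")
print(f"observed / raw:    {utilization:.0%}")
```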

On TrueNAS 13-U1.1 I get about 600MBps on file transfers between datasets with fresh data. Re-transferring after the file is cached in ARC brings speeds to about 1GBps. The tunable makes no difference whether it is set or not, and my performance is pretty much cut in half.
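Putting the quoted numbers side by side: against the tuned 12-U8 setup, 13-U1.1 fresh copies run at about 40% of their previous speed and ARC copies at no more than 50%; against the untuned 12-U8 defaults it is 50% and about 67%. A small Python sketch of that comparison (the labels "12-U8 default" and "12-U8 tuned" are just illustrative names for the two 12-U8 configurations described above):

```python
# Approximate inter-dataset copy speeds quoted in the post, in GB/s.
# The tuned 12-U8 ARC figure was "over 2GBps"; 2.0 is used as a lower bound.
SPEEDS = {
    "12-U8 default": {"fresh": 1.2, "arc": 1.5},
    "12-U8 tuned": {"fresh": 1.5, "arc": 2.0},
    "13-U1.1": {"fresh": 0.6, "arc": 1.0},
}


def ratio(release: str, baseline: str, kind: str) -> float:
    """Speed of `release` as a fraction of `baseline` for one kind of read."""
    return SPEEDS[release][kind] / SPEEDS[baseline][kind]


for baseline in ("12-U8 default", "12-U8 tuned"):
    for kind in ("fresh", "arc"):
        print(f"13-U1.1 vs {baseline}, {kind}: {ratio('13-U1.1', baseline, kind):.0%}")
```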

Now before you tell me that 600MBps is great and I should be happy with that performance, especially since I'm reading and writing to the same pool at the same time, let me explain why I think it should be better.

Here are my dd speed tests writing and then reading back a 500GB file:

```
root@nas:~/Files/Downloads # dd if=/dev/zero of=test.dat bs=1m count=512000
512000+0 records in
512000+0 records out
536870912000 bytes transferred in 94.790463 secs (5663765067 bytes/sec)
root@nas:~/Files/Downloads # dd of=/dev/null if=test.dat bs=1m count=512000
512000+0 records in
512000+0 records out
536870912000 bytes transferred in 133.549504 secs (4020014270 bytes/sec)
```

Yes, that is definitely on a dataset with compression off and atime off, and all datasets involved use a 1MB record size.
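In decimal units the write test works out to about 5.66 GB/s and the read test to about 4.02 GB/s, several times the 600MBps inter-dataset copy speed, and with compression off the zeros from /dev/zero are really written to disk. A small Python sketch that recomputes both figures from the dd summary lines (the regex assumes FreeBSD dd's summary format exactly as pasted above):

```python
import re

# The two dd summary lines quoted above (FreeBSD dd output format).
DD_LINES = [
    ("write", "536870912000 bytes transferred in 94.790463 secs (5663765067 bytes/sec)"),
    ("read", "536870912000 bytes transferred in 133.549504 secs (4020014270 bytes/sec)"),
]

PATTERN = re.compile(r"(\d+) bytes transferred in ([\d.]+) secs \((\d+) bytes/sec\)")


def throughput(line: str) -> tuple[float, float]:
    """Return (GB/s, GiB/s) recomputed from the byte count and elapsed time."""
    m = PATTERN.search(line)
    if m is None:
        raise ValueError(f"not a dd summary line: {line!r}")
    n_bytes, secs = int(m.group(1)), float(m.group(2))
    bytes_per_sec = n_bytes / secs
    return bytes_per_sec / 1e9, bytes_per_sec / 1024**3


for label, line in DD_LINES:
    gb, gib = throughput(line)
    print(f"{label:>5}: {gb:.2f} GB/s ({gib:.2f} GiB/s)")
```

The 536870912000-byte test file is exactly 500 GiB (512000 blocks of 1 MiB).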

Scrubs typically run at around 8GBps; I scrub through about 20TB of data in an hour or so, probably limited by the CPU.
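The two scrub figures are in the same range: at a steady 8 GB/s, 20 TB takes about 42 minutes, while finishing 20 TB in a full hour implies about 5.6 GB/s on average. Either way, both put sustained pool reads at several GB/s. A quick Python check, assuming decimal TB and GB:

```python
# Decimal units throughout: 1 TB = 1e12 bytes, 1 GB = 1e9 bytes.
SCRUB_RATE_B_S = 8e9    # ~8 GB/s, the typical scrub rate quoted above
SCRUBBED_BYTES = 20e12  # ~20 TB of data scrubbed

# How long 20 TB takes at a steady 8 GB/s.
minutes_at_quoted_rate = SCRUBBED_BYTES / SCRUB_RATE_B_S / 60
# Average rate implied by finishing 20 TB in exactly one hour.
avg_gb_s_if_one_hour = SCRUBBED_BYTES / 3600 / 1e9

print(f"20 TB at 8 GB/s: {minutes_at_quoted_rate:.0f} min")
print(f"20 TB in 60 min: {avg_gb_s_if_one_hour:.1f} GB/s average")
```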

The pool consists of 16 SATA SSDs connected to one 9400-16i and another 16 SATA SSDs connected to a second 9400-16i, for a total of 32 SATA SSDs. Each 9400-16i sits in its own PCIe x8 slot, and the slots do not share bandwidth on the motherboard. The pool is made up of 4 RAIDZ2 vdevs. I have 256GB of memory installed, and the CPU is a Xeon W-2125.

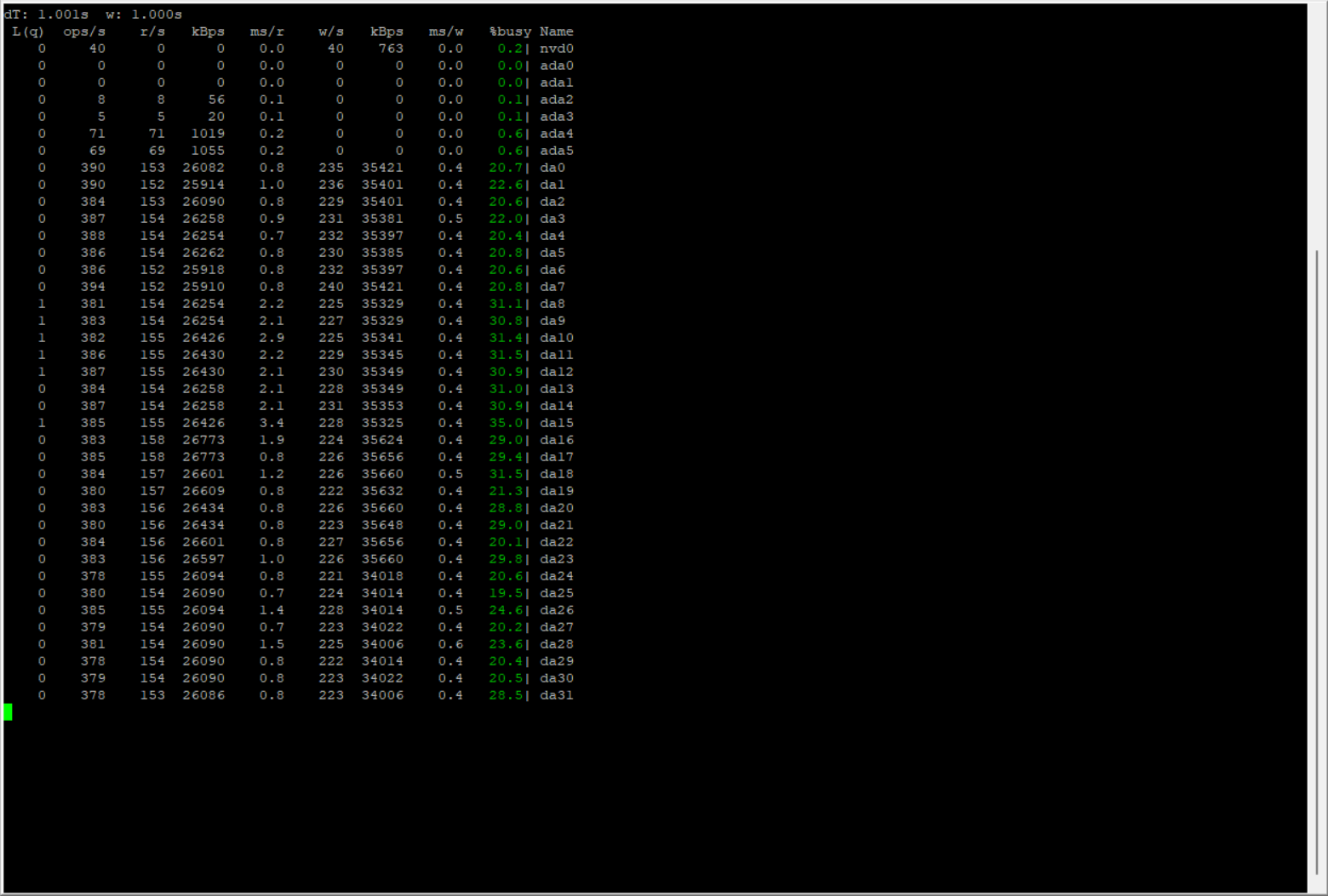
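For a rough sense of the hardware ceilings, here is a back-of-the-envelope Python sketch. It assumes (none of this is stated above) that all four RAIDZ2 vdevs are the same width, that each SATA link tops out at about 600 MB/s, and that each HBA runs at PCIe 3.0 x8. It only bounds raw link bandwidth and says nothing about what ZFS can actually achieve:

```python
# Rough bandwidth ceilings for the pool described above.
# Assumptions (not stated in the post): equal-width RAIDZ2 vdevs,
# SATA III links (~600 MB/s each), and PCIe 3.0 x8 per HBA.
DISKS, VDEVS, PARITY_PER_VDEV = 32, 4, 2
HBAS = 2
PCIE_LANES_PER_HBA = 8

SATA_MB_S = 6000 * 8 / 10 / 8           # 6 Gb/s line rate, 8b/10b encoding -> 600 MB/s
PCIE3_LANE_MB_S = 8000 * 128 / 130 / 8  # 8 GT/s per lane, 128b/130b encoding -> ~984.6 MB/s

disks_per_vdev = DISKS // VDEVS
data_disks = VDEVS * (disks_per_vdev - PARITY_PER_VDEV)
# Raw bytes across all SATA links (parity included), in GB/s.
sata_ceiling_gb_s = DISKS * SATA_MB_S / 1000
# Raw bytes across both HBA slots, in GB/s.
pcie_ceiling_gb_s = HBAS * PCIE_LANES_PER_HBA * PCIE3_LANE_MB_S / 1000

print(f"{disks_per_vdev} disks per vdev, {data_disks} data disks total")
print(f"SATA link ceiling (all 32 disks): {sata_ceiling_gb_s:.1f} GB/s")
print(f"PCIe ceiling (both HBAs):         {pcie_ceiling_gb_s:.1f} GB/s")
```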

This is disk usage during a fresh transfer:

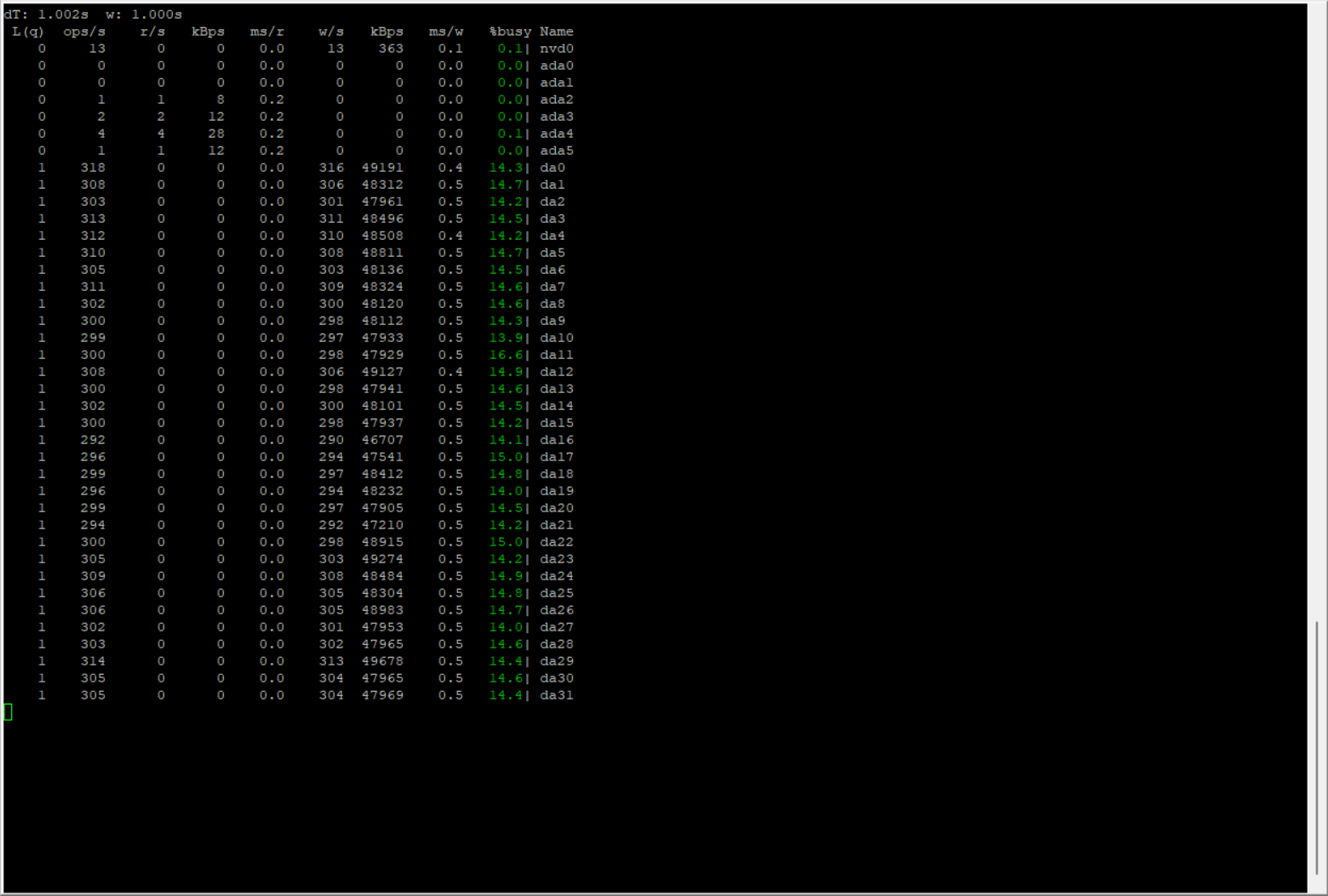

Here is the same transfer again when it's already in ARC:

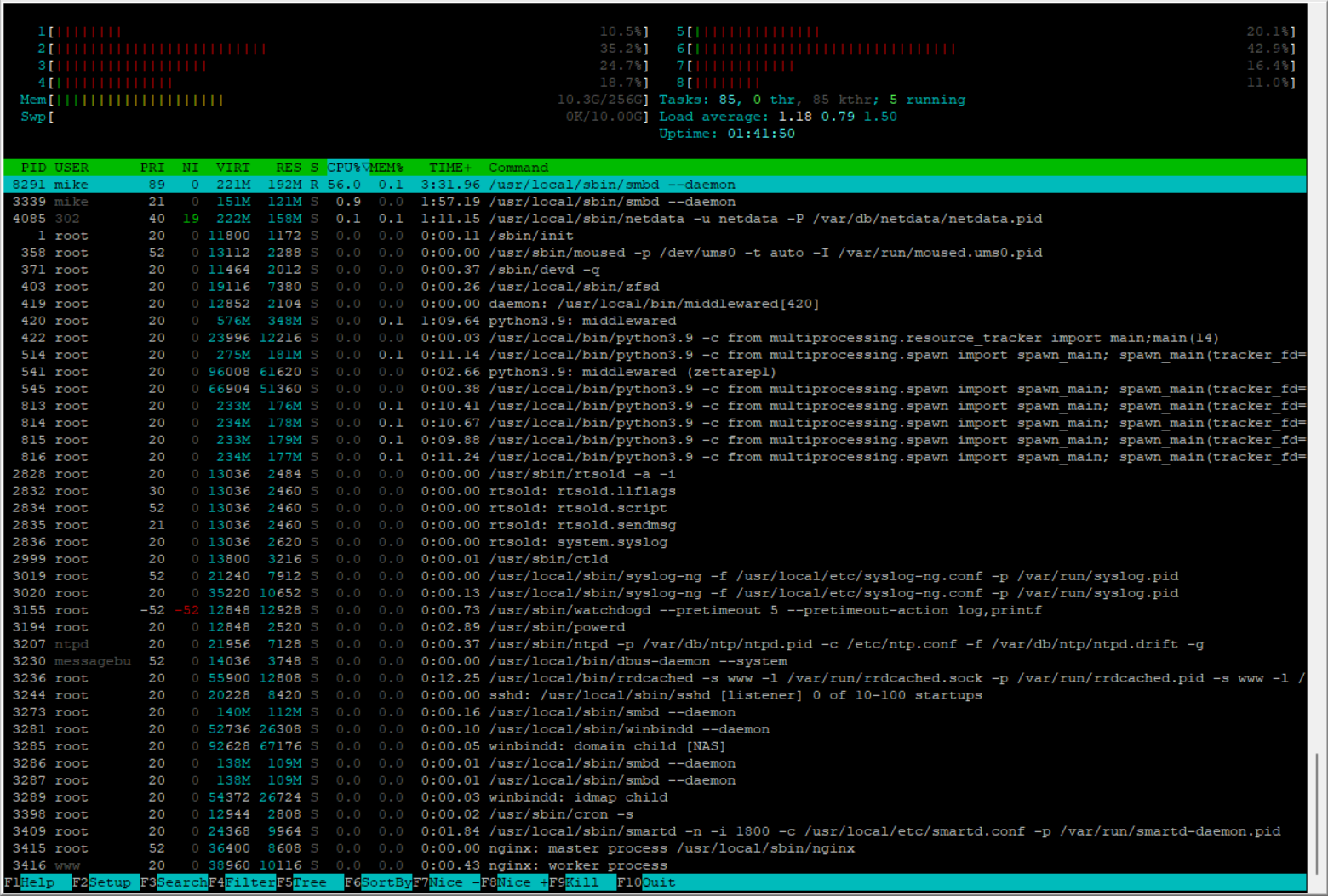

CPU usage:

This system has always performed much slower than I thought it should, but I've just lived with it. Now that an update has cut my performance in half, it's getting silly.

Reverting to 12-U8 brings the performance back immediately. Just to be sure, I reverted, then re-upgraded to 13: exactly the same result.

Please let me know what I'm missing here.
