Hi Everyone,

I have been reading these forums for a long time and have run out of ideas for figuring out my issue. I had an old system that I had been using for a while, and it worked great. Then I got lucky with a Dell server that I could grab cheaply from work. Here are both systems.

Old System:

OS - TrueNAS Core 13-U4

Motherboard - Asus X99-E WS

RAM - 128GB (8x16GB) Samsung LRDIMM 2133MHz

CPU - Xeon E5-2699 v3

Boot Drives - Mirrored 256GB SSD

Storage Spinning Rust - 6 x Seagate 7E10 8TB 3.5" in RAIDZ2

Storage NVMe Striped - 4 x Samsung 970 EVO Plus 1TB

NIC - Mellanox ConnectX-4 Lx

PSU - EVGA G3 1000W

New System:

OS - TrueNAS Core 13-U4

Server - Dell R730xd

RAM - 512GB (16x32GB) Samsung LRDIMM 2133MHz

CPU - Dual Xeon E5-2697 v3

Controller - HBA330 (non-RAID passthrough)

Boot Drives - Same as old

Storage Spinning Rust - Same as old, plus a midplane of 4 more 3.5" drives in two mirrored vdevs

Storage NVMe Striped - Same as old, plus another 4 x Samsung 970 EVO Plus 1TB, for two striped NVMe sets

NIC - Mellanox ConnectX-4 Lx

PSU - Redundant 1100W

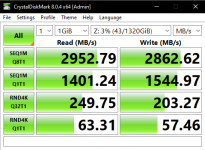

Now for my issue. With my old system, I was able to saturate my 25GbE network. Synthetic testing with CrystalDiskMark showed 2.9GB/s read and write, and SMB transfers ran at about 2.69GB/s read and write. It was amazing.

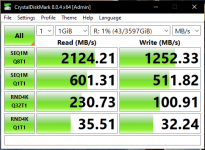

My new Dell server is set up the same way. Synthetic testing in CrystalDiskMark shows about 2GB/s read and 1.1GB/s write. SMB transfers run at about 1.35GB/s read and 1.15GB/s write.

For the life of me, I can't get it to perform as well as my old system, even though the hardware is essentially the same and from the same generation.

So far, I have fully tested my network with other systems, plus iperf between my main computer and the server, and it consistently gets a solid 23.5Gbps. So I believe it's safe to say the NIC and switches are not holding it back. Next was internal drive testing with dd in the shell. That shows about 264MB/s read and write on spinning rust, and about 3GB/s read and 2.8GB/s write on NVMe. Next, I tested the RAID for both storage and NVMe. The Seagates give about 450MB/s read and 250MB/s write. The NVMe gives 12GB/s read and 11GB/s write.
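To put the iperf number in the same units as my transfer figures, here is a rough Python sketch of the arithmetic. It assumes decimal units throughout (1 GB = 8 Gb) and ignores SMB and TCP overhead, so treat the percentages as approximate. The function names are just ones I made up for illustration.

```python
def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert a rate in gigabits/s to gigabytes/s (8 bits per byte)."""
    return gbps / 8.0


def link_utilization(measured_gb_per_s: float, link_gbps: float) -> float:
    """Fraction of the measured network ceiling that a transfer achieves."""
    return measured_gb_per_s / gbps_to_gigabytes_per_s(link_gbps)


# Measured iperf throughput between my main computer and the server.
IPERF_GBPS = 23.5
ceiling = gbps_to_gigabytes_per_s(IPERF_GBPS)  # 2.9375 GB/s

# SMB figures from this post, in GB/s.
smb_results = {
    "old system, read/write": 2.69,
    "new system, read": 1.35,
    "new system, write": 1.15,
}

print(f"iperf ceiling: {ceiling:.2f} GB/s")
for label, gb_per_s in smb_results.items():
    share = link_utilization(gb_per_s, IPERF_GBPS)
    print(f"{label}: {gb_per_s:.2f} GB/s is {share:.0%} of the iperf ceiling")
```

By this math the old system was running SMB at roughly nine tenths of what iperf says the link can carry, while the new one sits under half for a single client.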

Next, I looked into whether something went wrong when I installed a fresh copy of TrueNAS Core and just reloaded my config to rebuild my setup on the new server. I did it again to see what would happen, and nothing changed: performance was about exactly the same.

The last thing I checked was running a read/write test from two different machines, each connected at 25GbE, hitting the server with SMB transfers at the same time. Combined, I get 2.83GB/s read and 1.34GB/s write. So I know it can at least hit the theoretical max for reads with multiple sources hitting it, but writing is still disappointing by comparison.

I have not tried reinstalling, destroying my striped NVMe sets, and re-creating them to retest without my old config. I want to avoid doing this if at all possible. I was also looking into tunables, but I do not understand how to add them or which ones to add in TrueNAS Core.

If anyone can help me start working toward a solution, or if you have a similar Dell server and have had this issue, I am ready to listen. If this is simply normal for a Dell server, I will accept it and be happy with what I have.

Thanks for any help you may be able to provide!
