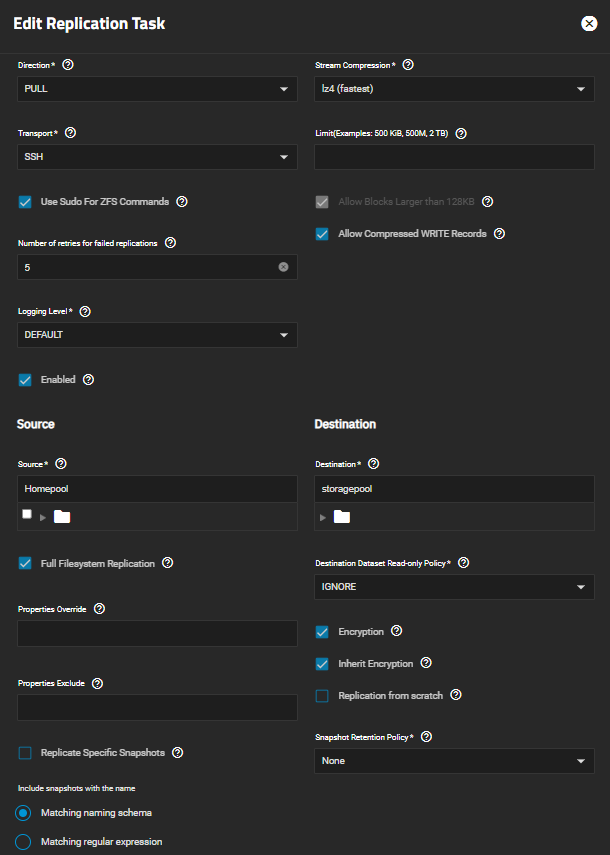

Error: concurrent.futures.process._RemoteTraceback: """ Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 75, in get_quota with libzfs.ZFS() as zfs: File "libzfs.pyx", line 529, in libzfs.ZFS.__exit__ File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 77, in get_quota quotas = resource.userspace(quota_props) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "libzfs.pyx", line 3642, in libzfs.ZFSResource.userspace libzfs.ZFSException: cannot get used/quota for storagepool: dataset is busy During handling of the above exception, another exception occurred: Traceback (most recent call last): File "/usr/lib/python3.11/concurrent/futures/process.py", line 256, in _process_worker r = call_item.fn(*call_item.args, **call_item.kwargs) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 112, in main_worker res = MIDDLEWARE._run(*call_args) ^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 46, in _run return self._call(name, serviceobj, methodobj, args, job=job) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 34, in _call with Client(f'ws+unix://{MIDDLEWARE_RUN_DIR}/middlewared-internal.sock', py_exceptions=True) as c: File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call return methodobj(*params) ^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_quota.py", line 79, in get_quota raise CallError(f'Failed retreiving {quota_type} quotas for {ds}') middlewared.service_exception.CallError: [EFAULT] Failed retreiving GROUP quotas for storagepool """ The above exception was the direct cause of the following exception: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/main.py", line 201, in call_method result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1342, in _call return await methodobj(*prepared_call.args) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 177, in nf return await func(*args, **kwargs) ^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/dataset_quota_and_perms.py", line 223, in get_quota quota_list = await self.middleware.call( ^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1399, in call return await self._call( ^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1350, in _call return await self._call_worker(name, *prepared_call.args) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1356, in _call_worker return await self.run_in_proc(main_worker, name, args, job) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1267, in run_in_proc return await self.run_in_executor(self.__procpool, method, *args, **kwargs) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1251, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ middlewared.service_exception.CallError: [EFAULT] Failed retreiving GROUP quotas for storagepool