Proxy5


Hi,

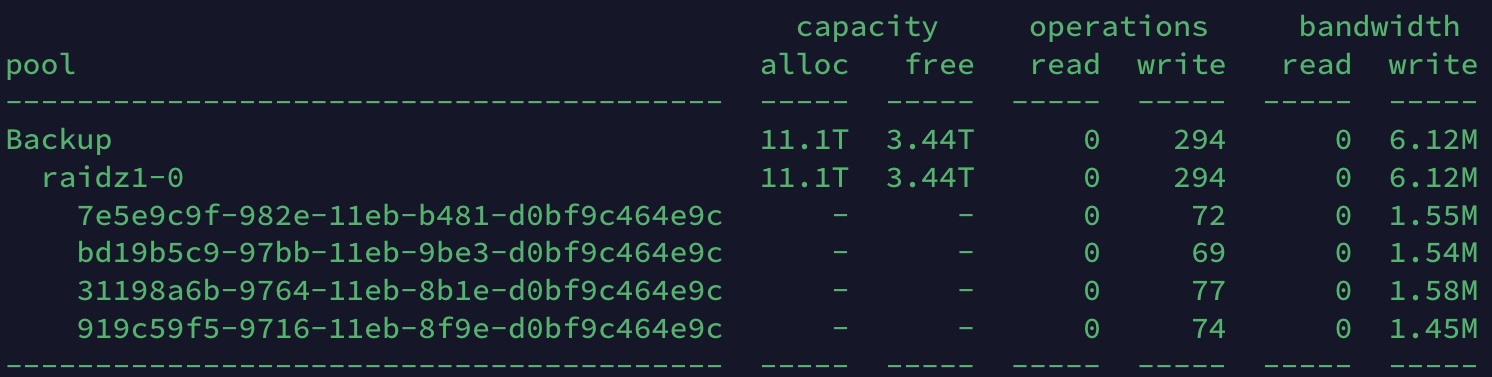

RAIDZ1 4x4TB HDD ZFS pool write speed is low (under 7 MB/s)

I am copying files locally on the TrueNAS server, logged in via SSH.

The source SSD pool is 1 x RAIDZ2 | 4 wide | 931.51 GiB

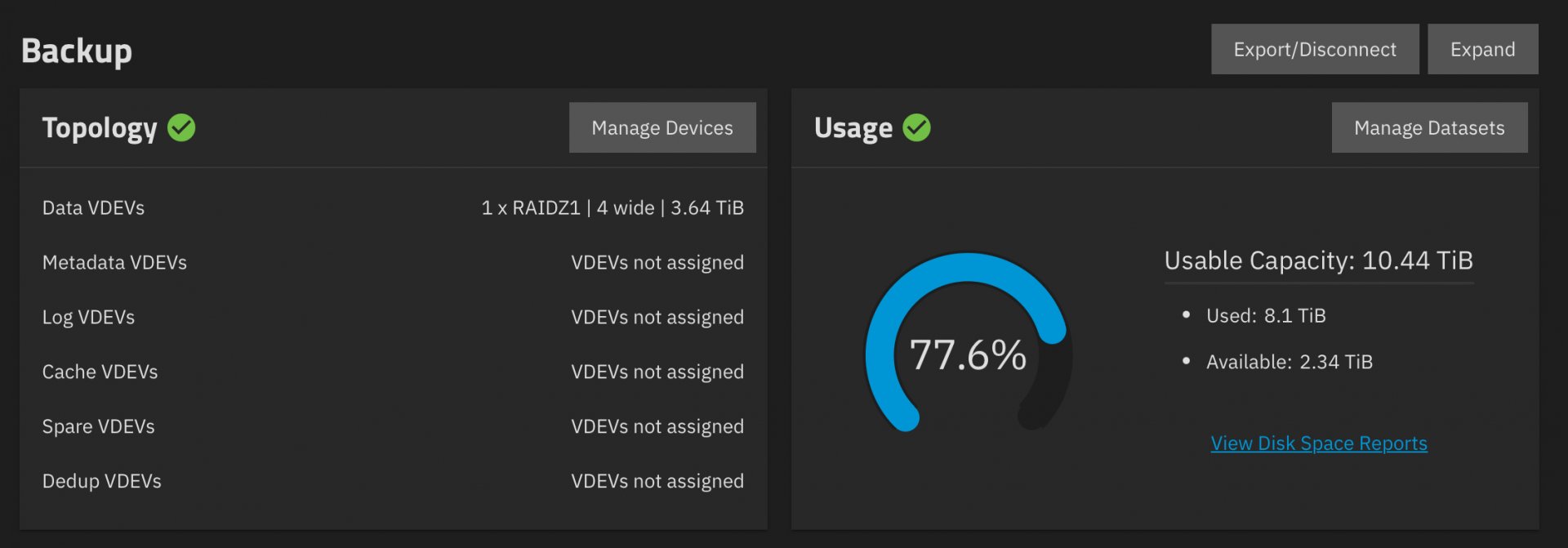

The target HDD pool is 1 x RAIDZ1 | 4 wide | 3.64 TiB

The write speed of the HDD pool is about 7 MB/s, which I consider quite low.

For the test I copied a 3.4 GiB .m4v file with a plain cp command.
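For anyone who wants to reproduce the measurement, here is a minimal Python sketch of how the copy could be timed. It is not the exact procedure I used (I just ran cp); the function names `timed_copy` and `expected_seconds` are made up for illustration, and it assumes decimal megabytes (1 MB = 1,000,000 bytes).

```python
import os
import shutil
import time


def timed_copy(src, dst, chunk_size=1024 * 1024):
    """Copy src to dst, fsync the result, and return throughput in MB/s.

    Hypothetical helper: it approximates a plain `cp` but also waits for
    the data to reach disk, so the write cache does not inflate the number.
    """
    start = time.monotonic()
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout, chunk_size)
        fout.flush()
        os.fsync(fout.fileno())  # block until the data is written out
    elapsed = max(time.monotonic() - start, 1e-9)  # avoid division by zero
    size_bytes = os.path.getsize(dst)
    return size_bytes / elapsed / 1_000_000


def expected_seconds(size_gib, mb_per_s):
    """How long a file of size_gib GiB takes at mb_per_s MB/s."""
    return size_gib * 1024**3 / (mb_per_s * 1_000_000)
```

Calling `timed_copy` with a file on the SSD pool as `src` and a path on the HDD pool as `dst` returns the rate in MB/s. At 7 MB/s, `expected_seconds(3.4, 7)` comes out at a bit under nine minutes for the 3.4 GiB test file.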

The target HDD pool is currently 78% full, which may be important.
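In case it helps with diagnosis, fill level and fragmentation can be read from `zpool list`. Below is a rough sketch that wraps it in Python; the helper names `parse_zpool_list` and `pool_usage` are hypothetical, and it assumes the `zpool` binary is on the PATH and that `-H` gives tab-separated output without headers.

```python
import subprocess


def parse_zpool_list(output):
    """Parse `zpool list -H -o name,capacity,fragmentation` output.

    Values are kept as strings because fragmentation may be reported
    as "-" when it is not available.
    """
    pools = {}
    for line in output.strip().splitlines():
        name, capacity, frag = line.split("\t")
        pools[name] = {
            "capacity": capacity.rstrip("%"),
            "fragmentation": frag.rstrip("%"),
        }
    return pools


def pool_usage():
    """Run zpool and return a dict keyed by pool name (needs ZFS installed)."""
    result = subprocess.run(
        ["zpool", "list", "-H", "-o", "name,capacity,fragmentation"],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_zpool_list(result.stdout)
```

Running `pool_usage()` on the server should list each pool with its capacity and fragmentation percentages, which I can post if that is useful.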

Hardware environment:

Dell T620

CPU E5-2630 v2 @ 2.60GHz

RAM 192 GB ECC

HBA H310 in IT mode

Software environment:

TrueNAS-SCALE-22.12.3.3

The copy is local to the server, so no data crosses the network and I don't think networking is a factor.

Any idea where I should start looking?
