I reboot religiously, especially in cases like this. Also, ESXi tells you immediately that you need to reboot for the changes to take effect.

That's absolutely true.

Are you testing the correct drive?

Six old 2TB 7200rpm drives are configured in RAID10 as a single virtual drive, so there's no way to test the wrong one when there is only one drive.

Didn't you say that you upgraded the RAID card firmware from the VM?

Yes, I did, and it went so smoothly I can hardly believe it. I'm used to the old DOS boot-disk flash routine I've always used with HBAs, and I'll probably keep doing it that way on HBAs, since I know it works and I already have pre-made boot disks with the various firmware versions on them. This time, though, I wanted to try the Windows GUI to see what would happen, and it was as easy as flashing SSD firmware from a GUI tool.

I'm curious what changed.

Me too, but perhaps it's not something WE can tell. Here is what I know is different on my end:

1. The card firmware is newer (I don't know whether that matters).

2. The driver install order is different: I install lsi-provider first and then the megaraid driver.

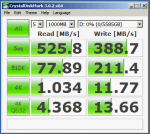

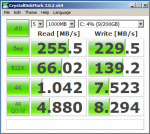

The conclusion is that there is a way to make it work with the drivers installed, so at least they don't slow performance down any further. What remains is ESXi itself, and I don't think I'll get an easy answer on that. Since I ran the tests anyway, let me show you what they look like:

The virtual drive is the same in both runs (6 disks in RAID10): it is tested first from a native Windows install and then from a Windows VM running on ESXi 5.5.