Please read the ZFS primer before buying anything.

(Warning: supplied links are reference examples, not proposed hardware)

GENERAL

With that many (and that expensive) drives, you may want to consider a rack-mount case with redundant 1U PSUs and hot-pluggable drive caddies, like this. There are much cheaper options if you dig a little.

Your build also doesn't have a separate SATA/SAS controller. With your setup, a controller is a must.

Your motherboard seems really expensive (and power-hungry, maybe?) for a server setup. It is a workstation mobo, not a server one.

I am not against this out of stubbornness or a lack of imagination. Let me elaborate:

MAIN ISSUE OF THAT MOTHERBOARD AND SYSTEM LAYOUT/TOPOLOGY

Everything sits on a single point of failure

The idea here (storage server use-case) is to spread cost as much as you can and make sure:

A: If/when your motherboard fails:

1. It doesn't take down any drives with it

(consumer and prosumer motherboards can have silent failures that trash other things on them)

2. It doesn't take a fortune to replace it

3. It doesn't have the potential to cause data loss

(a fried chipset could do a lot of crazy things to a ZFS array; a much rarer failure, though)

4. It doesn't cause the whole array to lose power

(an ATX PSU controlled by the motherboard will unceremoniously cut power everywhere)

5. There is a separate system on the motherboard that can give a detailed report as to what is up and what is down and why

6. … etc.

B: When a drive fails catastrophically:

1. It doesn't have the potential to take any critical systems down with it (an overvoltage to the chipset, for example, or worse, the CPU)

2. The system will not halt because of "hardware change panic"

(e.g., if you remove internal storage from a PC, it may BSOD, even if the drive is not in use)

3. You can immediately replace it and start resilvering

4. The system will not hang and leave the failed drive powered on, worsening the problem. In such a case, the rest of the drives also keep "working" on an unresponsive system, connected to a freaked-out controller. That's a no-no.

5. … etc.

C: When the (or a) storage controller fails:

1. It won't take other critical systems with it

2. It won't cause a hard shutdown

3. It won't have the potential to misidentify any drives to the OS and cause any miswrites (rare)

4. It doesn't have the potential to cause a cold reboot loop, and "spread" electrical damage to all connected systems (CPU, chipset, drives, motherboard...)

5. … etc.

D: When a PSU fails

1. It isn't the only PSU

2. It doesn't have a direct electrical path (through common voltage regulators, for example), through the same rail, to CPU, controller, drives...

3. It cannot overvolt

4. It cannot undervolt to the rails (supplied power) and keep working with an internal short

5. It will not supply power with noise (capacitor failure)

6. It will not supply unfiltered power

7. It will not directly translate a line overvoltage (critical or not) to a supplied power analogous overvoltage

8. It can never, never, pass line current to the transformed rails, even for fractions of a second

9. It does not have faulty grounding on the device side (been there... tried to touch the tower to unscrew the side panel. I threw the whole thing out next)
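As a rough illustration of point B.3 ("replace it and start resilvering"), here is a minimal Python sketch that only builds the usual `zpool` command sequence and prints it. Nothing gets executed. The pool name `tank` and the device names `sdc` and `sdh` are made up for the example, and your own procedure may differ depending on your pool layout and enclosure.

```python
import shlex


def replacement_commands(pool, failed_dev, new_dev):
    """Return the zpool commands for swapping a failed disk, in order.

    Nothing is executed here; the caller decides whether to run them.
    """
    return [
        ["zpool", "status", pool],                        # see which device is faulted
        ["zpool", "offline", pool, failed_dev],           # stop I/O to the failing disk
        ["zpool", "replace", pool, failed_dev, new_dev],  # kicks off the resilver
        ["zpool", "status", pool],                        # check resilver progress
    ]


# Hypothetical pool and device names, for illustration only.
for cmd in replacement_commands("tank", "sdc", "sdh"):
    print(shlex.join(cmd))
```

Printing instead of running is deliberate: on a real array you want to read each command before it touches a disk.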

HOW A SERVER SYSTEM LAYOUT ADDRESSES THESE PROBLEMS

1. There are redundancies

- A PSU that even slightly fails shuts down by default with another taking over

- SAS drives can connect to different controllers at the same time for redundancy (enterprise grade stuff, but can be done DIY)

- The system's real power control is separate from the OS in most server-grade boards

2. There is a separation of concerns

- Most components are made to do only what they are supposed to be doing by definition

- Things are not "crammed" together

- One thing breaking has little chance of breaking other things

- One thing breaking has little chance of stopping critical systems

- Common electrical power pathways are minimized

- (Enterprise-grade) Power delivery from the PSUs to the system is handled by an intermediate power regulator, greatly reducing the chance of a "PSU frenzy" type catastrophe

3. Server-grade stuff is designed for server use

- Hot-pluggable drives (and hot-displuggable, after being exported from ZFS, of course)

- Fully functional controllers that are designed to handle failing drives routinely

- Management subsystem, separate from the CPU, that can monitor the health of the system and is IP accessible

- Enormous capacity for topological changes

- No server-grade components "play hero". Consumer and prosumer grade components (disks, motherboards, controllers, etc.) will try to keep working as long as possible while failing, with little to no warning, until they fail spectacularly. Server-grade components will report any failure in detail to both the management subsystem (IPMI) and the OS, and will take the necessary steps on their own (from a SAS controller marking channels as "bad" to a PSU powering itself off when it detects an inconsistency)

4. Minimization of operating costs

- Server-grade components consume way less power than consumer and prosumer ones. Especially prosumer ones.

- There are no extraneous frills on server-grade components consuming power for no reason, like, say, "the best audio chip with integrated amp ever!", eSATA ports, a ton of USB controllers, or integrated graphics that cannot be fully deactivated

- Cooling is efficient and effective

- Counterintuitively, server grade components require way less maintenance (as cost) than standard ones

- Your time is money, so, spending a lot less time fiddling with the hardware means more time configuring your services

5. Minimization of maintenance costs

- Because of the separation of concerns, individual failing components cost way less to replace

- You won't ever have to throw away (or be forced to re-buy) a whole host of subsystems (that motherboard) because of one failing subsystem. Imagine bad SATA ports/channels on the motherboard, for instance. Or a bad controller. Do you keep trusting that board? Or do you turn it into a home cinema appliance? It is not by accident that most enterprise grade servers do not have SATA ports integrated on their motherboards.

- Because of the separation of concerns, even the integrated systems on a server-grade mainboard wear out far more slowly because they are not used as hard (for example, the auxiliary SATA controller for the boot drive, and the USB ports)

- You can get great performance even with baseline components.

- You can get away with lower-price intermediate components, if a component fails, because replacing them becomes trivial

- Most failures, and even most problems, you will face have already been seen by others on very similar systems. Time is money, remember?

- Less downtime on failures

- Way more ways to deal with worst case scenarios

- You can always get a spare lower-quality controller ($100?) just in case. You cannot get a spare workstation motherboard like that! And, if you can, maybe just buy a premade server

6. Greater trust in your system, more breathing room to enjoy using and configuring it!
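To put a rough number on the redundancy point in item 1 above, here is a small Python sketch. It assumes each PSU fails independently with the same probability over some period, and it ignores repair time. Real PSUs sharing one mains line are not fully independent, and the 2% figure is invented purely for illustration, so treat the result as an intuition aid and not as a reliability calculation.

```python
def all_fail_probability(p_single, n_units):
    """Chance that all n independent units fail within the same period."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability between 0 and 1")
    if n_units < 1:
        raise ValueError("n_units must be at least 1")
    return p_single ** n_units


# Hypothetical figure: one PSU has a 2% chance of failing in a given year.
p = 0.02
print(f"single PSU:     {all_fail_probability(p, 1):.4%}")
print(f"redundant pair: {all_fail_probability(p, 2):.4%}")
```

The same reasoning applies to dual controllers or dual paths to SAS drives: the more independent the two units really are, the closer you get to the multiplied figure.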

THE CASE AGAINST A SERVER GRADE SYSTEM

- You just want to learn how to set up a server, and might turn it into a CAD workstation tomorrow...

- You are thinking about turning the system into a combined server/PC (with a Windows VM and a GPU, perhaps?)

- You will (for sure) constantly be changing hardware topologies and trying new things. Data doesn't matter that much to you; learning does.

- You are expecting to come into a lot of money and want this only temporarily. It will be turned into a PC with a Cort i9 extremofile and a RXX 9040 in a few months! (In that case, maybe don't rough that motherboard up by running an almost 200 TB storage server on it, and keep it in the box instead? It would be a pity if it suffered any wear.) (The fake product names are deliberate.)

- Personal reasons / preferences

- (For whatever reason) You feel that server-grade systems are either beyond what you can handle or that you need training to handle such equipment (that is not the case for anyone, though; it's a perception created by the fact that you do not interact with such systems every day. A makeshift PC-turned-server takes a lot more skill and knowledge to keep operational than a purpose-made server)

- You think that in the end you will spend more money on a server-grade system than on a standard one (only if you try to buy a vendor-made one; they currently start from at least $3500, I think? Those who know, please inform)

- You don't have time to learn new things, you know PCs already, so...

- You are not buying it yourself/alone and the others have strong, rooted opinions about the subject

- You just can't have that thing making all that noise in the house! (it is mostly the ready-made vendor servers destined for datacenters that make so much noise; you can build a rack-mount server yourself that has silent fans)

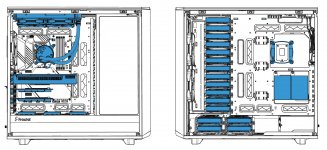

- You don't have the space for a rack (in that case, there are lots of great tower cases, like my The Tower snow edition, that take however many drives you want and even have toolless HDD trays)

FINAL THOUGHTS

1. Don't rush, search all options and paths. This is a significant investment of money, time and skill

2. Don't hurry to buy all the drives up front, unless you already know for sure you need them. After all, HDDs get cheaper over time. Better to build a great system and expand your array later than to buy all the drives and place them on a mediocre system that, in a few months' time, will become a black hole for your time, money and calm.

3. There is no totally right path, but, some paths are way better than others.

4. Keep in mind: a server build takes planning. The fun is not in building it (like building a PC, for instance) but in using it without remembering it even exists. Seriously, building a server is work.

5. Don't be confined to mainstream suppliers, search server-specific suppliers and compare prices from many of them.

6. Don't just examine your use case. The main factor in how much server you need (for storage) is not the use case but the storage medium. Your choice of drives determines everything else. 144 TB of storage in ZFS, for example, requires more RAM. Waay more RAM. Any experienced ZFS users reading, please weigh in on this (I can offer no relevant experience). You also need (not would like, need) 3-4 SSDs for caching (L2ARC) and for the ZIL (on a SLOG device).
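For a ballpark on the RAM point, here is a tiny Python sketch of the often-quoted community rule of thumb: a fixed base amount plus roughly 1 GB of RAM per TB of storage. Both numbers (`base_gb` and `gb_per_tb`) are assumptions taken from that rule of thumb, not ZFS requirements. ZFS will run with less, and extra RAM mostly goes to a bigger ARC read cache, so treat the output as a starting point for discussion.

```python
def rule_of_thumb_ram_gb(raw_storage_tb, base_gb=8, gb_per_tb=1.0):
    """Rough RAM estimate: a fixed base plus a per-terabyte allowance.

    base_gb and gb_per_tb are rule-of-thumb assumptions, not hard limits.
    """
    if raw_storage_tb < 0:
        raise ValueError("raw_storage_tb must not be negative")
    return base_gb + gb_per_tb * raw_storage_tb


# The 144 TB figure comes from the post; the rest is the assumed rule of thumb.
print(f"{rule_of_thumb_ram_gb(144):.0f} GB")
```

Lowering `gb_per_tb` is the easy way to see how a leaner estimate would look for mostly sequential workloads like media storage.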

Please read the ZFS primer before buying anything.

As a beginner in the server space myself, have fun! I know I do.