Good day,

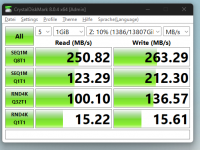

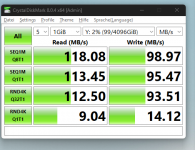

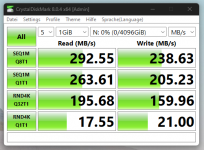

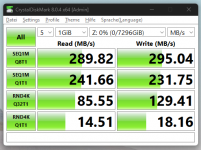

I would like to use my iSCSI share for VMs or similar, but I don't want everything to take a long time to load. So I ran a speed test (screenshots above) and was a bit shocked by how slow the share is. I also tested the SMB share, and it appears to be faster than the iSCSI share. Any hints on what I should adjust would be very helpful.

The TrueNAS server:

Ryzen 5 1400

32GB RAM

2.5G PCI card

1 pool

1x 120 GB M.2 cache SSD

4x 4 TB HDD RAIDZ vdev

4x 3 TB HDD RAIDZ vdev

The server is connected directly to my PC via a 2.5G USB 3.0 adapter.
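For reference, here is a rough sketch of the raw ceiling of the 2.5G link, so the benchmark numbers can be compared against it. The function name is just illustrative, and the result ignores Ethernet, TCP, iSCSI and USB overhead, so real-world throughput will be somewhat lower.

```python
def link_ceiling_mb_s(gbit_per_s: float) -> float:
    """Raw line rate in decimal MB/s, ignoring all protocol overhead."""
    # 1 Gbit/s = 1000 Mbit/s, and 8 bits make one byte.
    return gbit_per_s * 1000 / 8


if __name__ == "__main__":
    # Assumption: the link negotiates at the full 2.5 Gbit/s.
    print(f"2.5G link ceiling: {link_ceiling_mb_s(2.5)} MB/s")
```

Anything well below that figure on sequential transfers would point at something other than the link itself.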
