Full disclosure: I have no idea what I am doing (this is my first foray into building a long-term archival NAS), and I am hoping someone can provide some guidance or advice, or tell me I am planning something super dumb, before I actually implement this configuration. Side note: some of the hardware may seem a bit overkill, but my goal is to not have to upgrade anything in the future beyond expanding the storage pool (and maybe throwing in a higher-speed NIC).

Hardware Configuration:

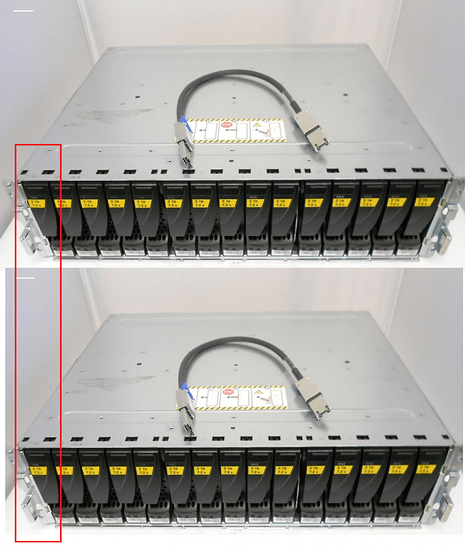

Chassis / MB: 24x Bay 2.5" SuperMicro X9DRI-LN4F+, 2x PSUs

CPU: 2x E5-2670 V2 2.50GHz 10C

RAM: 16x 32GB DDR3 RDIMM (512 GB total)

NIC: 1x Intel X540-BT1 10Gb RJ45, 4x 1Gb Onboard (including 1 IPMI)

HBA: LSI 9305-24i in IT mode SAS HBA

Initial Storage:

1. QNAP QM-4P-384 4x M.2 NVMe PCIe 3.0 Expansion Card w/ 4x 2TB Samsung 980 Pro NVMe SSDs installed

2. 16x 4TB Samsung 870 Evo SATA SSDs in 16 of the 24 2.5" bays

Anticipated Software / VDEV Configuration:

TrueNAS SCALE (latest version) installed on 2x 2TB 980 Pros

Remaining 2x 980 Pros to be used as Cache

Pool:

1. 8x 4TB 870 Evos in VDEV Raidz

2. 8x 4TB 870 Evos in VDEV Raidz

3. (Future) 8x 4TB 870 Evos in VDEV Raidz

4. (Future) 8x TBD TB HDD in VDEV Raidz in External JBOD via SAS HBA*

5. (Future) 8x TBD TB HDD in VDEV Raidz in External JBOD via SAS HBA*

6. (Future) 8x TBD TB HDD in VDEV Raidz in External JBOD via SAS HBA*

*Note: I am not sold that #4,5,6 need to be part of the original #1,2,3 pool, as I'd be fine with a "Fast" SMB and a "Slow" SMB set of shares, but my goal is to just have 1 SMB share.

**Second Note: I am not sold on Raidz vs Raidz2, still figuring out which I will end up with.
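To put rough numbers on the Raidz vs Raidz2 question, here is a quick back-of-the-envelope sketch. It assumes the simple rule that each VDEV loses one drive's worth of capacity per parity level, and it ignores padding, metadata, and free-space headroom, so real usable space will be somewhat lower. The function names are just ones I made up for illustration.

```python
def raidz_vdev_usable_tb(drives: int, drive_tb: float, parity: int) -> float:
    """Approximate usable capacity of one RAIDZ vdev in TB.

    Simplification: usable = (drives - parity) * drive size.
    Ignores allocation padding, metadata and recommended free space.
    """
    if parity not in (1, 2, 3):
        raise ValueError("parity must be 1 (Raidz), 2 (Raidz2) or 3 (Raidz3)")
    if drives <= parity:
        raise ValueError("a vdev needs more drives than parity devices")
    return (drives - parity) * drive_tb


def pool_usable_tb(vdevs: int, drives: int, drive_tb: float, parity: int) -> float:
    """Approximate usable capacity of a pool built from identical RAIDZ vdevs."""
    return vdevs * raidz_vdev_usable_tb(drives, drive_tb, parity)


if __name__ == "__main__":
    # Initial plan: 2 vdevs of 8x 4TB SSDs; future plan adds a third SSD vdev.
    for vdevs in (2, 3):
        for parity, name in ((1, "Raidz"), (2, "Raidz2")):
            usable = pool_usable_tb(vdevs, drives=8, drive_tb=4.0, parity=parity)
            print(f"{vdevs} vdevs, {name}: ~{usable:.0f} TB usable, "
                  f"survives {parity} failed drive(s) per vdev")
```

By this estimate the initial two VDEVs give roughly 56 TB with Raidz or 48 TB with Raidz2, so the extra parity drive per VDEV costs about 8 TB at the start.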

Use Case:

Primarily, I will be using this NAS as a mass-storage SMB share. I don't intend to be writing to it 24x7; the intent is write once in a while, mostly read. When I do write, it will be ~700 GB at a time, with a few weeks to a few months between writes. I will be integrating TrueNAS with a separate Windows Server 2012 R2 host (for Active Directory sync). The majority of its operation will be reads (watching videos, listening to music, etc.).
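For a sense of scale on those ~700 GB writes, here is a rough sketch of the best-case network transfer time over the 10Gb NIC versus one of the onboard 1Gb ports. It assumes the link is the only bottleneck and uses decimal units (1 GB = 8 Gb); the `efficiency` parameter is a made-up knob for protocol overhead, not a measured value.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Best-case time to move size_gb gigabytes over a link of link_gbps gigabits/s.

    efficiency is a hypothetical fudge factor (0 < efficiency <= 1) for
    SMB/TCP overhead; 1.0 means theoretical line rate.
    """
    if size_gb < 0 or link_gbps <= 0 or not (0 < efficiency <= 1):
        raise ValueError("invalid size, link speed or efficiency")
    return size_gb * 8 / (link_gbps * efficiency)


if __name__ == "__main__":
    for name, gbps in (("10GbE", 10.0), ("1GbE", 1.0)):
        seconds = transfer_seconds(700, gbps)
        print(f"700 GB over {name}: ~{seconds / 60:.1f} minutes at line rate")
```

At line rate that works out to a bit over 9 minutes on 10GbE versus about an hour and a half on 1GbE; real SMB transfers will be slower than either figure.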

Maybe in the future I'd think about running Plex or something on here, but I am very doubtful of that. My intent is to reallocate more than the 50% limit of RAM via the workaround mentioned for other SCALE applications.
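In case it helps frame the RAM question, here is a small sketch of the arithmetic I have in mind. It assumes the 50% limit refers to the ZFS ARC size cap and that the workaround boils down to setting OpenZFS's `zfs_arc_max` module parameter, which takes a value in bytes. I am treating the 512 GB of RAM as 512 GiB, and the 75% target is just an example, not a recommendation.

```python
GIB = 2**30  # bytes per GiB


def arc_max_bytes(total_ram_gib: float, fraction: float) -> int:
    """Byte value for an ARC cap equal to `fraction` of total RAM.

    Assumption: this number would be written to the zfs_arc_max
    module parameter; how that is applied on SCALE is not shown here.
    """
    if total_ram_gib <= 0 or not (0 < fraction < 1):
        raise ValueError("RAM must be positive and fraction between 0 and 1")
    return int(total_ram_gib * GIB * fraction)


if __name__ == "__main__":
    default_cap = arc_max_bytes(512, 0.50)  # the 50% limit mentioned above
    raised_cap = arc_max_bytes(512, 0.75)   # hypothetical example target
    print(f"50% cap: {default_cap // GIB} GiB ({default_cap} bytes)")
    print(f"75% cap: {raised_cap // GIB} GiB ({raised_cap} bytes)")
```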

Thoughts?
