Hi Everyone

I'm fairly new to TrueNAS but reasonably experienced with IT and storage, as I have been managing the IT for our small film post house.

We are currently installing and testing two new TrueNAS servers to replace our QNAP, which had a hardware failure that cost us a lot of time to fix and killed our trust in QNAP.

Specs:

Supermicro SC848 case with 6G SAS expander backplane

Motherboard Supermicro X9QRI-F

4 x CPU Intel Xeon E5-4650, 8x 2.70GHz, 8 GT/s, 20MB Cache

RAM 512GB ECC DDR3 PC12800 (16x 32GB)

HP H220 SAS2 PCIe 3.0 HBA JBOD IT MODE

Intel T540 10G copper ethernet

Mellanox ConnectX3 40G ethernet

TrueNAS-12.0-U8

Storage:

16 x Seagate Exos 16TB SATA disks in a pool with 2 vdevs of 8 disks each in RAIDZ2

(we will add another 8-disk RAIDZ2 vdev of the same disks as soon as our hardware RAID archive is offloaded)

Client:

2019 Mac Pro

Mac OS Catalina

Chelsio T580 40G ethernet

40GbE directly connected to TrueNAS server with QSFP cable

10GbE connected over Netgear 10G switch

MTU 9000

The problem:

Performance is very erratic, mostly with writes.

I've experimented a lot with tunables, mostly using the ones from https://calomel.org/freebsd_network_tuning.html

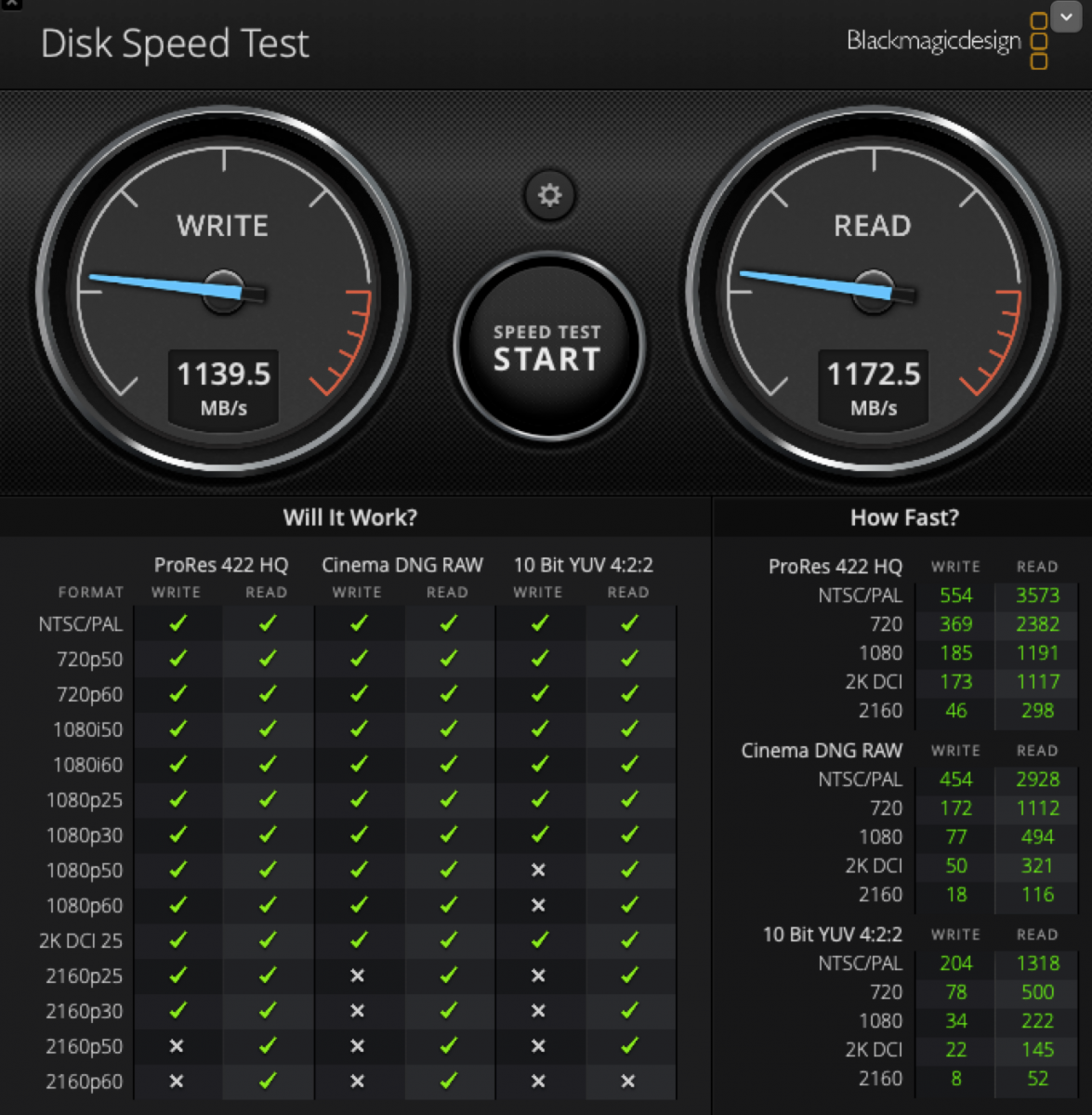

The network connection and SMB seem to be capable of high R/W speeds as this test with a very high zfs.txg.timeout shows.

I guess this is all written to and read from ARC:

However, more realistic tests show write speeds of around 200MB/s.

Again, this varies a lot, sometimes ramping up to 500MB/s or even more and sometimes stalling below 100MB/s.

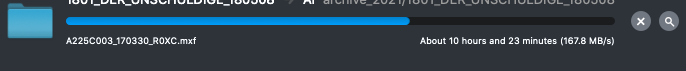

A real-world transfer that is running at the moment:

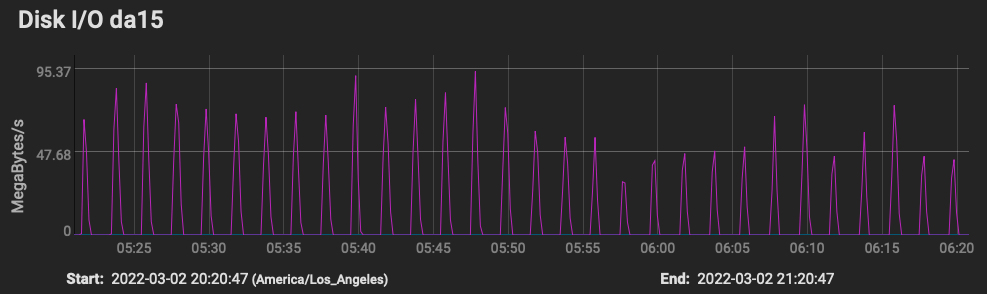

Disk activity during this transfer is very low with the disks mostly idle and short spikes every few minutes:

A dd test on the TrueNAS showed that the pool (compression off) should be capable of ~950MB/s write and 1500MB/s read.
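For anyone who wants to repeat a comparable local test, here is a minimal Python sketch of a dd-style sequential write measurement. It is not the command I ran. The function name, block size and test size are just illustrative choices, and it writes to a temporary directory by default, so you would point it at a dataset on the pool yourself.

```python
import os
import tempfile
import time


def sequential_write_mb_s(path, total_bytes, block_size=1024 * 1024):
    """Write total_bytes sequentially to path and return throughput in MB/s."""
    # Random data is incompressible, so dataset compression cannot inflate the result.
    block = os.urandom(block_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            chunk = block[: min(block_size, total_bytes - written)]
            f.write(chunk)
            written += len(chunk)
        f.flush()
        # fsync so the timing includes getting the data to stable storage,
        # not just into the write cache in RAM.
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6


if __name__ == "__main__":
    # Small default so the sketch runs anywhere; a real test should use a file
    # much larger than the amount of dirty data ZFS can hold in RAM.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "seqwrite.bin")
        rate = sequential_write_mb_s(target, 16 * 1024 * 1024)
        print(f"sequential write: {rate:.0f} MB/s")
```

With 512GB of RAM a short run mostly measures memory, so the file size matters more than the block size here.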

Does anyone have an idea where to look?

I don't expect 1000MB/s write speeds but something around 500MB/s should be possible with this hardware.
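To put that 500MB/s target in context, here is some quick arithmetic as a Python sketch. The link figures are raw line rates without any protocol overhead (an assumption, real SMB throughput will be lower), and the other numbers are simply the ones quoted in this post.

```python
def line_rate_mb_s(gbit_per_s):
    """Raw link rate in MB/s (decimal units), ignoring all protocol overhead."""
    return gbit_per_s * 1e9 / 8 / 1e6


TARGET_MB_S = 500       # what I am hoping for
DD_WRITE_MB_S = 950     # local dd write result quoted above
TYPICAL_SMB_MB_S = 200  # typical write speed seen over SMB

for name, gbit in [("10GbE", 10), ("40GbE", 40)]:
    ceiling = line_rate_mb_s(gbit)
    print(f"{name}: {ceiling:.0f} MB/s raw, target is {TARGET_MB_S / ceiling:.0%} of that")

print(f"target vs local dd write: {TARGET_MB_S / DD_WRITE_MB_S:.0%}")
print(f"typical SMB write vs local dd write: {TYPICAL_SMB_MB_S / DD_WRITE_MB_S:.0%}")
```

On paper the target sits well below both the link rates and the local dd result, which is why I think 500MB/s is a reasonable expectation.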

My suspicion is that there is something wrong with my ARC / ZFS tunables.

But completely turning off all ZFS tunables doesn't help.

I haven't systematically turned the tunables on and off, but of course I started with a clean system and added them gradually.

The Calomel network tunables led to an improvement. Apart from that, setting MTU to 9000 and strict sync = no on the SMB shares gave the biggest improvement.

Of course I have read many similar posts, but nothing has led to a breakthrough yet.

Happy for any hints.

We are also interested in hiring a TrueNAS / ZFS specialist who could help us set everything up.

Tunables currently set:

| ID | Type | Comment | Enabled | Variable | Value |
|---|---|---|---|---|---|
| 3 | loader | | 1 | hint.isp.0.role | 2 |
| 4 | loader | | 1 | hint.isp.1.role | 2 |
| 5 | loader | | 1 | hint.isp.2.role | 2 |
| 6 | loader | | 1 | hint.isp.3.role | 2 |
| 23 | sysctl | | 0 | net.iflib.min_tx_latency | 1 |
| 24 | loader | calomel | 1 | vfs.zfs.dirty_data_max_max | 1.37439E+11 |
| 25 | loader | calomel | 1 | vfs.zfs.dirty_data_max_percent | 25 |
| 26 | loader | calomel | 1 | net.inet.tcp.hostcache.enable | 0 |
| 27 | loader | calomel | 1 | net.inet.tcp.hostcache.cachelimit | 0 |
| 28 | loader | calomel | 1 | machdep.hyperthreading_allowed | 0 |
| 29 | loader | calomel | 1 | net.inet.tcp.soreceive_stream | 1 |
| 30 | loader | calomel | 1 | net.isr.maxthreads | -1 |
| 31 | loader | calomel | 1 | net.isr.bindthreads | 1 |
| 32 | loader | calomel | 1 | net.pf.source_nodes_hashsize | 1048576 |
| 33 | sysctl | calomel | 1 | kern.ipc.maxsockbuf | 16777216 |
| 34 | sysctl | calomel | 1 | net.inet.tcp.recvbuf_max | 4194304 |
| 35 | sysctl | calomel | 1 | net.inet.tcp.recvspace | 65536 |
| 36 | sysctl | calomel | 1 | net.inet.tcp.sendbuf_inc | 65536 |
| 37 | sysctl | calomel | 1 | net.inet.tcp.sendbuf_max | 4194304 |
| 38 | sysctl | calomel | 1 | net.inet.tcp.sendspace | 65536 |
| 39 | sysctl | calomel | 1 | net.inet.tcp.mssdflt | 8934 |
| 40 | sysctl | calomel | 1 | net.inet.tcp.minmss | 536 |
| 41 | sysctl | calomel | 1 | net.inet.tcp.abc_l_var | 7 |
| 42 | sysctl | calomel | 1 | net.inet.tcp.initcwnd_segments | 7 |
| 43 | sysctl | calomel | 1 | net.inet.tcp.cc.abe | -1 |
| 44 | sysctl | calomel | 1 | net.inet.tcp.rfc6675_pipe | 1 |
| 45 | sysctl | calomel | 1 | net.inet.tcp.syncache.rexmtlimit | 0 |
| 46 | sysctl | calomel | 1 | vfs.zfs.txg.timeout | 120 |
| 47 | sysctl | calomel | 1 | vfs.zfs.delay_min_dirty_percent | 98 |
| 48 | sysctl | calomel | 1 | vfs.zfs.dirty_data_sync_percent | 95 |
| 49 | sysctl | calomel | 1 | vfs.zfs.min_auto_ashift | 12 |
| 50 | sysctl | calomel | 1 | vfs.zfs.trim.txg_batch | 128 |
| 51 | sysctl | calomel | 1 | vfs.zfs.vdev.def_queue_depth | 128 |
| 52 | sysctl | calomel | 1 | vfs.zfs.vdev.write_gap_limit | 0 |
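To make the ZFS write-throttle values in this list easier to reason about, here is a rough Python sketch of what they work out to on this machine. It rests on two assumptions: that "512GB" of RAM means 512 GiB, and that I understand the OpenZFS parameters correctly (dirty_data_max is the percentage of RAM capped by dirty_data_max_max, and the sync and delay thresholds are percentages of dirty_data_max). The function names are my own.

```python
GIB = 1024 ** 3


def write_throttle_thresholds(ram_bytes, max_percent, max_max_bytes,
                              sync_percent, delay_percent):
    """Return the dirty-data limits (in bytes) implied by the tunables."""
    # dirty_data_max: a percentage of RAM, capped by dirty_data_max_max.
    dirty_max = min(ram_bytes * max_percent / 100, max_max_bytes)
    return {
        "dirty_data_max": dirty_max,
        # A txg sync is triggered once this much dirty data has accumulated.
        "sync_starts_at": dirty_max * sync_percent / 100,
        # Above this, incoming writes start being delayed (throttled).
        "delay_starts_at": dirty_max * delay_percent / 100,
    }


def seconds_to_fill(threshold_bytes, ingest_mb_s):
    """Time to accumulate threshold_bytes at a steady ingest rate in MB/s."""
    return threshold_bytes / (ingest_mb_s * 1e6)


limits = write_throttle_thresholds(
    ram_bytes=512 * GIB,         # assumption: 512GB means 512 GiB
    max_percent=25,              # vfs.zfs.dirty_data_max_percent
    max_max_bytes=1.37439e11,    # vfs.zfs.dirty_data_max_max
    sync_percent=95,             # vfs.zfs.dirty_data_sync_percent
    delay_percent=98,            # vfs.zfs.delay_min_dirty_percent
)
for name, value in limits.items():
    print(f"{name}: {value / GIB:.2f} GiB")

TXG_TIMEOUT_S = 120              # vfs.zfs.txg.timeout
fill_s = seconds_to_fill(limits["sync_starts_at"], ingest_mb_s=200)
print(f"time to reach the sync threshold at 200 MB/s: {fill_s:.0f} s "
      f"(txg timeout is {TXG_TIMEOUT_S} s)")
```

If I read this right, at around 200MB/s the 120 second timeout expires long before the 95% threshold is reached, so data would be flushed to the disks in bursts roughly every two minutes. That would at least be consistent with the mostly idle disks and short spikes I am seeing, but it is only arithmetic, not a diagnosis.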
