SpiritWolf

Dabbler

- Joined

- Oct 21, 2022

- Messages

- 29

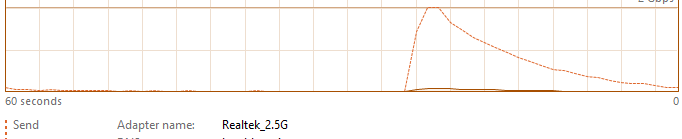

Pushing SMB large multi-gigabyte files TO the NAS causes a large transfer (via 2.5 network interface (intel) or single gig interface (Asus/Realtek) then dropping off.

Start of transfer, push from Mac or PC, 300MB/sec. After about 1 minute, 100, then 70/80MBS, then down to 1MB or so into the Kbytes. Often it disconnects.

Different Mobos (another Asus) different ethernet connections both fast and standard, they all fail.

Test Files: AntMan 4k rip 55gig (compressed as a zip)

Other files but not compressed, doesn't matter as much but will still exhibit

Smaller Files (seems that these do better)

Will come back to speed IF manually copied smaller files come afterward AND it doesn’t disconnect.

Happens with PC and MAC, using 2.5 from *original* tested Mobo (Asus internal REALTEK) or SFP+ 10Gig to Unify switch or combo or Macintosh 2.5 dongle.

The ONLY things not changed out are the RAM sticks and the Processor. Even the switches have been changed.

When connection fails, can’t re-connect w/o resetting CIFS/SMB or rebooting. Typically need to reboot. Reboot can take many minutes.

Deprecated protocol AFP will work…but weakly

———————————————————

TrueNAS 13-U2

Motherboard: Asus B-550 Plus AC-HES w/ current BIOS (tweaks perhaps?)

CPU: Ryzen 5600x 6 cores

Memory: 64 Gig ECC TrueColor 3200

Nics:

10Gb SFP+ PCI-E Network Card NIC, Compare to Intel X520-DA1, with Intel 82599EN Chip, Single SFP+ Port, PCI Express X8

...and

10Gtech 10GBase-T SFP+ Transceiver, 10G T, 10G Copper, RJ-45 SFP+ CAT.6a Module, to 2.5 switch (Mac, PC, and TrueNAS with 2.5 connection to Unifi 48 port switch for general distribution,

…and

Asus Realtek Gig MoBo Ethernet (Attached to Unify 48 port 1st Gen Switch but not always: sometimes use just the 2.5 connection alone)

HBA Card:

9207-8i PCIE3.0 6Gbps HBA LSI FW:P20 IT Mode ZFS FreeNAS unRAID 2* SFF-8087 US

(Attached to SODOLA 8-Port Unmanaged 2.5G Switch| 8 x 2.5GBASE-T Ports)

Drives: 5x Exos 18Tib RAID-Z2

GPU Radeon (used to just to see when output is wrong), not installed usually

PSU: Corsair RMX Series (2021), RM750x, 750 Watt, Gold, Fully Modular Power Supply

testparam-s attached

Start of transfer, push from Mac or PC, 300MB/sec. After about 1 minute, 100, then 70/80MBS, then down to 1MB or so into the Kbytes. Often it disconnects.

Different Mobos (another Asus) different ethernet connections both fast and standard, they all fail.

Test Files: AntMan 4k rip 55gig (compressed as a zip)

Other files but not compressed, doesn't matter as much but will still exhibit

Smaller Files (seems that these do better)

Will come back to speed IF manually copied smaller files come afterward AND it doesn’t disconnect.

Happens with PC and MAC, using 2.5 from *original* tested Mobo (Asus internal REALTEK) or SFP+ 10Gig to Unify switch or combo or Macintosh 2.5 dongle.

The ONLY things not changed out are the RAM sticks and the Processor. Even the switches have been changed.

When connection fails, can’t re-connect w/o resetting CIFS/SMB or rebooting. Typically need to reboot. Reboot can take many minutes.

Deprecated protocol AFP will work…but weakly

———————————————————

TrueNAS 13-U2

Motherboard: Asus B-550 Plus AC-HES w/ current BIOS (tweaks perhaps?)

CPU: Ryzen 5600x 6 cores

Memory: 64 Gig ECC TrueColor 3200

Nics:

10Gb SFP+ PCI-E Network Card NIC, Compare to Intel X520-DA1, with Intel 82599EN Chip, Single SFP+ Port, PCI Express X8

...and

10Gtech 10GBase-T SFP+ Transceiver, 10G T, 10G Copper, RJ-45 SFP+ CAT.6a Module, to 2.5 switch (Mac, PC, and TrueNAS with 2.5 connection to Unifi 48 port switch for general distribution,

…and

Asus Realtek Gig MoBo Ethernet (Attached to Unify 48 port 1st Gen Switch but not always: sometimes use just the 2.5 connection alone)

HBA Card:

9207-8i PCIE3.0 6Gbps HBA LSI FW:P20 IT Mode ZFS FreeNAS unRAID 2* SFF-8087 US

(Attached to SODOLA 8-Port Unmanaged 2.5G Switch| 8 x 2.5GBASE-T Ports)

Drives: 5x Exos 18Tib RAID-Z2

GPU Radeon (used to just to see when output is wrong), not installed usually

PSU: Corsair RMX Series (2021), RM750x, 750 Watt, Gold, Fully Modular Power Supply

testparam-s attached