AIM:

To help people looking at deduplication on TrueNAS 12+, what I've found on the way making it work on mine.

On sustained mixed loads, such as 50GB+ file copies and multiple transfers, using TrueNAS 12 with a deduped pool and default config, I now get almost totally consistent and reliable ~330-400 MB/sec client-server, server-client, and server-server, right now, and couldn't get close to that before, on 11.3 and earlier.

There's going to be quite a deep dive into some aspects of how dedup works. Like my other guides, I aim to leave you with enough understanding that you can probably solve your problems, and if not, you can interpret what you're seeing and understand where in FreeBSD and ZFS core functionality, to look for clarification.

I've had to look up arcane tunables, figure out dtrace to monitor the internals of ZFS... this is hopefully going to shortcut a lot of that for you..... if you can handle the information dump :)

This is a work in progress. I'm still moving my pool over to 12 and exploring what the new capabilities mean for it. So this will take time to write up and will be updated time to time as well. Its not nearly complete yet.

At this point the main takeaway is (1) what the issues are on 11.3 and earlier, and (2) it works nicely on 12

BACKGROUND: WHY DEDUP ANYWAY?! REALLY!!

Everyone knows dedup is an almost unworkable hog on ZFS. So why did I choose to go for it?

My home file server is used for heavy duty dedupable files. Its got a huge number of virtual machines, and also backups of my mum's photo albums. The backups repeat the entire contents, all 400 GB, rather than just contain changes to files. (She does full backups each time for reasons I'm comfortable with.)

I'm sure the detail can have holes picked in it. Why don't I do backups differently, or incrementally. User RAIDZ? use consumer not enterprise disks. Whatever. The upshot is, my pool is highly dedupable so I decided to go dedup and build a server capable of it.

So I built a server that the online info all said should be capable of dedup. Then I found it *still* wasn't up to the job. Huge RAM, huge L2ARC, huge fast pool, 10G LAN, and up to 11.3 it still couldn't handle one client on a quiet 10G LAN sending a single 20GB file over Samba. It stalled and failed - horribly and nastily. I now know why, and what to do to prevent that. I get reliable performance now. It's only taken me 3 or 4 years (9.x to 12-BETA1) of "So what the hell is going on!?" to find the answers.

This resource will summarise what I found on the way, for anyone else thinking of dedup on TrueNAS 12+ or OpenZFS 2.0+ generally. I'm busy working on the server config this week, so I'll begin writing this up in a bit when I'm done building.

ASSUMPTIONS ABOUT YOU, THE READER

I'm targeting this resource at a reader who knows enough, or can learn enough, to make use of the information in it. Someone who already has a bit of an idea about ZFS administration and managing their FreeNAS/TrueNAS server. Someone who knows that the hardware needs to be appropriate and isn't likely to be dirt cheap. Someone who knows dedup isn't likely to be easy, but wants to know how to make it work because they have a workload that matches it.

In other words, you've already got trhe basics of a *NAS server and now want to make it work with dedup. You want to know what's involved, and the pitfalls to watch for.

TERMINOLOGY GRAB BAG

A BIT ABOUT SSD I/O BEFORE WE START

I'll be referring in this page, to a type of SSD developed by Intel and Micron, called 3D X-Point (pronounced "crosspoint"). It's most widely sold as Intel's Optane. To see why, you need to know something about SSD sustained, random, and mixed, IO.

As is widely known, 4k random reads and (more so) writes are probably the single worst load for spinning disks, they have to physically move the read-write heads and wait for spinning metal, to do it. But I've got SSDs, problem solved, right?

Wrong.

What's less well known is that with the current exception of Optane and battery-backed DRAM cards ONLY, mixed sustained reads and writes are are absolutely capable of trashing top enthusiast and datacentre SSD performance.

With almost every SSD, you get a classic "bathtub" curve of very good for pure reads and pure writes, but dreadful for mixed sustained RW. That Intel datacentre SSD that does 200k IOPS? Reckon perhaps as little as 10-30K IOPS when used on mixed RW 4k loads. As at 2020, only 2nd gen onwards Optane breaks that pattern. Not your Samsung Pro SSDs, not your Intel 750 or P3700 NVMe write-oriented datacentre SSDs. Optane and pure battery backed RAM cards only.

I should clarify: That's nothing to do with SSDs having too-small DRAM or SLC cache. It's inherent in the SSD NVRAM chips themselves. Because it's nothing to do with the device cache type or size, a "better" SSD or one with "better" or no cache, won't help much.

If I refer specifically to Optane now and then, in this write-up, that's why. Not because I'm a fan-boi, but because they use a different technology that's largely immune to a problem that negatively and severely affects every other SSD on the market.

Just look at these graphs.....

We'll refer to this below, but bear them in mind. They could be important.

WHAT IS THE DEDUP TABLE (DDT)?

AND... IS IT STORED IN RAM, OR ON DISK?

This is a common point of confusion.

When deduplication is used, the dedup table is part of the way that data is stored in the pool. ZFS uses a hashed list of blocks, to allow easy identification of duplicate blocks. In simple terms, to find an actual block of data on disk, ZFS uses the DDT as an extra step.

The DDT is a fundamental pool structure used by ZFS to track what blocks make up what files, when dedup is used. It's as much a part of the pool as the dataset layout, the snapshot info, pointers to files, or the file date/time metadata. If you lose it, your pool is dead. If ZFS needs it, it reads it from the pool on demand, and uses the data contained to identify not just duplicate blocks, but also to find data on disk. So the dedup table is not a cache or an extra (like ZIL or L2ARC), that gets stored in RAM and if we lose it. too bad. If DDT data is in RAM or L2ARC, it's only there temporarily, like any other in-use pool data.

In other words, for all practical purposes you can think about ZFS handling dedup metadata identically to any of that sort of stuff, if that helps. It's integral to the pool. And the pool won't work well if it can't access the DDT fast, when needed.

IS DEDUP RIGHT FOR YOUR POOL?

The answer is always, what are your constraints and compromises?

Dedup is used to get around disk space issues, at the cost of extra processing. Dedup will always slow your system down, because it involves extra hashing and checking, and considerable amounts of small block (4k) I/O. If you have limited money or hardware/connectors, you might have to get dedup because the disks would be too expensive. If you don't have a problem with disk space, you may not need it.

These are some questions to ask, to assess whether dedup is right for *you*:

WHAT ARE THE PROBLEMS WITH DEDUP? (FreeNAS 11.3 and earlier)

On casually googling ZFS dedup, the it sounds like the main issue will be RAM. But historically there are at least 4 separate resource-related issues in getting ZFS dedup to work well.

(I'll be continuing writing this up over the next 2-3 weeks.....)

INTERPRETING THE PROBLEMS - SOFTWARE TOOLS THAT HELPED:

CHANGES IN OPENZFS 2.0 / TRUENAS 12:

To understand what made dedup really work for me, you need to know a bit about OpenZFS 2.0 and upcoming changes as of 2020. Not everyone does, so let's have a quick summary.

Originally ZFS was developed as part of Solaris, a Unix-like operating system by Sun. For a while Solaris went open source, then it became closed source again, but after it went closed source again, Ilumos took the open source version and forked it to create a continuing open source Solaris project. ZFS was part of that, and after a while ZFS development became a mishmash undertaken by Ilumos, the OpenZFS project, and various groups such as ZFS on Linux. With ZFS on FreeBSD being based on the Illumos code, changes took time to migrate between ZFS-Linux flavour, ZFS-Solaris flavour, and ZFS-FreeBSD flavour. Features were developed for ZFS on Linux (ZoL) that never made it into ZFS on FreeBSD for example, because ZFS on FreeBSD was based on ZFS for Illumos, which hadn't merged them in.

A little while ago, this situation was resolved. OpenZFS would be based in future on ZoL rather than ZFS from Illumos, I think that's because ZoL had developed further and had more momentum and use-base. With that decision made, a bunch of other new development work on ZFS was freed up and started to gain momentum as well, as the OpenZFS developer mailing list has shown since early 2020. TrueNAS 12 is the first FreeNAS/TrueNAS build to incorporate the new ZoL based ZFS code and extra features, and to benefit from these changes.

OpenZFS 2.0 itself is still developing. But below are some of the efficiency and speedup features either recently developed (recent 11.2 - 11.3 builds of FreeNAS), or new in TrueNAS 12, or in the OpenZFS development pipeline, as at August 2020. If I get any of this wrong, please drop me a note in the comments/discussion, and I'll fix it.

USEFUL CONFIG:

RESULTS:

Haven't got here yet, but some really revealing output dumps from this experiment are posted on my resource for SSD/Optane for now, if you want a preview!

Latency is now gorgeous! Just look! :)

I'm also using 12-BETA2 as a local iSCSI device in Windows. No issues at all, other than 2 settings needed to get networking reliable without dropout issues:

EYE CANDY:

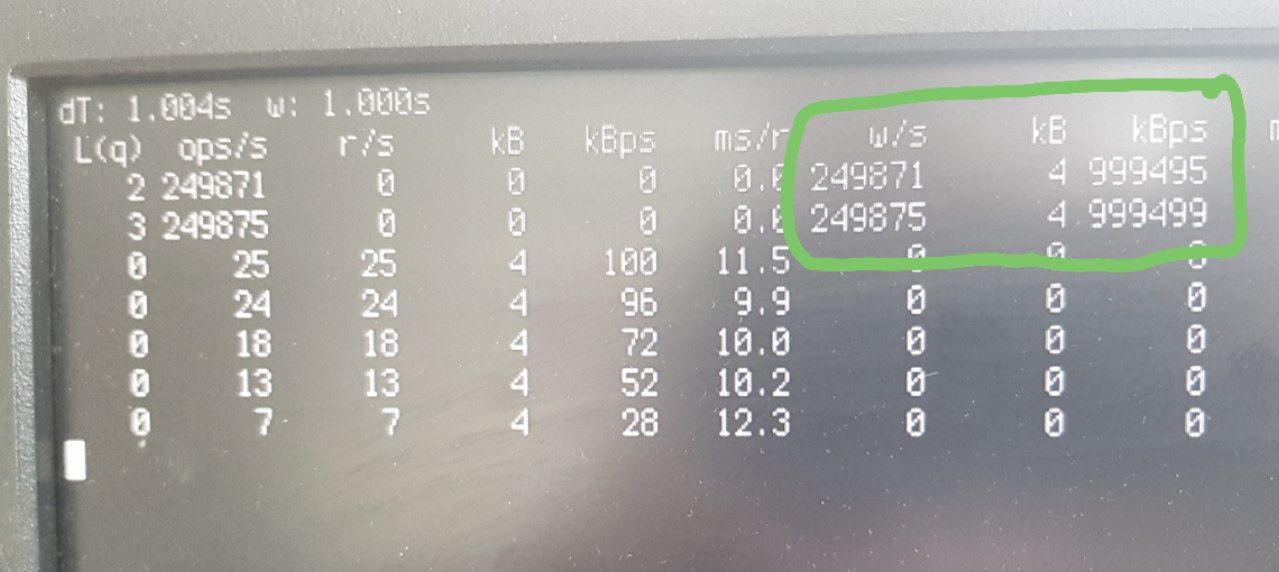

One statistic in the meantime.....

That 1/4 million 4k random IOPS is why special vdevs are essential for dedup........

To help people looking at deduplication on TrueNAS 12+, here's what I've found along the way while making it work on mine.

Using TrueNAS 12 with a deduped pool and the default config, I now get almost totally consistent and reliable ~330-400 MB/sec client-to-server, server-to-client, and server-to-server on sustained mixed loads, such as 50GB+ file copies and multiple transfers. On 11.3 and earlier I couldn't get close to that.

There's going to be quite a deep dive into some aspects of how dedup works. Like my other guides, I aim to leave you with enough understanding that you can probably solve your problems, and if not, that you can interpret what you're seeing and know where in FreeBSD and core ZFS functionality to look for clarification.

I've had to look up arcane tunables and figure out dtrace to monitor ZFS internals... hopefully this will shortcut a lot of that for you, if you can handle the information dump :)

This is a work in progress. I'm still moving my pool over to 12 and exploring what the new capabilities mean for it, so this will take time to write up and will be updated from time to time as well. It's nowhere near complete yet.

At this point the main takeaways are (1) what the issues are on 11.3 and earlier, and (2) that it works nicely on 12.

BACKGROUND: WHY DEDUP ANYWAY?! REALLY!!

Everyone knows dedup is an almost unworkable hog on ZFS. So why did I choose to go for it?

My home file server is used for heavily dedupable files. It's got a huge number of virtual machines, and also backups of my mum's photo albums. The backups repeat the entire contents, all 400 GB, rather than just containing changes to files. (She does full backups each time for reasons I'm comfortable with.)

Without dedup: 40 TB of data. Say 80 TB within 5 years. Now make that under 55% of a pool, because apparently ZFS likes to have a lot of empty space for maximum efficiency (55%? 75%? I don't know; the principle's the same). That's about 150 TB of capacity. Now add redundancy. My redundancy of choice is 3-way mirrors, plus a backup server with 2-way mirrors. So now I've got to buy, power, and connect 750 TB of disks (150 TB x 3 + 150 TB x 2). At 8-10 TB per disk when I was buying, that's about 85 HDDs. At £200 each, that's £17k. Ouch!

With dedup: I get ~4x deduplication, so 4x less data. My 40 TB dedups to about 10 TB. Future growth will also contain a disproportionate amount of dedupable material (repeats of existing data), so the 80 TB in 5 years' time will perhaps dedup down to 12-13 TB, not 20 TB. It's not linear, because much of the extra 40 TB already exists. Pool capacity needed in 5 years is cut from 80 TB to about 13 TB, about 84% down. So my HDD cost shrinks by 84% as well, from 85 HDDs @ £17k to 14 HDDs @ under £3k.
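
The disk arithmetic in the two paragraphs above can be reproduced with a few lines of Python. This is a rough sketch: the 55% fill limit and £200 per disk are the figures from the text, 9 TB is my assumed average disk size (the midpoint of 8-10 TB), and the 3-way + 2-way mirror layout is my own setup, not a general rule. The function name is just illustrative.

```python
import math


def disks_needed(data_tb, max_fill=0.55, mirror_widths=(3, 2),
                 disk_tb=9.0, price_gbp=200):
    """Rough raw-disk estimate for storing data_tb of (post-dedup) data.

    max_fill      -- keep each pool below this fraction full
    mirror_widths -- copies per server: 3-way mirror main box, 2-way backup box
    disk_tb       -- assumed average disk size (midpoint of 8-10 TB)
    price_gbp     -- assumed price per disk
    """
    pool_tb = data_tb / max_fill            # capacity needed per server
    raw_tb = pool_tb * sum(mirror_widths)   # every mirror copy is a full set of disks
    n_disks = math.ceil(raw_tb / disk_tb)
    return raw_tb, n_disks, n_disks * price_gbp


for label, stored_tb in [("no dedup", 80), ("~4x dedup", 13)]:
    raw_tb, n, cost = disks_needed(stored_tb)
    print(f"{label}: {raw_tb:.0f} TB raw, {n} disks, ~£{cost:,}")
```

This prints 81 disks (~£16,200) without dedup and 14 disks (~£2,800) with it. The text rounds 145 TB of pool capacity up to 150 TB, which is why it says "about 85" rather than 81.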

I'm sure the detail can have holes picked in it. Why don't I do backups differently, or incrementally? Use RAIDZ? Use consumer rather than enterprise disks? Whatever. The upshot is, my pool is highly dedupable, so I decided to go with dedup and build a server capable of it.

So I built a server that the online info all said should be capable of dedup. Then I found it *still* wasn't up to the job. Huge RAM, huge L2ARC, huge fast pool, 10G LAN, and up to 11.3 it still couldn't handle one client on a quiet 10G LAN sending a single 20GB file over Samba. It stalled and failed - horribly and nastily. I now know why, and what to do to prevent that. I get reliable performance now. It's only taken me 3 or 4 years (9.x to 12-BETA1) of "So what the hell is going on!?" to find the answers.

This resource will summarise what I found on the way, for anyone else thinking of dedup on TrueNAS 12+ or OpenZFS 2.0+ generally. I'm busy working on the server config this week, so I'll begin writing this up in a bit when I'm done building.

ASSUMPTIONS ABOUT YOU, THE READER

I'm targeting this resource at a reader who knows enough, or can learn enough, to make use of the information in it. Someone who already has a bit of an idea about ZFS administration and managing their FreeNAS/TrueNAS server. Someone who knows that the hardware needs to be appropriate and isn't likely to be dirt cheap. Someone who knows dedup isn't likely to be easy, but wants to know how to make it work because they have a workload that matches it.

In other words, you've already got the basics of a *NAS server and now want to make it work with dedup. You want to know what's involved, and the pitfalls to watch for.

TERMINOLOGY GRAB BAG

- DDT - "dedup table". The on-disk deduplication records, used to read deduped data and find existing duplicates.

A BIT ABOUT SSD I/O BEFORE WE START

(This section is a summary of my resource on SSDs and Optane. I have included it here because it turns out to be *so* critical to getting dedup working....)

In this page I'll be referring to a type of SSD developed by Intel and Micron, called 3D XPoint (pronounced "crosspoint"). It's most widely sold as Intel's Optane. To see why, you need to know something about sustained, random, and mixed SSD I/O.

As is widely known, 4k random reads and (even more so) writes are probably the single worst load for spinning disks, because they have to physically move the read-write heads and wait for the spinning platters to do it. But I've got SSDs, so problem solved, right?

Wrong.

What's less well known is that, with the current exception of Optane and battery-backed DRAM cards ONLY, mixed sustained reads and writes are absolutely capable of trashing the performance of top enthusiast and datacentre SSDs.

With almost every SSD, you get a classic "bathtub" curve: very good for pure reads and pure writes, but dreadful for mixed sustained RW. That Intel datacentre SSD that does 200k IOPS? Reckon on perhaps as little as 10-30k IOPS when it's used for mixed 4k RW loads. As at 2020, only 2nd gen onwards Optane breaks that pattern. Not your Samsung Pro SSDs, not your Intel 750 or P3700 NVMe write-oriented datacentre SSDs. Optane and pure battery-backed RAM cards only.

I should clarify: that's nothing to do with SSDs having too-small DRAM or SLC caches. It's inherent in the SSDs' NAND flash chips themselves. Because it has nothing to do with the device cache type or size, a "better" SSD, or one with a "better" cache or no cache at all, won't help much.

If I refer specifically to Optane now and then, in this write-up, that's why. Not because I'm a fan-boi, but because they use a different technology that's largely immune to a problem that negatively and severely affects every other SSD on the market.

Just look at these graphs.....

We'll refer to these below, so bear them in mind. They could be important.

WHAT IS THE DEDUP TABLE (DDT)?

AND... IS IT STORED IN RAM, OR ON DISK?

This is a common point of confusion.

When deduplication is used, the dedup table is part of the way that data is stored in the pool. ZFS keeps a table of block hashes (checksums) so that it can easily identify duplicate blocks. In simple terms, to find an actual block of data on disk, ZFS uses the DDT as an extra step.

The DDT is a fundamental pool structure that ZFS uses, when dedup is enabled, to track which blocks make up which files. It's as much a part of the pool as the dataset layout, the snapshot info, pointers to files, or the file date/time metadata. If you lose it, your pool is dead. When ZFS needs it, it reads it from the pool on demand, and uses the data it contains not just to identify duplicate blocks, but also to find data on disk. So the dedup table is not a RAM-only cache, or an optional extra (like a separate ZIL/SLOG device or L2ARC) that we can lose and shrug off. If DDT data is in RAM or L2ARC, it's only there temporarily, like any other in-use pool data.

In other words, for all practical purposes you can think of ZFS handling dedup metadata just like any of that other pool metadata, if that helps. It's integral to the pool, and the pool won't work well if it can't access the DDT fast when needed.
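
To make the "extra step" a bit more concrete, here's a deliberately simplified Python toy of a dedup table on the write and free paths. It is not ZFS's real DDT format: blocks are keyed by a SHA-256 digest (hashlib.sha256, chosen for illustration), each entry holds a hypothetical "location" and a reference count, and the class names are invented. What it shows is that every write and every free has to consult the table before it can proceed, and that the table, not the file, decides when a block's space is really released.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class DDTEntry:
    location: int      # hypothetical on-disk address of the single stored copy
    refcount: int = 1  # how many file blocks point at this copy


@dataclass
class ToyDedupPool:
    ddt: dict = field(default_factory=dict)    # checksum -> DDTEntry
    disk: list = field(default_factory=list)   # stand-in for physical blocks
    freed: list = field(default_factory=list)  # locations released back to free space
    ddt_lookups: int = 0                       # how often the table had to be consulted

    def write_block(self, data: bytes) -> bytes:
        key = hashlib.sha256(data).digest()
        self.ddt_lookups += 1                  # must check the DDT *before* writing
        entry = self.ddt.get(key)
        if entry is not None:
            entry.refcount += 1                # duplicate: no new data written
        else:
            self.disk.append(data)             # unique: store it and add a DDT record
            self.ddt[key] = DDTEntry(location=len(self.disk) - 1)
        return key                             # caller keeps this as its "block pointer"

    def free_block(self, key: bytes) -> None:
        self.ddt_lookups += 1                  # freeing also needs the DDT record
        entry = self.ddt[key]
        entry.refcount -= 1
        if entry.refcount == 0:                # last reference gone: space really freed
            del self.ddt[key]
            self.freed.append(entry.location)
```

Writing the same 4k block twice stores it once with a refcount of 2; freeing one copy releases nothing, and only the second free returns the space. Every one of those steps needed a DDT lookup.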

IS DEDUP RIGHT FOR YOUR POOL?

The answer always depends on your constraints, and the compromises you're willing to make.

Dedup is used to get around disk space issues, at the cost of extra processing. Dedup will always slow your system down, because it involves extra hashing and checking, and considerable amounts of small block (4k) I/O. If you have limited money or hardware/connectors, you might need dedup because enough disks to store everything undeduped would be too expensive. If you don't have a problem with disk space, you may not need it.

These are some questions to ask, to assess whether dedup is right for *you*:

- How much disk space do you actually *need* now? How much will you need in 3-5 years (depending how your mental horizon works for future stuff)?

- How much of that space will dedup actually save (how much of that data is/will be actually duplicated?)

- Can you use something like rsync (built in), which allows you to do incremental backups of your data? If you don't need infinite backups, or you can use incremental rather than full backups, you won't use so much disk space over time, so dedup won't show as many benefits or be as worthwhile.

- Can you/will you buy larger disks to hold your near-future storage needs undeduped?

- Is speed/simplicity, or money, the limiting factor here? Do you need to use dedup to make it affordable/practical?
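
For the "how much will dedup actually save" question, a rough estimate is possible before committing. On an existing pool, `zdb -S <pool>` simulates dedup and reports a DDT histogram. For data that isn't on ZFS yet, a hypothetical helper like the one below hashes each file in fixed-size, file-aligned records and counts duplicates. It assumes ZFS dedups whole records at one recordsize (128K is the default) and ignores compression, metadata and mixed recordsizes, so treat the result as a ballpark only.

```python
import hashlib
from pathlib import Path


def estimate_dedup_ratio(root, recordsize=128 * 1024):
    """Estimate dedup ratio by hashing fixed-size, file-aligned records.

    Returns (total_records, unique_records, ratio). unique_records is also a
    rough proxy for how many DDT entries the data would need.
    """
    seen = set()
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_symlink() or not path.is_file():
            continue
        with open(path, "rb") as f:
            while block := f.read(recordsize):  # the last record may be short
                total += 1
                seen.add(hashlib.sha256(block).digest())
    ratio = total / len(seen) if seen else 1.0
    return total, len(seen), ratio
```

Run it against a representative sample of your data, for example `estimate_dedup_ratio("/path/to/backups")`. A ratio close to 1.0 means dedup would cost you RAM and IO for very little saving.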

WHAT ARE THE PROBLEMS WITH DEDUP? (FreeNAS 11.3 and earlier)

On casually googling ZFS dedup, it sounds like the main issue will be RAM. But historically there are at least 4 separate resource-related issues in getting ZFS dedup to work well.

1 - RAM & dedup ratio

This is what every web page picks up on. It's true, ZFS uses a lot of RAM for dedup. But a lot of the time the high-end values arise from deduping unsuitable datasets. ZFS dedup uses a certain number of bytes for each unique block. If your data doesn't have enough duplicate blocks to make it worthwhile (a low dedup ratio), then there will be far more unique blocks than with good deduplication, the dedup table size will soar, and you'll need a lot more RAM to hold it. If your pool is highly dedupable (3x to 5x or more size reduction), the number of unique blocks is proportionately much lower and the RAM needed will seem much more reasonable.
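
A back-of-envelope sketch of why the ratio matters so much: the DDT has one entry per *unique* block, so for the same logical data, a higher ratio means proportionally fewer entries. The ~320 bytes of RAM per in-core entry below is a commonly quoted rule of thumb, not a figure from this post, and the real size varies between ZFS versions; treat it as an assumption, along with the 128 KiB average block size. The 171 million entries are from my own pool.

```python
def ddt_ram_bytes(logical_bytes, dedup_ratio, avg_block_bytes=128 * 1024,
                  bytes_per_entry=320):
    """Rough in-RAM DDT size: one entry per unique block.

    bytes_per_entry=320 is an assumed rule of thumb, not a measured value.
    """
    logical_blocks = logical_bytes / avg_block_bytes
    unique_blocks = logical_blocks / dedup_ratio   # higher ratio -> fewer entries
    return unique_blocks * bytes_per_entry


TiB = 2 ** 40
for ratio in (1.1, 2.0, 4.0):
    gib = ddt_ram_bytes(40 * TiB, ratio) / 2 ** 30
    print(f"40 TiB at {ratio}x dedup: ~{gib:.0f} GiB of DDT")

# My pool: ~171 million DDT entries, at the same assumed 320 bytes/entry
print(f"171M entries: ~{171e6 * 320 / 1e9:.0f} GB")
```

Under these assumptions, 40 TiB at 4x needs about 25 GiB of DDT, but the same data at 1.1x needs about 91 GiB.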

What *is* true is that ZFS will potentially need access to the dedup table records (DDT) for *every* disk block read/write. So you need enough RAM to hold those records for very high speed access (your ARC cache in RAM, or L2ARC, or very capable SSD special vdevs), and you need to be sure they won't be evicted from ARC if reloading them as 4k random mixed IO would cause significant delays. That means you need RAM, or at worst a very good L2ARC, possibly configured as dedicated to metadata.

2 - CPU

Dedup requires a lot more hash calculation and lookup, because hashing is the basis for dedup table lookup. It puts extra work on the CPU.
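
If you want a feel for the per-block hashing cost on your own CPU, this sketch times SHA-256 over 128 KiB blocks with Python's hashlib. It's only a rough proxy: ZFS hashes in kernel code with its own implementations, and the hash used for dedup is chosen by the dataset's dedup property (plain `on` means SHA-256), so absolute numbers will differ. The function name is hypothetical.

```python
import hashlib
import os
import time


def sha256_throughput_mb_s(block_size=128 * 1024, n_blocks=2000):
    """Time SHA-256 over n_blocks blocks on one core; return MB/s (1 MB = 10**6 bytes)."""
    blocks = [os.urandom(block_size) for _ in range(16)]  # reuse a small pool of buffers
    start = time.perf_counter()
    for i in range(n_blocks):
        hashlib.sha256(blocks[i % len(blocks)]).digest()
    elapsed = time.perf_counter() - start
    return block_size * n_blocks / elapsed / 1e6


if __name__ == "__main__":
    print(f"SHA-256: ~{sha256_throughput_mb_s():.0f} MB/s on one core")
```

Compare the result with your target network throughput: at 10G speeds, every incoming block has to be hashed before ZFS can even look it up in the DDT.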

3 - Write amplification

In ZFS, metadata writes themselves generate further writes. Changing a block changes its checksum, which has to be stored in a block, which changes *that* block's checksum, and alters free space, which alters the spacemap data, which alters *its* checksums, which have to be stored, which alters other metadata checksums. If multiple copies are kept, or parity calculated on RAIDZ, that's more amplification. For safety, *multiple* copies of all metadata are kept by default (see the dataset property redundant_metadata=all), so every metadata change is actually many metadata changes. Short version: adding extra data changes can add a lot more data updates. Dedup adds to this, though not unreasonably so. (All writes do it, but dedup writes have an extra table to update.) Worth noting.
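
The checksum ripple can be shown with a toy tree of checksums, where each parent stores the checksums of its children, so changing one data block changes every ancestor. Fanout 4 and metadata_copies=2 are arbitrary assumptions for readability: real indirect blocks hold far more pointers, and the number of extra metadata copies depends on redundant_metadata and copies. The toy leaves out spacemap and DDT updates entirely, which add further writes on top. Function names are illustrative.

```python
import hashlib


def build_tree(leaves, fanout=4):
    """Checksum tree: level 0 holds data-block checksums, the last level the root."""
    levels = [[hashlib.sha256(b).digest() for b in leaves]]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        # each parent ("indirect block") stores the checksums of its children
        levels.append([hashlib.sha256(b"".join(prev[i:i + fanout])).digest()
                       for i in range(0, len(prev), fanout)])
    return levels


def blocks_rewritten(leaves, index, new_data, fanout=4, metadata_copies=2):
    """Count block writes caused by changing one data block (toy model)."""
    before = build_tree(leaves, fanout)
    changed = list(leaves)
    changed[index] = new_data
    after = build_tree(changed, fanout)
    # every ancestor whose checksum changed must be rewritten, once per copy
    parents_changed = sum(a != b
                          for lvl_a, lvl_b in zip(before[1:], after[1:])
                          for a, b in zip(lvl_a, lvl_b))
    return 1 + parents_changed * metadata_copies


leaves = [bytes([i]) * 4096 for i in range(64)]
print(blocks_rewritten(leaves, 5, b"new" * 100))  # 1 data + 3 levels x 2 copies = 7
```

One changed 4k data block turns into 7 block writes even in this tiny 64-block tree, and the multiplier grows with tree depth and metadata copies.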

4 - Dedup table loading

This one is huge, rarely mentioned, and needs a big box around it.

When a pool uses dedup, every single block read requires lookup reads in the dedup table. Every single block written requires both dedup table reads beforehand (to find out what's on disk already), and dedup table updates at writeout.

This alone is so severe that it can, and quite probably will, utterly trash any hope of dedup usability/reliability, unless extra steps are taken (that aren't possible on 11.3 and earlier unless you have an all-SSD pool), including ensuring metadata is on low latency high speed storage. I cannot emphasise this enough.

Dedup tables are typically held as 4k blocks, and you'll remember what we said about 4k random IO. But the DDT also has to be read in order to write, and read *before* writing, and you'll remember what we said about mixed RW access, even on SSDs.

Now, dedup isn't the only metadata traditionally held as 4k blocks. The spacemaps, metadata, and indirect blocks can also be held that way. But spacemap can be told to preload to ARC when ZFS starts up, and the spacemap's block size and IO parameters can be configured using tunables. Matt Ahrens wrote a BSDCan paper on it in 2016 (pages 21-27 especially). The DDT blocks have none of that tunability on 11.3 and earlier, and only one of them introduced in 12+ (though it's enough to make dedup workable). You are stuck with 4k random IO, and often mixed RW, no DDT preload, no way to target the DDT and coalesce multiple 4k DDT IO into 16K~32K IO using larger block sizes... and it sucks.

Here's my own real-world example. I noted it on iXsystems' bug tracker and in the forums, before I finally understood what was going on.

My file server under 11.3 used Intel's top-end datacentre SSDs (P3700 NVMe), and mirrored datacentre SAS3 (12gbit/s) HDDs striped 4 ways, and 10 gigabit ethernet. It had 192 GB RAM and no VMs or other things to do but be a file server. It should roar.

Real-world result? On a quiet LAN with one single client, doing one single 30GB file save across SMB to a pool on 11.3 with over 10TB spare space, I monitored gstat to watch pool IO during the transfer. Naively you'd expect a bunch of 128K ~ 1MB writes.

Not a chance. I got a torrent of hundreds of thousands of 4k reads (that's literal, >10^5 or 10^6: my DDT has around 170M entries and the HDDs were providing a couple of thousand 4k reads per second between them). Other members confirm the issue ("My takeaway from this.... Dedupe VDEVs gets POUNDED; all said. Tons of IO..."). This resulted in IO latency of tens of seconds to 1 or 2 minutes before ZFS could load enough of the DDT into memory, to actually get writing out some of the file to the pool.

Enough 4k DDT IO, in fact, to nastily kill the Samba session.

And shortly afterwards, to kill my SSH sessions, LAN file share discovery and browsing of the server, iSCSI, zfs send/recv, ... *everything* that was networked.

Why?

Well.... my pool has 171 million blocks in the DDT. They are *all* stored as randomly placed 4K blocks, and every one of them is potentially a prerequisite to reading or writing any block of data. Think about that..... no matter your RAM and L2ARC, *every* block of data you R/W on the pool, will need the related randomly scattered 4k deduplication records fetched.
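
Some rough arithmetic with my own figures shows why this stalls. Assumptions: a 30 GiB file written as 128 KiB records (the default recordsize), a worst case where every record needs one uncached 4k DDT read, and the couple of thousand 4k reads per second my HDDs were managing. Real ZFS batches and caches some of this, so take it as an order-of-magnitude sketch, not a model of ZFS internals.

```python
def ddt_read_seconds(n_lookups, iops=2000):
    """Time to satisfy n_lookups uncached, random 4k DDT reads at a given IOPS."""
    return n_lookups / iops


GiB, KiB = 2 ** 30, 2 ** 10
records = 30 * GiB // (128 * KiB)          # records in a 30 GiB file
print(f"{records} records -> ~{ddt_read_seconds(records):.0f} s of DDT reads")

ddt_entries = 171_000_000                  # entries in my pool's DDT
hours = ddt_read_seconds(ddt_entries) / 3600
print(f"whole DDT at 2000 IOPS: ~{hours:.1f} hours")
```

That's about two minutes of pure DDT reads for one file, in line with the 1-2 minute stalls I saw, and reading the whole DDT at that rate would take roughly a day.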

ZFS was stalled because the disk IO system was snarled up trying to get enough DDT reads to continue writing. During that time, the incoming network buffers had quickly filled up (10G ethernet can do that *really* quickly) so the networking stack signalled the client to pause sending data for up to a minute or 2 at a time (TCP WINDOW=0).
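
How quickly "quickly" is can be sketched with a toy receive-buffer model. It isn't how a real TCP stack works (no ACK timing, window scaling or buffer autotuning), and the 16 MiB buffer is a hypothetical size. It only uses the fact that 10 Gbit/s is roughly 1,250,000 bytes per millisecond, and models a ZFS stall as the receiving application draining nothing.

```python
def ms_until_zero_window(buffer_bytes, send_bytes_per_ms, drain_bytes_per_ms,
                         max_ms=10_000):
    """Toy receive-buffer model: return the first millisecond at which the
    advertised window (free buffer space) hits zero, or None if it never does."""
    used = 0
    for ms in range(1, max_ms + 1):
        window = buffer_bytes - used               # what the receiver advertises
        used += min(send_bytes_per_ms, window)     # sender never exceeds the window
        used -= min(drain_bytes_per_ms, used)      # application (Samba -> ZFS) reads
        if used >= buffer_bytes:
            return ms
    return None


LINE_RATE = 1_250_000                              # ~10 Gbit/s in bytes per ms
print(ms_until_zero_window(16 * 2 ** 20, LINE_RATE, 0))          # stalled: 14 ms
print(ms_until_zero_window(16 * 2 ** 20, LINE_RATE, LINE_RATE))  # keeping up: None
```

With the pool stalled, even a generous buffer hits a zero window in milliseconds, and it then stays closed for as long as the DDT reads take.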

The client paused, and asked if it could resume. "Can I send?" "No, TCP window's zero." After a minute or so of repeated failed retries, the client, not unreasonably, terminated the Samba session and reported a file activity failure.

That wasn't the end of the fun. With the file transfer dying at around 20 - 40GB done, ZFS now had to *unwind* the entire partial transfer - unallocate all blocks already allocated, update all spacemaps and DDT to reflect the deletion of the part-file... that was another many minutes of IO...

At that point, because the file transfer and unwind were ongoing, the server could accept *no* traffic on that NIC for a while, with networking, Samba, and file IO all in stalled I/O chaos. The natural outcome was that the backed-up data and zero window didn't just kill the transfer. They killed anything that needed to reach the server and get a response: SSH, Windows Discovery (the server and its shares dropped off Windows Explorer), file share browsing, SCP, *everything* that relied on *any* of these. They came back maybe 15 minutes later, after it all settled down internally.....

The proof that this was related to the DDT and 4k disk IO, and not other factors, was that after 10 minutes or so, when it started to work again, I could rerun the same file transfer. This time, the needed DDT entries were already in RAM, so it had no problem at all the second time.

It wasn't like that first run solved the problem either. The 1st run might have loaded the required DDT blocks for *that* file. But it still only loaded a tiny part of the overall DDT to do it, so the next file hit the same fate regardless, as did the next.
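
A toy cache model shows the same pattern. It assumes an unlimited in-RAM cache (no eviction) and random placement of each file's DDT entries, which is a simplification; the helper name and the 1M-entry toy DDT are made up for illustration.

```python
import random


def uncached_ddt_reads(file_keys, cache):
    """Count DDT lookups that miss the in-RAM cache; misses are then cached."""
    misses = 0
    for key in file_keys:
        if key not in cache:
            misses += 1        # would be a random 4k read from the pool
            cache.add(key)
    return misses


rng = random.Random(0)
ddt_size = 1_000_000                        # toy DDT, far smaller than a real one
file_a = rng.sample(range(ddt_size), 5000)  # DDT entries touched by one file
file_b = rng.sample(range(ddt_size), 5000)  # a different file
cache = set()
print(uncached_ddt_reads(file_a, cache))    # cold: all 5000 lookups miss
print(uncached_ddt_reads(file_a, cache))    # same file again: 0 misses
print(uncached_ddt_reads(file_b, cache))    # new file: nearly all miss again
```

Re-sending the same file is free, but a different file's entries are scattered elsewhere in the DDT, so it pays the full cost again.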

I cannot emphasise this strongly enough. Dedup table 4k read-before-use, even without anything else, put such a demand on the pool's IO system, that even on very good hardware, without certain mitigations available in TrueNAS 12+, it single-handedly left dedup almost unworkable on FreeNAS 11.3, because it stalled - nastily. In stalling, it also trashed every LAN service and session of almost every important kind as a byproduct.

5 - Tunables / Parameters

ZFS has a huge number of tunables. Some of those will help a lot, but you need to figure out what's likely to help, based on your hardware. I can point out some important ones, but ultimately this one's down to your individual NAS hardware.

(I'll be continuing writing this up over the next 2-3 weeks.....)

INTERPRETING THE PROBLEMS - SOFTWARE TOOLS THAT HELPED:

CHANGES IN OPENZFS 2.0 / TRUENAS 12:

To understand what made dedup really work for me, you need to know a bit about OpenZFS 2.0 and upcoming changes as of 2020. Not everyone does, so let's have a quick summary.

Originally ZFS was developed as part of Solaris, a Unix-like operating system by Sun. For a while Solaris went open source (OpenSolaris), and after it went closed source again, Illumos took the open source version and forked it to create a continuing open source Solaris project. ZFS was part of that, and after a while ZFS development became a mishmash undertaken by Illumos, the OpenZFS project, and various groups such as ZFS on Linux. With ZFS on FreeBSD being based on the Illumos code, changes took time to migrate between the ZFS-Linux, ZFS-Solaris, and ZFS-FreeBSD flavours. Features were developed for ZFS on Linux (ZoL) that never made it into ZFS on FreeBSD, for example, because ZFS on FreeBSD was based on ZFS for Illumos, which hadn't merged them in.

A little while ago, this situation was resolved: OpenZFS would in future be based on ZoL rather than on ZFS from Illumos. I think that's because ZoL had developed further and had more momentum and a bigger user base. With that decision made, a bunch of other new development work on ZFS was freed up and started to gain momentum as well, as the OpenZFS developer mailing list has shown since early 2020. TrueNAS 12 is the first FreeNAS/TrueNAS build to incorporate the new ZoL-based ZFS code and extra features, and to benefit from these changes.

OpenZFS 2.0 itself is still developing. But below are some of the efficiency and speedup features either recently developed (recent 11.2 - 11.3 builds of FreeNAS), or new in TrueNAS 12, or in the OpenZFS development pipeline, as at August 2020. If I get any of this wrong, please drop me a note in the comments/discussion, and I'll fix it.

- Spacemaps v2 (11.3) and Log Spacemap (12-BETA) - enhancements to the way ZFS manages and tracks free space. Spacemaps v2 refers to longer records in space tracking, which enables other space tracking methods to work. Log spacemap refers to an overhaul of the way spacemaps themselves work. Rather than every change requiring an update to the spacemaps (a tree of free space in the pool), changes are initially written to a simple "log", which is merged into the spacemaps when enough changes have accumulated. That saves a lot of writes and a lot of time.

- Special Class vdevs (12-BETA) - the ability to stipulate that certain vdevs, typically SSD based, are reserved for metadata, dedup tables, and if desired other very small files and data. This moves that data off spinning disks and works around the 4k random RW issue.

- Warm/persistent L2ARC (coming soon) - L2ARC that doesn't lose its contents over a reboot, meaning metadata and dedup data that's already cached isn't lost and doesn't have to be reloaded gradually after every reboot.

- Preload dedup tables (almost ready, coming imminently) - a command that tells ZFS to crawl the dedup tables on disk, and cache them in ARC/L2ARC. So they are ready at high speed when needed, and don't have to be read in during file activity.

- Sequential scrub/resilver (12-BETA) - reading the pool metadata in first, then issuing resilver/scrub IO sequentially rather than as random IO, which is a lot faster.

- Dedup Log (coming hopefully 2021?) - a reworking of dedup table handling, analogous to Log Spacemap. In theory it should cut down dedup disk IO by a huge amount. It's being worked on, or there's active interest in it, but the timescale for when it'll be done is not so obvious. It looks like 2021, but iX staff will know its status much better than any users.
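
The log-then-merge idea behind Log Spacemap (and, in principle, a Dedup Log) can be sketched as a toy. This is greatly simplified and the class is hypothetical; as I understand it the real feature flushes metaslabs incrementally across transaction groups, and this model only shows why batching changes cuts the number of map rewrites.

```python
from collections import defaultdict


class ToyLogSpacemap:
    """Toy model: append alloc/free records to a log, merge them into the
    per-metaslab maps only when enough have accumulated."""

    def __init__(self, flush_every=1000):
        self.flush_every = flush_every
        self.log = []                    # pending (metaslab, delta) records
        self.maps = defaultdict(int)     # metaslab -> allocated bytes
        self.map_writes = 0              # spacemap rewrites performed

    def record(self, metaslab, delta):
        self.log.append((metaslab, delta))   # cheap sequential append
        if len(self.log) >= self.flush_every:
            self.flush()

    def flush(self):
        touched = set()
        for metaslab, delta in self.log:
            self.maps[metaslab] += delta
            touched.add(metaslab)
        self.map_writes += len(touched)      # one rewrite per touched map per flush
        self.log.clear()


lsm = ToyLogSpacemap(flush_every=1000)
for i in range(10_000):
    lsm.record(i % 50, 4096)                 # 10,000 changes spread over 50 metaslabs
print(lsm.map_writes)                        # 500, versus 10,000 if each change rewrote its map
```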

USEFUL CONFIG:

RESULTS:

Haven't got here yet, but some really revealing output dumps from this experiment are posted on my resource for SSD/Optane for now, if you want a preview!

Latency is now gorgeous! Just look! :)

I'm also using 12-BETA2 as a local iSCSI device in Windows. No issues at all, other than 2 settings needed to get networking reliable without dropout issues:

- My network card needs 9k jumbo frames turned off to work reliably. 4k seems okay. Don't know why. Bug reported.

- Tunable "kern.cam.ctl.iscsi.ping_timeout" set to 10 seconds (default=5) because it kept registering timeouts. Not sure if those disconnected the iSCSI session internally or not, but that fixed it and seems happier. Investigation left for future.
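
To check what a tunable is currently set to before changing it, a small Python helper that shells out to `sysctl -n <name>` works from the TrueNAS shell. The helper name is mine, and it only reads values; on TrueNAS I'd still make a permanent change through the GUI's Tunables page so that it survives reboots.

```python
import subprocess


def read_sysctl(name):
    """Return a sysctl's current value as a string, or None if unavailable."""
    try:
        result = subprocess.run(["sysctl", "-n", name],
                                capture_output=True, text=True, check=False)
    except FileNotFoundError:            # no sysctl binary on this system
        return None
    if result.returncode != 0:           # unknown name, permission problem, etc.
        return None
    return result.stdout.strip()


if __name__ == "__main__":
    print(read_sysctl("kern.cam.ctl.iscsi.ping_timeout"))
```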

EYE CANDY:

One statistic in the meantime.....

That 1/4 million 4k random IOPS is why special vdevs are essential for dedup........