Hello all,

I set up 4x 4 TB drives in RAIDZ2 on my system:

ASRock B560M-ITX/ac

Intel Core i3-10100

32 GB RAM

Delock 5 port SATA PCI Express x4 Card

4x WD Red Plus 4 TB

+ SSDs for Proxmox and VM backups

TrueNAS as a VM

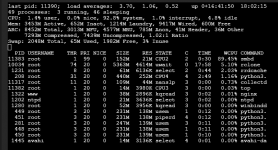

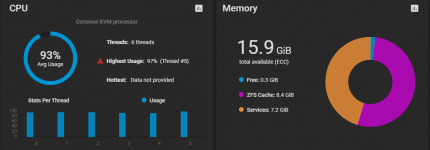

and I assigned 6 cores and 16 GB RAM to TrueNAS. When I copy files, I hit almost 100% usage on all 6 cores during the transfer (via an SMB share from a Windows client).

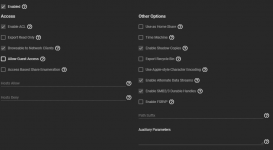

On a live dataset I had the compression set to ZSTD, then changed it to LZ4, which made no difference, and then turned compression off. The CPU load is still just as high during transfers.
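To rule out the compression change simply not having taken effect, the property can be read back from the shell of the TrueNAS VM. Below is a minimal sketch, assuming the `zfs` CLI is on the PATH there; the dataset name `tank/share` and the helper names `parse_zfs_get` and `get_compression` are placeholders of mine, not anything from my actual setup. It calls `zfs get -H` (tab-separated, no header line) and parses the result.

```python
import subprocess


def parse_zfs_get(output: str) -> dict:
    """Parse tab-separated `zfs get -H -o name,property,value,source` output.

    Returns {dataset_name: (value, source)}.
    """
    result = {}
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # The source field can contain spaces ("inherited from tank"),
        # but fields are separated by tabs, so split on tabs only.
        name, _prop, value, source = line.split("\t", 3)
        result[name] = (value, source)
    return result


def get_compression(dataset: str) -> dict:
    """Query the compression property of one dataset via the zfs CLI."""
    completed = subprocess.run(
        ["zfs", "get", "-H", "-o", "name,property,value,source",
         "compression", dataset],
        capture_output=True, text=True, check=True,
    )
    return parse_zfs_get(completed.stdout)


# Hypothetical usage on the TrueNAS VM (dataset name is a placeholder):
#   get_compression("tank/share")
```

Note that changing the compression property only applies to newly written blocks; data already on the pool keeps whatever compression it was written with.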

Any ideas where I can look to find out what may be misconfigured? The documentation states "TrueNAS does not require two cores, as most halfway-modern 64-bit CPUs likely already have at least two." Hence I believe such high system loads are not to be expected.

edit: added screenshots for further information

Thanks in advance!
