My current setup is an HPE ProLiant MicroServer Gen10+ with two 16 TB HDDs in RAID 0 (I know it has no redundancy, but it is just a small home server to start with), 16 GB of ECC memory, and a 1 TB NVMe SSD. I got the SSD to test things like L2ARC/SLOG, and I am now moving the system dataset to it: that way the boot pool doesn't consume the whole drive, but the system dataset can at least use its large write endurance. The boot pool is on an internal flash drive, which is why I am worried about write endurance and longevity. I am limited by the MicroServer's HDD slots and its single PCIe slot. The setup is for a directly connected iSCSI gaming/media NAS running Nextcloud, with remote access from outside the network.

I checked many posts regarding the noise issue, all of which say to move the system dataset to an SSD and to make syslog use the system dataset. I have done both. These posts also said that doing this would remove virtually all r/w activity from the boot pool, but that was not the case. On idle, none of the other drives show any activity; only the flash drive with the boot pool does, and it is written to every 5 seconds. I know it is specifically 5 seconds because of the flush timeout, and I know that raising that timeout could mean losing whatever data was written in that window. That is why I am avoiding it, and the writes would still happen with this "solution" anyway. I unset the pool for docker/kube and stopped it via systemctl stop to make sure it wasn't that. I also ran sudo find . -type f -mmin +6 to find what was changed in the last 6 minutes, but to be frank I don't understand its output.

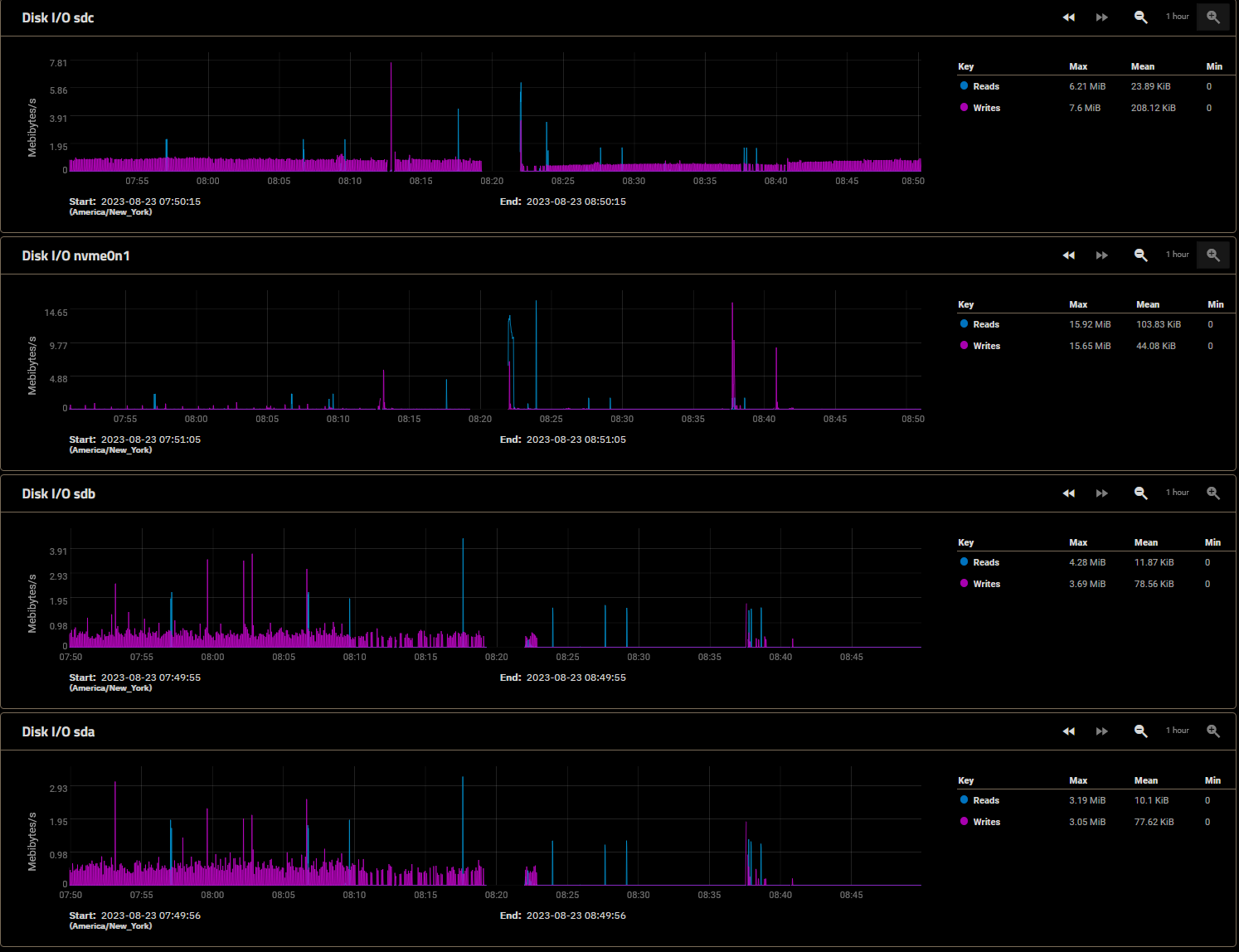

sda and sdb are the two 16 TB HDDs; sdc is the flash drive. If possible, I want to move the constant writes to the NVMe SSD without moving the boot pool. There was one more interesting post, but it pertains to FreeNAS:

https://www.truenas.com/community/threads/nas-is-writing-every-5-seconds.18925/

In the most recent replies, cyberjock mentioned that syslog actually writes to a RAM disk rather than to the boot pool itself. I would also like to know whether that is true for TrueNAS, and whether these charts simply don't reflect it. I might have missed a few details, but that should cover most of it.
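One way to check whether the charts show real writes reaching the flash drive is to sample /proc/diskstats twice and compare the sectors-written counter for sdc. This is only a rough sketch, assuming a Linux-based system with awk available; `sectors_written` and `write_delta` are hypothetical helper names, not TrueNAS tooling. In /proc/diskstats the device name is field 3 and sectors written is field 10, counted in 512-byte units.

```shell
#!/bin/sh
# Hypothetical helpers, not part of TrueNAS.

# sectors_written FILE DEVICE
# Print the sectors-written counter (field 10) for DEVICE from a
# diskstats-formatted FILE.
sectors_written() {
    awk -v dev="$2" '$3 == dev { print $10 }' "$1"
}

# write_delta DEVICE SECONDS [FILE]
# Sample the counter, wait SECONDS, sample again, and report the difference
# in bytes. FILE defaults to /proc/diskstats.
write_delta() {
    dev=$1
    secs=$2
    src=${3:-/proc/diskstats}
    before=$(sectors_written "$src" "$dev")
    sleep "$secs"
    after=$(sectors_written "$src" "$dev")
    # diskstats sectors are always 512 bytes, whatever the drive's sector size
    echo "$dev: $(( (after - before) * 512 )) bytes written in ${secs}s"
}

# Example (as root, on an idle system): write_delta sdc 5
```

If the number printed for sdc is non-zero on every 5-second sample while the system is idle, the writes really are reaching the flash drive and are not just an artifact of the reporting charts.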


root@truenas[~]# sudo find . -type f -mmin +1

./dead.letter

./.zshrc

./.warning

./.zsh-histfile

./.freeipmi/sdr-cache/sdr-cache-truenas.localhost

./.gdbinit

./.zlogin

./.bashrc

./.profile

./.midcli.hist

./tdb/persistent/activedirectory_user.tdb

./tdb/persistent/snapshot_count.tdb

./tdb/persistent/ldap_group.tdb

./tdb/persistent/activedirectory_group.tdb

./tdb/persistent/ldap_user.tdb
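A note on reading the listing above: in find, `-mmin +N` matches files last modified more than N minutes ago, while `-mmin -N` matches files modified within the last N minutes. So the output is a list of files that have not changed recently, and the search only covered the current directory (root's home). A minimal sketch of a search for recently written files, assuming GNU find; the helper name `recent_files` is illustrative only.

```shell
#!/bin/sh
# recent_files DIR MINUTES
# List regular files under DIR that were modified within the last MINUTES
# minutes. Hypothetical helper, not part of TrueNAS.
recent_files() {
    dir=$1
    minutes=$2
    # -xdev keeps find on the filesystem DIR lives on, so /proc, /sys and
    # other mounted datasets are skipped; run it again per dataset if needed.
    # -mmin -N means "modified less than N minutes ago".
    find "$dir" -xdev -type f -mmin "-$minutes" 2>/dev/null
}

# Example (as root): recent_files / 6
```

Running it against / rather than the home directory should show which files on the boot pool's root filesystem are being rewritten during the 5-second cycles.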
