Default ARC sizes are different on Linux and FreeBSD: https://openzfs.github.io/openzfs-docs/Performance%20and%20Tuning/Module%20Parameters.html#zfs-arc-max

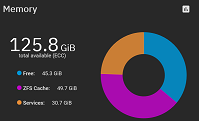

For a NAS with over 32GB RAM, I don't think the Linux formula is a great fit.

- Linux: 1/2 of system memory

- FreeBSD: the larger of all_system_memory - 1GB and 5/8 × all_system_memory
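To make the difference concrete, here is a minimal sketch of the two formulas listed above. It assumes "1GB" means 1 GiB and ignores any other limits the module may apply. The function names and the sample RAM sizes are made up for illustration and are not taken from OpenZFS.

```python
GIB = 1024 ** 3  # assumption: "1GB" in the formula is treated as 1 GiB


def linux_default_arc_max(total_bytes: int) -> int:
    """Linux default as listed above: half of system memory."""
    return total_bytes // 2


def freebsd_default_arc_max(total_bytes: int) -> int:
    """FreeBSD default as listed above: the larger of (RAM - 1 GiB) and 5/8 of RAM."""
    return max(total_bytes - GIB, total_bytes * 5 // 8)


if __name__ == "__main__":
    # Hypothetical RAM sizes, chosen only to show how the gap grows.
    for ram_gib in (16, 32, 128):
        total = ram_gib * GIB
        linux = linux_default_arc_max(total) / GIB
        freebsd = freebsd_default_arc_max(total) / GIB
        print(f"{ram_gib} GiB RAM: Linux {linux:.1f} GiB, FreeBSD {freebsd:.1f} GiB")
```

On larger systems the FreeBSD formula leaves only about 1 GiB outside the ARC cap, while the Linux one always leaves half of RAM outside it.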

My ARC is capped at 50 GB and there's a bunch of wasted memory (and an obscenely large "services" - but I do have a 16 GB VM).

I'm tempted to set it to ca. 100 GB based on this. Is there any good reason to avoid that? Does anyone know why it's set to 50% on TrueNAS SCALE? Maybe just because it's the default on Linux?

EDIT (to update):

Code:

    # cat /sys/module/zfs/parameters/zfs_arc_max
    53574109184

Something (TrueNAS?) is setting it to a lower value than the Linux default, which should be either "0" or about 68719476736 (64 GiB), depending on whether it evaluates the formula. WTF?
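For anyone who wants to compare these byte counts more easily, a small sketch that converts a `zfs_arc_max` value to GiB could look like this. The helper names are hypothetical, and it assumes that a value of 0 means the module falls back to its built-in default. The sysfs path is the one from the command above and is only read if it exists.

```python
from pathlib import Path

GIB = 1024 ** 3
# Path taken from the command in the post; only present on Linux with ZFS loaded.
ARC_MAX_PATH = Path("/sys/module/zfs/parameters/zfs_arc_max")


def to_gib(n_bytes: int) -> float:
    """Convert a byte count to GiB."""
    return n_bytes / GIB


def describe_arc_max(value: int) -> str:
    """Render a zfs_arc_max value in a human-readable form."""
    if value == 0:
        # Assumption: 0 means "no explicit cap set, use the module default".
        return "0 (module default applies)"
    return f"{value} bytes = {to_gib(value):.2f} GiB"


if __name__ == "__main__":
    print(describe_arc_max(53574109184))  # value reported in the post
    print(describe_arc_max(68719476736))  # value the post expected
    if ARC_MAX_PATH.exists():
        print(describe_arc_max(int(ARC_MAX_PATH.read_text().strip())))
```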
