pixelwave

Contributor

- Joined: Jan 26, 2022

- Messages: 174

UPDATE - For the final BOM and build please skip to:

www.truenas.com

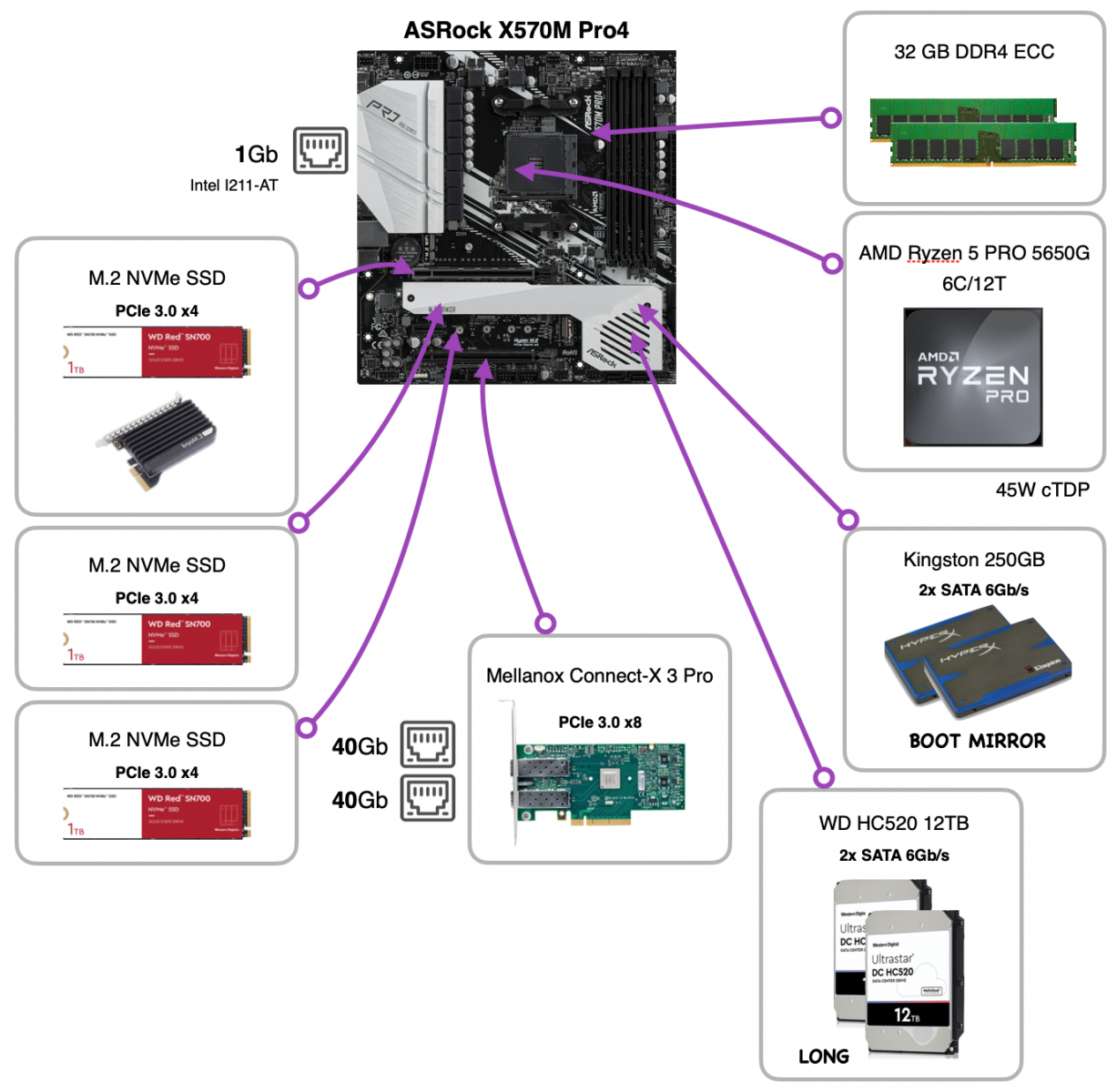

AMD Ryzen with ECC and 6x M.2 NVMe build

I wouldn't personally run Docker/Kubernetes off spinning disks; SSD all the way, imo. My Docker/Kubernetes setup is running from a 3x M.2 NVMe array (RAIDZ1) ..

Read below to follow my entire journey, starting with the initial build:

-----------

I am wrapping up my final hardware choices to upgrade my TrueNAS SCALE system.

I went through the official hardware recommendation list, but I would like to go with an AMD system because of a mix of price, power consumption, performance, form factor and current local availability.

Additional components:

- PSU: SEASONIC SSP-350 GT

- CASE: SilverStone Temjin Evolution TJ08-E

- CPU-FAN: Noctua NH-L9a-AM4 chromax.black

Storage setup:

Boot -> 2x Kingston FURY SSD 240GB (Mirror)

Fast Storage -> 3x WD Red SN700 1TB (RAIDZ1)

Archive -> 2x Western Digital Ultrastar DC HC520 12TB (Mirror)
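As a rough sanity check on the storage setup above, here is a minimal sketch of the usable capacity per pool. It assumes a simple model (a mirror yields one drive's capacity, RAIDZ1 yields the total minus one drive for parity) and ignores ZFS metadata, padding and the TB vs. TiB difference, so real numbers will come out somewhat lower. The function name `usable_tb` and the pool labels are made up for illustration.

```python
def usable_tb(layout: str, drives: int, size_tb: float) -> float:
    """Rough usable capacity in TB for a single vdev (simplified model)."""
    if layout == "mirror":
        # Every drive in a mirror holds a full copy of the data.
        return size_tb
    if layout == "raidz1":
        # One drive's worth of space goes to parity.
        return (drives - 1) * size_tb
    raise ValueError(f"unsupported layout: {layout}")


# Pools from the post: (layout, number of drives, size per drive in TB)
pools = {
    "boot": ("mirror", 2, 0.24),
    "fast": ("raidz1", 3, 1.0),
    "archive": ("mirror", 2, 12.0),
}

for name, (layout, drives, size_tb) in pools.items():
    capacity = usable_tb(layout, drives, size_tb)
    print(f"{name}: ~{capacity:g} TB usable")
```

Under these assumptions that works out to roughly 0.24 TB for boot, 2 TB for fast storage and 12 TB for archive, and each pool tolerates a single drive failure.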

Any issues, or anything I might have forgotten?

I know the boot mirror is overkill, but I still have those drives lying around for free. The case is also already here, which is why I'd like to go with mATX.
