LearnLearnLearn (Patron; joined Apr 26, 2015; 320 messages)

I'm at a loss as to where to look for this problem, but it seems to be with the TN NFS service itself.

I have a TrueNAS instance running on an old Dell R620 with a 10GbE card installed.

An NFS share is set up on the server solely to provide storage, backups, etc. to ESX 6.7 hosts.

After setting everything up with shares on both ESX hosts, I moved a VM from one of the hosts to the NFS share.

The speed was terrible, reaching a max of 1.3Mbps. I then tried moving the VM from the second host and the same thing happened.

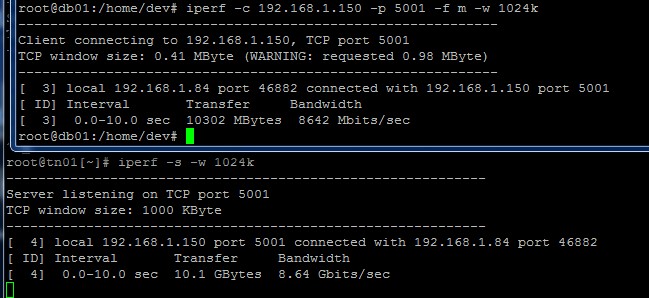

Then I ran iperf on the TN server using different sizes from 128k to 1024k. As you can see, the network transfer speeds are pretty much where they should be, I think.
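Since iperf only exercises the network, a rough way to check the storage path at the same sizes would be to time file writes into a directory on the share. This is only a minimal sketch, assuming Python 3 on some client that has the share mounted; the function name `measure_write_throughput` and the mount point in the usage comment are made up for illustration, and this is not how ESX itself writes.

```python
import os
import tempfile
import time


def measure_write_throughput(directory, block_size, total_bytes, sync=False):
    """Write total_bytes in block_size chunks to a temp file in `directory`.

    Returns the observed rate in MB/s (10**6 bytes per second).
    With sync=True, every block is flushed and fsync'd before the next one.
    """
    block = os.urandom(block_size)
    blocks = max(1, total_bytes // block_size)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
                if sync:
                    f.flush()
                    os.fsync(f.fileno())
            # Always flush once at the end so buffered data is counted.
            f.flush()
            os.fsync(f.fileno())
        elapsed = max(time.perf_counter() - start, 1e-9)
    finally:
        os.remove(path)  # clean up the scratch file
    return (blocks * block_size) / elapsed / 1e6


# Example usage (the mount point is hypothetical):
# for kib in (128, 256, 512, 1024):
#     rate = measure_write_throughput("/mnt/nfs_test", kib * 1024, 256 * 1024 * 1024)
#     print(f"{kib}k blocks: {rate:.1f} MB/s")
```

Running it once with `sync=False` and once with `sync=True` would show whether flushed writes are what falls off a cliff, as opposed to the network or the share in general.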

So, what is going on? Is NFS not able to keep up? Is there some additional configuration I should be doing on the TN server?

BTW, the 10GbE cards also support iSCSI, but I've read that iSCSI is only slightly faster, so I have not bothered to go through all that change/test.

All of the cards are HP 634026-001 554FLR 10/40G 2P SFP and the switch is an old NETAPP NAE-1101. The TN box would have a QLogic QLE8152.

