Hello everyone,

I'm noticing a difference in performance between servers using different access methods (SMB, NFS, and iSCSI) when accessing the same TrueNAS CORE server running 13.0-U1.1.

The server has an AMD 3700X, 64 GB of memory, 10GbE networking, and 20 disks configured as 10 vdevs. The disks are SSDs. The pool has no cache, log, metadata, dedup, or hot spare vdevs configured.

The connectivity to the server is not routed.

Each ESXi server has an AMD 5600G, 129 GB of memory, and a 10GbE connection.

The Windows admin box has a 5900X, 32 GB of memory, and a 10GbE connection.

The switch is a UniFi US-16-XG.

Jumbo frames (a larger MTU) have been configured on all systems and on the UniFi US-16-XG.
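One way to confirm jumbo frames work end to end is a don't-fragment ping at the largest payload that fits in a single frame. A minimal sketch, assuming an MTU of 9000 (the exact value isn't stated above) and IPv4 with a 20-byte IP header plus an 8-byte ICMP header; the target address is a placeholder:

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def ping_commands(mtu: int, target: str) -> dict[str, str]:
    """Build don't-fragment ping commands for each platform in this setup."""
    size = max_ping_payload(mtu)
    return {
        # ESXi: -d disables fragmentation, -s sets the payload size
        "esxi": f"vmkping -d -s {size} {target}",
        # Windows: -f sets Don't Fragment, -l sets the payload size
        "windows": f"ping -f -l {size} {target}",
        # TrueNAS CORE (FreeBSD): -D sets Don't Fragment, -s sets the payload size
        "truenas": f"ping -D -s {size} {target}",
    }


if __name__ == "__main__":
    # 192.0.2.10 is a placeholder documentation address, not a real host here
    for host, cmd in ping_commands(9000, "192.0.2.10").items():
        print(f"{host}: {cmd}")
```

If the full-size ping fails from a host while a 1472-byte one succeeds, some hop on that path is likely still using a 1500-byte MTU.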

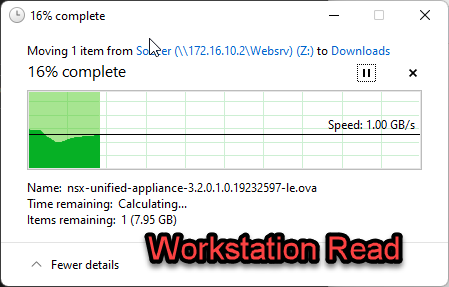

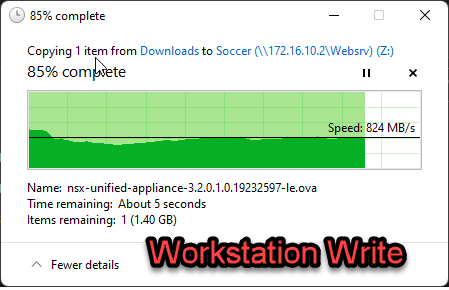

When using the Windows host, we see SMB writes of over 800 MB/s and reads of up to 1.14 GB/s.
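For context, here is a back-of-the-envelope comparison of those SMB numbers with the raw 10GbE line rate. This sketch assumes the reported figures use decimal units (1 GB = 10^9 bytes) and ignores Ethernet, TCP, and SMB overhead, so the practical ceiling is somewhat lower than the value computed here:

```python
LINK_GBIT_PER_S = 10  # nominal 10GbE line rate


def line_rate_gb_per_s(link_gbit: float) -> float:
    """Convert a link speed in Gbit/s to GB/s (decimal), ignoring protocol overhead."""
    return link_gbit / 8


def utilization(observed_gb_per_s: float, link_gbit: float = LINK_GBIT_PER_S) -> float:
    """Fraction of the raw line rate achieved by an observed throughput."""
    return observed_gb_per_s / line_rate_gb_per_s(link_gbit)


if __name__ == "__main__":
    print(f"raw ceiling: {line_rate_gb_per_s(LINK_GBIT_PER_S):.2f} GB/s")
    print(f"SMB read 1.14 GB/s -> {utilization(1.14):.0%} of line rate")
    print(f"SMB write 0.80 GB/s -> {utilization(0.80):.0%} of line rate")
```

With a raw ceiling of 1.25 GB/s, the reads work out to about 91% of line rate and the writes to at least 64%.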

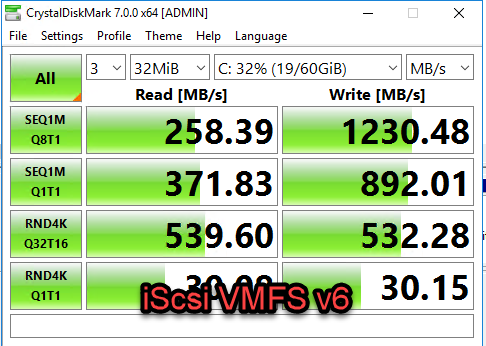

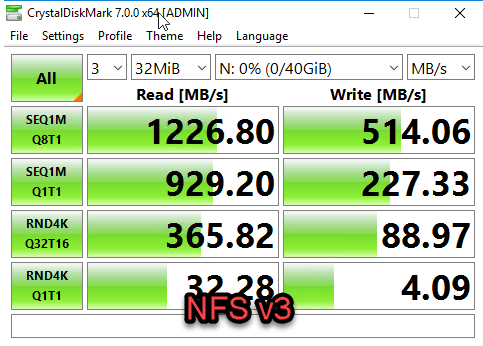

For NFS and iSCSI testing, I spun up a single VM and placed a virtual disk on each type of datastore. CrystalDiskMark gives the read and write results shown in the screenshots. iSCSI has slow reads but fast writes, while NFS has fast reads but slow writes.

Delayed ACK has been disabled on the ESXi hosts.

Today I also raised the ESXi iSCSI maximum I/O size from 128 KB to 512 KB with `esxcli system settings advanced set -o /ISCSI/MaxIoSizeKB -i 512`.
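To illustrate what this setting changes, the sketch below assumes that the software iSCSI initiator splits any request larger than `MaxIoSizeKB` into chunks of at most that size. That splitting behavior is an assumption made for illustration. It also assumes a 1 MiB sequential request, matching the block size of CrystalDiskMark's SEQ1M tests, and shows how many commands that request becomes before and after the change:

```python
import math


def commands_per_request(request_kb: int, max_io_kb: int) -> int:
    """Number of commands if a request is split into chunks of at most max_io_kb."""
    if max_io_kb <= 0:
        raise ValueError("max_io_kb must be positive")
    return math.ceil(request_kb / max_io_kb)


def chunk_sizes(request_kb: int, max_io_kb: int) -> list[int]:
    """Sizes (KB) of the individual chunks; the last chunk may be smaller."""
    if max_io_kb <= 0:
        raise ValueError("max_io_kb must be positive")
    full, rest = divmod(request_kb, max_io_kb)
    return [max_io_kb] * full + ([rest] if rest else [])


if __name__ == "__main__":
    request_kb = 1024  # assumed 1 MiB sequential request
    for max_io_kb in (128, 512):  # old and new MaxIoSizeKB values from the post
        print(f"MaxIoSizeKB={max_io_kb}: "
              f"{commands_per_request(request_kb, max_io_kb)} commands "
              f"{chunk_sizes(request_kb, max_io_kb)}")
```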

Any idea what I can do to even out the speeds?
