clifford64 (Explorer, joined Aug 18, 2019, 87 messages)

I have a TrueNAS system that acts as an iSCSI SAN for two ESXi hosts.

The iSCSI network is 10Gb and segmented from the data network. Jumbo frames are turned on, but I'm not sure they are working properly: I have a MikroTik CRS305-1G-4S+IN, and configuring jumbo frames on it is awkward.
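One common way to check that jumbo frames work end to end is a don't-fragment ping sized to fill a 9000-byte MTU (on ESXi, `vmkping -d -s <size>` sends such a ping from a VMkernel interface). If any hop (vSwitch, VMkernel port, switch port, or TrueNAS NIC) is still at 1500, the ping fails. The payload size has to leave room for the IPv4 and ICMP headers; this minimal sketch assumes plain IPv4 with no IP options.

```python
# Header sizes for IPv4 without options and an ICMP echo request.
IPV4_HEADER = 20
ICMP_HEADER = 8


def max_df_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


for mtu in (1500, 9000):
    print(mtu, max_df_ping_payload(mtu))
```

For a 9000 MTU this gives 8972 bytes, the size to pass to the don't-fragment ping.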

TrueNAS Specs:

CPU: Xeon E3-1245 V2 (I hardly ever see it go above 5% usage; not sure if this is normal)

RAM: 32GB ECC

Drives: 12 × 2TB, set up as 6 mirrors striped together

TrueNAS version: 12.0 U2

ESXi hosts: running ESXi 7.0, Ryzen 2700X, 32GB RAM.
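For reference, the pool layout above works out as follows. This is a rough sketch that ignores ZFS metadata overhead and recommended free-space headroom. As a rule of thumb, random I/O performance of a pool scales with the number of vdevs, so this layout behaves roughly like 6 "disks" for random reads.

```python
def striped_mirror_layout(num_drives: int, drive_tb: float, width: int = 2):
    """Return (vdev count, raw usable TB) for a pool of striped n-way mirrors."""
    if num_drives % width:
        raise ValueError("drive count must be a multiple of the mirror width")
    vdevs = num_drives // width
    # Each mirror vdev contributes the capacity of a single member drive.
    usable_tb = vdevs * drive_tb
    return vdevs, usable_tb


print(striped_mirror_layout(12, 2.0))  # (6, 12.0)
```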

I am getting very slow iSCSI read performance. I'm currently running a backup of a single 6TB VM: reads are extremely slow, and doing anything on any other VM is impossible while it runs.

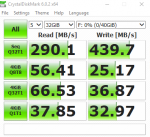

The same problem shows up when I do a storage vMotion of a VM from the iSCSI datastore to a local SSD on either of my two ESXi hosts: reads run at only 30-80 MB/sec.
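To put 30-80 MB/sec in perspective, here is a small sketch comparing it with the raw line rate of a 10Gb link (assuming MB means 10^6 bytes and ignoring TCP/iSCSI protocol overhead). At only a few percent of the link, raw network bandwidth is unlikely to be what limits these reads.

```python
def link_utilization(throughput_mb_s: float, link_gbit: float = 10.0) -> float:
    """Fraction of a link's raw bit rate used by a throughput given in MB/s."""
    # 10 Gb/s -> 1250 MB/s, before any protocol overhead.
    line_rate_mb_s = link_gbit * 1000 / 8
    return throughput_mb_s / line_rate_mb_s


for observed in (30, 80):
    print(f"{observed} MB/s = {link_utilization(observed):.1%} of 10Gb")
```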

I have about 10 VMs in total, with very low compute usage overall. The exception is a media server VM, which uses quite a bit of compute at times.

I have tried adding a 500GB L2ARC drive, but it made no noticeable difference in performance.
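Whether an L2ARC helps can be checked from the ARC counters rather than by feel. On TrueNAS CORE (FreeBSD) these are exposed via `sysctl kstat.zfs.misc.arcstats`, which prints `name: value` lines. The sketch below assumes that output format; the numbers in `sample` are made up for illustration. For a single sequential pass over a 6TB VM, a low L2ARC hit ratio would be expected, since the data is far larger than a 500GB cache.

```python
def parse_arcstats(text: str) -> dict:
    """Parse 'name: value' lines (as printed by sysctl) into {short_name: int}."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep and value.strip().isdigit():
            # Keep only the last dotted component, e.g. 'l2_hits'.
            stats[name.strip().rsplit(".", 1)[-1]] = int(value.strip())
    return stats


def hit_ratio(hits: int, misses: int) -> float:
    """Fraction of lookups served from cache; 0.0 if there were no lookups."""
    total = hits + misses
    return hits / total if total else 0.0


# Hypothetical sample output; real values come from the TrueNAS shell.
sample = """kstat.zfs.misc.arcstats.hits: 900
kstat.zfs.misc.arcstats.misses: 100
kstat.zfs.misc.arcstats.l2_hits: 5
kstat.zfs.misc.arcstats.l2_misses: 95"""

stats = parse_arcstats(sample)
print("ARC hit ratio:", hit_ratio(stats["hits"], stats["misses"]))
print("L2ARC hit ratio:", hit_ratio(stats["l2_hits"], stats["l2_misses"]))
```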

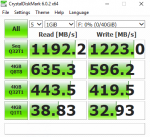

When I run CrystalDiskMark tests in VMs stored on the SAN, the results saturate the 10Gb iSCSI network. Writing VMs to the SAN reaches 300-400MB/sec. But doing anything involving writes is painfully slow, and while the backup is running the VMs are unusable. The backup is only reading the VM at 30-70 MB/sec.
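For scale, here is a quick estimate of how long a 6TB backup takes at the observed read rates, with the 300 MB/sec write speed included for comparison (a simple sketch assuming a constant sustained rate and decimal units).

```python
def transfer_hours(size_tb: float, rate_mb_s: float) -> float:
    """Hours to move size_tb (10^12 bytes) at a sustained rate_mb_s (10^6 bytes/s)."""
    seconds = size_tb * 1_000_000 / rate_mb_s
    return seconds / 3600


for rate in (30, 70, 300):
    print(f"6 TB at {rate} MB/s: about {transfer_hours(6, rate):.1f} h")
```

At 30-70 MB/sec the backup takes roughly one to two and a half days, during which the other VMs are starved for I/O.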

Any ideas on how to troubleshoot this?
