velocity08 (Dabbler, joined Nov 29, 2019, 33 messages)

Hi team,

Here's an interesting observation. I'm wondering whether anyone has seen this behavior before and/or could explain it.

Setup:

- New Supermicro FreeNAS 11.3-U2 box
- 32 threads
- 128 GB RAM
- Dual 10 Gb SFP+ NICs
- iSCSI multipath
- ESXi datastore
- VMware Round Robin set in the iSCSI adapter on ESXi
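As a reference point for what Round Robin should do on the ESXi side, here is a minimal Python model (not VMware code). It assumes two active paths and that the initiator moves to the next path after a fixed number of I/Os; 1000 is VMware's Round Robin default. The path names are hypothetical stand-ins for the paths through ix0 and ix1.

```python
from typing import List


def round_robin_paths(num_ios: int, paths: List[str], iops_limit: int = 1000) -> List[str]:
    """Return the path chosen for each I/O under a simple round-robin model.

    Assumption: the initiator sends `iops_limit` consecutive I/Os down one
    path, then switches to the next active path, wrapping around at the end.
    """
    if not paths:
        raise ValueError("need at least one active path")
    if iops_limit < 1:
        raise ValueError("iops_limit must be >= 1")
    # Integer division groups I/Os into blocks of iops_limit; modulo cycles paths.
    return [paths[(i // iops_limit) % len(paths)] for i in range(num_ios)]


if __name__ == "__main__":
    from collections import Counter

    # Hypothetical path labels, one per FreeNAS interface.
    counts = Counter(round_robin_paths(10_000, ["path-ix0", "path-ix1"]))
    print(dict(counts))  # {'path-ix0': 5000, 'path-ix1': 5000}
```

The model only covers which path the initiator picks for each request. It says nothing about which interface the FreeNAS side uses to send its replies, which is where the ix1 asymmetry shows up.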

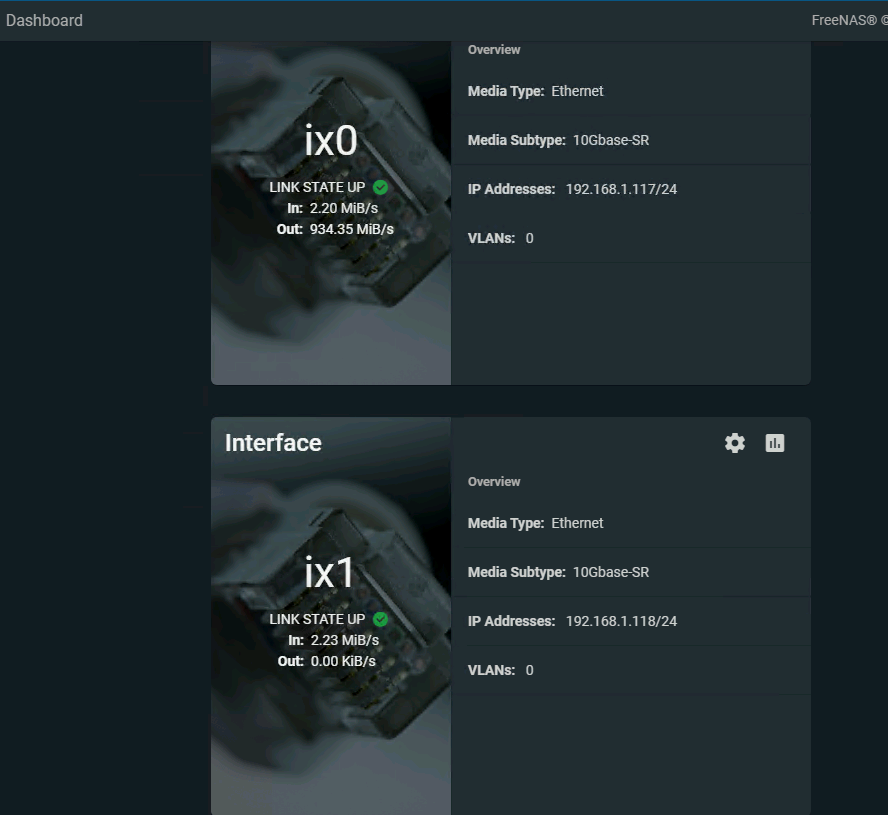

When performing I/O load testing (reads, writes, and mixed loads), we see both inbound and outbound traffic on one of the 10 Gb NICs (ix0) all the time, which is expected.

On the second 10 Gb NIC (ix1), we only ever see inbound data; there is zero outbound data going back to ESXi.

See the screenshot for an example.

Any ideas or theories?

Cheers,

G
