clifford64

Background: I am in the process of upgrading and rebuilding my system. Currently, I have two ESXi servers in a homelab connected to my TrueNAS system. I work in IT, and the systems are there for me to learn on and experiment with. I have also started using them for a few personal items like Plex Media Server and a game server. I have added a few SSDs I had lying around to create an SSD pool for running virtual machines, and I plan to use my main storage pool only as file-level storage over SMB for my Plex server.

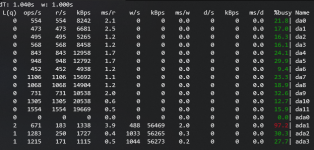

After adding the three SSDs to their own RAIDZ1 pool, I created an iSCSI LUN and attached it to my ESXi servers. I vMotioned a single VM to the pool for testing and got pretty slow performance. I used gstat -dp to monitor individual disk usage and noticed that a single SSD (ada1) was reporting busy while the others were barely doing anything. The same thing happened when running an iozone test on the pool. The SSD in question is a Samsung 850 Pro, and I would expect an 850 Pro to perform better than this. vMotion speeds to the SSD pool were about 80MB/sec or less. These are all older drives, so I am wondering if this one is starting to degrade and is on its way to failing.
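The by-eye comparison of per-disk busy values described above can be sketched as a small check. This is only an illustration under stated assumptions: the function name `find_hot_disks`, the 3x threshold, and the sample percentages are made up for the example and are not measurements from this system or anything gstat provides itself.

```python
from statistics import median


def find_hot_disks(busy_pct, ratio=3.0):
    """Return disks whose %busy is at least `ratio` times the median of the other disks.

    busy_pct maps a device name to a %busy reading (hypothetical input format,
    e.g. values copied by hand from a monitoring tool).
    """
    hot = []
    for name, value in busy_pct.items():
        others = [v for n, v in busy_pct.items() if n != name]
        if not others:
            continue  # a single disk has nothing to be compared against
        # Floor the baseline at 1% so near-idle peers do not flag trivial load.
        baseline = max(median(others), 1.0)
        if value >= ratio * baseline:
            hot.append(name)
    return sorted(hot)


# Placeholder readings for illustration only, NOT real measurements.
sample = {"ada1": 98.0, "ada2": 12.0, "ada3": 10.0}
print(find_hot_disks(sample))
```

A result that names one device while its peers sit near idle matches the symptom described here; an empty result means the load is spread roughly evenly.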

I vMotioned the VM back to my normal spinning-storage iSCSI pool and read speeds were great: 400MB/sec read from the SSD pool and 400MB/sec write to the spinning pool. The bottleneck in that instance was the 10Gb network. I then recreated the pool as a two-way mirror using only the other two drives, leaving out ada1. I performed the vMotion again and both drives were busy and fully utilized. This new vMotion wrote to the SSD pool at about 200-250MB/sec.
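To put the throughput figures above in context, each one can be expressed as a fraction of the raw line rate of a 10Gb link. This is a rough sketch only: it assumes decimal units (1 Gb = 1000 Mb) and ignores iSCSI/TCP/Ethernet overhead, so real usable throughput is lower than the line rate used here. The function names are hypothetical.

```python
LINK_GBIT = 10  # 10Gb network, as stated in the post


def line_rate_mb_per_s(gbit):
    """Raw line rate in MB/s for a link of `gbit` Gb/s (decimal units, no overhead)."""
    return gbit * 1000 / 8


def fraction_of_link(mb_per_s, gbit=LINK_GBIT):
    """Fraction of the raw line rate that a given MB/s figure represents."""
    return mb_per_s / line_rate_mb_per_s(gbit)


# Rates quoted in the post, in MB/s.
observed = {
    "RAIDZ1 SSD pool write": 80,
    "mirror SSD pool write (low)": 200,
    "mirror SSD pool write (high)": 250,
    "SSD read / spinning pool write": 400,
}

for label, rate in observed.items():
    print(f"{label}: {rate} MB/s is {fraction_of_link(rate):.0%} of raw line rate")
```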

I am also guessing performance is going to suffer because the 3 SSDs are all different models.

I have:

ada1 - Samsung 850 Pro

ada2 - Crucial MX300

ada3 - Samsung 850 Evo

My goal for the SSD pool is simply faster, more responsive operating systems for the VMs I am running.

Questions:

1. Is there anything else that could be wrong with the 3-drive SSD pool that has a single busy drive? Or is it probably a combination of a bad drive and mixing different models?

2. Any recommendations for SSDs to use in a 3-drive RAIDZ1 pool? I would prefer to stick with standard consumer drives as I don't have a lot of money for this upgrade. Or are standard consumer drives a bad idea no matter what? I don't have a massive workload. I have:

- 2 domain controllers for testing

- An online intermediate CA

- A powered-off offline root CA

- A Veeam backup server that runs off a local SSD on the ESXi host, and a Veeam proxy running on TrueNAS storage (backs up to a local RAID controller on that host, separate from TrueNAS)

- A pfSense router

- An Ubuntu reverse proxy

- An Ubuntu web server

- An Ubuntu game hosting server for Minecraft, Valheim, and Zomboid (at most 3-5 friends play on them, and only about 2-4 times per week)

- A Red Hat email server for testing (fewer than 2 emails a week)

- A vCenter

- An Ubuntu media server running the arrs (main media storage will be on spinning storage and I would like to run the OS on the SSD pool)

3. Are there any special settings or other considerations for an SSD pool versus a traditional HDD pool?