Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

z2pool gone crazy after stupid tinkering-no data related issues but: inaccessible GUI options, eternal resilver loop and wrong disk identifiers

- Thread starter ElGusto

- Joined

- Aug 9, 2022

- Messages

- 321

> Yes. What method would you use for erasing the drive? Just dd zeroes over the GPT table? Full erasure would take days, again probably.

(When the time comes) Hook it up to a laptop (USB to SAS/SATA, ~$15) running an Ubuntu (or whatever) live CD, run GParted, and erase the partition table (don't create a new GPT).

- Joined

- Aug 9, 2022

- Messages

- 321

Or run the server from a Linux live CD and do it there? Remove all the other drives first, so you don't get confused and have an accident.

Hello!

The resilver has run through - and sadly has started over again.

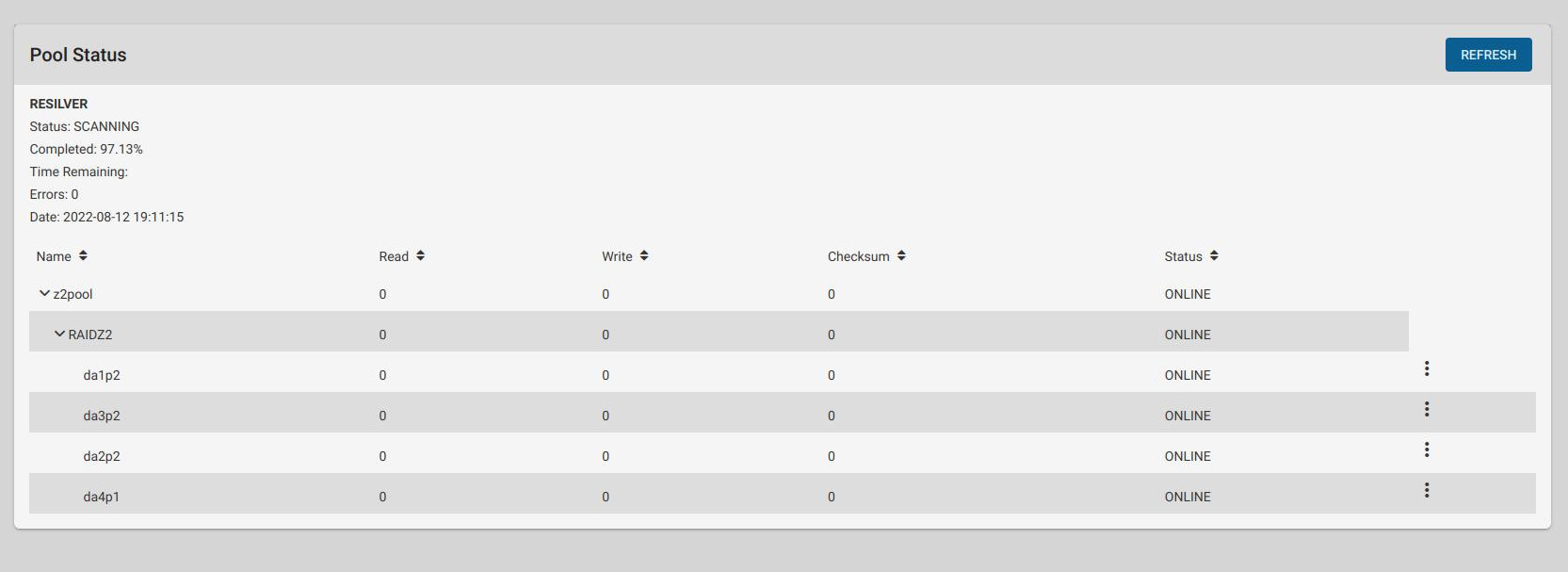

Here's a screenshot I made shortly before it was done:

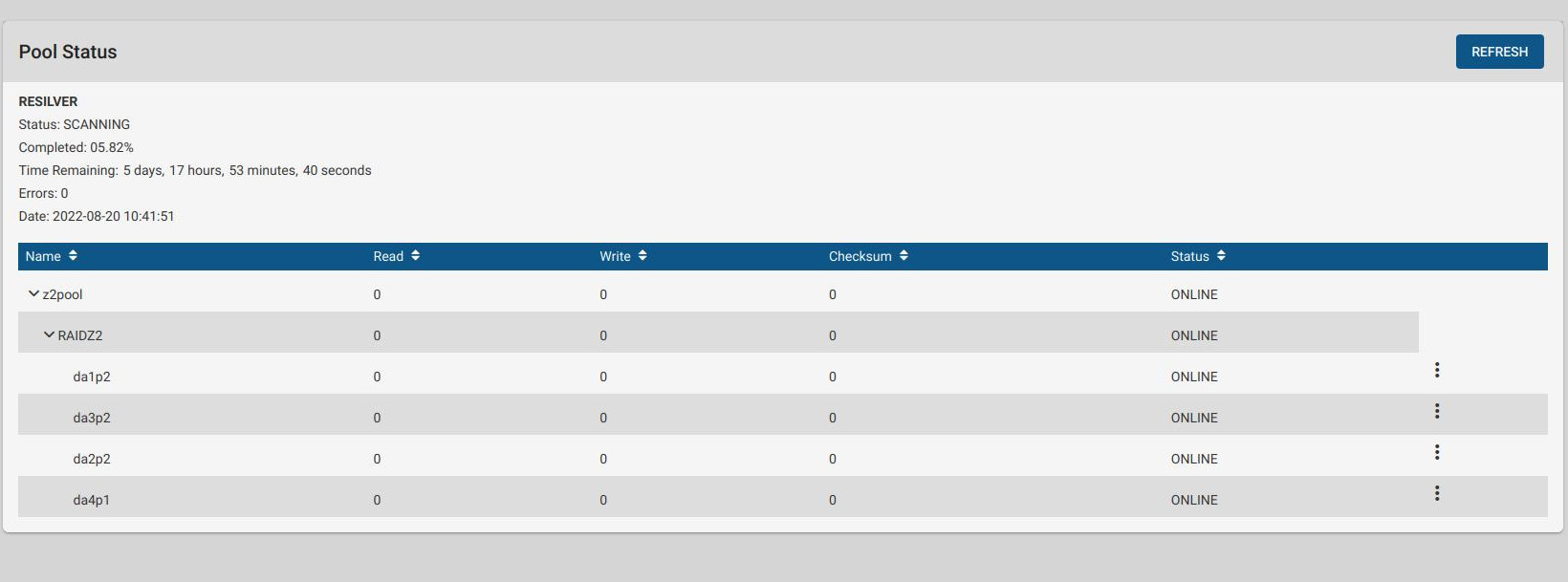

As you can see, there are no errors reported. But it still started over again.

But I noticed two differences:

1. An estimated time is now shown, unlike before (no remaining time was given in the first screenshot).

2. The estimated time of about 5 days is just half of what the previous resilver took.

I don't know if this means a lot, but I guess I will let it run through once more ...

Yes, I would. If I had some spare storage :(

Aside from this, I think there has to be a solution, like clearing the whole ZFS database. It obviously has some permanent metadata stored, which led to the pool always being imported with some errors until I did it with a manual parameter override, as described in post 43.

So I see a good chance that the existing pool would run fine if I imported it into another host system.

- Joined

- Jul 12, 2022

- Messages

- 3,222

> Yes, I would. If I had some spare storage :(
>
> Aside from this I think there has to be a solution.. like clearing all the ZFS database, as it obviously has some permanent metadata stored, which led to the pool always being imported with some errors, until I did it with manual parameter override, as described in post 43.
>
> So I see a good chance that the existing pool would run fine if I imported it into another host system.

That's why you want 3-2-1: 3 copies of each file in 2 different systems, 1 of which offsite.

Expensive, but helpful.

I personally don't have my backup offsite, but that's enough redundancy for me (although I am talking about less than 3TB) and it allows me to experiment a bit and be more daring.

- Joined

- Jul 12, 2022

- Messages

- 3,222

> So I see a good chance, that the existing pool would run fine, if I imported it into another host system.

Do a clean installation then.

- Joined

- Dec 30, 2020

- Messages

- 2,134

> The resilver has run through - and sadly has started over again.

Likely, some drives are so strained by the resilver that they have to go offline, or at least become unresponsive, while they work out committing all writes in SMR layout; so the drives get out of sync with the rest of the array and ZFS has to resilver again. And again. And again.

You may hope that there will be less and less to resilver and the loop will eventually end.

You should fear that the drives will get so stressed from constant resilvering that they will eventually break down, all of them in short sequence.

For your own safety, replace these SMR drives with CMR ones. Or use the SMR drives as a traditional RAID6, but not with ZFS.

Hello!

> so the drives get out of sync with the rest of the array and ZFS has to resilver again. And again. And again.

Could this happen without a single reported error?

- Joined

- Jul 12, 2022

- Messages

- 3,222

> Could this happen without a single reported error?

Courtesy of SMR drives.

Try looking into system logs.
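For anyone following along, here is a minimal sketch of what "looking into system logs" could mean in practice. It only filters a log for lines that mention timeouts. The log path is an assumption (`/var/log/messages` is the usual FreeBSD system log), and `disk_timeouts` is a hypothetical helper name, not a TrueNAS tool.

```shell
# Keep only lines that look like timeouts (case-insensitive); reads stdin.
disk_timeouts() {
    grep -iE 'timeout|timed out'
}

# LOG is an assumption: /var/log/messages is the usual FreeBSD system log.
LOG=${LOG:-/var/log/messages}
if [ -r "$LOG" ]; then
    # "|| true" keeps the script from failing when no line matches.
    disk_timeouts < "$LOG" || true
fi
```

No output does not prove the drives behaved; it only means nothing in that file matched the pattern.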

- Joined

- May 17, 2014

- Messages

- 3,611

> Hello!
>
> Could this happen without a single reported error?

Good point. You might have to look at the OS logs and look for disk timeouts.

If the SMR drives being too busy is the issue, there may be a way to work around it. Newer OpenZFS versions include a way to throttle resilvers, slowing down resyncs. This might allow ZFS to reduce the speed at which it attempts to write, allowing the SMR drives to "catch up". I don't have the details of this tunable, but perhaps this will help:

https://openzfs.github.io/openzfs-d...ing/Module Parameters.html?highlight=resilver

It's worth a shot.
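A hedged sketch of how one might find out which resilver-related tunables a given system actually exposes, rather than guessing names. The OpenZFS module parameter documentation lists, among others, `zfs_resilver_min_time_ms`; on a FreeBSD-based TrueNAS I would expect such parameters to show up as sysctls, but that mapping is an assumption, so the snippet simply lists whatever exists. `resilver_tunables` is a hypothetical helper name.

```shell
# Keep only lines that mention "resilver" (case-insensitive); reads stdin.
resilver_tunables() {
    grep -i 'resilver'
}

# List whatever resilver-related tunables the running kernel exposes.
# "|| true" keeps the script going when nothing matches or sysctl is missing.
sysctl -a 2>/dev/null | resilver_tunables || true
```

Whether changing any of the listed values actually helps SMR drives keep up is untested here; read the documentation for each parameter before touching it.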

> If this SMR too busy is the issue, there may be a way to work around it. Newer OpenZFS includes a way to throttle re-silvers, slowing down re-syncs. This might allow ZFS to reduce the speed at which it's attempting to write, allowing the SMR drive to "catch up". I don't have the details of this tunable, perhaps this might help;

Well, I had this idea of 'too slow' drives, too - but I cannot see how anything would intentionally lead to the resilver process repeating without reporting any problem. IMHO such behaviour would clearly qualify as a bug...

Otherwise - bugs are a real thing, even in ZFS. I have seen other threads where bugs in ZFS actually led to repeated resilvers, like a bug that was in the zed daemon (I don't know if this even exists on TrueNAS/BSD) or the 'deferred resilver' issue already mentioned above.

Well, I will keep your proposal in mind.

My current 'roadmap' now is:

1. Wait for the current resilver to finish, again, because it's showing an estimated time now, unlike before, and because it seems to run twice as fast as before.

2. If this doesn't help, I will toggle the deferred feature up to two times, and run a resilver after each time.

3. If this also fails, I will try to fix the issue with da4 (different disk layout) as a last resort and then let it resilver again.

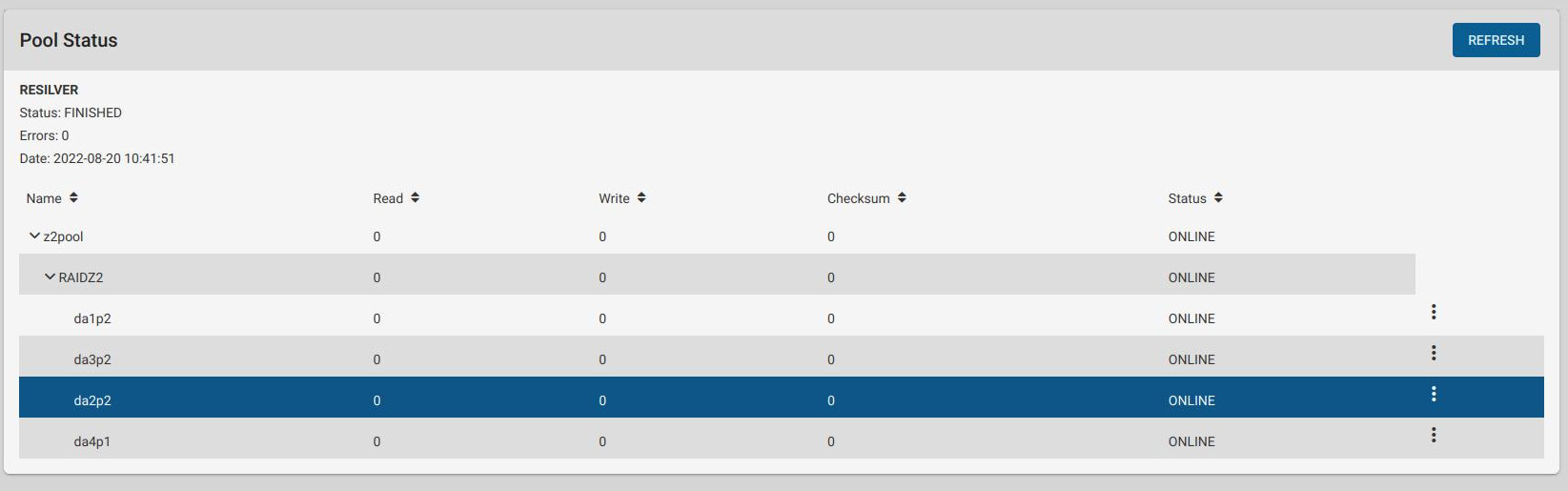

Yippie! It finally FINISHED!

    root@truenas[~]# zpool status z2pool
      pool: z2pool
     state: ONLINE
      scan: resilvered 5.54T in 6 days 14:01:50 with 0 errors on Sat Aug 27 00:43:41 2022
    config:

            NAME                                            STATE     READ WRITE CKSUM
            z2pool                                          ONLINE       0     0     0
              raidz2-0                                      ONLINE       0     0     0
                gptid/2affd65a-0bc6-11ed-bd90-000c29077bb3  ONLINE       0     0     0
                gptid/10046937-6b06-11eb-9b87-000c29077bb3  ONLINE       0     0     0
                gptid/aa7c274d-0bc3-11ed-bd90-000c29077bb3  ONLINE       0     0     0
                gptid/9ce5247f-331b-3148-9b38-c958d2bd057a  ONLINE       0     0     0

    errors: No known data errors
    root@truenas[~]#

After about a dozen repeated resilvers, it finally stopped: the resilver that completed was the one that followed fixing the issue that had made da1 not properly accessible from the WebUI.

So it seemingly was all about some error in TrueNAS' configuration data, which probably resulted from all the restarts and offline/online procedures I performed too quickly.

Thanks to all for your hints!

I am now wondering if it's worth 'fixing' da4 with its strange partitioning scheme, which for unknown reasons is different from the other drives...

So, what do you people think about this:

    root@truenas[~]# gpart show /dev/da1
    =>         40  15628053088  da1  GPT  (7.3T)
               40           88       - free -  (44K)
              128      4194304    1  freebsd-swap  (2.0G)
          4194432  15623858696    2  freebsd-zfs  (7.3T)

    root@truenas[~]# gpart show /dev/da2
    =>         40  15628053088  da2  GPT  (7.3T)
               40           88       - free -  (44K)
              128      4194304    1  freebsd-swap  (2.0G)
          4194432  15623858696    2  freebsd-zfs  (7.3T)

    root@truenas[~]# gpart show /dev/da3
    =>         40  15628053088  da3  GPT  (7.3T)
               40           88       - free -  (44K)
              128      4194304    1  freebsd-swap  (2.0G)
          4194432  15623858696    2  freebsd-zfs  (7.3T)

    root@truenas[~]# gpart show /dev/da4
    =>         34  15628053101  da4  GPT  (7.3T)
               34         2014       - free -  (1.0M)
             2048  15628034048    1  !6a898cc3-1dd2-11b2-99a6-080020736631  (7.3T)
      15628036096        16384    9  !6a945a3b-1dd2-11b2-99a6-080020736631  (8.0M)
      15628052480          655       - free -  (328K)

Would it have any negative impact on the pool, or is it just a 'cosmetic' problem?

Should I even bother changing this, or just ignore it?

What I think is that this difference might impact performance, as the other drives wouldn't work 'synchronously' with da4 .. but does this even matter for a soft RAID like z2? Probably such issues only affect hardware RAIDs!?
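One way to put numbers on the layout question is to check the arithmetic behind the `gpart` output above. The sketch below assumes 512-byte logical sectors (gpart reports offsets and sizes in sectors; the sector size itself is not shown in the thread) and uses two hypothetical helpers, `alignment` and `sectors_to_gib`. It checks whether each data partition starts on a 4 KiB boundary and compares the sizes of the ZFS partitions on da1 and da4.

```shell
# Assumption: 512-byte logical sectors.
SECTOR_BYTES=512

# Print "aligned" if a partition's start sector falls on a 4 KiB boundary.
alignment() {
    if [ $(( $1 * SECTOR_BYTES % 4096 )) -eq 0 ]; then
        echo aligned
    else
        echo misaligned
    fi
}

# Convert a sector count to whole GiB (rounded down).
sectors_to_gib() {
    echo $(( $1 * SECTOR_BYTES / 1024 / 1024 / 1024 ))
}

# Start sectors and sizes copied from the gpart output in this thread.
echo "da1 p2 start: $(alignment 4194432)"
echo "da4 p1 start: $(alignment 2048)"
echo "da1 p2 size:  $(sectors_to_gib 15623858696) GiB"
echo "da4 p1 size:  $(sectors_to_gib 15628034048) GiB"
```

Under that assumption both partitions start 4 KiB aligned, and the da4 partition is slightly larger than the others, mostly because da4 has no 2 GiB swap partition in front. Since a raidz vdev is limited by its smallest member, the extra space on da4 would simply go unused. That points toward a mostly cosmetic difference, but it is arithmetic on the listing, not a measurement of pool performance.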

- Joined

- Apr 24, 2020

- Messages

- 5,399

Leave it alone, and monitor pool stability for a while. I would wait a month before attempting a replacement of da4 to correct the partitioning.
