Elliot Dierksen

I know I've seen it multiple times from @jgreco and @HoneyBadger, among others, but I had never gotten around to testing it. Yes, you were all right that a bunch of mirrors performs better. I went with a real-world test for me, which is migrating my 4 production VMs from local storage in the ESXi server to a FreeNAS NFS share and back.

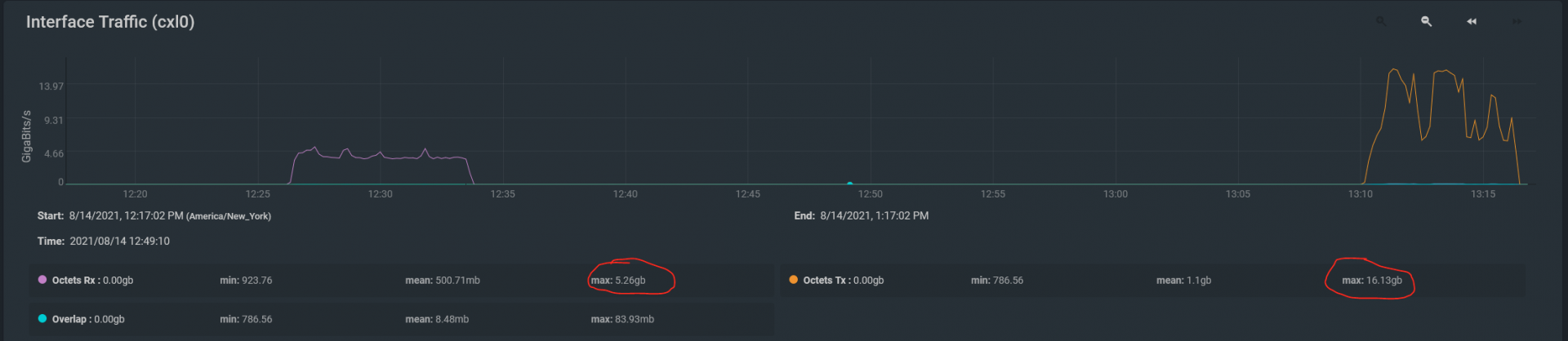

This is my external array with 16 3.5" drives. Before, it was 2 x 8 disk RAIDZ2 vdevs with an Optane SLOG. Now it is 8 x 2 disk mirrors with the same Optane SLOG. I could get close to the same read numbers off the Z2 vdevs, but the writes were around 2G with peaks around 3.8G. Now the writes are consistently around 4G with peaks around 5.2G. Now I have to debate whether I want to destroy and redo all the storage in my primary FreeNAS.

Towards that, would you expect to see similar numbers in the primary system? The drives in the external array are 6G SAS drives at 7200rpm. The ones in the primary are 2.5" 6G SATA drives at 5400rpm. Just curious.
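
For anyone weighing the same change, here is a rough sketch of the layout arithmetic for the two 16-drive configurations described above. It only counts disks and vdevs; it is not a benchmark and does not predict throughput. The helper name `layout_summary` is hypothetical, and the idea that write performance tends to track vdev count is a commonly cited ZFS rule of thumb that I am assuming here, not something this snippet measures.

```python
# Layout arithmetic for a 16-drive pool (illustrative sketch only).

def layout_summary(vdevs, disks_per_vdev, redundancy_per_vdev):
    """Return (total_disks, data_disks, vdev_count) for a pool layout.

    redundancy_per_vdev is the number of disks per vdev that hold
    parity or mirror copies rather than unique data.
    """
    total = vdevs * disks_per_vdev
    data = vdevs * (disks_per_vdev - redundancy_per_vdev)
    return total, data, vdevs

# Old layout: 2 vdevs of 8-disk RAIDZ2 (2 parity disks per vdev).
raidz2 = layout_summary(vdevs=2, disks_per_vdev=8, redundancy_per_vdev=2)

# New layout: 8 vdevs of 2-way mirrors (1 redundant copy per vdev).
mirrors = layout_summary(vdevs=8, disks_per_vdev=2, redundancy_per_vdev=1)

for name, (total, data, vdev_count) in (
    ("2 x 8 RAIDZ2", raidz2),
    ("8 x 2 mirrors", mirrors),
):
    print(f"{name}: {total} disks, {data} data disks, {vdev_count} vdevs")
```

The trade-off it makes visible: the mirror layout gives up usable space (8 data disks instead of 12) in exchange for four times as many vdevs.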
