I'm having trouble following the TrueNAS Scale procedure for a failed disk. One of the disks in my NAS has failed, but from the UI I can't figure out how many disks are actually in the NAS, which one has failed, or what is happening now. I'm a little surprised at how badly the UI communicates this (the one exception is the email notification that a disk failed, which worked perfectly fine).

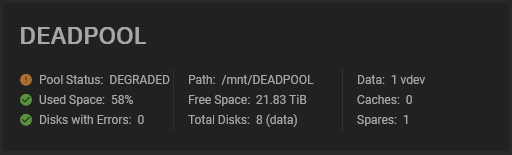

The home screen does say the pool is degraded, but there is no notification in the top right corner. I would expect a failed disk to light up all kinds of warnings, but no. All I see is a small orange status on the home screen.

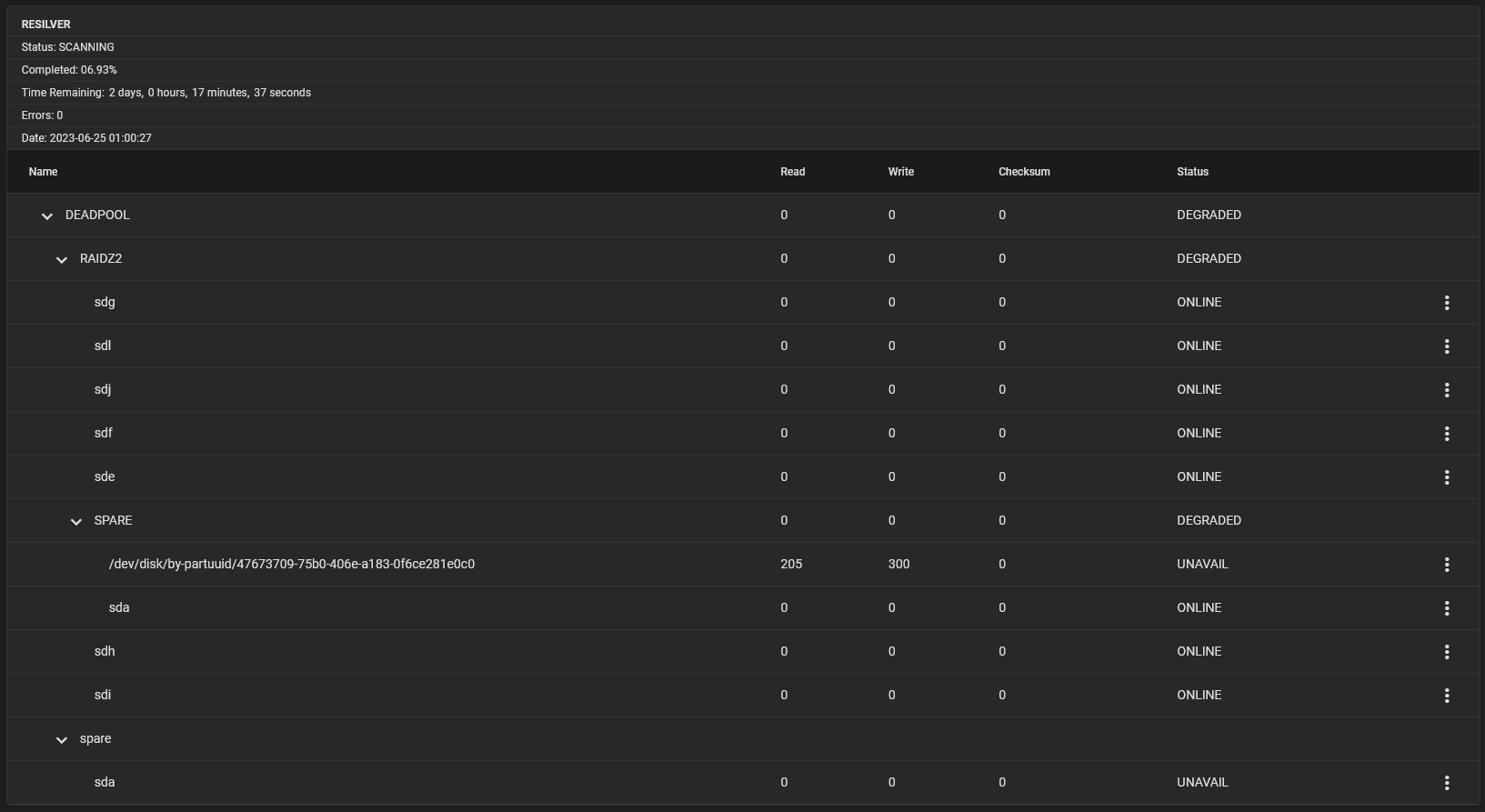

When I click on the pool status icon there, I am presented with a screen that says two things are unavail. So did one drive fail or two? Are there 8 drives in total or 9? The screen above says total disks: 8 (data), spares: 1. So is the total actually 9?

From this screen I still can't figure out what is actually wrong. There are two spare branches, although I'm pretty sure I only had one spare disk in that pool when I set it up. The SPARE branch also says sda is online, while the second spare branch says sda is unavail. What do I make of this? And finally, TrueNAS is running "resilvering." Why?

I guess what I expected is a pretty straightforward instruction that tells me the serial number (SN) of the failing disk, so I can just replace it. Instead I am presented with all of the above and I can't figure anything out. What am I missing here?
