Hello,

I recently inherited a large FreeNAS build. Being no expert, I have read the manual and poked around this forum and Google for a few days now.

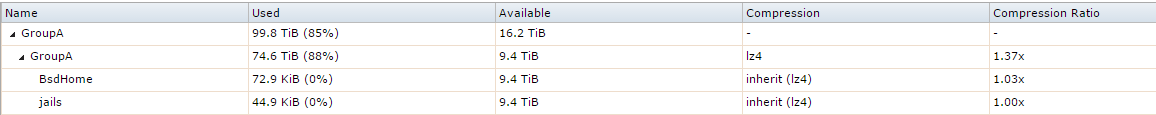

When I inherited the pool, I noticed that it was above 90% utilization, so I deleted several million files to free up about 4TB of space. This is the current status:

This pool is 4 vdevs of 8 4TB drives each in RaidZ2.
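
As a rough sanity check on that layout, the expected capacity can be estimated with simple arithmetic. This is only a sketch: it assumes each drive is exactly 4 * 10^12 bytes and that RAIDZ2 costs exactly two drives of parity per vdev, and it ignores padding, metadata, and reserved space, so the real figures will differ somewhat.

```python
# Rough capacity estimate for 4 RAIDZ2 vdevs of 8 x 4TB drives.
# Assumptions (not taken from any zpool output): a "4TB" drive is exactly
# 4 * 10**12 bytes, and parity costs exactly 2 drives per vdev.
VDEVS = 4
DRIVES_PER_VDEV = 8
PARITY_DRIVES = 2
DRIVE_BYTES = 4 * 10**12
TIB = 2**40


def usable_bytes(vdevs: int, drives: int, parity: int, drive_bytes: int) -> int:
    """Data capacity after subtracting parity drives from every vdev."""
    return vdevs * (drives - parity) * drive_bytes


raw = VDEVS * DRIVES_PER_VDEV * DRIVE_BYTES
usable = usable_bytes(VDEVS, DRIVES_PER_VDEV, PARITY_DRIVES, DRIVE_BYTES)

print(f"raw:    {raw / TIB:.1f} TiB")
print(f"usable: {usable / TIB:.1f} TiB")
```

Whether a reported usage figure should be compared against the raw or the usable number depends on which tool produced it, so both are printed.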

My problem is that I don't see how it's possible that I'm using 74 TiB of disk space. The largest directory is 18.6TB and when I add all the other directory sizes together they sum to less than 30TB.

My method of calculation is to check each directory in Windows Explorer, one at a time, and take the larger of "Size" and "Size on disk". While doing this I noticed that "Size on disk" was sometimes far larger than "Size". For example, one folder had a size of 42 GB but a size on disk of 1.13 TB. The pattern I found is that "Size on disk" appears to use a minimum of 1MB per file (not 1KB, 1MB), so folders with many small files show a huge "Size on disk". The 1.13 TB figure was for a folder of about 1M files.
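
The 1MB-per-file pattern can be checked against the example folder with a quick calculation. This sketch assumes "1M files" means exactly 1,000,000 files and that Windows Explorer's GB and TB are binary units (2^30 and 2^40 bytes); both are approximations.

```python
# Check the "about 1MB per file" pattern against the example folder.
# Assumptions: exactly 1,000,000 files, and Explorer's GB/TB are binary units.
KIB = 2**10
MIB = 2**20
GIB = 2**30
TIB = 2**40

file_count = 1_000_000
logical_bytes = 42 * GIB      # Explorer "Size"
on_disk_bytes = 1.13 * TIB    # Explorer "Size on disk"

avg_logical_kib = logical_bytes / file_count / KIB
avg_on_disk_mib = on_disk_bytes / file_count / MIB
inflation = on_disk_bytes / logical_bytes

print(f"average logical size per file: {avg_logical_kib:.1f} KiB")
print(f"average on-disk size per file: {avg_on_disk_mib:.2f} MiB")
print(f"on-disk / logical ratio:       {inflation:.1f}x")
```

An average on-disk size a little above 1 MiB for files that average tens of KiB is what a 1MB minimum per file would produce.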

Again, even with these inflated numbers, the sum of the directories is less than 30TB.

I'm not super familiar with FreeBSD / Unix, but I tried several variants of the "du" and "df" commands. However, these commands fail utterly when used on large directories. My latest "du" has been running for over 48 hours.

I'm wondering if there is some issue with how the disks were set up that is causing these very high estimates.

Using:

zdb -U /data/zfs/zpool.cache

I see ashift was set to 9. Maybe not great.
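
For context, ashift is the base-2 logarithm of the sector size ZFS assumes for a vdev, so the value can be decoded directly. A minimal sketch follows (the function name is only illustrative). If the 4TB drives use 4096-byte physical sectors, which is an assumption since the drive model is not given here, ashift=12 would be the matching value.

```python
def sector_bytes(ashift: int) -> int:
    """Sector size in bytes implied by a ZFS ashift value (2 ** ashift)."""
    return 1 << ashift


for ashift in (9, 12):
    print(f"ashift={ashift}: {sector_bytes(ashift)} bytes per sector")
```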

Using:

zfs get all GroupA

I see recordsize is 128K. Also lz4 is on. Dedup is off.

I can't remember which command I ran, but it reported that (free space?) disk fragmentation was 42%.

I was running snapshots but deleted all of them after I deleted the 4TB of files. The amount of space released corresponded to what Windows Explorer had reported as the "Size" of the folder.

FreeNAS-9.3-STABLE-201506162331

262084MB memory

using NFS and CIFS
