Mastakilla

Hi everyone,

A couple of days ago, one of my HDDs caused trouble during a scrub of my main pool. The pool status window showed multiple read and write errors for it, and the pool became degraded. The HDD also seemed to become unreachable, as I could no longer run a SMART test on it (the HDD was not found, or something like that).

After power-cycling my server, the HDD became available again and TrueNAS automatically started resilvering my pool. The pool briefly went back to Online (Healthy), but after a while it became Online (Unhealthy) (I don't remember exactly when it became unhealthy or what triggered it).

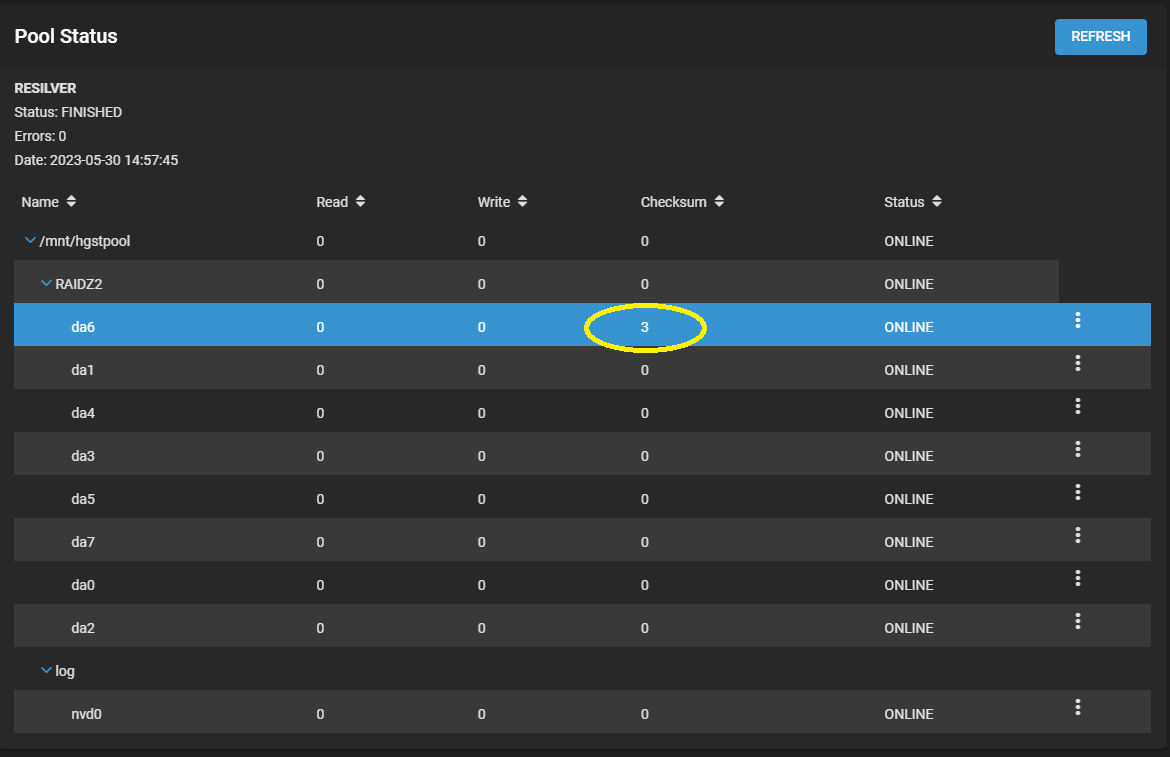

I ran a long SMART test on it, which completed this morning. The pool status window in TrueNAS also seems to have reset (it no longer shows the read and write errors, but it now shows 3 CKSUM errors).

I'm now wondering whether I should replace the HDD or give it another chance. It is RAIDZ2, so I should be fine if it dies on me again. But I also have a spare HDD ready, in case it is better to replace it...

Below you can find some output of my research into this...

Pool status:

SMART output after completing a long SMART test:

data# smartctl -a /dev/da6

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p7 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: HGST Ultrastar He10

Device Model: HGST HUH721010ALN600

Serial Number: 2YHUHLHD

LU WWN Device Id: 5 000cca 273d9af54

Firmware Version: LHGAT38Q

User Capacity: 10,000,831,348,736 bytes [10.0 TB]

Sector Size: 4096 bytes logical/physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ACS-2, ATA8-ACS T13/1699-D revision 4

SATA Version is: SATA 3.2, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Wed May 31 12:05:50 2023 CEST

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x80) Offline data collection activity was never started. Auto Offline Data Collection: Enabled.

Self-test execution status: (0) The previous self-test routine completed without error or no self-test has ever been run.

Total time to complete Offline data collection: (93) seconds.

Offline data collection capabilities: (0x5b) SMART execute Offline immediate. Auto Offline data collection on/off support. Suspend Offline collection upon new command. Offline surface scan supported. Self-test supported. No Conveyance Self-test supported. Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering power-saving mode. Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported. General Purpose Logging supported.

Short self-test routine recommended polling time: (2) minutes.

Extended self-test routine recommended polling time: (1086) minutes.

SCT capabilities: (0x003d) SCT Status supported. SCT Error Recovery Control supported. SCT Feature Control supported. SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

| ID# | ATTRIBUTE_NAME | FLAG | VALUE | WORST | THRESH | TYPE | UPDATED | WHEN_FAILED | RAW_VALUE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Raw_Read_Error_Rate | 0x000b | 100 | 100 | 016 | Pre-fail | Always | - | 0 |
| 2 | Throughput_Performance | 0x0005 | 134 | 134 | 054 | Pre-fail | Offline | - | 96 |
| 3 | Spin_Up_Time | 0x0007 | 146 | 146 | 024 | Pre-fail | Always | - | 451 (Average 447) |
| 4 | Start_Stop_Count | 0x0012 | 100 | 100 | 000 | Old_age | Always | - | 248 |
| 5 | Reallocated_Sector_Ct | 0x0033 | 100 | 100 | 005 | Pre-fail | Always | - | 0 |
| 7 | Seek_Error_Rate | 0x000b | 100 | 100 | 067 | Pre-fail | Always | - | 0 |
| 8 | Seek_Time_Performance | 0x0005 | 128 | 128 | 020 | Pre-fail | Offline | - | 18 |
| 9 | Power_On_Hours | 0x0012 | 096 | 096 | 000 | Old_age | Always | - | 28856 |
| 10 | Spin_Retry_Count | 0x0013 | 100 | 100 | 060 | Pre-fail | Always | - | 0 |
| 12 | Power_Cycle_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 248 |
| 22 | Helium_Level | 0x0023 | 100 | 100 | 025 | Pre-fail | Always | - | 100 |
| 192 | Power-Off_Retract_Count | 0x0032 | 099 | 099 | 000 | Old_age | Always | - | 1802 |
| 193 | Load_Cycle_Count | 0x0012 | 099 | 099 | 000 | Old_age | Always | - | 1802 |
| 194 | Temperature_Celsius | 0x0002 | 157 | 157 | 000 | Old_age | Always | - | 38 (Min/Max 18/65) |
| 196 | Reallocated_Event_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 197 | Current_Pending_Sector | 0x0022 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 198 | Offline_Uncorrectable | 0x0008 | 100 | 100 | 000 | Old_age | Offline | - | 0 |
| 199 | UDMA_CRC_Error_Count | 0x000a | 200 | 200 | 000 | Old_age | Always | - | 0 |

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Completed without error 00% 28856 -

# 2 Short offline Completed without error 00% 28835 -

# 3 Short offline Completed without error 00% 28726 -

# 4 Short offline Completed without error 00% 28702 -

# 5 Short offline Completed without error 00% 28678 -

# 6 Short offline Completed without error 00% 28654 -

# 7 Short offline Completed without error 00% 28630 -

# 8 Short offline Completed without error 00% 28606 -

# 9 Short offline Completed without error 00% 28582 -

#10 Short offline Completed without error 00% 28558 -

#11 Short offline Completed without error 00% 28534 -

#12 Short offline Completed without error 00% 28510 -

#13 Short offline Completed without error 00% 28486 -

#14 Short offline Completed without error 00% 28462 -

#15 Short offline Completed without error 00% 28438 -

#16 Short offline Completed without error 00% 28414 -

#17 Short offline Completed without error 00% 28390 -

#18 Short offline Completed without error 00% 28366 -

#19 Short offline Completed without error 00% 28342 -

#20 Short offline Completed without error 00% 28318 -

#21 Short offline Completed without error 00% 28294 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.
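To make the attribute table above easier to scan, here is a rough Python sketch that parses `smartctl -a` attribute rows and lists any that look suspicious. The watch-list of IDs (5, 196, 197, 198, 199) and the function names `parse_attributes` and `suspicious` are my own choices for illustration, not anything smartctl or TrueNAS defines, and the sample rows are just a few lines copied from the output above.

```python
import re

# Attribute IDs picked for this sketch (an assumption, not an official list):
# reallocation, pending/uncorrectable sector and interface CRC counters.
WATCHED_IDS = {5, 196, 197, 198, 199}

# A few rows copied from the smartctl output in this post.
SAMPLE = """\
ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
5 Reallocated_Sector_Ct 0x0033 100 100 005 Pre-fail Always - 0
194 Temperature_Celsius 0x0002 157 157 000 Old_age Always - 38 (Min/Max 18/65)
196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0
197 Current_Pending_Sector 0x0022 100 100 000 Old_age Always - 0
198 Offline_Uncorrectable 0x0008 100 100 000 Old_age Offline - 0
199 UDMA_CRC_Error_Count 0x000a 200 200 000 Old_age Always - 0
"""


def parse_attributes(text):
    """Turn smartctl attribute rows into dicts; non-attribute lines are skipped."""
    rows = []
    for line in text.splitlines():
        parts = line.split(None, 9)  # the 10th field keeps e.g. "38 (Min/Max 18/65)"
        if len(parts) < 10 or not parts[0].isdigit():
            continue
        raw = re.match(r"\d+", parts[9])  # leading integer of RAW_VALUE
        rows.append({
            "id": int(parts[0]),
            "name": parts[1],
            "value": int(parts[3]),
            "worst": int(parts[4]),
            "thresh": int(parts[5]),
            "when_failed": parts[8],
            "raw": int(raw.group()) if raw else None,
        })
    return rows


def suspicious(rows):
    """Names of rows with a non-zero watched counter, a tripped threshold,
    or a WHEN_FAILED entry other than '-'."""
    hits = []
    for row in rows:
        watched_nonzero = row["id"] in WATCHED_IDS and row["raw"] not in (0, None)
        at_or_below_thresh = row["thresh"] > 0 and row["value"] <= row["thresh"]
        if watched_nonzero or at_or_below_thresh or row["when_failed"] != "-":
            hits.append(row["name"])
    return hits


if __name__ == "__main__":
    print(suspicious(parse_attributes(SAMPLE)))
```

For the rows pasted here this prints an empty list, which fits the "No Errors Logged" line. It only reflects what the drive itself recorded, so it says nothing either way about the NOT READY errors in dmesg.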

Dmesg output from before power-cycling the server. Below I've copied the parts that I think are relevant (please let me know if you need the full output):

...

mps0: Controller reported scsi ioc terminated tgt 14 SMID 1394 loginfo 31110d00

mps0: Controller reported scsi ioc terminated tgt 14 SMID 526 loginfo 31110d00

(da6:mps0:0:14:0): WRITE(10). CDB: 2a 00 09 89 1d 27 00 00 02 00

mps0: Controller reported scsi ioc terminated tgt 14 SMID 1048 loginfo 31110d00

mps0: Controller reported scsi ioc terminated tgt 14 SMID 2102 loginfo 31110d00

mps0: Controller reported scsi ioc terminated tgt 14 SMID 1554 loginfo 31110d00

mps0: Controller reported scsi ioc terminated tgt 14 SMID 513 loginfo 31110d00

mps0: Controller reported scsi ioc terminated tgt 14 SMID 568 loginfo 31110d00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 f7 00 00 05 00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 f1 00 00 11 00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f8 02 00 00 16 00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 76 8f c0 74 00 00 05 00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 76 8f c0 6e 00 00 06 00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 e0 00 00 06 00

(da6:mps0:0:14:0): CAM status: CCB request completed with an error

(da6:mps0:0:14:0): Retrying command, 3 more tries remain

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 e0 00 00 06 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 f7 00 00 05 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 f1 00 00 11 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f8 02 00 00 16 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

...

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 e0 00 00 06 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=5629082468352, length=24576)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 f7 00 00 05 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=5629082562560, length=20480)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f7 f1 00 00 11 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=5629082537984, length=69632)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 52 29 f8 02 00 00 16 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=5629082607616, length=90112)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 76 8f c0 6e 00 00 06 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=8130305908736, length=24576)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 76 8f c0 74 00 00 05 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=8130305933312, length=20480)]

(da6:mps0:0:14:0): WRITE(10). CDB: 2a 00 09 89 1d 27 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_write_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[WRITE(offset=638101123072, length=8192)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

...

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=270336, length=8192)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=9983650177024, length=8192)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=9983650439168, length=8192)]

(da6:mps0:0:14:0): WRITE(10). CDB: 2a 00 07 6d 9e ec 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): WRITE(10). CDB: 2a 00 07 6d 9e ec 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): WRITE(10). CDB: 2a 00 07 6d 9e ec 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): WRITE(10). CDB: 2a 00 07 6d 9e ec 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_write_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[WRITE(offset=493282050048, length=4096)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 c2 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=270336, length=8192)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 42 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=9983650177024, length=8192)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff 82 00 00 02 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

...

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=32768, length=4096)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff f9 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff f9 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff f9 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff f9 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 91 87 ff f9 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=9983650926592, length=4096)]

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 80 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 80 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 80 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 80 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Retrying command (per sense data)

(da6:mps0:0:14:0): READ(10). CDB: 28 00 00 40 00 80 00 00 01 00

(da6:mps0:0:14:0): CAM status: SCSI Status Error

(da6:mps0:0:14:0): SCSI status: Check Condition

(da6:mps0:0:14:0): SCSI sense: NOT READY asc:4,0 (Logical unit not ready, cause not reportable)

(da6:mps0:0:14:0): Error 5, Retries exhausted

GEOM_ELI: g_eli_read_done() failed (error=5) gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli[READ(offset=0, length=4096)]

mps0: Controller reported scsi ioc terminated tgt 14 SMID 563 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 950 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 878 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 2084 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 207 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 176 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 1810 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 1205 loginfo 31111000

mps0: Controller reported scsi ioc terminated tgt 14 SMID 1503 loginfo 31111000

...
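As a sanity check on the log above, here is a small Python sketch that decodes the READ(10) / WRITE(10) CDB bytes into an LBA and block count, then converts them to the byte offset and length that GEOM_ELI reports. It relies on two assumptions: the 4096-byte sector size comes from the smartctl output, and the partition start LBA (`0x400080`) is only inferred from the log, where the CDB with LBA `00 40 00 80` pairs with `offset=0`. The names `decode_cdb10` and `to_partition_extent` are made up for this example.

```python
SECTOR_SIZE = 4096  # "Sector Size: 4096 bytes logical/physical" in smartctl

# Inferred from the log, not a documented value: the READ with CDB LBA
# 00 40 00 80 is reported by GEOM_ELI as offset=0 on the .eli provider.
PARTITION_START_LBA = 0x400080

OPCODES = {0x28: "READ(10)", 0x2A: "WRITE(10)"}


def decode_cdb10(cdb_hex):
    """Decode a 10-byte READ(10)/WRITE(10) CDB given as space-separated hex."""
    data = bytes.fromhex(cdb_hex)
    if len(data) != 10:
        raise ValueError("expected a 10-byte CDB")
    if data[0] not in OPCODES:
        raise ValueError("not a READ(10) or WRITE(10) opcode")
    lba = int.from_bytes(data[2:6], "big")     # bytes 2-5: logical block address
    blocks = int.from_bytes(data[7:9], "big")  # bytes 7-8: transfer length in blocks
    return OPCODES[data[0]], lba, blocks


def to_partition_extent(lba, blocks):
    """Byte offset and length relative to the (inferred) partition start."""
    offset = (lba - PARTITION_START_LBA) * SECTOR_SIZE
    return offset, blocks * SECTOR_SIZE


if __name__ == "__main__":
    for cdb in ("28 00 52 29 f7 e0 00 00 06 00",
                "2a 00 09 89 1d 27 00 00 02 00",
                "28 00 00 40 00 80 00 00 01 00"):
        name, lba, blocks = decode_cdb10(cdb)
        offset, length = to_partition_extent(lba, blocks)
        print(f"{name} lba={lba} blocks={blocks} -> offset={offset}, length={length}")
```

With these assumptions, the first CDB maps to offset=5629082468352, length=24576 and the second to offset=638101123072, length=8192, the same values as in the GEOM_ELI lines. So the failing requests are spread across the disk rather than clustered in one region.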