NickF

Guru

- Joined: Jun 12, 2014
- Messages: 763

So my wife and I were on the couch, about to start a movie at around 8:00. The movie is stored on my TrueNAS box, and we were watching it through Plex, which is also hosted on that box. Everything seemed normal, but the movie wouldn't play. I had been home all day and hadn't noticed any problems or received any alerts from my NAS.

I came down to my office to take a look and I was getting weird I/O errors when I tried to access files on my SMB share. I signed into TrueNAS and it said my pool was degraded and I had 40,000 some odd errors on one of the drives in one of my mirrors. Okay, no problem, I will eject the bad drive from the pool in the GUI and then go physically replace it with a cold spare. The system froze for several minutes and I couldn't do anything. I could still navigate the SMB share, but the TrueNAS UI was unresponsive. I tried to SSHinto the box, and the CLI was not responding. Then I got an email:

Then immediately after I received this email:

After a few about 7 or 8 minutes of nothing, I told the server to reset via the IPMI.

When the server came back up, the pool was in an errored state. I thought to export the pool and re-import the pool, as I've had some success with that in the past. But when I exported it, the option to import the pool wasn't present. I SSH'd back into the server and tried to manually import the pool:

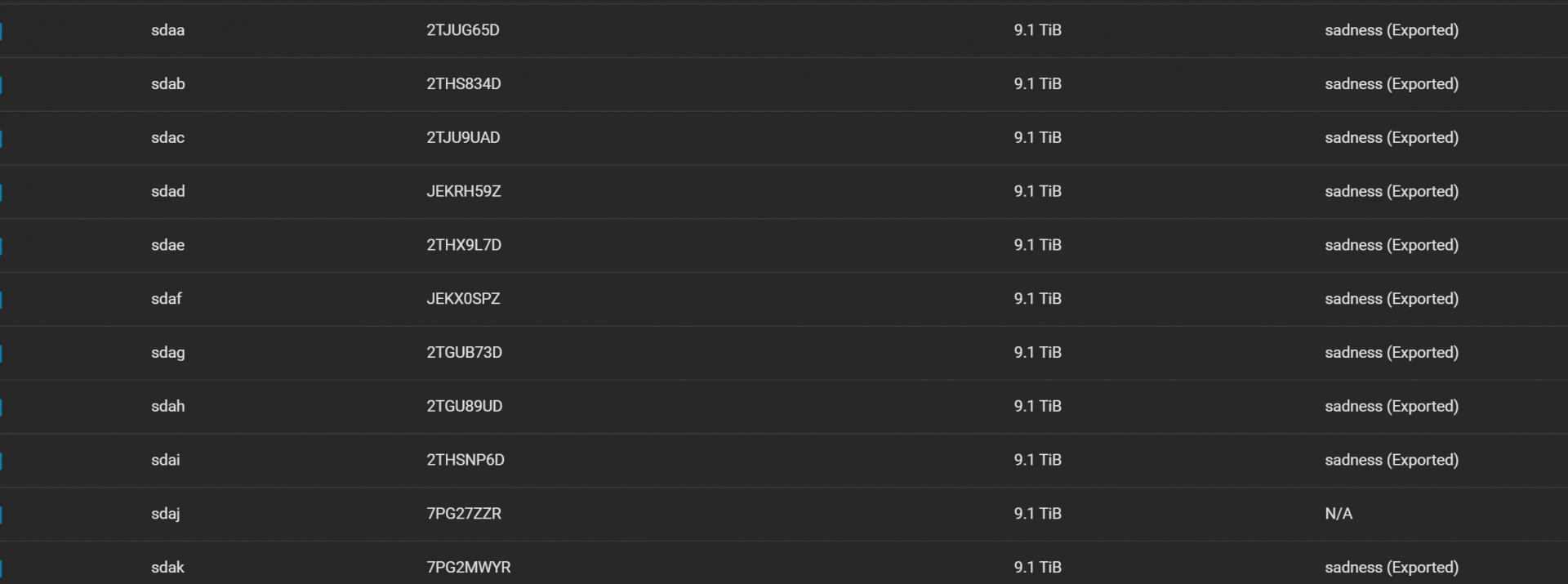

All of the disks are, at this point, showing up in the UI as in the pool called sadness, but exported. That is, with the exception of one, the one I removed earlier:

I tried to remove one of the SAS cables from each of the shelves, so that I had 1 SAS cable going from a single HBA to each of the two shelves. That didn't help matters any.

Digging in the logs, I'm not seeing much of anything useful in /var/log/messages before the system restarted at 8:30ish. At 2 AM today, I see some weird events happening:

Then I tried to restart a VM that was hosted on this pool that I hadn't noticed had crashed at around 8:17:

Any help is appreciated :)

Specs of my server are:

NewProd Server | SCALE 22.12 RC1

| Supermicro H12SSL-I | EPYC 7282 | 256GB DDR4-3200 | 2X LSI 9500-8e to 2X EMC 15-Bay Shelf | Intel X710-DA2 | 28x10TB Drives in 2 Way mirrors | 4x Samsung PM9A1 512GB 2-Way Mirrored SPECIAL | 2x Optane 905p 960GB Mirrored

The shelves are connected to the two HBAs in an "X" configuration, if that's helpful.

I came down to my office to take a look, and I was getting weird I/O errors when I tried to access files on my SMB share. I signed into TrueNAS, and it said my pool was degraded, with 40,000-some-odd errors on one of the drives in one of my mirrors. Okay, no problem: I'd eject the bad drive from the pool in the GUI and then physically replace it with a cold spare. When I went to eject it, the system froze for several minutes and I couldn't do anything. I could still navigate the SMB share, but the TrueNAS UI was unresponsive. I tried to SSH into the box, and the CLI wasn't responding either. Then I got an email:

TrueNAS @ prod

New alert:

The following alert has been cleared:

- Pool sadness state is DEGRADED: One or more devices has experienced an error resulting in data corruption. Applications may be affected.

The following devices are not healthy:

- Disk HUH721010AL4200 7PG33KKR is UNAVAIL

- Disk HUH721010AL4200 7PG3RYSR is DEGRADED

Current alerts:

- Pool sadness state is DEGRADED: One or more devices has experienced an error resulting in data corruption. Applications may be affected.

The following devices are not healthy:

- Disk HUH721010AL4200 7PG33KKR is UNAVAIL

- Disk HUH721010AL4200 7PG27ZZR is DEGRADED

- Disk HUH721010AL4200 7PG3RYSR is DEGRADED

- Failed to check for alert ActiveDirectoryDomainHealth:

  ```
  Traceback (most recent call last):
    File "/usr/lib/python3/dist-packages/middlewared/plugins/alert.py", line 776, in __run_source
      alerts = (await alert_source.check()) or []
    File "/usr/lib/python3/dist-packages/middlewared/alert/source/active_directory.py", line 46, in check
      await self.middleware.call("activedirectory.check_nameservers", conf["domainname"], conf["site"])
    File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1386, in call
      return await self._call(
    File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1335, in _call
      return await methodobj(*prepared_call.args)
    File "/usr/lib/python3/dist-packages/middlewared/plugins/activedirectory_/dns.py", line 210, in check_nameservers
      resp = await self.middleware.call('dnsclient.forward_lookup', {
    File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1386, in call
      return await self._call(
    File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1335, in _call
      return await methodobj(*prepared_call.args)
    File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1318, in nf
      return await func(*args, **kwargs)
    File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1186, in nf
      res = await f(*args, **kwargs)
    File "/usr/lib/python3/dist-packages/middlewared/plugins/dns_client.py", line 108, in forward_lookup
      results = await asyncio.gather(*[
    File "/usr/lib/python3/dist-packages/middlewared/plugins/dns_client.py", line 40, in resolve_name
      ans = await r.resolve(
    File "/usr/lib/python3/dist-packages/dns/asyncresolver.py", line 114, in resolve
      timeout = self._compute_timeout(start, lifetime)
    File "/usr/lib/python3/dist-packages/dns/resolver.py", line 950, in _compute_timeout
      raise Timeout(timeout=duration)
  dns.exception.Timeout: The DNS operation timed out after 12.403959512710571 seconds
  ```

- Pool sadness state is DEGRADED: One or more devices has experienced an error resulting in data corruption. Applications may be affected.

The following devices are not healthy:

- Disk HUH721010AL4200 7PG33KKR is UNAVAIL

- Disk HUH721010AL4200 7PG3RYSR is DEGRADED
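The alerts above report each unhealthy disk as `Disk <model> <serial> is <STATE>`, and across the messages three serials appear: 7PG33KKR (UNAVAIL), plus 7PG27ZZR and 7PG3RYSR (both DEGRADED). If you're collecting several of these emails, a minimal Python sketch like this can reduce them to one serial-to-state summary. It assumes the line format shown above; `unhealthy_disks` and `DISK_LINE` are hypothetical names, not part of TrueNAS.

```python
import re

# Matches alert lines such as "- Disk HUH721010AL4200 7PG33KKR is UNAVAIL".
# The "<model> <serial> is <STATE>" layout is assumed from the emails above.
DISK_LINE = re.compile(r"^-\s*Disk\s+(\S+)\s+(\S+)\s+is\s+([A-Z]+)\s*$")


def unhealthy_disks(alert_text: str) -> dict[str, str]:
    """Return {serial: state} for every 'Disk ... is STATE' line in an alert email.

    Later lines overwrite earlier ones, so the result holds the last
    state reported for each serial number.
    """
    states: dict[str, str] = {}
    for line in alert_text.splitlines():
        match = DISK_LINE.match(line.strip())
        if match:
            _model, serial, state = match.groups()
            states[serial] = state
    return states


if __name__ == "__main__":
    sample = """
- Disk HUH721010AL4200 7PG33KKR is UNAVAIL
- Disk HUH721010AL4200 7PG27ZZR is DEGRADED
- Disk HUH721010AL4200 7PG3RYSR is DEGRADED
"""
    for serial, state in sorted(unhealthy_disks(sample).items()):
        print(serial, state)
```

Lines that don't match the pattern (headers, the traceback, "Current alerts:") are simply skipped.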

Then, immediately afterward, I received this email:

ZFS has detected that a device was removed.

impact: Fault tolerance of the pool may be compromised.

eid: 30800

class: statechange

state: UNAVAIL

host: prod

time: 2023-04-17 20:22:34-0400

vpath: /dev/disk/by-partuuid/e2f3d3c3-0033-4300-8c38-a7a56513f145

vguid: 0xF52756F46C368319

pool: sadness (0x8A76BCC157F6D093)
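The ZFS event email prints the pool GUID in hex (`0x8A76BCC157F6D093`), while `zpool import` run with no arguments lists each importable pool with a decimal `id:`. Assuming both refer to the same 64-bit pool GUID (my understanding of how ZED and `zpool import` report it, not something verified on this box), converting one to the other makes it easy to check whether the pool is visible at all. `guid_to_decimal` is a hypothetical helper:

```python
def guid_to_decimal(hex_guid: str) -> int:
    """Convert a hex GUID string such as '0x8A76BCC157F6D093' to an integer.

    int(..., 16) accepts an optional '0x' prefix. ZFS GUIDs are 64-bit,
    so anything outside that range is rejected.
    """
    value = int(hex_guid, 16)
    if not 0 <= value < 2**64:
        raise ValueError(f"not a 64-bit GUID: {hex_guid!r}")
    return value


if __name__ == "__main__":
    # GUIDs copied from the ZFS event email above.
    for label, guid in (("pool sadness", "0x8A76BCC157F6D093"),
                        ("vdev", "0xF52756F46C368319")):
        print(f"{label}: {guid} = {guid_to_decimal(guid)}")
```

`zpool import` also accepts a numeric id in place of the pool name, so the decimal form can be matched directly against its listing.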

After about 7 or 8 minutes of nothing, I reset the server via IPMI.

When the server came back up, the pool was in an error state. I decided to export and re-import the pool, since that has worked for me in the past. But after I exported it, the option to import the pool wasn't there. I SSH'd back into the server and tried to import the pool manually:

```
root@prod[/var/log]# zpool import -a
cannot import 'sadness': no such pool or dataset
Destroy and re-create the pool from
```

At this point, all of the disks show up in the UI as belonging to the exported pool sadness, with the exception of one: the one I removed earlier.

I also tried removing one of the SAS cables from each shelf, so that a single HBA had one SAS cable to each of the two shelves. That didn't help matters any.

Digging through the logs, I'm not seeing much of anything useful in /var/log/messages before the system restarted at around 8:30. At 2 AM today, though, I do see some weird events:

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: ses 13:0:9:0: Power-on or device reset occurred

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: ses 3:0:14:0: Power-on or device reset occurred

Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:14 prod.fusco.me kernel: ses 3:0:28:0: Power-on or device reset occurred

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:16 prod.fusco.me kernel: ses 3:0:28:0: Power-on or device reset occurred

Apr 17 02:07:17 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:17 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:17 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:17 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:17 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:18 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)

Apr 17 02:07:20 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)
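Every `log_info` line above carries the same value, 0x3112011a, from both controllers (mpt3sas_cm0 and mpt3sas_cm1). The driver already prints the decoded fields; the sketch below only shows how they fall out of the 32-bit word and tallies them per controller, which helps when comparing a long log. The bit layout is my reading of the Linux mpt3sas driver's log_info handling and should be treated as an assumption; it does not tell you what code 0x12 / sub_code 0x011a actually mean. `decode_log_info`, `format_like_driver` and `tally` are hypothetical helpers.

```python
import re
from collections import Counter

# Assumed layout of the 32-bit log_info word (from the Linux mpt3sas driver):
#   bits 28-31 bus_type, bits 24-27 originator, bits 16-23 code, bits 0-15 sub_code
ORIGINATORS = {0: "IOP", 1: "PL", 2: "IR"}

# Captures the controller name and the hex log_info value from a kernel line.
LOG_INFO_RE = re.compile(r"(mpt3sas_cm\d+): log_info\((0x[0-9a-fA-F]{8})\)")


def decode_log_info(log_info: int) -> dict:
    """Split a log_info word into its fields."""
    return {
        "bus_type": (log_info >> 28) & 0xF,
        "originator": ORIGINATORS.get((log_info >> 24) & 0xF, "unknown"),
        "code": (log_info >> 16) & 0xFF,
        "sub_code": log_info & 0xFFFF,
    }


def format_like_driver(log_info: int) -> str:
    """Render the fields the way the kernel messages above print them."""
    f = decode_log_info(log_info)
    return (f"log_info(0x{log_info:08x}): originator({f['originator']}), "
            f"code(0x{f['code']:02x}), sub_code(0x{f['sub_code']:04x})")


def tally(lines) -> Counter:
    """Count (controller, log_info) pairs across kernel log lines."""
    counts = Counter()
    for line in lines:
        m = LOG_INFO_RE.search(line)
        if m:
            counts[(m.group(1), int(m.group(2), 16))] += 1
    return counts


if __name__ == "__main__":
    sample = [
        "Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm1: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)",
        "Apr 17 02:07:14 prod.fusco.me kernel: mpt3sas_cm0: log_info(0x3112011a): originator(PL), code(0x12), sub_code(0x011a)",
        "Apr 17 02:07:14 prod.fusco.me kernel: ses 3:0:14:0: Power-on or device reset occurred",
    ]
    for (ctrl, value), n in sorted(tally(sample).items()):
        print(ctrl, format_like_driver(value), "x", n)
```

Feeding the whole of /var/log/messages to `tally` would show at a glance whether any other log_info values appear around the failure.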

Then I tried to restart a VM hosted on this pool, which I hadn't noticed had crashed at around 8:17:

```
Apr 17 20:17:36 prod.fusco.me kernel: br0: port 2(vnet1) entered disabled state
Apr 17 20:17:37 prod.fusco.me kernel: device vnet1 left promiscuous mode
Apr 17 20:17:37 prod.fusco.me kernel: br0: port 2(vnet1) entered disabled state
Apr 17 20:17:37 prod.fusco.me kernel: kauditd_printk_skb: 7 callbacks suppressed
Apr 17 20:17:37 prod.fusco.me kernel: audit: type=1400 audit(1681777057.381:67): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvir>
Apr 17 20:17:38 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:17:39 prod.fusco.me kernel: audit: type=1400 audit(1681777059.949:68): apparmor="STATUS" operation="profile_load" profile="unconfined" name="libvirt->
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.061:69): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.205:70): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.317:71): apparmor="STATUS" operation="profile_replace" info="same as current profile, s>
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.489:72): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:40 prod.fusco.me kernel: br0: port 2(vnet2) entered blocking state
Apr 17 20:17:40 prod.fusco.me kernel: br0: port 2(vnet2) entered disabled state
Apr 17 20:17:40 prod.fusco.me kernel: device vnet2 entered promiscuous mode
Apr 17 20:17:40 prod.fusco.me kernel: br0: port 2(vnet2) entered blocking state
Apr 17 20:17:40 prod.fusco.me kernel: br0: port 2(vnet2) entered listening state
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.729:73): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.809:74): apparmor="DENIED" operation="capable" profile="libvirtd" pid=16394 comm="rpc-w>
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.813:75): apparmor="DENIED" operation="capable" profile="libvirtd" pid=16394 comm="rpc-w>
Apr 17 20:17:40 prod.fusco.me kernel: audit: type=1400 audit(1681777060.881:76): apparmor="DENIED" operation="capable" profile="libvirtd" pid=16394 comm="rpc-w>
Apr 17 20:17:42 prod.fusco.me kernel: br0: port 2(vnet2) entered disabled state
Apr 17 20:17:42 prod.fusco.me kernel: device vnet2 left promiscuous mode
Apr 17 20:17:42 prod.fusco.me kernel: br0: port 2(vnet2) entered disabled state
Apr 17 20:17:43 prod.fusco.me kernel: audit: type=1400 audit(1681777063.029:77): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvir>
Apr 17 20:17:51 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:17:51 prod.fusco.me kernel: audit: type=1400 audit(1681777071.782:78): apparmor="STATUS" operation="profile_load" profile="unconfined" name="libvirt->
Apr 17 20:17:51 prod.fusco.me kernel: audit: type=1400 audit(1681777071.898:79): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:52 prod.fusco.me kernel: audit: type=1400 audit(1681777072.010:80): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:52 prod.fusco.me kernel: audit: type=1400 audit(1681777072.126:81): apparmor="STATUS" operation="profile_replace" info="same as current profile, s>
Apr 17 20:17:52 prod.fusco.me kernel: audit: type=1400 audit(1681777072.294:82): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:52 prod.fusco.me kernel: br0: port 2(vnet3) entered blocking state
Apr 17 20:17:52 prod.fusco.me kernel: br0: port 2(vnet3) entered disabled state
Apr 17 20:17:52 prod.fusco.me kernel: device vnet3 entered promiscuous mode
Apr 17 20:17:52 prod.fusco.me kernel: br0: port 2(vnet3) entered blocking state
Apr 17 20:17:52 prod.fusco.me kernel: br0: port 2(vnet3) entered listening state
Apr 17 20:17:52 prod.fusco.me kernel: audit: type=1400 audit(1681777072.558:83): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:17:52 prod.fusco.me kernel: audit: type=1400 audit(1681777072.890:84): apparmor="DENIED" operation="capable" profile="libvirtd" pid=16394 comm="rpc-w>
Apr 17 20:18:07 prod.fusco.me kernel: br0: port 2(vnet3) entered learning state
Apr 17 20:18:22 prod.fusco.me kernel: br0: port 2(vnet3) entered forwarding state
Apr 17 20:18:22 prod.fusco.me kernel: br0: topology change detected, propagating
Apr 17 20:18:23 prod.fusco.me kernel: br0: port 2(vnet3) entered disabled state
Apr 17 20:18:23 prod.fusco.me kernel: device vnet3 left promiscuous mode
Apr 17 20:18:23 prod.fusco.me kernel: br0: port 2(vnet3) entered disabled state
Apr 17 20:18:24 prod.fusco.me kernel: audit: type=1400 audit(1681777104.122:85): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvir>
Apr 17 20:18:24 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:18:25 prod.fusco.me kernel: audit: type=1400 audit(1681777105.639:86): apparmor="STATUS" operation="profile_load" profile="unconfined" name="libvirt->
Apr 17 20:18:25 prod.fusco.me kernel: audit: type=1400 audit(1681777105.759:87): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:18:25 prod.fusco.me kernel: audit: type=1400 audit(1681777105.871:88): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:18:26 prod.fusco.me kernel: audit: type=1400 audit(1681777105.991:89): apparmor="STATUS" operation="profile_replace" info="same as current profile, s>
Apr 17 20:18:26 prod.fusco.me kernel: audit: type=1400 audit(1681777106.171:90): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:18:26 prod.fusco.me kernel: br0: port 2(vnet4) entered blocking state
Apr 17 20:18:26 prod.fusco.me kernel: br0: port 2(vnet4) entered disabled state
Apr 17 20:18:26 prod.fusco.me kernel: device vnet4 entered promiscuous mode
Apr 17 20:18:26 prod.fusco.me kernel: br0: port 2(vnet4) entered blocking state
Apr 17 20:18:26 prod.fusco.me kernel: br0: port 2(vnet4) entered listening state
Apr 17 20:18:26 prod.fusco.me kernel: audit: type=1400 audit(1681777106.771:94): apparmor="DENIED" operation="capable" profile="libvirtd" pid=16394 comm="rpc-w>
Apr 17 20:18:41 prod.fusco.me kernel: br0: port 2(vnet4) entered learning state
Apr 17 20:18:43 prod.fusco.me kernel: br0: port 2(vnet4) entered disabled state
Apr 17 20:18:43 prod.fusco.me kernel: device vnet4 left promiscuous mode
Apr 17 20:18:43 prod.fusco.me kernel: br0: port 2(vnet4) entered disabled state
Apr 17 20:18:44 prod.fusco.me kernel: audit: type=1400 audit(1681777124.027:95): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvir>
Apr 17 20:18:45 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:18:46 prod.fusco.me kernel: audit: type=1400 audit(1681777126.615:96): apparmor="STATUS" operation="profile_load" profile="unconfined" name="libvirt->
Apr 17 20:18:46 prod.fusco.me kernel: audit: type=1400 audit(1681777126.739:97): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:18:46 prod.fusco.me kernel: audit: type=1400 audit(1681777126.843:98): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libvi>
Apr 17 20:18:47 prod.fusco.me kernel: audit: type=1400 audit(1681777126.975:99): apparmor="STATUS" operation="profile_replace" info="same as current profile, s>
Apr 17 20:18:47 prod.fusco.me kernel: audit: type=1400 audit(1681777127.143:100): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:18:47 prod.fusco.me kernel: br0: port 2(vnet5) entered blocking state
Apr 17 20:18:47 prod.fusco.me kernel: br0: port 2(vnet5) entered disabled state
Apr 17 20:18:47 prod.fusco.me kernel: device vnet5 entered promiscuous mode
Apr 17 20:18:47 prod.fusco.me kernel: br0: port 2(vnet5) entered blocking state
Apr 17 20:18:47 prod.fusco.me kernel: br0: port 2(vnet5) entered listening state
Apr 17 20:18:47 prod.fusco.me kernel: audit: type=1400 audit(1681777127.387:101): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:19:02 prod.fusco.me kernel: br0: port 2(vnet5) entered learning state
Apr 17 20:19:09 prod.fusco.me kernel: br0: port 2(vnet5) entered disabled state
Apr 17 20:19:09 prod.fusco.me kernel: device vnet5 left promiscuous mode
Apr 17 20:19:09 prod.fusco.me kernel: br0: port 2(vnet5) entered disabled state
Apr 17 20:19:10 prod.fusco.me kernel: audit: type=1400 audit(1681777150.100:102): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvi>
Apr 17 20:19:12 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:19:12 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:19:13 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:19:14 prod.fusco.me kernel: audit: type=1400 audit(1681777154.436:103): apparmor="STATUS" operation="profile_load" profile="unconfined" name="libvirt>
Apr 17 20:19:14 prod.fusco.me kernel: audit: type=1400 audit(1681777154.568:104): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:19:14 prod.fusco.me kernel: audit: type=1400 audit(1681777154.684:105): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:19:14 prod.fusco.me kernel: audit: type=1400 audit(1681777154.800:106): apparmor="STATUS" operation="profile_replace" info="same as current profile, >
Apr 17 20:19:15 prod.fusco.me kernel: audit: type=1400 audit(1681777154.976:107): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:19:15 prod.fusco.me kernel: br0: port 2(vnet6) entered blocking state
Apr 17 20:19:15 prod.fusco.me kernel: br0: port 2(vnet6) entered disabled state
Apr 17 20:19:15 prod.fusco.me kernel: device vnet6 entered promiscuous mode
Apr 17 20:19:15 prod.fusco.me kernel: br0: port 2(vnet6) entered blocking state
Apr 17 20:19:15 prod.fusco.me kernel: br0: port 2(vnet6) entered listening state
Apr 17 20:19:15 prod.fusco.me kernel: audit: type=1400 audit(1681777155.180:108): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:19:30 prod.fusco.me kernel: br0: port 2(vnet6) entered learning state
Apr 17 20:19:45 prod.fusco.me kernel: br0: port 2(vnet6) entered forwarding state
Apr 17 20:19:45 prod.fusco.me kernel: br0: topology change detected, propagating
Apr 17 20:20:02 prod.fusco.me kernel: br0: port 2(vnet6) entered disabled state
Apr 17 20:20:02 prod.fusco.me kernel: device vnet6 left promiscuous mode
Apr 17 20:20:02 prod.fusco.me kernel: br0: port 2(vnet6) entered disabled state
Apr 17 20:20:03 prod.fusco.me kernel: audit: type=1400 audit(1681777203.273:109): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvi>
Apr 17 20:20:09 prod.fusco.me middlewared[7268]: libvirt: QEMU Driver error : Domain not found: no domain with matching name '12_casey'
Apr 17 20:20:10 prod.fusco.me kernel: audit: type=1400 audit(1681777210.958:110): apparmor="STATUS" operation="profile_load" profile="unconfined" name="libvirt>
Apr 17 20:20:11 prod.fusco.me kernel: audit: type=1400 audit(1681777211.078:111): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:20:11 prod.fusco.me kernel: audit: type=1400 audit(1681777211.194:112): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:20:11 prod.fusco.me kernel: audit: type=1400 audit(1681777211.306:113): apparmor="STATUS" operation="profile_replace" info="same as current profile, >
Apr 17 20:20:11 prod.fusco.me kernel: audit: type=1400 audit(1681777211.478:114): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:20:11 prod.fusco.me kernel: br0: port 2(vnet7) entered blocking state
Apr 17 20:20:11 prod.fusco.me kernel: br0: port 2(vnet7) entered disabled state
Apr 17 20:20:11 prod.fusco.me kernel: device vnet7 entered promiscuous mode
Apr 17 20:20:11 prod.fusco.me kernel: br0: port 2(vnet7) entered blocking state
Apr 17 20:20:11 prod.fusco.me kernel: br0: port 2(vnet7) entered listening state
Apr 17 20:20:11 prod.fusco.me kernel: audit: type=1400 audit(1681777211.698:115): apparmor="STATUS" operation="profile_replace" profile="unconfined" name="libv>
Apr 17 20:20:12 prod.fusco.me kernel: audit: type=1400 audit(1681777212.038:116): apparmor="DENIED" operation="capable" profile="libvirtd" pid=16394 comm="rpc->
Apr 17 20:20:21 prod.fusco.me kernel: br0: port 2(vnet7) entered disabled state
Apr 17 20:20:21 prod.fusco.me kernel: device vnet7 left promiscuous mode
Apr 17 20:20:21 prod.fusco.me kernel: br0: port 2(vnet7) entered disabled state
Apr 17 20:20:21 prod.fusco.me kernel: audit: type=1400 audit(1681777221.554:117): apparmor="STATUS" operation="profile_remove" profile="unconfined" name="libvi>
Apr 17 20:22:35 prod.fusco.me kernel: WARNING: Pool 'sadness' has encountered an uncorrectable I/O failure and has been suspended.
```

The first sign of a problem is at 20:22, and just thereafter is when I tried to remove the bad disk. After that, there's nothing in the log until 20:30, when the server started coming back up after it froze.
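To locate quiet windows like the one between the pool suspension at 20:22:35 and the reboot around 20:30, here's a minimal Python sketch that walks a syslog-style file and reports consecutive entries more than a given interval apart. It assumes the classic `Mon DD HH:MM:SS` prefix shown above (which carries no year, so one is supplied) and English month names; `find_gaps` is a hypothetical helper, not a TrueNAS or system tool.

```python
from datetime import datetime, timedelta


def find_gaps(lines, min_gap=timedelta(seconds=60), year=2023):
    """Yield (previous_line, next_line, gap) wherever two consecutive
    timestamped lines are more than min_gap apart.

    Lines that don't start with a 'Mon DD HH:MM:SS' timestamp are skipped.
    """
    prev_ts, prev_line = None, None
    for line in lines:
        try:
            # The first 15 characters hold the timestamp, e.g. "Apr 17 20:17:36".
            ts = datetime.strptime(f"{year} {line[:15]}", "%Y %b %d %H:%M:%S")
        except ValueError:
            continue
        if prev_ts is not None and ts - prev_ts > min_gap:
            yield prev_line, line, ts - prev_ts
        prev_ts, prev_line = ts, line


if __name__ == "__main__":
    import sys

    # Usage: python3 find_gaps.py /var/log/messages
    if len(sys.argv) > 1:
        with open(sys.argv[1], errors="replace") as fh:
            for prev, nxt, gap in find_gaps(fh, timedelta(minutes=2)):
                print(f"{gap} of silence:\n  {prev.rstrip()}\n  {nxt.rstrip()}")
```

A gap only shows that nothing reached the log; when the pool (or the disk holding the log) is hung, writes may simply never land, so silence is not proof that nothing happened.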

Any help is appreciated :)

Specs of my server are:

NewProd Server | SCALE 22.12 RC1 | Supermicro H12SSL-I | EPYC 7282 | 256GB DDR4-3200 | 2X LSI 9500-8e to 2X EMC 15-Bay Shelf | Intel X710-DA2 | 28x10TB Drives in 2 Way mirrors | 4x Samsung PM9A1 512GB 2-Way Mirrored SPECIAL | 2x Optane 905p 960GB Mirrored

The shelves are connected to the two HBAs in an "X" configuration, if that's helpful.
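For readers weighing the layout: 28 disks in 2-way mirrors means 14 mirror vdevs, or about 14 × 10 TB = 140 TB of raw usable space before ZFS overhead. Each mirror can lose one disk, but if any single data or special vdev loses all of its members, the pool cannot stay online. A minimal sketch of that rule follows; the vdev names and disk pairings are hypothetical, since the post doesn't say which disks share a mirror.

```python
def every_vdev_has_survivor(vdevs: dict[str, list[str]], failed: set[str]) -> bool:
    """Necessary condition for a pool of mirror vdevs to stay online:
    every data/special mirror keeps at least one member not in `failed`."""
    return all(any(disk not in failed for disk in members)
               for members in vdevs.values())


def raw_mirror_capacity_tb(disk_count: int, disk_tb: float, width: int = 2) -> float:
    """Capacity of `width`-way mirrors before ZFS overhead (leftover disks ignored)."""
    return (disk_count // width) * disk_tb


if __name__ == "__main__":
    # Hypothetical pairings for illustration only.
    layout = {
        "mirror-0": ["disk-a", "disk-b"],
        "mirror-1": ["disk-c", "disk-d"],
    }
    print(every_vdev_has_survivor(layout, {"disk-a"}))            # one side of mirror-0 lost
    print(every_vdev_has_survivor(layout, {"disk-a", "disk-b"}))  # both sides of mirror-0 lost
    print(raw_mirror_capacity_tb(28, 10))
```

Passing this check is necessary but not sufficient: a surviving disk that is itself returning errors (DEGRADED) can still leave its mirror unable to serve reads.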